Text mining to detect fraud

Fraud Detection in Python

Charlotte Werger

Data Scientist

Cleaning your text data

Must-dos when working with textual data:

Tokenize your text

Remove all stopwords

Lemmatize your words

Stem your words
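The four steps can be chained into one cleaning function. The sketch below is illustrative only. It uses just the Python standard library so it runs without NLTK data downloads. `STOPWORDS`, `LEMMAS` and `SUFFIXES` are tiny hypothetical stand-ins for NLTK's English stopword list, the WordNet lemmatizer and the Porter stemmer, and they do not reproduce those tools' output.

```python
import re

# Hypothetical, deliberately tiny stand-ins for NLTK's resources
STOPWORDS = {"the", "a", "an", "and", "of", "to", "is", "are", "on", "in"}
LEMMAS = {"were": "be", "was": "be", "mice": "mouse", "emails": "email"}
SUFFIXES = ("ing", "ed", "ly", "s")


def tokenize(text: str) -> list[str]:
    # Lowercase, replace every non-letter with a space, split on whitespace
    return re.sub(r"[^a-z]", " ", text.lower()).split()


def remove_stopwords(tokens: list[str]) -> list[str]:
    # Drop words that carry little meaning on their own
    return [t for t in tokens if t not in STOPWORDS]


def lemmatize(tokens: list[str]) -> list[str]:
    # Dictionary lookup: map a word to its base form, or keep it unchanged
    return [LEMMAS.get(t, t) for t in tokens]


def stem(tokens: list[str]) -> list[str]:
    # Crude rule: strip the first matching suffix if at least 3 letters remain
    out = []
    for t in tokens:
        for suffix in SUFFIXES:
            if t.endswith(suffix) and len(t) - len(suffix) >= 3:
                t = t[: -len(suffix)]
                break
        out.append(t)
    return out


def clean(text: str) -> list[str]:
    # Same order as the slides: tokenize, remove stopwords, lemmatize, stem
    return stem(lemmatize(remove_stopwords(tokenize(text))))


print(clean("The traders were quickly selling 500 shares of the stock!"))
# ['trader', 'be', 'quick', 'sell', 'share', 'stock']
```

Lemmatization relies on a lookup (here `were` becomes `be`, which no suffix rule could produce), while stemming only chops word endings, so it needs no dictionary but can yield stems that are not real words.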

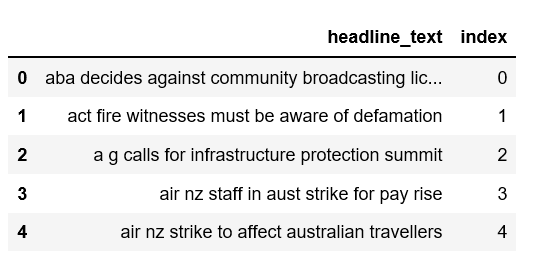

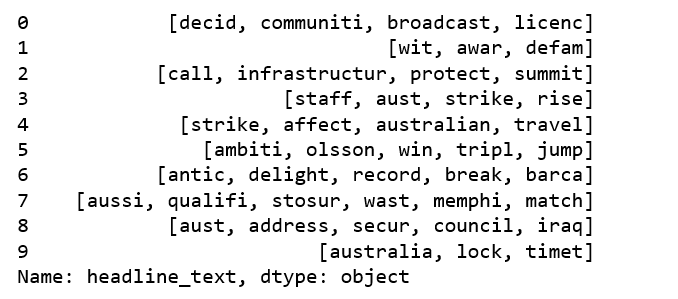

Go from this...

To this...

Data preprocessing part 1

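```python
import re
import string

from nltk import word_tokenize
from nltk.corpus import stopwords

# 1. Tokenization
# Take one email body from the DataFrame of emails (column "email_body")
text = df["email_body"].iloc[0]
# Strip trailing whitespace, lowercase, and replace every non-letter with a space
text = text.rstrip().lower()
text = re.sub(r'[^a-zA-Z]', ' ', text)
# Split the cleaned string into a list of word tokens
tokens = word_tokenize(text)

# 2. Remove all stopwords and punctuation
exclude = set(string.punctuation)
stop = set(stopwords.words('english'))
# Keep tokens that are neither stopwords nor numbers
stop_free = " ".join([word for word in tokens
                      if (word not in stop) and (not word.isdigit())])
# Iterating over a string yields characters, so this drops punctuation characters
punc_free = ''.join(char for char in stop_free
                    if char not in exclude)
```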
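To run these cleaning steps over every email in the DataFrame, one option is to wrap them in a function and pass it to pandas' `Series.apply`, which calls the function once per email. The sketch below assumes a toy two-email DataFrame and a tiny hypothetical stopword set in place of NLTK's list; `clean_body` is a hypothetical helper name, and only steps 1 and 2 are shown.

```python
import re

import pandas as pd

# Toy data: a hypothetical DataFrame with an "email_body" column
df = pd.DataFrame({"email_body": [
    "Call me at 5pm, please!",
    "The stock is down 20% today.",
]})

# Tiny hypothetical stand-in for stopwords.words('english')
STOP = {"me", "at", "the", "is"}


def clean_body(body: str) -> list[str]:
    # Step 1: lowercase, replace non-letters with spaces, split into tokens
    tokens = re.sub(r"[^a-z]", " ", body.lower()).split()
    # Step 2: drop stopwords
    return [t for t in tokens if t not in STOP]


# Series.apply calls clean_body once per email and returns a Series of token lists
df["tokens"] = df["email_body"].apply(clean_body)
print(df["tokens"].tolist())
# [['call', 'pm', 'please'], ['stock', 'down', 'today']]
```

Applying the function to a single column avoids the row-wise `df.apply(..., axis=1)`, which passes each whole row to the function and is usually slower.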

Data preprocessing part 2

```python
# 3. Lemmatize words
from nltk.stem.wordnet import WordNetLemmatizer

lemma = WordNetLemmatizer()
normalized = " ".join(lemma.lemmatize(word) for word in punc_free.split())

# 4. Stem words
from nltk.stem.porter import PorterStemmer

porter = PorterStemmer()
cleaned_text = " ".join(porter.stem(token) for token in normalized.split())
print(cleaned_text)
```

['philip','going','street','curious','hear','perspective','may','wish','offer','trading','floor','enron',
'stock','lower','joined','company','business','school','imagine','quite','happy','people','day',
'relate','somewhat','stock','around','fact','broke','day','ago','knowing','imagine','letting',
'event','get','much','taken','similar','problem','hope','everything','else','going','well','family',
'knee','surgery','yet','give','call','chance','later']

Let's practice!
