Introducing glmnet

Machine Learning with caret in R

Zach Mayer

Data Scientist at DataRobot and co-author of caret


- Extension of glm models with built-in variable selection

- Helps deal with collinearity and small sample sizes

- Two primary forms

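- Lasso regression: penalizes the sum of the absolute values of the coefficients (L1 penalty), which can shrink some coefficients to exactly zero

- Ridge regression: penalizes the sum of the squared coefficients (L2 penalty), which shrinks all coefficients toward zero without eliminating them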

- Attempts to find a parsimonious (i.e. simple) model

- Pairs well with random forest models
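The difference between the two penalties is easiest to see when the design matrix has orthonormal columns. In that case, both problems have closed-form solutions in terms of the ordinary least-squares estimate. The lasso soft-thresholds each coefficient, so small ones become exactly zero, while ridge rescales every coefficient toward zero. The base-R sketch below assumes a squared-error loss and simulated data, and its penalty scaling is chosen for simplicity rather than to match glmnet's. It illustrates the two penalties only; it is not how glmnet fits models on general data. The helpers `soft_threshold()` and `ridge_shrink()` are hypothetical.

```r
# Sketch, assuming an orthonormal design (crossprod(X) is the identity) and these objectives:
#   lasso: 0.5 * sum((y - X %*% beta)^2) + lambda * sum(abs(beta))
#   ridge: 0.5 * sum((y - X %*% beta)^2) + 0.5 * lambda * sum(beta^2)
# Under that assumption each coefficient can be solved separately from the OLS estimate b.

# Hypothetical helper: lasso solution = soft-thresholding of b at lambda
soft_threshold <- function(b, lambda) sign(b) * pmax(abs(b) - lambda, 0)

# Hypothetical helper: ridge solution = proportional shrinkage of b
ridge_shrink <- function(b, lambda) b / (1 + lambda)

set.seed(42)
n <- 100
# Orthonormal columns from a QR decomposition of random data
X <- qr.Q(qr(matrix(rnorm(n * 5), nrow = n, ncol = 5)))
beta_true <- c(3, -2, 0.5, 0, 0)          # last two predictors are pure noise
y <- drop(X %*% beta_true) + rnorm(n, sd = 0.3)

b_ols <- drop(crossprod(X, y))            # OLS estimate, because crossprod(X) is the identity
lambda <- 1
lasso <- soft_threshold(b_ols, lambda)
ridge <- ridge_shrink(b_ols, lambda)

# Lasso sets small coefficients to exactly zero; ridge halves every coefficient (lambda = 1)
print(round(cbind(ols = b_ols, lasso = lasso, ridge = ridge), 3))
```

The noise-only coefficients land inside the threshold and are dropped by the lasso. Ridge keeps all five coefficients, only smaller.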

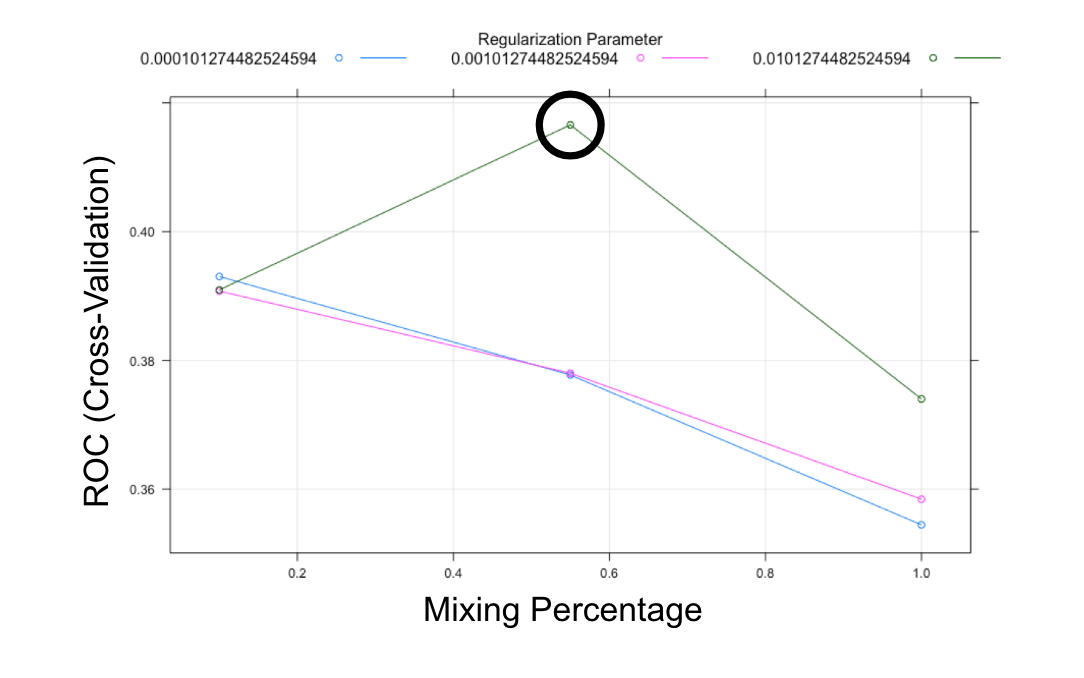

Tuning glmnet models

- Combination of lasso and ridge regression

- Can fit a mix of the two models

- alpha [0, 1]: pure ridge (0) to pure lasso (1)

- lambda (0, infinity): size of the penalty
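For reference, the glmnet documentation writes the penalized objective in roughly this form. Here $\ell$ is the negative log-likelihood contribution of observation $i$ (logistic loss for a two-class outcome), and $w_i$ are observation weights. The intercept $\beta_0$ is not penalized.

$$
\min_{\beta_0,\,\beta}\; \frac{1}{N}\sum_{i=1}^{N} w_i\,\ell\!\left(y_i,\; \beta_0 + x_i^{\top}\beta\right) \;+\; \lambda\left[\frac{1-\alpha}{2}\,\lVert\beta\rVert_2^2 \;+\; \alpha\,\lVert\beta\rVert_1\right]
$$

Setting $\alpha = 0$ leaves only the squared (ridge) term, and $\alpha = 1$ leaves only the absolute-value (lasso) term. Values in between mix the two. A larger $\lambda$ pulls the coefficients harder toward zero.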

Example: "don't overfit"

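```r
library(caret)

# Load data (stringsAsFactors = TRUE so a text outcome column y is read as a factor)
overfit <- read.csv("overfit.csv", stringsAsFactors = TRUE)

# Make a custom trainControl
myControl <- trainControl(
  method = "cv",
  number = 10,
  summaryFunction = twoClassSummary,
  classProbs = TRUE, # <- Super important!
  verboseIter = TRUE
)
```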
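`twoClassSummary` scores each resample by the area under the ROC curve (reported as `ROC`), plus sensitivity and specificity. AUC is computed from predicted class probabilities, not hard class labels, which is why `classProbs = TRUE` matters here. The base-R sketch below is not caret's implementation. It uses the rank (Mann-Whitney) formulation of AUC, a hypothetical helper `auc_rank()`, and made-up scores, only to show that the metric depends on how the probabilities rank the two classes.

```r
# Hypothetical helper: AUC via the rank (Mann-Whitney) formula.
# prob:   predicted probability of the positive class
# is_pos: logical vector, TRUE for observations in the positive class
auc_rank <- function(prob, is_pos) {
  r <- rank(prob)                  # average ranks, so a tied pair counts as 1/2
  n_pos <- sum(is_pos)
  n_neg <- sum(!is_pos)
  (sum(r[is_pos]) - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)
}

# Made-up scores: 5 of the 6 (positive, negative) pairs are ranked correctly
prob   <- c(0.9, 0.8, 0.4, 0.3, 0.2)
is_pos <- c(TRUE, FALSE, TRUE, FALSE, FALSE)
auc_rank(prob, is_pos)  # 5/6, about 0.833
```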

Try the defaults

```r
# Fit a model
set.seed(42)
model <- train(
  y ~ .,
  overfit,
  method = "glmnet",
  metric = "ROC",  # twoClassSummary reports ROC, not the default "Accuracy"
  trControl = myControl
)

# Plot results
plot(model)
```

- 3 values of alpha

- 3 values of lambda
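With default settings, caret tries a 3 x 3 grid. That gives 9 (alpha, lambda) combinations, each evaluated with the 10-fold cross-validation defined in `myControl`. The sketch below rebuilds a grid of that shape with base R's `expand.grid()`. The alpha values assume caret's default of `seq(0.1, 1, length = 3)`. In caret, the lambda values come from an initial glmnet fit to the data, so the three lambda values here are hypothetical placeholders. A data frame with `alpha` and `lambda` columns like this one is the shape `train()` accepts through its `tuneGrid` argument.

```r
# Alpha values: assumed to match caret's default seq(0.1, 1, length = 3)
alphas <- seq(0.1, 1, length.out = 3)
# Hypothetical placeholder lambdas; caret derives its own from an initial glmnet fit
lambdas <- c(0.001, 0.01, 0.1)

# Every combination of alpha and lambda: 3 x 3 = 9 candidate models
grid <- expand.grid(alpha = alphas, lambda = lambdas)
print(grid)

# With 10-fold CV, each candidate is evaluated on every held-out fold
n_folds <- 10
n_evals <- nrow(grid) * n_folds
n_evals  # 90
```

caret can reuse one glmnet fit across several lambda values for the same alpha. As a result, the number of model fits may be smaller than the number of evaluations.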

Plot the results

Let’s practice!
