Introduction to PySpark RDD

Big Data Fundamentals with PySpark

Upendra Devisetty

Science Analyst, CyVerse

What is an RDD?

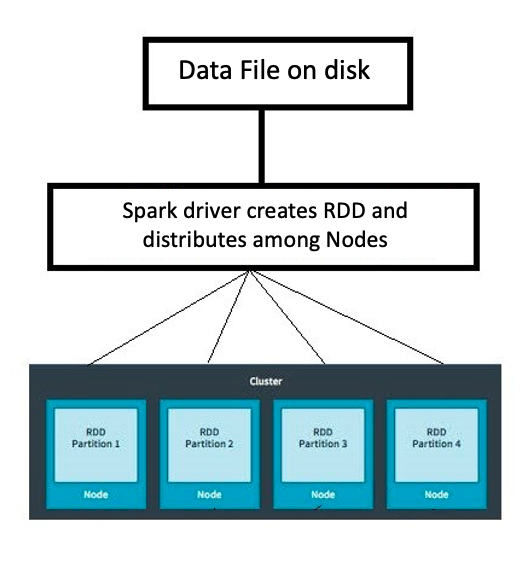

- RDD = Resilient Distributed Datasets

Decomposing RDDs

Resilient Distributed Datasets

Resilient: Ability to withstand failures

Distributed: Spanning across multiple machines

Datasets: Collection of partitioned data, e.g., arrays, tables, tuples, etc.

Creating RDDs: how to do it?

- Parallelizing an existing collection of objects
- External datasets:
  - Files in HDFS
  - Objects in an Amazon S3 bucket
  - Lines in a text file
- From existing RDDs

Parallelized collection (parallelizing)

parallelize() for creating RDDs from Python lists

numRDD = sc.parallelize([1,2,3,4])

helloRDD = sc.parallelize("Hello world")

type(helloRDD)

<class 'pyspark.rdd.RDD'>

From external datasets

textFile() for creating RDDs from external datasets

fileRDD = sc.textFile("README.md")

type(fileRDD)

<class 'pyspark.rdd.RDD'>

Understanding Partitioning in PySpark

A partition is a logical division of a large distributed dataset.

parallelize() method

numRDD = sc.parallelize(range(10), numSlices=6)

textFile() method

fileRDD = sc.textFile("README.md", minPartitions=6)

- The number of partitions in an RDD can be found using the getNumPartitions() method

Let's practice
