Regularized linear regression

Dimensionality Reduction in Python

Jeroen Boeye

Head of Machine Learning, Faktion

Linear model concept

Creating our own dataset

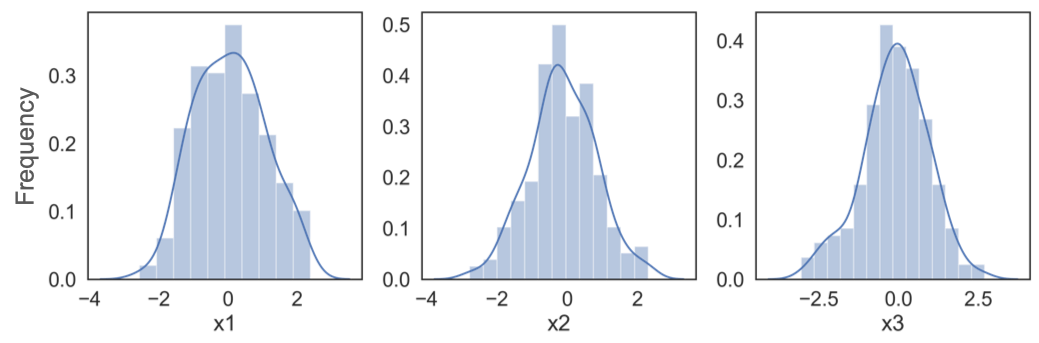

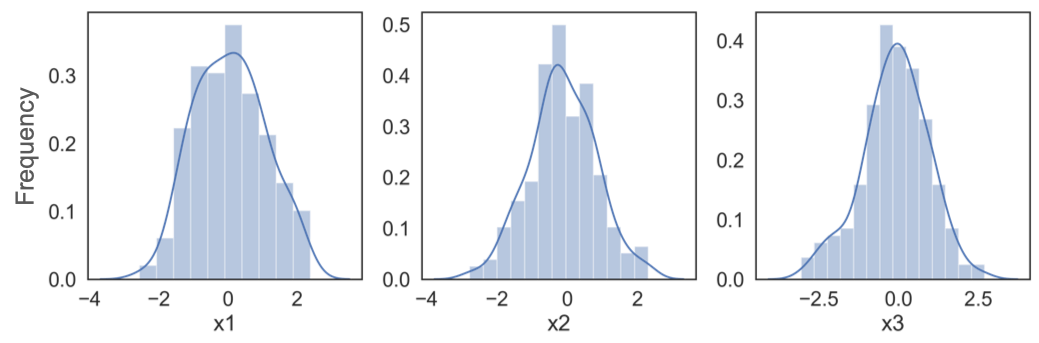

| x1 | x2 | x3 |
|---|---|---|
| 1.76 | -0.37 | -0.60 |
| 0.40 | -0.24 | -1.12 |
| 0.98 | 1.10 | 0.77 |
| ... | ... | ... |


Creating our own target feature:

$y = 20 + 5x_1 + 2x_2 + 0x_3 + \text{error}$
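A minimal sketch of how a dataset like this could be generated with NumPy and split with scikit-learn. The sample size (500), the noise scale (1.0), the random seed and the 30% test split are assumptions chosen for illustration; they are not the settings that produced the numbers on these slides.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Assumed settings (not from the slides): sample size, noise scale, seed
n_samples = 500
rng = np.random.default_rng(seed=0)

# Three independent standard-normal features x1, x2, x3
X = rng.standard_normal((n_samples, 3))

# Target: y = 20 + 5*x1 + 2*x2 + 0*x3 + error, with Gaussian error
error = rng.normal(loc=0.0, scale=1.0, size=n_samples)
y = 20 + 5 * X[:, 0] + 2 * X[:, 1] + 0 * X[:, 2] + error

# Hold out 30% of the rows to evaluate the fitted models
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)
print(X_train.shape, X_test.shape)  # (350, 3) (150, 3)
```

Because $x_3$ has a true coefficient of 0, any weight a model assigns to it is fitted noise, which is what makes this dataset useful for comparing plain and regularized regression.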

Linear regression in Python

```python
from sklearn.linear_model import LinearRegression

# Fit an ordinary least squares model on the training split
lr = LinearRegression()
lr.fit(X_train, y_train)

# Actual coefficients = [5 2 0]
print(lr.coef_)
# [ 4.95 1.83 -0.05]

# Actual intercept = 20
print(lr.intercept_)
# 19.8
```
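A fitted `LinearRegression` predicts the intercept plus a weighted sum of the features, using the learned `intercept_` and `coef_`. The self-contained sketch below regenerates data of the same form as the target above (sample size, noise level and seed are assumptions) and checks that this manual formula matches `predict`.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Synthetic data following y = 20 + 5*x1 + 2*x2 + 0*x3 + error
# (sample size, noise scale and seed are assumptions)
rng = np.random.default_rng(seed=0)
X = rng.standard_normal((500, 3))
y = 20 + X @ np.array([5.0, 2.0, 0.0]) + rng.normal(scale=1.0, size=500)

lr = LinearRegression().fit(X, y)
print(np.round(lr.coef_, 2), round(lr.intercept_, 2))

# A fitted linear model predicts intercept_ + X @ coef_
manual_pred = lr.intercept_ + X @ lr.coef_
print(np.allclose(manual_pred, lr.predict(X)))  # True
```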

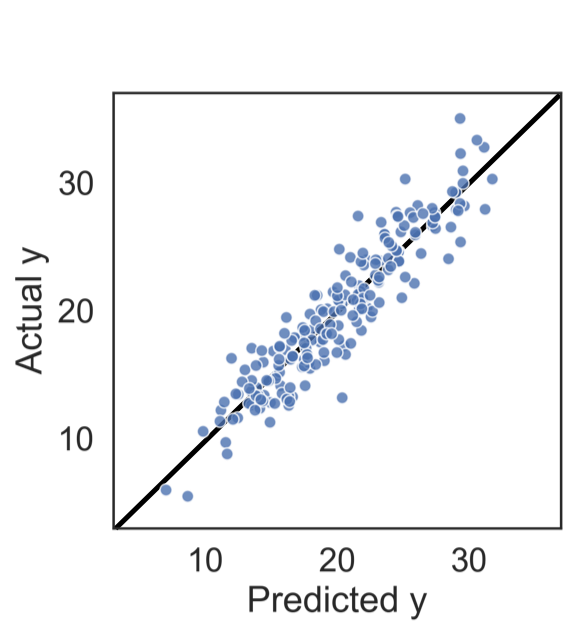

Linear regression in Python

```python
# Calculates R-squared
print(lr.score(X_test, y_test))
# 0.976
```
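For regressors, `score` returns the coefficient of determination $R^2 = 1 - SS_{res} / SS_{tot}$, where $SS_{res}$ is the sum of squared residuals and $SS_{tot}$ the total sum of squares around the mean of the true targets. A sketch that computes it by hand and compares it with `score` and `r2_score`; the synthetic data settings are assumptions.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split

# Synthetic data of the same form as above (settings are assumptions)
rng = np.random.default_rng(seed=0)
X = rng.standard_normal((500, 3))
y = 20 + X @ np.array([5.0, 2.0, 0.0]) + rng.normal(scale=1.0, size=500)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

lr = LinearRegression().fit(X_train, y_train)
y_pred = lr.predict(X_test)

# R^2 = 1 - SS_res / SS_tot, evaluated on the test split
ss_res = np.sum((y_test - y_pred) ** 2)
ss_tot = np.sum((y_test - y_test.mean()) ** 2)
r2_manual = 1 - ss_res / ss_tot

print(r2_manual, lr.score(X_test, y_test), r2_score(y_test, y_pred))
```

All three printed values agree; an $R^2$ close to 1 means the model explains most of the variance in the held-out targets.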


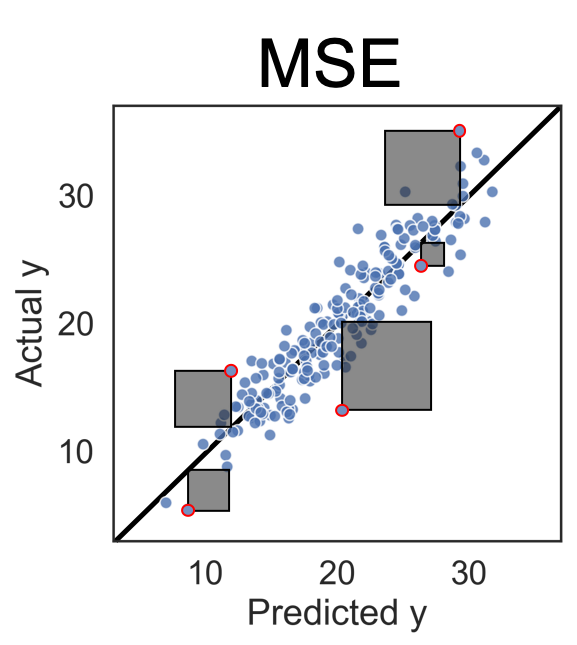

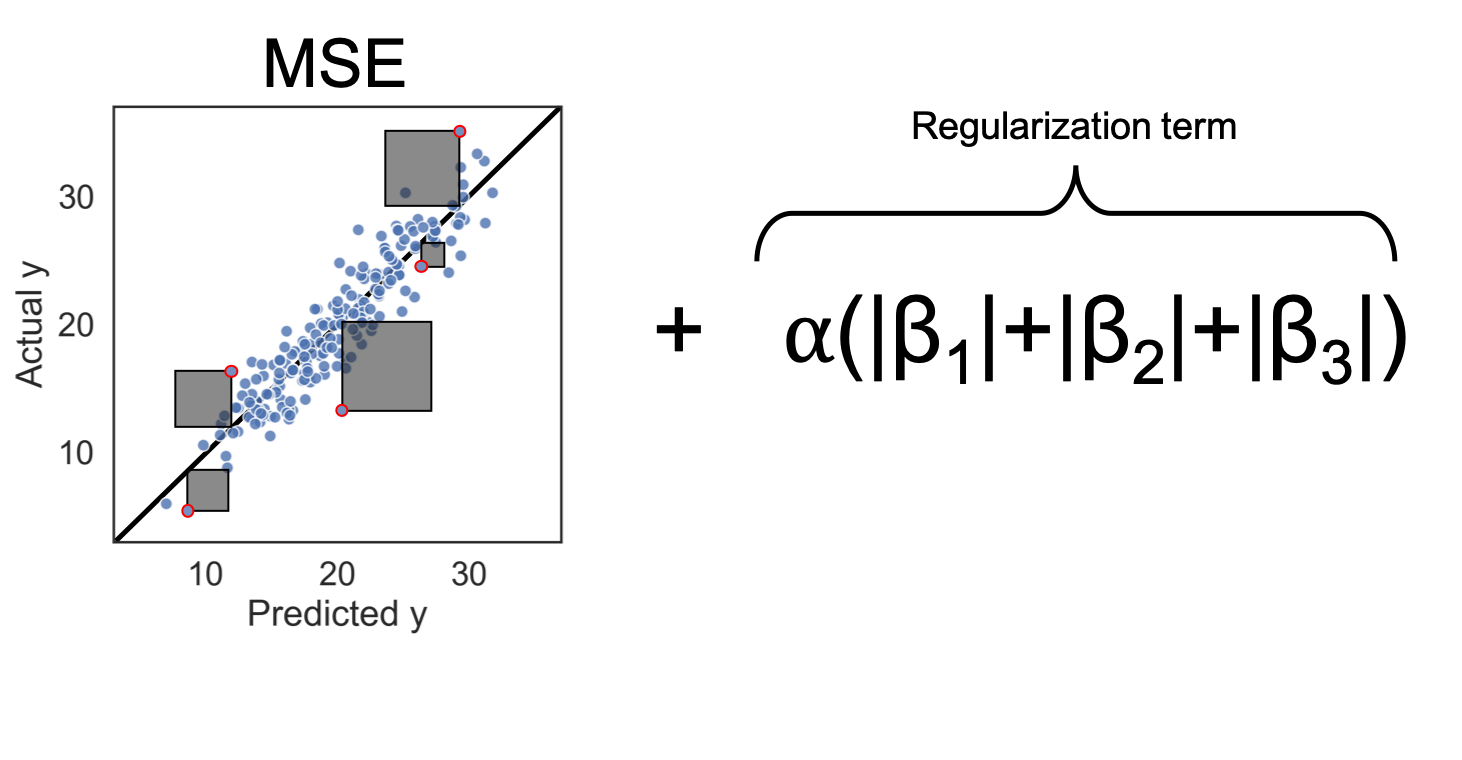

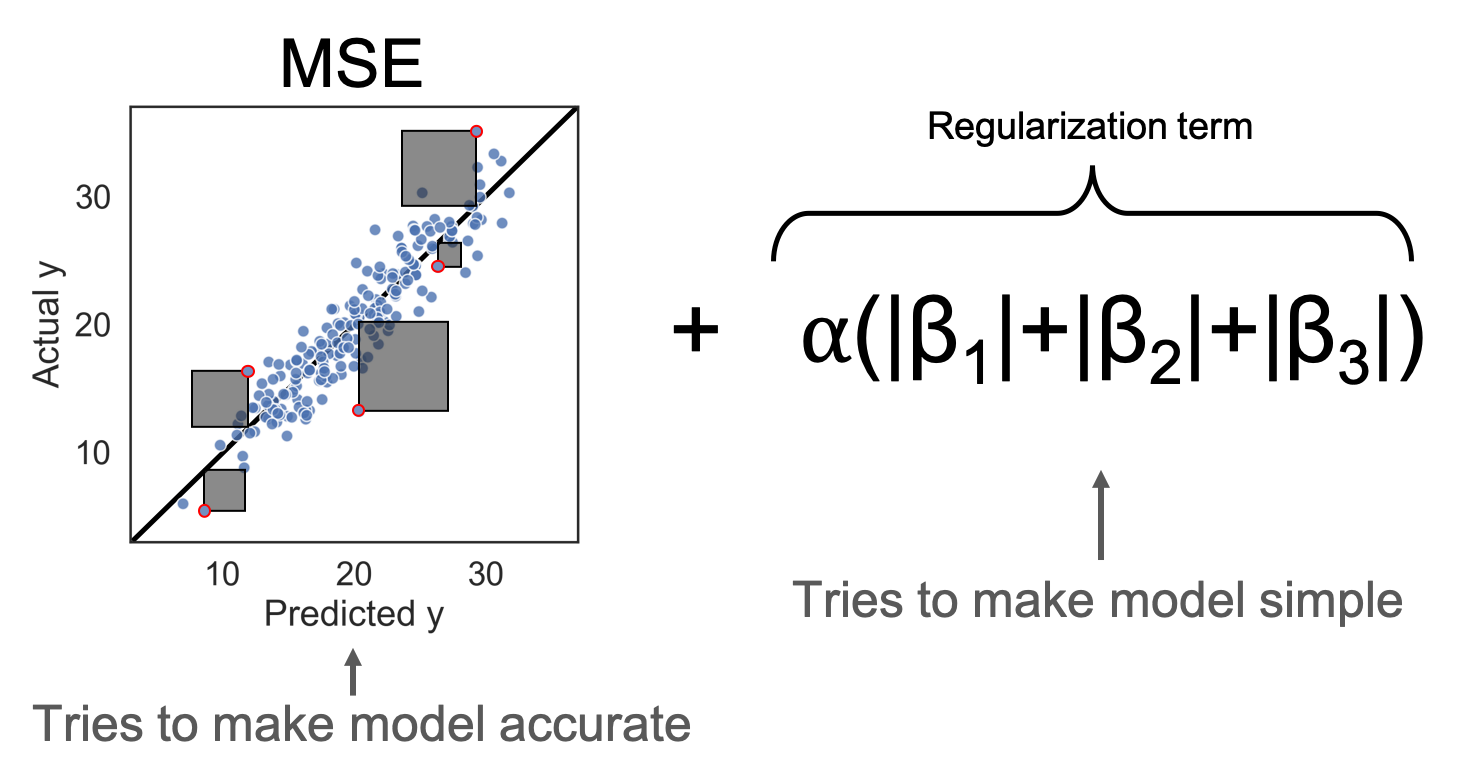

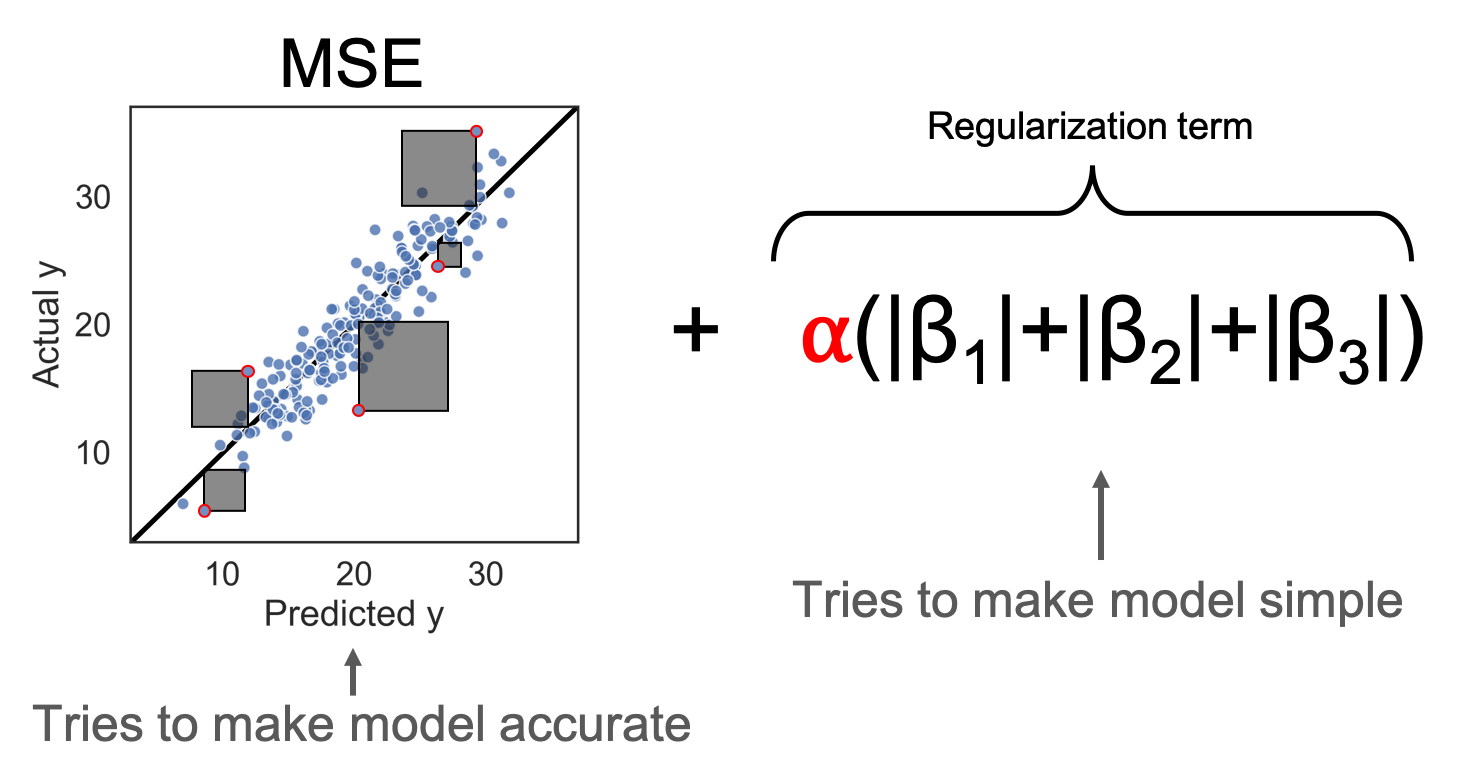

Loss function: Mean Squared Error
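For $n$ samples with targets $y_i$ and predictions $\hat{y}_i$, the mean squared error is $MSE = \frac{1}{n}\sum_{i=1}^{n}(y_i - \hat{y}_i)^2$, and ordinary least squares (`LinearRegression`) chooses the coefficients that minimize it on the training data. A minimal NumPy check against scikit-learn's `mean_squared_error`, using made-up toy values:

```python
import numpy as np
from sklearn.metrics import mean_squared_error

# Toy targets and predictions (made-up values for illustration)
y_true = np.array([3.0, -0.5, 2.0, 7.0])
y_pred = np.array([2.5, 0.0, 2.0, 8.0])

# MSE = mean of the squared residuals
mse_manual = np.mean((y_true - y_pred) ** 2)
print(mse_manual)  # 0.375
print(mean_squared_error(y_true, y_pred))  # 0.375
```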


Adding regularization
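Regularization adds a penalty on the coefficient sizes to the loss. As a reference point, scikit-learn documents the `Lasso` objective as $\frac{1}{2n}\lVert y - Xw \rVert_2^2 + \alpha \lVert w \rVert_1$, where $\alpha$ is the `alpha` parameter and the intercept is not penalized. The sketch below (the helper `lasso_objective` and the data settings are hypothetical, written for illustration) evaluates that loss for OLS and Lasso coefficients: OLS ignores the penalty, so under this loss it scores worse than the Lasso fit.

```python
import numpy as np
from sklearn.linear_model import Lasso, LinearRegression


def lasso_objective(X, y, coef, intercept, alpha):
    """Hypothetical helper: (1 / (2 * n)) * ||y - Xw - b||^2 + alpha * ||w||_1.

    The intercept b is left out of the penalty, as in scikit-learn's Lasso.
    """
    n_samples = X.shape[0]
    residuals = y - X @ coef - intercept
    data_term = np.sum(residuals ** 2) / (2 * n_samples)
    penalty = alpha * np.sum(np.abs(coef))
    return data_term + penalty


# Synthetic data of the same form as above (settings are assumptions)
rng = np.random.default_rng(seed=0)
X = rng.standard_normal((500, 3))
y = 20 + X @ np.array([5.0, 2.0, 0.0]) + rng.normal(scale=1.0, size=500)

alpha = 1.0
ols = LinearRegression().fit(X, y)
la = Lasso(alpha=alpha).fit(X, y)

# Lasso minimizes this loss; OLS only minimizes the squared-error part
ols_loss = lasso_objective(X, y, ols.coef_, ols.intercept_, alpha)
la_loss = lasso_objective(X, y, la.coef_, la.intercept_, alpha)
print(round(ols_loss, 3), round(la_loss, 3))
```

The trade-off is visible in the two terms: Lasso accepts a larger squared error in exchange for a smaller sum of absolute coefficients.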


Lasso regressor

```python
from sklearn.linear_model import Lasso

# Lasso with the default regularization strength (alpha=1.0)
la = Lasso()
la.fit(X_train, y_train)

# Actual coefficients = [5 2 0]
print(la.coef_)
# [4.07 0.59 0. ]

print(la.score(X_test, y_test))
# 0.861
```
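The default `alpha=1.0` shrinks the real coefficients heavily and lowers $R^2$. A sketch that sweeps `alpha` on synthetic data of the same form (data settings and the alpha grid are assumptions) shows the pattern: as `alpha` grows, the total absolute size of the coefficients shrinks and more of them become exactly zero.

```python
import numpy as np
from sklearn.linear_model import Lasso

# Synthetic data of the same form as above (settings are assumptions)
rng = np.random.default_rng(seed=0)
X = rng.standard_normal((500, 3))
y = 20 + X @ np.array([5.0, 2.0, 0.0]) + rng.normal(scale=1.0, size=500)

# Larger alpha means a stronger L1 penalty and more shrinkage toward zero
coefs = {}
for alpha in [0.01, 0.1, 1.0, 10.0]:
    la = Lasso(alpha=alpha).fit(X, y)
    coefs[alpha] = la.coef_
    n_zero = int(np.sum(la.coef_ == 0))
    print(f"alpha={alpha} coef={np.round(la.coef_, 2)} zeros={n_zero}")
```

At a small `alpha` the fit is close to ordinary least squares; at a large enough `alpha` every coefficient is set to zero and the model predicts only the intercept.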

Lasso regressor

```python
from sklearn.linear_model import Lasso

# A weaker penalty (alpha=0.05) shrinks the real coefficients less
la = Lasso(alpha=0.05)
la.fit(X_train, y_train)

# Actual coefficients = [5 2 0]
print(la.coef_)
# [ 4.91 1.76 0. ]

print(la.score(X_test, y_test))
# 0.974
```
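With `alpha=0.05`, Lasso keeps the real coefficients close to their true values while still setting the irrelevant $x_3$ coefficient to zero. Instead of tuning `alpha` by hand, scikit-learn's `LassoCV` can choose it by cross-validation. A sketch, assuming the same kind of synthetic data as above; the candidate grid and the 5 folds are arbitrary choices for illustration.

```python
import numpy as np
from sklearn.linear_model import LassoCV
from sklearn.model_selection import train_test_split

# Synthetic data of the same form as above (settings are assumptions)
rng = np.random.default_rng(seed=0)
X = rng.standard_normal((500, 3))
y = 20 + X @ np.array([5.0, 2.0, 0.0]) + rng.normal(scale=1.0, size=500)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

# Try a grid of candidate alphas with 5-fold cross-validation on the training split
alphas = [0.001, 0.01, 0.05, 0.1, 0.5, 1.0]
lcv = LassoCV(alphas=alphas, cv=5).fit(X_train, y_train)

print(lcv.alpha_)                 # alpha with the lowest mean CV error
print(np.round(lcv.coef_, 2))     # coefficients refit on all training data with that alpha
print(lcv.score(X_test, y_test))  # R-squared on the held-out test split
```

Choosing `alpha` on the training folds only keeps the test split untouched for the final $R^2$ estimate.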
