The curse of dimensionality

Dimensionality Reduction in Python

Jeroen Boeye

Head of Machine Learning, Faktion

From observation to pattern

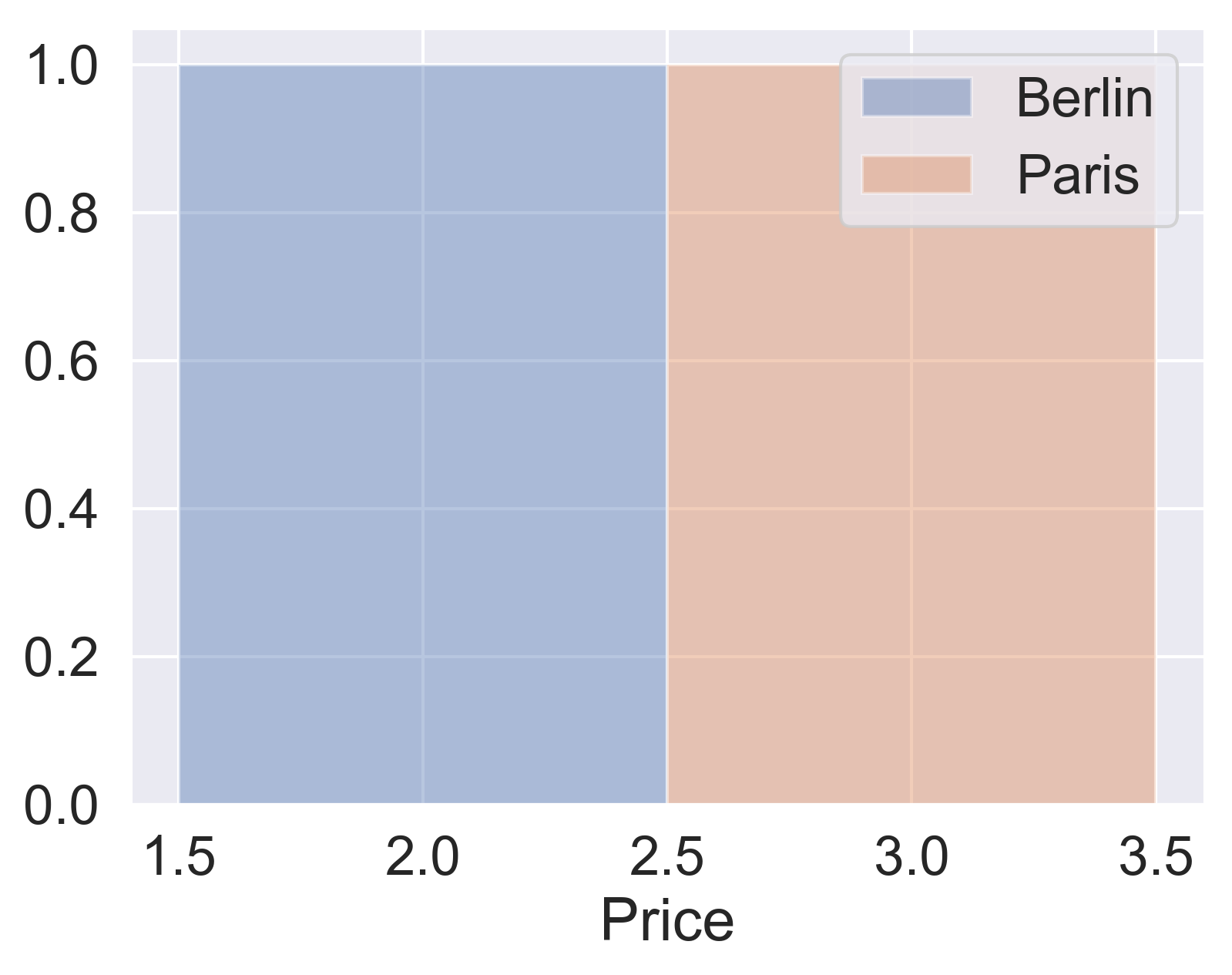

| City | Price |
|---|---|
| Berlin | 2 |
| Paris | 3 |

From observation to pattern

| City | Price |
|---|---|
| Berlin | 2.0 |
| Berlin | 3.1 |
| Berlin | 4.3 |
| Paris | 3.0 |
| Paris | 5.2 |
| ... | ... |

Building a city classifier - data split

Separate the feature we want to predict (City) from the features used to train the model.

y = house_df['City']

X = house_df.drop('City', axis=1)

Perform a 70% train and 30% test data split

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3)
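The split above can be tried end to end with a small stand-in dataset. This is a minimal sketch, not the course data: the synthetic `house_df`, its 100 rows, the price distributions, and the `random_state` argument are all assumptions added for illustration.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

# Hypothetical stand-in for house_df: 100 synthetic rows, not the course data
rng = np.random.default_rng(0)
house_df = pd.DataFrame({
    "City": np.repeat(["Berlin", "Paris"], 50),
    "Price": np.concatenate([
        rng.normal(3.0, 1.0, 50),  # assumed Berlin prices
        rng.normal(4.0, 1.0, 50),  # assumed Paris prices
    ]),
})

# Target column versus the remaining feature columns
y = house_df["City"]
X = house_df.drop("City", axis=1)

# random_state is an added assumption so the split is reproducible
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

# Roughly 70% of the rows land in the training set, 30% in the test set
print(X_train.shape, X_test.shape)
```

The rows are shuffled before splitting, and the index of `X_train` stays aligned with the index of `y_train`, so features and labels still match row by row.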

Building a city classifier - model fit

Create a Support Vector Machine classifier and fit it to the training data

from sklearn.svm import SVC

svc = SVC()

svc.fit(X_train, y_train)

Building a city classifier - predict

from sklearn.metrics import accuracy_score

print(accuracy_score(y_test, svc.predict(X_test)))

0.826

print(accuracy_score(y_train, svc.predict(X_train)))

0.832
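The two scores above are easiest to read side by side: a training accuracy far above the test accuracy would hint at overfitting, while similar values suggest the model generalizes about as well as it fits. A minimal sketch of that comparison follows, assuming a synthetic `house_df` (500 rows with made-up price distributions) in place of the course data; `train_acc`, `test_acc`, and `gap` are names introduced here for illustration.

```python
import numpy as np
import pandas as pd
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Hypothetical stand-in for house_df, not the course data
rng = np.random.default_rng(1)
n = 500
house_df = pd.DataFrame({
    "City": np.repeat(["Berlin", "Paris"], n // 2),
    "Price": np.concatenate([
        rng.normal(3.0, 1.0, n // 2),  # assumed Berlin prices
        rng.normal(5.0, 1.0, n // 2),  # assumed Paris prices
    ]),
})

X = house_df.drop("City", axis=1)
y = house_df["City"]
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=1
)

svc = SVC()
svc.fit(X_train, y_train)

# Accuracy on data the model has seen versus data it has not
train_acc = accuracy_score(y_train, svc.predict(X_train))
test_acc = accuracy_score(y_test, svc.predict(X_test))
gap = train_acc - test_acc

print(f"train={train_acc:.3f} test={test_acc:.3f} gap={gap:.3f}")
```

The exact numbers depend on the synthetic data and the random split, so they will not match the 0.826 and 0.832 shown above.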

Adding features

| City | Price | n_floors | n_bathroom | surface_m2 |
|---|---|---|---|---|
| Berlin | 2.0 | 1 | 1 | 190 |
| Berlin | 3.1 | 2 | 1 | 187 |
| Berlin | 4.3 | 2 | 2 | 240 |
| Paris | 3.0 | 2 | 1 | 170 |
| Paris | 5.2 | 2 | 2 | 290 |
| ... | ... | ... | ... | ... |
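One way to explore what happens as columns are added is to rerun the same train/test comparison with a growing number of features. The sketch below is an illustration under stated assumptions, not the course's experiment: the helper `train_test_accuracy` is hypothetical, the data is synthetic, and the extra columns are pure random noise, which differs from real columns such as `n_floors` or `surface_m2` that may carry signal.

```python
import numpy as np
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC


def train_test_accuracy(n_noise_features, n_rows=200, seed=0):
    """Fit an SVC on one informative feature plus pure-noise features.

    Hypothetical helper: returns (train accuracy, test accuracy).
    """
    rng = np.random.default_rng(seed)
    y = np.repeat(["Berlin", "Paris"], n_rows // 2)

    # One informative column: assumed price level per city plus noise
    price = np.where(y == "Berlin", 3.0, 5.0) + rng.normal(0.0, 1.0, n_rows)

    # Extra columns that carry no information about the city
    noise = rng.normal(0.0, 1.0, (n_rows, n_noise_features))
    X = np.column_stack([price, noise])

    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.3, random_state=seed
    )
    svc = SVC().fit(X_train, y_train)
    return (
        accuracy_score(y_train, svc.predict(X_train)),
        accuracy_score(y_test, svc.predict(X_test)),
    )


# Same number of rows each time, increasing number of columns
for k in (0, 10, 100):
    train_acc, test_acc = train_test_accuracy(k)
    print(f"{k:>3} noise features: train={train_acc:.3f} test={test_acc:.3f}")
```

Keeping the number of rows fixed while the number of columns grows is the point of the exercise: any widening difference between the two printed accuracies is a sign that the model is fitting the training rows rather than a general pattern.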

Let's practice!
