Tree-based feature selection

Dimensionality Reduction in Python

Jeroen Boeye

Head of Machine Learning, Faktion

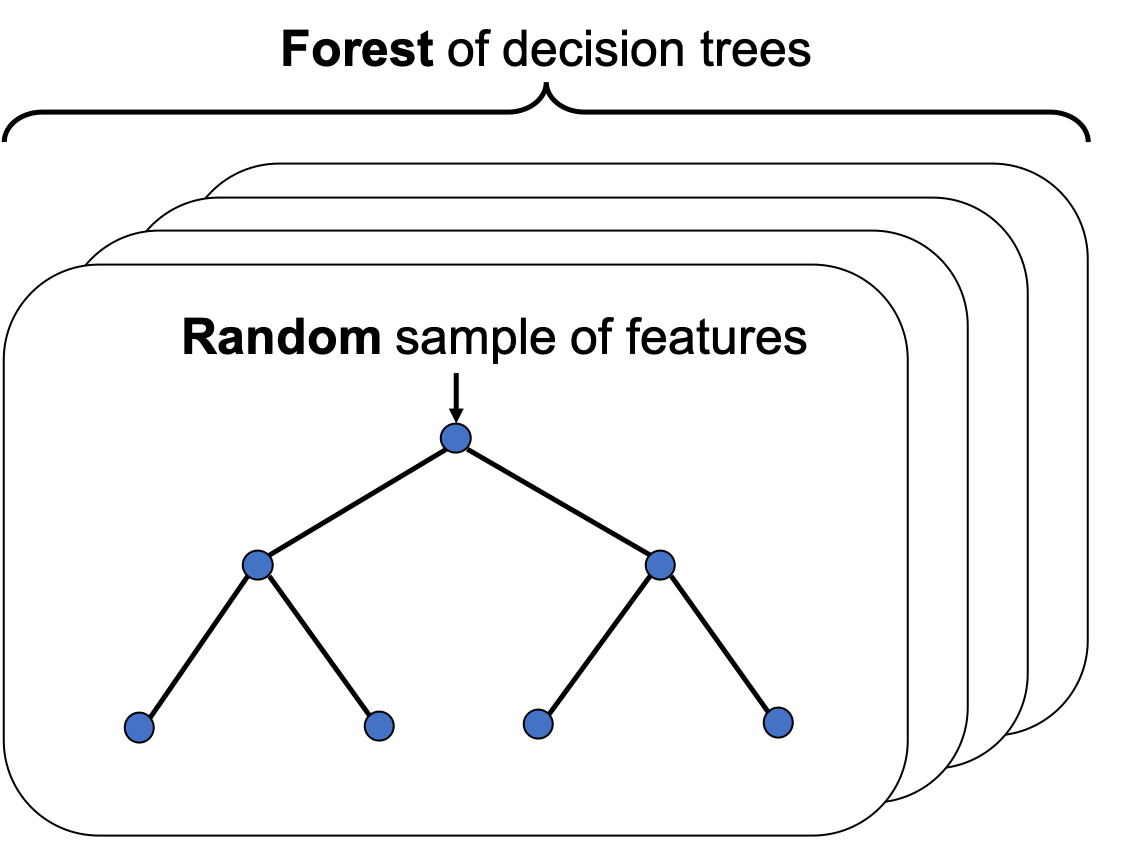

Random forest classifier

from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score

# Fit a random forest on the training set and score it on the test set
rf = RandomForestClassifier()
rf.fit(X_train, y_train)
print(accuracy_score(y_test, rf.predict(X_test)))

0.99
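The course data (X_train, y_train and so on) is not reproduced here. The sketch below runs the same fit-and-score steps on self-contained data. It assumes synthetic data from scikit-learn's make_classification in place of the original dataset. The sample count, feature count and random_state values are arbitrary choices for illustration, so the score will not match the 0.99 above.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in data (assumption): 500 samples, 20 features,
# of which only 5 carry information about the class.
X, y = make_classification(n_samples=500, n_features=20, n_informative=5,
                           n_redundant=0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0)

# A fixed random_state makes the forest reproducible between runs
rf = RandomForestClassifier(n_estimators=100, random_state=0)
rf.fit(X_train, y_train)

test_accuracy = accuracy_score(y_test, rf.predict(X_test))
print(f"Test accuracy: {test_accuracy:.2f}")
```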

Feature importance values

rf = RandomForestClassifier()
rf.fit(X_train, y_train)

# One importance value per feature column
print(rf.feature_importances_)

array([0. , 0. , 0. , 0. , 0. , 0. , 0. , 0.04, 0. , 0.01, 0.01,
       0. , 0. , 0. , 0. , 0.01, 0.01, 0. , 0. , 0. , 0. , 0.05,
       ...
       0. , 0.14, 0. , 0. , 0. , 0.06, 0. , 0. , 0. , 0. , 0. ,
       0. , 0.07, 0. , 0. , 0.01, 0. ])

print(sum(rf.feature_importances_))

1.0
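In scikit-learn, feature_importances_ holds impurity-based importances (the mean decrease in impurity), normalised so the values sum to 1. That is why the sum prints 1.0. The sketch below checks those properties and ranks the columns. It again assumes synthetic data from make_classification. With shuffle=False the informative features occupy the first columns, so you would expect them near the top of the ranking, though the exact order is not guaranteed.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic stand-in data (assumption): with shuffle=False the 5 informative
# features are columns 0-4 and the remaining 15 columns are pure noise.
X, y = make_classification(n_samples=500, n_features=20, n_informative=5,
                           n_redundant=0, shuffle=False, random_state=0)
rf = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)
importances = rf.feature_importances_

print(importances.shape)                   # (20,): one value per column
print(np.isclose(importances.sum(), 1.0))  # True: values are normalised
print(bool((importances >= 0).all()))      # True: no negative importances

# Column indices ordered from most to least important
ranking = np.argsort(importances)[::-1]
print(ranking[:5])
```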

Feature importance as a feature selector

mask = rf.feature_importances_ > 0.1

print(mask)

array([False, False, ..., True, False])

X_reduced = X.loc[:, mask]

print(X_reduced.columns)

Index(['chestheight', 'neckcircumference', 'neckcircumferencebase',
       'shouldercircumference'], dtype='object')
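The threshold-and-mask steps can be sketched end to end on a small pandas DataFrame. The data and the column names (feature_0, feature_1, ...) are hypothetical stand-ins. The 0.1 cut-off is the same arbitrary value used on the slide. Because the importances sum to 1, at least one of the 10 columns must reach 0.1, so the mask cannot come out entirely False in practice.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Hypothetical data: 10 columns, of which the first 3 are informative
X_arr, y = make_classification(n_samples=500, n_features=10, n_informative=3,
                               n_redundant=0, shuffle=False, random_state=0)
X = pd.DataFrame(X_arr, columns=[f"feature_{i}" for i in range(X_arr.shape[1])])

rf = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)

# Keep only the columns whose importance exceeds the chosen threshold
threshold = 0.1
mask = rf.feature_importances_ > threshold
X_reduced = X.loc[:, mask]
print(X_reduced.columns.tolist())
```

scikit-learn also offers sklearn.feature_selection.SelectFromModel, which applies a threshold to a fitted model's importances in the same spirit. The manual mask keeps the logic visible.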

RFE with random forests

from sklearn.feature_selection import RFE

# With the default step=1, RFE drops one feature per round until 6 remain
rfe = RFE(estimator=RandomForestClassifier(),
          n_features_to_select=6, verbose=1)
rfe.fit(X_train, y_train)

Fitting estimator with 94 features.

Fitting estimator with 93 features.

...

Fitting estimator with 8 features.

Fitting estimator with 7 features.

print(accuracy_score(y_test, rfe.predict(X_test)))

0.99
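The loop RFE runs can be sketched from scratch. Each round refits the model on the surviving features and discards the least important ones, until the requested number is left. The function recursive_feature_elimination below is a hypothetical, simplified helper for illustration, not scikit-learn's implementation. It also assumes synthetic data and a smaller forest (n_estimators=50) to keep it fast.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier


def recursive_feature_elimination(X, y, n_features_to_select, step=1,
                                  random_state=0):
    """Hypothetical helper: a simplified, from-scratch version of the RFE idea.

    Returns the sorted column indices of the features that survive.
    """
    remaining = list(range(X.shape[1]))
    while len(remaining) > n_features_to_select:
        # Refit the model on the features that are still in play
        model = RandomForestClassifier(n_estimators=50,
                                       random_state=random_state)
        model.fit(X[:, remaining], y)
        # Drop `step` features, but never go below the requested number
        n_drop = min(step, len(remaining) - n_features_to_select)
        # Positions (within `remaining`) of the least important features
        weakest = set(np.argsort(model.feature_importances_)[:n_drop].tolist())
        remaining = [col for pos, col in enumerate(remaining)
                     if pos not in weakest]
    return sorted(remaining)


# Synthetic stand-in data (assumption): 12 columns, the first 3 informative
X, y = make_classification(n_samples=300, n_features=12, n_informative=3,
                           n_redundant=0, shuffle=False, random_state=0)
selected = recursive_feature_elimination(X, y, n_features_to_select=3, step=2)
print(selected)
```

Unlike this sketch, scikit-learn's RFE also exposes support_ and ranking_ and refits the estimator on the final selection. That refitted estimator is what rfe.predict uses.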

RFE with random forests: removing 10 features per step

from sklearn.feature_selection import RFE

# step=10 removes 10 features per round instead of 1
rfe = RFE(estimator=RandomForestClassifier(),
          n_features_to_select=6, step=10, verbose=1)
rfe.fit(X_train, y_train)

Fitting estimator with 94 features.

Fitting estimator with 84 features.

...

Fitting estimator with 24 features.

Fitting estimator with 14 features.

print(X.columns[rfe.support_])

Index(['biacromialbreadth', 'handbreadth', 'handcircumference',
       'neckcircumference', 'neckcircumferencebase', 'shouldercircumference'],
      dtype='object')
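The two verbose logs differ only in how many features each round removes. The hypothetical helper rfe_fit_schedule below reproduces the logged feature counts. It assumes an integer step and that RFE never removes more features than needed to reach n_features_to_select. Both assumptions are consistent with the logs above (94 down to 7 for step=1, and 94 down to 14 for step=10).

```python
def rfe_fit_schedule(n_features, n_features_to_select, step):
    """Hypothetical helper: feature counts at which RFE refits (integer step)."""
    counts = []
    n = n_features
    while n > n_features_to_select:
        counts.append(n)
        # Never remove more features than needed to reach the target
        n -= min(step, n - n_features_to_select)
    return counts


schedule_step10 = rfe_fit_schedule(94, 6, step=10)
schedule_step1 = rfe_fit_schedule(94, 6, step=1)
print(schedule_step10)                         # [94, 84, 74, 64, 54, 44, 34, 24, 14]
print(len(schedule_step1), len(schedule_step10))  # 88 9
```

With 94 features and a target of 6, step=1 means 88 elimination rounds, against 9 for step=10. The trade-off is coarser elimination: with step=10, ten features are discarded on the basis of a single fit.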

Let's practice!
