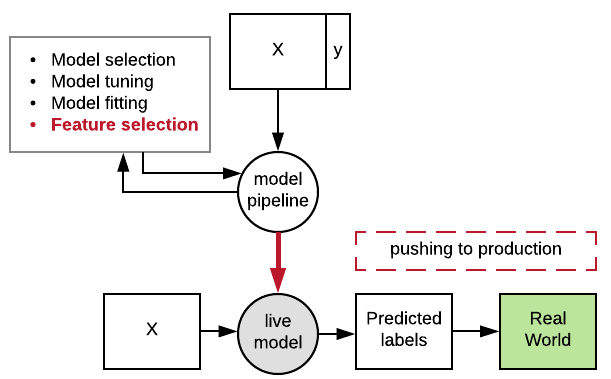

Model deployment

Designing Machine Learning Workflows in Python

Dr. Chris Anagnostopoulos

Honorary Associate Professor

Serializing your model

Store a classifier to file:

import pickle
from sklearn.ensemble import RandomForestClassifier

clf = RandomForestClassifier().fit(X_train, y_train)

with open('model.pkl', 'wb') as file:
    pickle.dump(clf, file=file)

Load it again from file:

with open('model.pkl', 'rb') as file:
    clf2 = pickle.load(file)
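One way to check that the round trip worked is to compare predictions before and after loading. The sketch below is illustrative only: it assumes a synthetic dataset from `make_classification` in place of the course's `X_train` and `y_train`, and it writes to a temporary directory rather than to `model.pkl` in the working directory.

```python
import os
import pickle
import tempfile

import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic stand-in for the course data (an assumption for this sketch).
X_train, y_train = make_classification(n_samples=200, n_features=8, random_state=0)

clf = RandomForestClassifier(n_estimators=10, random_state=0).fit(X_train, y_train)

# Write the fitted classifier to a temporary file, then read it back.
with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, 'model.pkl')
    with open(path, 'wb') as file:
        pickle.dump(clf, file=file)
    with open(path, 'rb') as file:
        clf2 = pickle.load(file)

# The restored model is a separate object that should predict identically.
same_predictions = np.array_equal(clf.predict(X_train), clf2.predict(X_train))
print(same_predictions)
```

Unpickling can execute arbitrary code, so only load pickle files from sources you trust, and ideally load them with the same scikit-learn version that wrote them.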

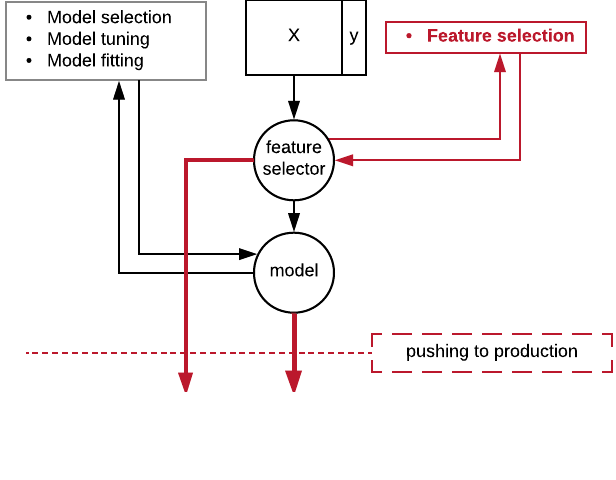

Serializing your pipeline

Development environment:

vt = SelectKBest(f_classif).fit(X_train, y_train)
clf = RandomForestClassifier().fit(vt.transform(X_train), y_train)

# Each fitted step has to be saved to its own file
with open('vt.pkl', 'wb') as file:
    pickle.dump(vt, file)

with open('clf.pkl', 'wb') as file:
    pickle.dump(clf, file)

Serializing your pipeline

Production environment:

with open('vt.pkl', 'rb') as file:
    vt = pickle.load(file)

with open('clf.pkl', 'rb') as file:
    clf = pickle.load(file)

clf.predict(vt.transform(X_new))

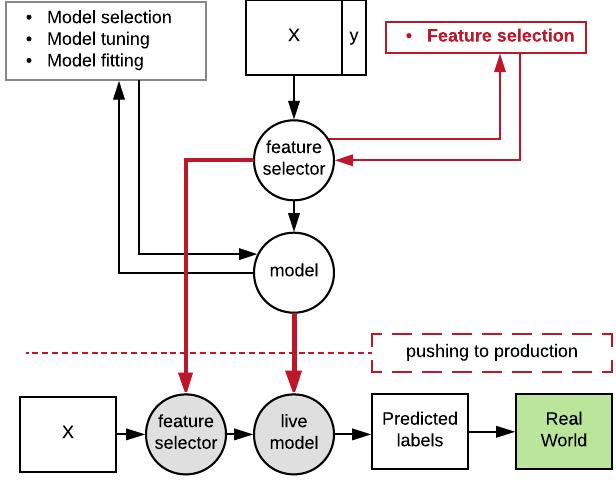

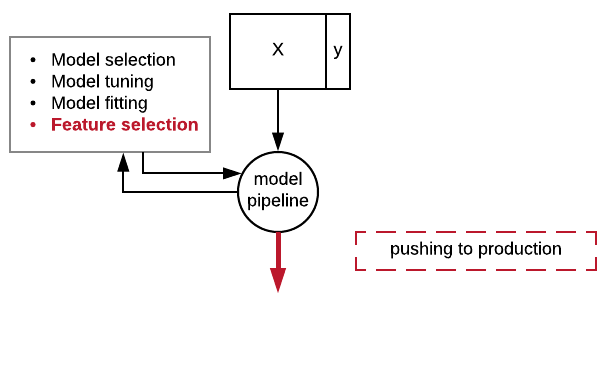

Serializing your pipeline

Development environment:

pipe = Pipeline([
    ('fs', SelectKBest(f_classif)),
    ('clf', RandomForestClassifier())
])
params = dict(fs__k=[2, 3, 4], clf__max_depth=[5, 10, 20])
gs = GridSearchCV(pipe, params)
gs = gs.fit(X_train, y_train)

with open('pipe.pkl', 'wb') as file:
    pickle.dump(gs, file)

Serializing your pipeline

Production environment:

with open('pipe.pkl', 'rb') as file:
    gs = pickle.load(file)

gs.predict(X_test)
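The pickled `GridSearchCV` object carries the full search results along with the winning pipeline. If production only needs predictions, one option is to serialize just `best_estimator_`. The following is a minimal sketch under assumptions not in the slides: synthetic data from `make_classification`, a smaller parameter grid, `cv=3`, and an in-memory round trip with `pickle.dumps` and `pickle.loads` instead of a file.

```python
import pickle

import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline

# Synthetic stand-in for the course data (an assumption for this sketch).
X, y = make_classification(n_samples=200, n_features=8, random_state=0)

pipe = Pipeline([
    ('fs', SelectKBest(f_classif)),
    ('clf', RandomForestClassifier(n_estimators=10, random_state=0)),
])
params = dict(fs__k=[2, 3, 4], clf__max_depth=[5, 10])
gs = GridSearchCV(pipe, params, cv=3).fit(X, y)

# With the default refit=True, best_estimator_ is the winning pipeline
# refit on all of the data passed to fit().
best_pipe = gs.best_estimator_

# Round trip in memory; a file works the same way with dump/load.
restored = pickle.loads(pickle.dumps(best_pipe))

# gs.predict delegates to best_estimator_, so the two should agree.
matches = np.array_equal(gs.predict(X), restored.predict(X))
print(gs.best_params_, matches)
```

Keeping the whole `gs` object, as the slides do, preserves `cv_results_` for later inspection; keeping only the best pipeline gives a smaller artifact.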

Custom feature transformations

   checking_status  duration  ...  own_telephone  foreign_worker
0                1         6  ...              1               1
1                0        48  ...              0               1

# Positional slicing below assumes X is a NumPy array, not a DataFrame
def negate_second_column(X):
    Z = X.copy()
    Z[:, 1] = -Z[:, 1]
    return Z

pipe = Pipeline([('ft', FunctionTransformer(negate_second_column)),
                 ('clf', RandomForestClassifier())])
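To see what the custom step does on its own, the transformer can be applied outside the pipeline. This is a small sketch, assuming a hypothetical two-row NumPy array loosely modeled on the table above (three columns only, values chosen for illustration).

```python
import numpy as np
from sklearn.preprocessing import FunctionTransformer


def negate_second_column(X):
    # Work on a copy so the caller's array is left untouched.
    Z = X.copy()
    Z[:, 1] = -Z[:, 1]
    return Z


# Hypothetical sample: columns are checking_status, duration, own_telephone.
X = np.array([[1.0, 6.0, 1.0],
              [0.0, 48.0, 0.0]])

ft = FunctionTransformer(negate_second_column)
X_out = ft.fit_transform(X)
print(X_out)
```

When a pipeline containing this step is pickled, the function is stored by reference (module and name) rather than by value, so the production environment needs to be able to import `negate_second_column` from the same module path. For the same reason a `lambda` cannot be used here if the pipeline is to be pickled.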

Production ready!
