Data fusion

Designing Machine Learning Workflows in Python

Dr. Chris Anagnostopoulos

Honorary Associate Professor

Computers, ports, and protocols

The LANL cyber dataset

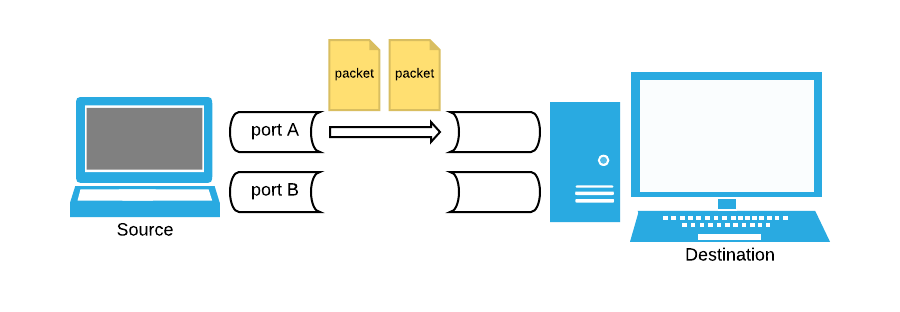

flows: Flows are sessions of continuous data transfer between a port on a source computer and a port on a destination computer, following a certain protocol.

flows.iloc[1]

time 471692

duration 0

source_computer C5808

source_port N2414

destination_computer C26871

destination_port N19148

protocol 6

packet_count 1

byte_count 60

Source: https://csr.lanl.gov/data/cyber1/

The LANL cyber dataset

attacks: Information about attacks that the security team itself carried out during a test.

attacks.head()

     time  user@domain  source_computer  destination_computer
0  151036    U748@DOM1           C17693                  C305
1  151648    U748@DOM1           C17693                  C728
2  151993   U6115@DOM1           C17693                 C1173
3  153792    U636@DOM1           C17693                  C294
4  155219    U748@DOM1           C17693                 C5693

How can we construct labeled examples from this data?


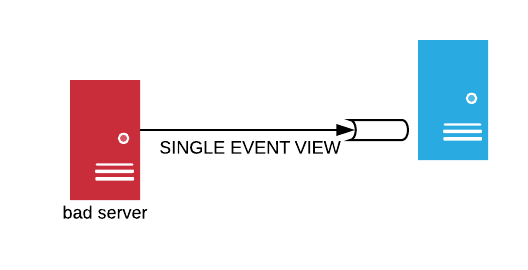

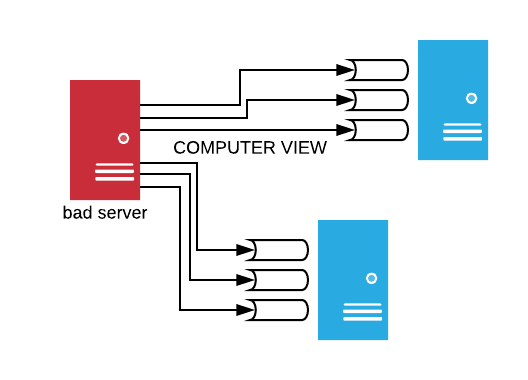

Labeling events versus labeling computers

A single event cannot be easily labeled.

But an entire computer is either infected or not.

Group and featurize

Unit of analysis = destination_computer

flows_grouped = flows.groupby('destination_computer')

list(flows_grouped)[0]

('C10047',

time duration ... packet_count byte_count

2791 471694 0 ... 12 6988

2792 471694 0 ... 1 193

...

2846 471694 38 ... 157 84120
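To make the grouping step concrete, here is a minimal sketch on a toy table. The name `toy_flows` and all of its values are made up for illustration; only the column names follow the flows data shown above.

```python
import pandas as pd

# Toy stand-in for the flows table; the values are invented for illustration.
toy_flows = pd.DataFrame({
    'destination_computer': ['C1', 'C2', 'C1', 'C3', 'C2', 'C1'],
    'destination_port': ['N1', 'N2', 'N3', 'N1', 'N2', 'N1'],
    'packet_count': [12, 1, 157, 3, 5, 7],
})

# Iterating over a GroupBy yields (key, sub-DataFrame) pairs, one per computer.
group_sizes = {}
for computer, group in toy_flows.groupby('destination_computer'):
    group_sizes[computer] = len(group)

# get_group fetches the rows for one computer without listing every group.
c1_rows = toy_flows.groupby('destination_computer').get_group('C1')
```

On the full dataset, `get_group` is likely a cheaper way to inspect one computer than `list(flows_grouped)[0]`, which builds every group first.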

Group and featurize

From one DataFrame per computer, to one feature vector per computer.

import numpy as np

def featurize(df):
    # df holds all flows received by a single destination computer
    return {
        'unique_ports': len(set(df['destination_port'])),
        'average_packet': np.mean(df['packet_count']),
        'average_duration': np.mean(df['duration'])
    }
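As a quick check of what `featurize` returns, the sketch below applies it to a single hand-made group. The DataFrame `toy_group` and its values are hypothetical; the function body is the one from the slide.

```python
import numpy as np
import pandas as pd

def featurize(df):
    # Summarize all flows of one computer as a flat dict of features.
    return {
        'unique_ports': len(set(df['destination_port'])),
        'average_packet': np.mean(df['packet_count']),
        'average_duration': np.mean(df['duration']),
    }

# Hypothetical flows received by one computer (values invented).
toy_group = pd.DataFrame({
    'destination_port': ['N1', 'N2', 'N1', 'N3'],
    'packet_count': [1, 3, 5, 7],
    'duration': [0, 0, 10, 30],
})

features = featurize(toy_group)
# Three distinct ports, mean packet count 4.0, mean duration 10.0.
```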

Group and featurize

out = flows.groupby('destination_computer').apply(featurize)

X = pd.DataFrame(list(out), index=out.index)

X.head()

average_duration ... unique_ports

destination_computer ...

C10047 7.538462 ... 13

C10054 0.000000 ... 1

C10131 55.000000 ... 1

...

[5 rows x 3 columns]
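The same three features can probably also be computed without a custom function, using pandas named aggregation. This is an alternative sketch, not the approach used on the slides; `toy_flows` and `X_alt` are hypothetical names and the values are invented.

```python
import pandas as pd

# Toy stand-in for the flows table; the values are invented for illustration.
toy_flows = pd.DataFrame({
    'destination_computer': ['C1', 'C2', 'C1', 'C2', 'C1'],
    'destination_port': ['N1', 'N2', 'N3', 'N2', 'N1'],
    'packet_count': [2, 1, 4, 3, 6],
    'duration': [0, 5, 10, 15, 20],
})

# Named aggregation: output_column=(input_column, aggregation).
X_alt = toy_flows.groupby('destination_computer').agg(
    unique_ports=('destination_port', 'nunique'),
    average_packet=('packet_count', 'mean'),
    average_duration=('duration', 'mean'),
)
```

One difference to keep in mind: `nunique` skips missing values by default, whereas `len(set(...))` would count a missing port as its own value.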

Labeled dataset

bads = set(pd.concat([attacks['source_computer'], attacks['destination_computer']]))

y = [x in bads for x in X.index]

The pair (X, y) is now a standard labeled classification dataset.
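The labeling step can be sketched on toy data as follows. The frames `toy_attacks` and `toy_X` are hypothetical stand-ins with invented values, and `Index.isin` is used here as a vectorized equivalent of the list comprehension.

```python
import pandas as pd

# Hypothetical attack log: each row names a source and a destination computer.
toy_attacks = pd.DataFrame({
    'source_computer': ['C17693', 'C17693'],
    'destination_computer': ['C305', 'C728'],
})

# Hypothetical feature matrix indexed by computer name.
toy_X = pd.DataFrame(
    {'unique_ports': [13, 1, 1, 4]},
    index=pd.Index(['C305', 'C10054', 'C17693', 'C999'],
                   name='destination_computer'),
)

# Any computer that appears on either side of an attack counts as "bad".
bads = set(pd.concat([toy_attacks['source_computer'],
                      toy_attacks['destination_computer']]))

# Boolean label per row of toy_X, in index order.
toy_y = toy_X.index.isin(bads)
```

Here `C305` and `C17693` are labeled `True`; `C728` is in `bads` but has no row in `toy_X`, so it gets no label.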

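from sklearn.model_selection import train_test_split
from sklearn.ensemble import AdaBoostClassifier
from sklearn.metrics import accuracy_score

X_train, X_test, y_train, y_test = train_test_split(X, y)

clf = AdaBoostClassifier()

accuracy_score(y_test, clf.fit(X_train, y_train).predict(X_test))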

0.92
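For readers without the LANL files at hand, the train-and-score step can be reproduced end to end on synthetic data. Everything below is an assumption made for illustration: the data is randomly generated, the names `X_demo` and `y_demo` are hypothetical, and `random_state` and `stratify` are added only to make the run repeatable. The resulting score says nothing about the real dataset.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import AdaBoostClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
n = 200

# Synthetic labels: roughly 30% of the computers are marked as attacked.
y_demo = rng.random(n) < 0.3

# Synthetic features; attacked computers get more unique ports by construction.
X_demo = pd.DataFrame({
    'unique_ports': rng.poisson(3, n) + 10 * y_demo,
    'average_packet': rng.normal(20, 5, n),
    'average_duration': rng.normal(5, 2, n),
})

X_train, X_test, y_train, y_test = train_test_split(
    X_demo, y_demo, stratify=y_demo, random_state=0)

clf = AdaBoostClassifier(random_state=0)
clf.fit(X_train, y_train)
acc = accuracy_score(y_test, clf.predict(X_test))

# Accuracy of always predicting the majority class, for comparison.
baseline = max(y_test.mean(), 1 - y_test.mean())
```

Comparing `acc` with `baseline` is a cheap sanity check: when few computers are attacked, a high accuracy may mostly reflect the class balance.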

Ready to catch a hacker?
