Document clustering

Cluster Analysis in Python

Shaumik Daityari

Business Analyst

Document clustering: concepts

- Clean data before processing

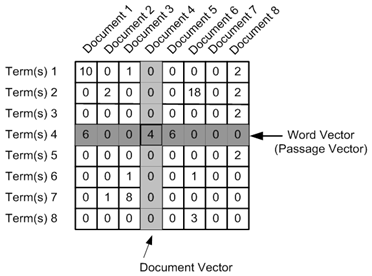

- Determine the importance of the terms in each document (stored in a TF-IDF matrix)

- Cluster the TF-IDF matrix

- Find top terms, documents in each cluster

Clean and tokenize data

- Convert text into smaller parts called tokens, clean data for processing

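    from nltk.tokenize import word_tokenize
    import re

    def remove_noise(text, stop_words=[]):
        tokens = word_tokenize(text)
        cleaned_tokens = []
        for token in tokens:
            # Strip every character that is not a letter or digit
            token = re.sub('[^A-Za-z0-9]+', '', token)
            # Keep tokens longer than one character that are not stop words
            if len(token) > 1 and token.lower() not in stop_words:
                # Get lowercase
                cleaned_tokens.append(token.lower())
        return cleaned_tokens

    # Illustrative stop-word list; replace it with one suited to your data
    stop_words = ['it', 'is', 'we', 'are', 'having', 'the']
    remove_noise("It is lovely weather we are having. I hope the weather continues.", stop_words)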

['lovely', 'weather', 'hope', 'weather', 'continues']

TF-IDF (Term Frequency - Inverse Document Frequency)

- A weighted measure that evaluates how important a word is to a document in a collection

    from sklearn.feature_extraction.text import TfidfVectorizer

    tfidf_vectorizer = TfidfVectorizer(max_df=0.8, max_features=50,
                                       min_df=0.2, tokenizer=remove_noise)
    tfidf_matrix = tfidf_vectorizer.fit_transform(data)

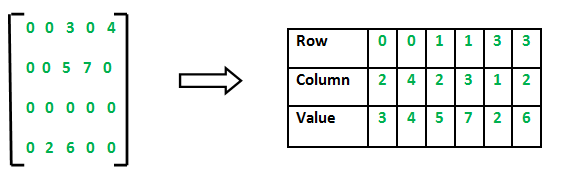
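To make the weighting concrete, here is a minimal sketch that computes TF-IDF by hand with the textbook formula tf * log(N / df). It is an illustration only: the function name `tf_idf` and the three toy documents are assumptions, and `TfidfVectorizer` by default uses a smoothed IDF and normalizes each row, so its numbers will differ.

```python
import math
from collections import Counter

def tf_idf(tokenized_docs):
    """Return one {term: weight} dict per document using tf * log(N / df)."""
    n_docs = len(tokenized_docs)
    # Document frequency: number of documents that contain each term
    doc_freq = Counter()
    for tokens in tokenized_docs:
        doc_freq.update(set(tokens))
    weights = []
    for tokens in tokenized_docs:
        counts = Counter(tokens)
        total = len(tokens)
        weights.append({
            # Term frequency in this document times inverse document frequency
            term: (count / total) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights

# Hypothetical, already-cleaned documents
docs = [
    ["lovely", "weather", "hope", "weather", "continues"],
    ["hotel", "room", "lovely"],
    ["bad", "weather", "bad", "breakfast"],
]
weights = tf_idf(docs)
for doc_weights in weights:
    # Print the highest-weighted term of each document
    print(max(doc_weights, key=doc_weights.get))
```

A term that is frequent in one document but rare across the collection gets a high weight; a term that appears in every document gets a weight of zero under this formula.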

Clustering with sparse matrix

- kmeans() in SciPy does not support sparse matrices

- Use .todense() to convert to a matrix

    from scipy.cluster.vq import kmeans
    cluster_centers, distortion = kmeans(tfidf_matrix.todense(), num_clusters)
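The conversion step can be tried on a small stand-in matrix. This is a sketch under stated assumptions: the weights are made up, `tfidf_like` is a hypothetical substitute for the vectorizer's sparse output, and `.toarray()` is used in place of `.todense()` because it returns a plain NumPy array rather than a `numpy.matrix`.

```python
import numpy as np
from scipy.cluster.vq import kmeans
from scipy.sparse import csr_matrix

# Hypothetical TF-IDF-like weights: 6 documents (rows) x 4 terms (columns)
dense_weights = np.array([
    [0.9, 0.8, 0.0, 0.0],
    [0.8, 0.9, 0.1, 0.0],
    [0.7, 0.9, 0.0, 0.1],
    [0.0, 0.1, 0.9, 0.8],
    [0.1, 0.0, 0.8, 0.9],
    [0.0, 0.0, 0.9, 0.7],
])
# Stand-in for the sparse matrix that fit_transform() would return
tfidf_like = csr_matrix(dense_weights)

num_clusters = 2
# Convert the sparse matrix to a dense array before clustering
observations = tfidf_like.toarray()
cluster_centers, distortion = kmeans(observations, num_clusters)

# One row per cluster center, one column per term
print(cluster_centers.shape)
print(distortion)
```

The initial centers are chosen at random, so the order of the rows in `cluster_centers` can change between runs.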

Top terms per cluster

- Cluster centers: lists with a size equal to the number of terms

- Each value in a cluster center is the importance of the corresponding term

- Create a dictionary and print top terms

    terms = tfidf_vectorizer.get_feature_names_out()

    for i in range(num_clusters):
        # Map each term to its weight in this cluster center
        center_terms = dict(zip(terms, list(cluster_centers[i])))
        # Sort terms by weight, highest first
        sorted_terms = sorted(center_terms, key=center_terms.get, reverse=True)
        print(sorted_terms[:3])

['room', 'hotel', 'staff']

['bad', 'location', 'breakfast']

More considerations

- Work with hyperlinks, emoticons etc.

- Normalize words (run, ran, running -> run)

- .todense() may not work with large datasets: the dense matrix may not fit in memory
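The considerations above can be sketched with the standard library alone. Everything here is an assumption for illustration: the URL pattern is deliberately simple, `BASE_FORMS` is a hypothetical lookup table standing in for a real stemmer or lemmatizer, and `dense_size_bytes` only estimates the memory a dense matrix of 64-bit floats would need. Emoticons are not handled.

```python
import re

# Simple pattern for hyperlinks; real-world URLs may need a stricter pattern
URL_PATTERN = re.compile(r"https?://\S+|www\.\S+")

# Hypothetical lookup table; a real pipeline would use a stemmer or lemmatizer
BASE_FORMS = {"ran": "run", "running": "run", "runs": "run"}

def strip_urls(text):
    """Replace hyperlinks with a space so they do not become tokens."""
    return URL_PATTERN.sub(" ", text)

def normalize(token):
    """Lowercase a token and map it to its base form when the table knows it."""
    token = token.lower()
    return BASE_FORMS.get(token, token)

def dense_size_bytes(n_docs, n_terms, bytes_per_value=8):
    """Estimate the memory needed for a dense n_docs x n_terms float matrix."""
    return n_docs * n_terms * bytes_per_value

text = "I ran today, see https://example.com/route and keep running"
tokens = [normalize(t) for t in re.findall(r"[A-Za-z0-9]+", strip_urls(text))]
print(tokens)

# One million documents and 50,000 terms as 64-bit floats
print(dense_size_bytes(1_000_000, 50_000) / 1e9, "GB")
```

The size estimate shows why converting a large sparse TF-IDF matrix to a dense one can fail: the dense form stores every zero explicitly.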

Next up: exercises!
