Informed Search: Coarse to Fine

Hyperparameter Tuning in Python

Alex Scriven

Data Scientist

Informed vs Uninformed Search

So far, everything we have done has been uninformed search:

Uninformed search: each iteration of hyperparameter tuning does not learn from the previous iterations.

This is what allows us to parallelize our work, but it doesn't sound very efficient.
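Because no iteration depends on another, every candidate can be evaluated at the same time. Here is a minimal sketch of that idea. It assumes a hypothetical `evaluate` function, a cheap toy stand-in for training and scoring a model, which is not from the course:

```python
import random
from concurrent.futures import ThreadPoolExecutor


def evaluate(params):
    """Hypothetical stand-in for fitting a model and returning its score."""
    max_depth, learn_rate = params
    # Toy score that is highest near max_depth=15, learn_rate=0.1
    return -((max_depth - 15) ** 2) / 100 - (learn_rate - 0.1) ** 2


random.seed(0)
# Uninformed: all candidates are chosen up front, before any result is seen
candidates = [(random.randint(1, 64), random.uniform(0.01, 2.0)) for _ in range(20)]

# No evaluation needs another's result, so they can all run concurrently
with ThreadPoolExecutor(max_workers=4) as pool:
    scores = list(pool.map(evaluate, candidates))

best_score, best_params = max(zip(scores, candidates))
print(f"best params {best_params} with score {best_score:.3f}")
```

For CPU-bound model training, a process pool (or scikit-learn's `n_jobs` parameter on its search classes) is the more usual choice. Threads just keep the sketch short.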

Informed vs Uninformed

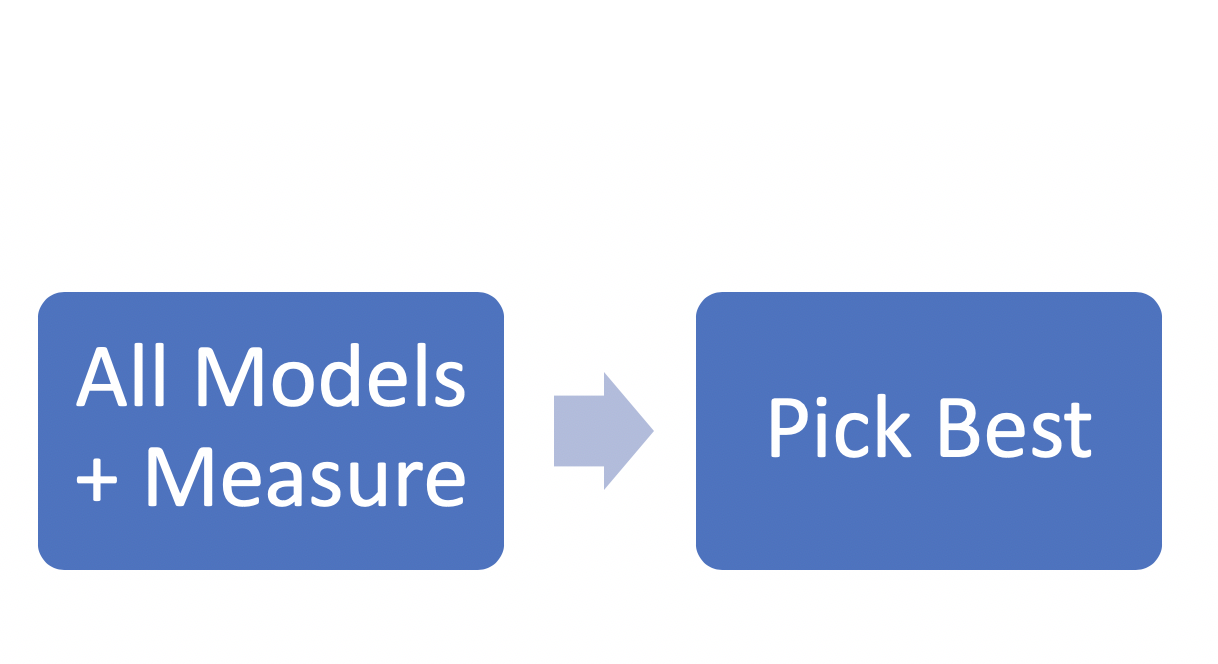

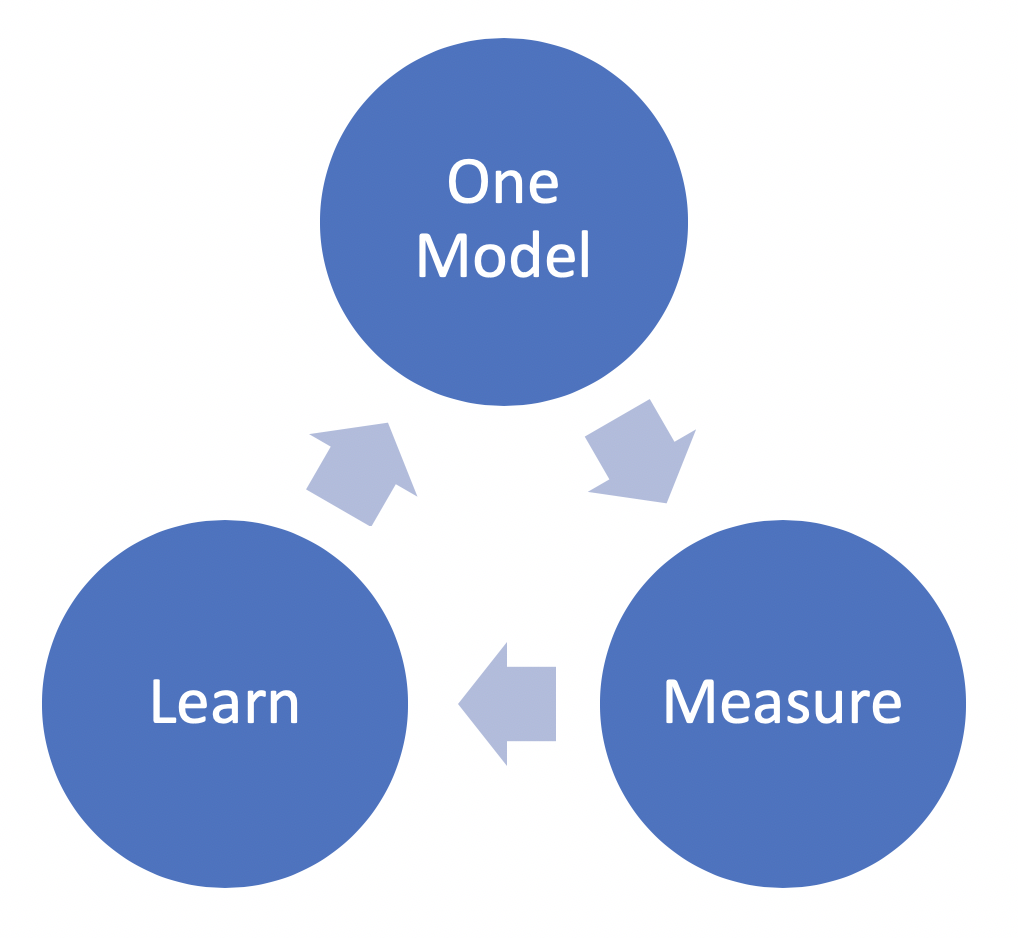

The process so far:

An alternate way:

Coarse to Fine Tuning

A basic informed search methodology:

Start out with a rough, random approach and iteratively refine your search.

The process is:

1. Random search

2. Find promising areas

3. Grid search in the smaller area

4. Continue until an optimal score is obtained

You could substitute step 3 with further random searches before the grid search.
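The steps above can be sketched as one round of coarse-to-fine search. This assumes a hypothetical toy `score` function in place of a model's validation accuracy. It also uses one simple way to define the "promising area": the bounding box of the ten best coarse results. That heuristic is an assumption, not the course's rule:

```python
import random
from itertools import product

import numpy as np


def score(max_depth, learn_rate):
    """Hypothetical objective standing in for a model's validation accuracy."""
    return -((max_depth - 20) ** 2) / 400 - (learn_rate - 0.5) ** 2


random.seed(42)

# 1. Coarse step: random search over the full space
coarse = [(random.randint(1, 64), random.uniform(0.01, 2.0)) for _ in range(50)]
coarse_results = sorted(((score(d, lr), d, lr) for d, lr in coarse), reverse=True)

# 2. Promising area: the bounding box of the 10 best coarse results
top = coarse_results[:10]
d_lo, d_hi = min(t[1] for t in top), max(t[1] for t in top)
lr_lo, lr_hi = min(t[2] for t in top), max(t[2] for t in top)

# 3. Fine step: grid search inside the smaller area
fine_grid = product(range(d_lo, d_hi + 1), np.linspace(lr_lo, lr_hi, 10))
best = max((score(d, lr), d, lr) for d, lr in fine_grid)

print(f"search area: max_depth {d_lo}-{d_hi}, learn_rate {lr_lo:.2f}-{lr_hi:.2f}")
print(f"best score {best[0]:.4f} at max_depth={best[1]}, learn_rate={best[2]:.3f}")
```

Step 4 would repeat steps 2 and 3 on the fine results, stopping once the best score stops improving.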

Why Coarse to Fine?

Coarse to fine tuning has some advantages:

- Utilizes the advantages of both grid and random search

- Wide search to begin with

- Deeper search once you know where a good spot is likely to be

- Better use of time and computational effort means you can iterate more quickly

No need to waste time on search spaces that are not giving good results!

Note: This isn't informed by each individual model, but by batches of models.

Undertaking Coarse to Fine

Let's take an example with the following hyperparameter ranges:

| Hyperparameter list | Values |
|---|---|
| max_depth_list | between 1 and 65 |
| min_sample_list | between 3 and 17 |
| learn_rate_list | 150 values between 0.01 and 2 |

How many possible models do we have?

```python
from itertools import product

combinations_list = [list(x) for x in product(max_depth_list, min_sample_list, learn_rate_list)]
print(len(combinations_list))
```

134400
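The count works out if the "between" bounds follow Python's `range` convention, which excludes the upper bound: 64 × 14 × 150 = 134400. A self-contained sketch under that assumption is shown next. The learning-rate upper bound of 2 is also an inference. The learn_rate values in the top-results table sit exactly on `np.linspace(0.01, 2, 150)`:

```python
from itertools import product

import numpy as np

# Assumed value lists; range() excludes its upper bound
max_depth_list = list(range(1, 65))  # 1 to 64: 64 values
min_sample_list = list(range(3, 17))  # 3 to 16: 14 values
learn_rate_list = list(np.linspace(0.01, 2, 150))  # 150 evenly spaced values

combinations_list = [list(x) for x in product(max_depth_list, min_sample_list, learn_rate_list)]
print(len(combinations_list))  # 64 * 14 * 150 = 134400
```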

Visualizing Coarse to Fine

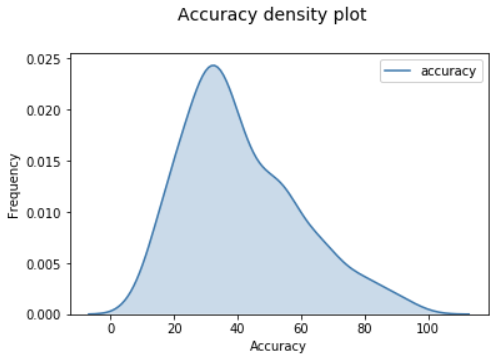

Let's do a random search on just 500 combinations.

Here we plot our accuracy scores:

Which models were the good ones?
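Finding the good ones amounts to scoring each sampled combination and sorting. The sketch that follows assumes the same value lists as before. It uses a hypothetical `evaluate` function in place of real model training, so its scores will not match the course's results:

```python
import random
from itertools import product

import numpy as np
import pandas as pd

max_depth_list = list(range(1, 65))
min_sample_list = list(range(3, 17))
learn_rate_list = list(np.linspace(0.01, 2, 150))
combinations_list = [list(x) for x in product(max_depth_list, min_sample_list, learn_rate_list)]


def evaluate(max_depth, min_samples_leaf, learn_rate):
    """Hypothetical stand-in for training a model and returning accuracy (%)."""
    return 100 - abs(max_depth - 20) / 2 - abs(min_samples_leaf - 6) - 5 * learn_rate


random.seed(0)
# Random search: 500 distinct combinations drawn without replacement
sampled = random.sample(combinations_list, k=500)

results = pd.DataFrame(sampled, columns=["max_depth", "min_samples_leaf", "learn_rate"])
results["accuracy"] = [evaluate(*row) for row in sampled]

# The "good ones" are simply the highest-scoring rows
print(results.sort_values("accuracy", ascending=False).head())
```

With a real model, `evaluate` might fit something like scikit-learn's `GradientBoostingClassifier(max_depth=..., min_samples_leaf=..., learning_rate=...)` and return its accuracy. This section does not say which model produced the results.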


Top results:

| max_depth | min_samples_leaf | learn_rate | accuracy (%) |
|---|---|---|---|
| 10 | 7 | 0.01 | 96 |
| 19 | 7 | 0.023355705 | 96 |
| 30 | 6 | 1.038389262 | 93 |
| 27 | 7 | 1.11852349 | 91 |
| 16 | 7 | 0.597651007 | 91 |
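A quick numeric complement to the plots that follow is to take the range each hyperparameter spans among the top models. The sketch uses the five rows from the table above as its only data. With a full run, the frame would hold all 500 results. This "min to max of the top rows" rule is one simple heuristic, not the course's method:

```python
import pandas as pd

# The five top results from the table above
results = pd.DataFrame({
    "max_depth": [10, 19, 30, 27, 16],
    "min_samples_leaf": [7, 7, 6, 7, 7],
    "learn_rate": [0.01, 0.023355705, 1.038389262, 1.11852349, 0.597651007],
    "accuracy": [96, 96, 93, 91, 91],
})

# Keep the best rows (all five here; the top slice of 500 in a real run)
top = results.sort_values("accuracy", ascending=False).head(5)

# The span of each hyperparameter among the top models suggests a narrower range
narrowed = top.drop(columns="accuracy").agg(["min", "max"])
print(narrowed)
```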


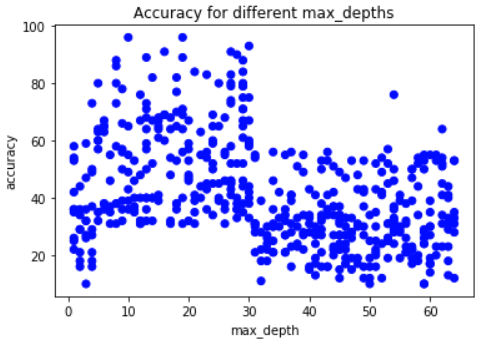

Let's visualize the max_depth values vs accuracy score:
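A plot like this can be drawn with a small matplotlib helper. The function name `plot_hyperparam_vs_score` is hypothetical, and the example data is just the top rows from the results table. The same helper works for `min_samples_leaf` and `learn_rate`:

```python
import matplotlib

matplotlib.use("Agg")  # render off-screen; drop this line in a notebook
import matplotlib.pyplot as plt
import pandas as pd


def plot_hyperparam_vs_score(results, param, score="accuracy"):
    """Scatter one hyperparameter against the score for every model tried."""
    fig, ax = plt.subplots()
    ax.scatter(results[param], results[score])
    ax.set_xlabel(param)
    ax.set_ylabel(score)
    ax.set_title(f"{param} vs {score}")
    return ax


# Example with the top rows from the results table; a real run would pass all 500
results = pd.DataFrame({
    "max_depth": [10, 19, 30, 27, 16],
    "accuracy": [96, 96, 93, 91, 91],
})
ax = plot_hyperparam_vs_score(results, "max_depth")
```

Call `plt.show()` or `ax.figure.savefig(...)` to actually display or save the figure.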


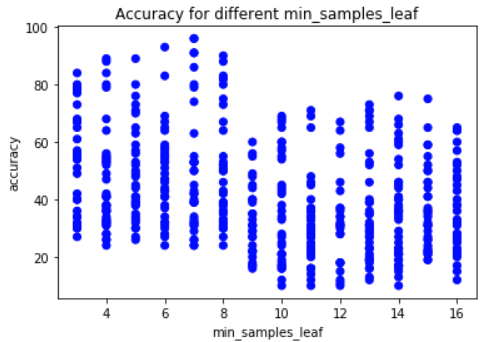

min_samples_leaf better below 8

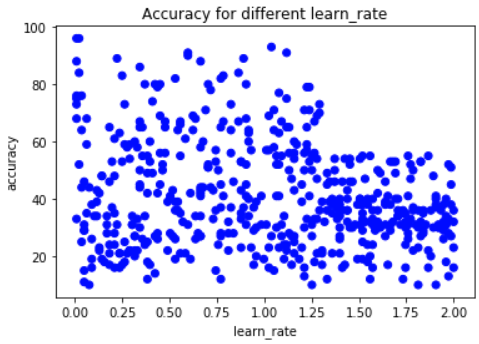

learn_rate worse above 1.3

The next steps

What we know from iteration one:

| Hyperparameter | Finding |
|---|---|
| max_depth | between 8 and 30 |
| learn_rate | less than 1.3 |
| min_samples_leaf | perhaps less than 8 |

Where to next? Another random or grid search with what we know!
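The findings from iteration one can be turned into a smaller search space for the next round. This sketch makes several assumptions. The original learning rates are taken to be `np.linspace(0.01, 2, 150)`, "between 8 and 30" is read as inclusive, and min_samples_leaf keeps its old lower bound of 3:

```python
from itertools import product

import numpy as np

# Assumed original learning-rate values from the first iteration
learn_rate_list = np.linspace(0.01, 2, 150)

# Narrowed ranges from iteration one
max_depth_list = list(range(8, 31))  # max_depth between 8 and 30 (inclusive)
min_sample_list = list(range(3, 8))  # min_samples_leaf below 8, old lower bound of 3
learn_rate_fine = learn_rate_list[learn_rate_list < 1.3]  # learn_rate less than 1.3

fine_combinations = list(product(max_depth_list, min_sample_list, learn_rate_fine))
print(len(fine_combinations))  # 23 * 5 * 97 = 11155
```

That is about 8% of the original 134400 combinations. It is small enough for another random search, or for a grid search once the learn_rate spacing is coarsened.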

Note: This was only bivariate analysis. You can explore looking at multiple hyperparameters (3, 4 or more!) on a single graph, but that's beyond the scope of this course.

Let's practice!
