Hyperparameter Tuning in Python

Alex Scriven

Data Scientist

Introduction

Why study this course?

- New, complex algorithms with many hyperparameters

- Tuning can take a lot of time

- Develops deeper understanding beyond the default settings

You may be surprised what you find under the hood!

The dataset

The dataset relates to credit card defaults.

It contains variables related to the financial history of some consumers in Taiwan. It has 30,000 users and 24 attributes.

Our modeling target is whether each consumer defaulted on their loan.

The data has already been preprocessed, and at times we will take smaller samples to demonstrate a concept.

Extra information about the dataset can be found here:

https://archive.ics.uci.edu/ml/datasets/default+of+credit+card+clients

Parameters Overview

What is a parameter?

- Components of the model learned during the modeling process

- You do not set these manually (you can't in fact!)

- The algorithm will discover these for you
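To make the distinction concrete, here is a minimal sketch in plain Python. It is not from the course materials: the toy data, variable names, and the choice of gradient descent are assumptions for illustration only. The learning rate and iteration count are set by hand, while the weight and bias are discovered by the algorithm.

```python
import math

# Toy data, made up for illustration: one feature x, binary label y
X = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 4.5]
y = [0, 0, 0, 0, 1, 1, 1, 1]

# Set by us before training (these are NOT parameters)
learning_rate = 0.1
n_iterations = 5000

# Parameters: start at zero and are learned from the data
weight, bias = 0.0, 0.0


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Plain batch gradient descent on the logistic loss
for _ in range(n_iterations):
    grad_w = 0.0
    grad_b = 0.0
    for xi, yi in zip(X, y):
        error = sigmoid(weight * xi + bias) - yi
        grad_w += error * xi
        grad_b += error
    weight -= learning_rate * grad_w / len(X)
    bias -= learning_rate * grad_b / len(X)

predictions = [int(sigmoid(weight * xi + bias) >= 0.5) for xi in X]
print("Learned weight:", weight)
print("Learned bias:", bias)
print("Predictions:", predictions)
```

We never typed in a value for `weight` or `bias`; the training loop found them.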

Parameters in Logistic Regression

A simple logistic regression model:

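    from sklearn.linear_model import LogisticRegression

    # Fit with default settings, then inspect the learned coefficients
    log_reg_clf = LogisticRegression()
    log_reg_clf.fit(X_train, y_train)
    print(log_reg_clf.coef_)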

    array([[-2.88651273e-06, -8.23168511e-03,  7.50857018e-04,
             3.94375060e-04,  3.79423562e-04,  4.34612046e-04,
             4.37561467e-04,  4.12107102e-04, -6.41089138e-06,
            -4.39364494e-06, cont... ]])

Parameters in Logistic Regression

Tidy up the coefficients:

    import pandas as pd

    # Get the original variable names
    original_variables = list(X_train.columns)

    # Zip together the names and coefficients
    zipped_together = list(zip(original_variables, log_reg_clf.coef_[0]))
    coefs = [list(x) for x in zipped_together]

    # Put into a DataFrame with column labels
    coefs = pd.DataFrame(coefs, columns=["Variable", "Coefficient"])

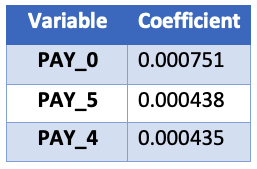

Parameters in Logistic Regression

Now sort and print the top three coefficients:

    coefs.sort_values(by=["Coefficient"], axis=0, inplace=True, ascending=False)
    print(coefs.head(3))
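The course data is not bundled with these slides, so here is a self-contained sketch of the same fit, tidy, and sort steps on synthetic data. The data from `make_classification`, the column names `feat_0` to `feat_4`, and the variable names are all assumptions for illustration, not the course's own.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic stand-in for the credit card data (hypothetical column names)
X_arr, y_demo = make_classification(
    n_samples=500, n_features=5, n_informative=3, random_state=42
)
X_demo = pd.DataFrame(X_arr, columns=["feat_{}".format(i) for i in range(5)])

log_reg_demo = LogisticRegression()
log_reg_demo.fit(X_demo, y_demo)

# One learned coefficient per input column, paired with its name
coef_table = pd.DataFrame({
    "Variable": X_demo.columns,
    "Coefficient": log_reg_demo.coef_[0],
})

# Largest coefficients first
coef_table = coef_table.sort_values(by="Coefficient", ascending=False)
print(coef_table.head(3))
```

Sorting this way ranks by signed value. If you care about strength regardless of direction, sorting on the absolute value is one alternative.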

Where to find Parameters

To find parameters we need:

- To know a bit about the algorithm

- To consult the scikit-learn documentation

Learned parameters are listed under the 'Attributes' section of an estimator's documentation page, not the 'Parameters' section (which lists the settings you pass in yourself)!

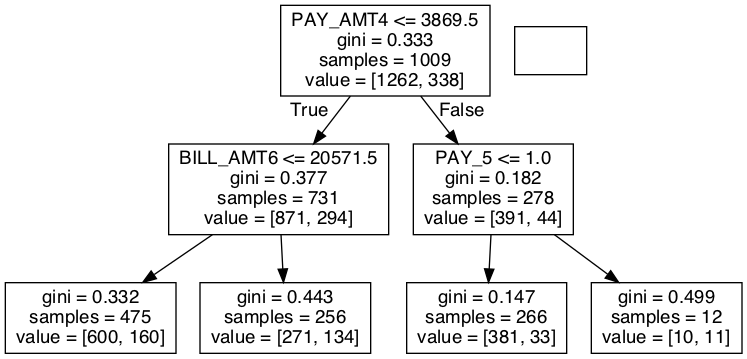
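The same split can be seen from code. This is a small sketch on synthetic data (the data and variable names are assumptions): values documented under 'Parameters' are available through `get_params()` before fitting, while values documented under 'Attributes' end in an underscore and only appear after `fit`.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic data, for illustration only
X_demo, y_demo = make_classification(n_samples=200, n_features=4, random_state=0)

clf = LogisticRegression(C=0.5)

# 'Parameters' section of the docs: values we pass in ourselves
print(clf.get_params()["C"])

# 'Attributes' section of the docs: not present until the model is fitted
fitted_before = hasattr(clf, "coef_")
clf.fit(X_demo, y_demo)
fitted_after = hasattr(clf, "coef_")
print(fitted_before, fitted_after)

# Attributes set during fit follow the trailing-underscore convention
learned = [
    name for name in vars(clf)
    if name.endswith("_") and not name.startswith("_")
]
print(learned)
```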

Parameters in Random Forest

What about tree-based algorithms?

A random forest has no coefficients. Its parameters are the node decisions (which feature to split on, and at what value).

    from sklearn.ensemble import RandomForestClassifier

    # A simple random forest estimator
    rf_clf = RandomForestClassifier(max_depth=2)
    rf_clf.fit(X_train, y_train)

    # Pull out one tree from the forest
    chosen_tree = rf_clf.estimators_[7]

For simplicity we will show the final product (an image) of the decision tree. Feel free to explore the packages used for this (graphviz and pydotplus) yourself.
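If you just want a quick look at a tree without installing plotting packages, scikit-learn's `export_text` prints the node decisions as text. This is a swapped-in alternative to the image approach used in the slides, sketched on synthetic data with hypothetical feature names.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.tree import export_text

# Synthetic data and hypothetical feature names, for illustration only
X_demo, y_demo = make_classification(n_samples=300, n_features=4, random_state=1)
feature_names = ["feat_0", "feat_1", "feat_2", "feat_3"]

rf_demo = RandomForestClassifier(n_estimators=10, max_depth=2, random_state=1)
rf_demo.fit(X_demo, y_demo)

# Text rendering of one tree: each indented line is a node decision or a leaf
tree_text = export_text(rf_demo.estimators_[7], feature_names=feature_names)
print(tree_text)
```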

Extracting Node Decisions

We can pull out details of the left, second-from-top node:

    # Get the column it split on
    split_column = chosen_tree.tree_.feature[1]
    split_column_name = X_train.columns[split_column]

    # Get the level it split on
    split_value = chosen_tree.tree_.threshold[1]
    print("This node split on feature {}, at a value of {}"
          .format(split_column_name, split_value))

"This node split on feature PAY_0, at a value of 1.5"
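The same arrays can be walked to list every split in a tree, not just node 1. The sketch below uses synthetic data (an assumption, as are the variable names) and treats a node as a leaf when its left and right child ids are equal, which is how scikit-learn marks leaves in `tree_`.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic data, for illustration only
X_demo, y_demo = make_classification(n_samples=300, n_features=4, random_state=1)

rf_demo = RandomForestClassifier(n_estimators=10, max_depth=2, random_state=1)
rf_demo.fit(X_demo, y_demo)

tree = rf_demo.estimators_[0].tree_

# Collect (node id, feature index, threshold) for every non-leaf node
splits = []
for node_id in range(tree.node_count):
    is_leaf = tree.children_left[node_id] == tree.children_right[node_id]
    if not is_leaf:
        splits.append(
            (node_id, int(tree.feature[node_id]), float(tree.threshold[node_id]))
        )

for node_id, feature_idx, threshold in splits:
    print("Node {} splits on feature index {} at {:.3f}"
          .format(node_id, feature_idx, threshold))
```

Node 0 is the root and node 1 is its left child, which is why index 1 picks out the left, second-from-top node. If that node happens to be a leaf, it has no split to report.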

Let's practice!