Introducing Grid Search

Hyperparameter Tuning in Python

Alex Scriven

Data Scientist

Automating 2 Hyperparameters

Your previous work:

from sklearn.metrics import accuracy_score
from sklearn.neighbors import KNeighborsClassifier

neighbors_list = [3, 5, 10, 20, 50, 75]
accuracy_list = []
for test_number in neighbors_list:
    model = KNeighborsClassifier(n_neighbors=test_number)
    predictions = model.fit(X_train, y_train).predict(X_test)
    accuracy = accuracy_score(y_test, predictions)
    accuracy_list.append(accuracy)

We then collated the results in a DataFrame to analyse.


What about testing values of 2 hyperparameters?

Using a GBM algorithm:

- learn_rate: [0.001, 0.01, 0.05]

- max_depth: [4, 6, 8, 10]

We could use a (nested) for loop!


First, a model creation function:

from sklearn.ensemble import GradientBoostingClassifier

def gbm_grid_search(learn_rate, max_depth):
    model = GradientBoostingClassifier(
        learning_rate=learn_rate,
        max_depth=max_depth)
    predictions = model.fit(X_train, y_train).predict(X_test)
    return [learn_rate, max_depth, accuracy_score(y_test, predictions)]


Now we can loop through our lists of hyperparameters and call our function:

learn_rate_list = [0.001, 0.01, 0.05]
max_depth_list = [4, 6, 8, 10]
results_list = []
for learn_rate in learn_rate_list:
    for max_depth in max_depth_list:
        results_list.append(gbm_grid_search(learn_rate, max_depth))


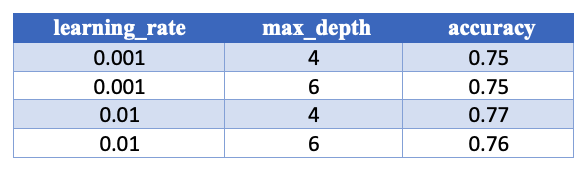

We can put these results into a DataFrame as well and print out:

results_df = pd.DataFrame(results_list, columns=['learning_rate', 'max_depth', 'accuracy'])

print(results_df)
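The snippets above assume that X_train, X_test, y_train and y_test already exist. A minimal self-contained sketch of the same two-hyperparameter loop follows. It assumes a synthetic dataset from make_classification as a stand-in for the course data, and it sets n_estimators and random_state (neither appears in the slides) only to keep the run fast and repeatable.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Assumption: synthetic data stands in for the course's X_train / X_test
X, y = make_classification(n_samples=300, n_features=10, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42)

learn_rate_list = [0.001, 0.01, 0.05]
max_depth_list = [4, 6, 8, 10]

def gbm_grid_search(learn_rate, max_depth):
    # n_estimators and random_state are additions for speed and repeatability
    model = GradientBoostingClassifier(
        learning_rate=learn_rate, max_depth=max_depth,
        n_estimators=20, random_state=42)
    predictions = model.fit(X_train, y_train).predict(X_test)
    return [learn_rate, max_depth, accuracy_score(y_test, predictions)]

# One model per (learn_rate, max_depth) pair: 3 x 4 = 12 models
results_list = []
for learn_rate in learn_rate_list:
    for max_depth in max_depth_list:
        results_list.append(gbm_grid_search(learn_rate, max_depth))

results_df = pd.DataFrame(
    results_list, columns=['learning_rate', 'max_depth', 'accuracy'])
print(results_df)
```

The accuracy values depend on the synthetic data, so they say nothing about how these settings would perform on a real dataset.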

How many models?

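Adding more hyperparameters and values quickly increases the number of models built.

- The relationship is not linear: the number of models is the product of the number of values for each hyperparameter, so it grows exponentially with the number of hyperparameters

- Adding one more value for a hyperparameter adds more than one model: it adds one for every combination of the other hyperparameters' values

- 5 values for Hyperparameter 1 and 10 values for Hyperparameter 2 make 5 x 10 = 50 models!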

What about cross-validation?

- 10-fold cross-validation would make 50x10 = 500 models!
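The arithmetic above can be sketched in a few lines. The helper name count_models and the placeholder grid are hypothetical, chosen only to reproduce the 5 x 10 example.

```python
import math

def count_models(grid, cv_folds=1):
    """Number of model fits needed to try every combination in the grid."""
    n_combinations = math.prod(len(values) for values in grid.values())
    return n_combinations * cv_folds

# Hypothetical grid: 5 values for one hyperparameter, 10 for the other
grid = {
    "hyperparameter_1": list(range(5)),
    "hyperparameter_2": list(range(10)),
}

print(count_models(grid))               # 50
print(count_models(grid, cv_folds=10))  # 500
```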

From 2 to N hyperparameters

What about adding more hyperparameters?

We could nest our loop!

# Adjust the list of values to test
learn_rate_list = [0.001, 0.01, 0.1, 0.2, 0.3, 0.4, 0.5]
max_depth_list = [4, 6, 8, 10, 12, 15, 20, 25, 30]
subsample_list = [0.4, 0.6, 0.7, 0.8, 0.9]
max_features_list = ['sqrt', 'log2']  # 'auto' is no longer accepted by recent scikit-learn releases


Adjust our function:

def gbm_grid_search(learn_rate, max_depth, subsample, max_features):
    model = GradientBoostingClassifier(
        learning_rate=learn_rate,
        max_depth=max_depth,
        subsample=subsample,
        max_features=max_features)
    predictions = model.fit(X_train, y_train).predict(X_test)
    return [learn_rate, max_depth, subsample, max_features,
            accuracy_score(y_test, predictions)]


Adjusting our for loop (nesting):

results_list = []
for learn_rate in learn_rate_list:
    for max_depth in max_depth_list:
        for subsample in subsample_list:
            for max_features in max_features_list:
                results_list.append(gbm_grid_search(learn_rate, max_depth,
                                                    subsample, max_features))

results_df = pd.DataFrame(results_list, columns=['learning_rate',
    'max_depth', 'subsample', 'max_features', 'accuracy'])
print(results_df)


How many models now?

- 7 x 9 x 5 x 2 = 630 models (6,300 with 10-fold cross-validation!)

We can't keep nesting forever!

Plus, what if we wanted:

- Details on training times & scores

- Details on cross-validation scores

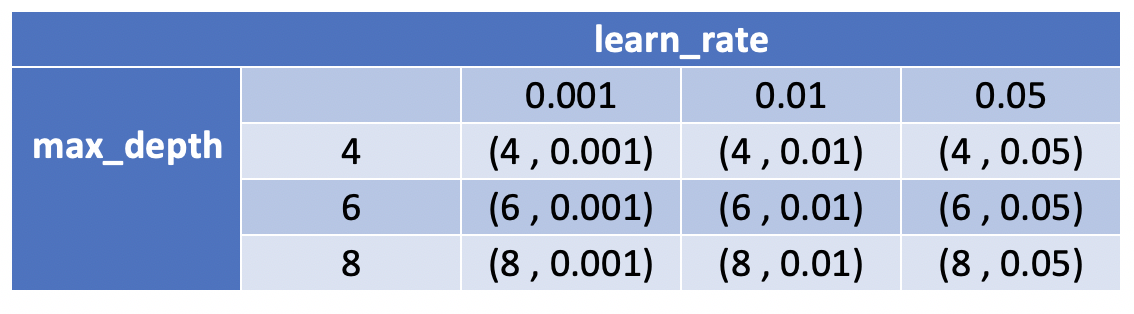
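One way to avoid ever-deeper nesting is to generate every combination from a single dictionary of value lists. The sketch below uses itertools.product from the standard library. The names param_grid and iter_combinations are hypothetical, and the two max_features options are illustrative placeholders.

```python
from itertools import product

param_grid = {
    "learning_rate": [0.001, 0.01, 0.1, 0.2, 0.3, 0.4, 0.5],
    "max_depth": [4, 6, 8, 10, 12, 15, 20, 25, 30],
    "subsample": [0.4, 0.6, 0.7, 0.8, 0.9],
    "max_features": ["sqrt", "log2"],  # illustrative: any two options
}

def iter_combinations(grid):
    """Yield one dict of hyperparameter values per grid combination."""
    names = list(grid)
    for values in product(*grid.values()):
        yield dict(zip(names, values))

combinations = list(iter_combinations(param_grid))
print(len(combinations))  # 630
print(combinations[0])
```

Adding a fifth hyperparameter then means adding one dictionary entry rather than another loop level. Each dictionary can be unpacked into an estimator, for example GradientBoostingClassifier(**params).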

Introducing Grid Search

Let's create a grid:

- Down the left: all values of max_depth

- Across the top: all values of learning_rate

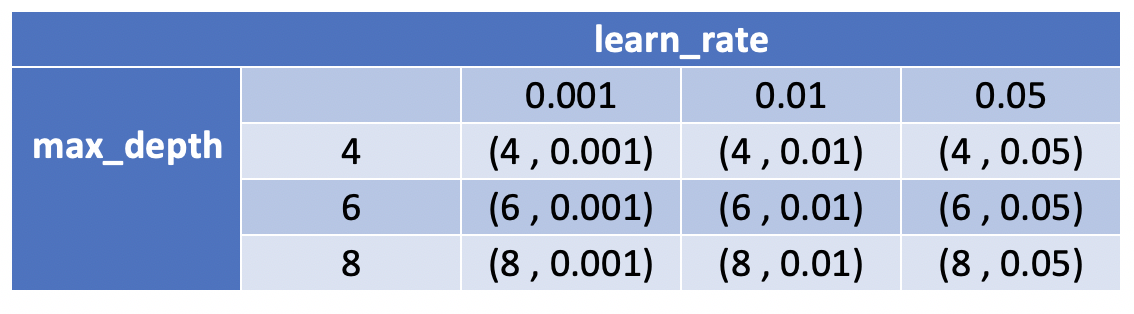


Working through each cell on the grid:

The cell (4, 0.001) is equivalent to creating an estimator like so:

GradientBoostingClassifier(max_depth=4, learning_rate=0.001)
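The cell-to-estimator mapping can be sketched as a dictionary keyed by grid position. This assumes the grid uses the learn_rate and max_depth values listed earlier for the GBM example; the variable names are hypothetical.

```python
learning_rates = [0.001, 0.01, 0.05]  # across the top
max_depths = [4, 6, 8, 10]            # down the left

# One cell per (max_depth, learning_rate) pair; each cell holds the
# keyword arguments that would be passed to the estimator
grid = {
    (depth, rate): {"max_depth": depth, "learning_rate": rate}
    for depth in max_depths
    for rate in learning_rates
}

print(len(grid))         # 12
print(grid[(4, 0.001)])  # {'max_depth': 4, 'learning_rate': 0.001}
```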

Grid Search Pros & Cons

Some advantages of this approach:


- You don't have to write thousands of lines of code

- Finds the best model within the grid (note: only the combinations included in the grid are tested)

- Easy to explain


Some disadvantages of this approach:

- Computationally expensive! Remember how quickly we made 6,000+ models?

- It is 'uninformed': the results of one model don't inform the choice of the next model.

We will cover 'informed' methods later!

Let's practice!
