Hyperparameters Overview

Hyperparameter Tuning in Python

Alex Scriven

Data Scientist

What is a hyperparameter?

Hyperparameters:

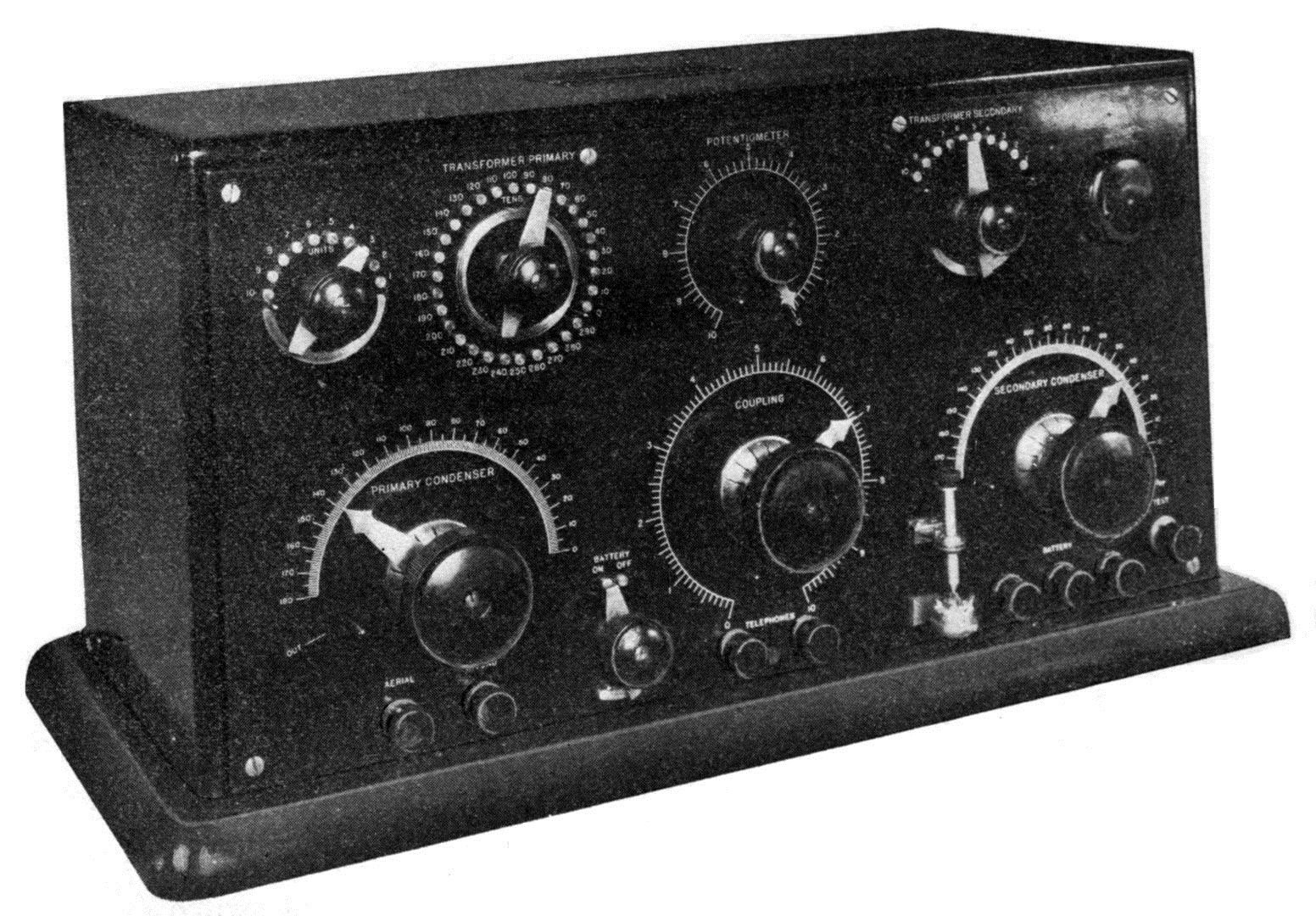

- Something you set before the modeling process (like knobs on an old radio)

- You also 'tune' your hyperparameters!

- The algorithm does not learn these from the data (unlike model parameters, such as regression coefficients)
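To make the distinction concrete, here is a minimal sketch using scikit-learn's LogisticRegression on synthetic data (the dataset and the value C=0.5 are illustrative assumptions, not from the slides). The hyperparameter C is chosen before training and is left untouched by fit(), while coef_ and intercept_ are learned from the data.

```python
# Hyperparameter vs. learned parameter: a minimal illustration
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic data, purely for illustration
X, y = make_classification(n_samples=200, n_features=5, random_state=42)

# C (inverse regularization strength) is set by us before training
log_reg = LogisticRegression(C=0.5)
log_reg.fit(X, y)

# The hyperparameter is not changed by training...
print("C after fit:", log_reg.get_params()["C"])

# ...while these model parameters were learned from X and y
print("Learned coefficients:", log_reg.coef_)
print("Learned intercept:", log_reg.intercept_)
```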

Hyperparameters in Random Forest

Create a simple random forest estimator and print it out:

from sklearn.ensemble import RandomForestClassifier
rf_clf = RandomForestClassifier()
print(rf_clf)

RandomForestClassifier(n_estimators='warn', criterion='gini',
    max_depth=None, max_features='auto', max_leaf_nodes=None,
    min_impurity_decrease=0.0, min_impurity_split=None,
    min_samples_leaf=1, min_samples_split=2,
    min_weight_fraction_leaf=0.0, n_jobs=None,
    oob_score=False, random_state=None, verbose=0, bootstrap=True,
    class_weight=None, warm_start=False)

(This output is from an older scikit-learn release; since version 0.23, print() shows only the parameters that differ from their defaults.)
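Because recent versions print only non-default values, a version-independent way to see every hyperparameter is the estimator's get_params() method, which returns a dict of names and current values. A minimal sketch; the exact names and defaults you see will depend on your installed scikit-learn version.

```python
from sklearn.ensemble import RandomForestClassifier

rf_clf = RandomForestClassifier()

# get_params() maps each hyperparameter name to its current value
params = rf_clf.get_params()

# Print them alphabetically, one per line
for name, value in sorted(params.items()):
    print(f"{name}: {value!r}")
```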

More info: http://scikit-learn.org

A single hyperparameter

Take the n_estimators parameter.

Data Type & Default Value:

n_estimators : integer, optional (default=10; changed to 100 in scikit-learn 0.22)

Definition:

The number of trees in the forest.
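One way to confirm this definition is to fit a small forest and count the trees it stores in its estimators_ attribute. The synthetic dataset and the value n_estimators=25 below are illustrative choices.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Small synthetic dataset, purely for illustration
X, y = make_classification(n_samples=200, n_features=8, random_state=0)

# n_estimators controls how many decision trees are built
rf_clf = RandomForestClassifier(n_estimators=25, random_state=0)
rf_clf.fit(X, y)

# After fitting, the individual trees are stored in estimators_
print(len(rf_clf.estimators_))  # 25
```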

Setting hyperparameters

Set some hyperparameters at estimator creation:

rf_clf = RandomForestClassifier(n_estimators=100, criterion='entropy')
print(rf_clf)

RandomForestClassifier(n_estimators=100, criterion='entropy',
    max_depth=None, max_features='auto', max_leaf_nodes=None,
    min_impurity_decrease=0.0, min_impurity_split=None,
    min_samples_leaf=1, min_samples_split=2,
    min_weight_fraction_leaf=0.0, n_jobs=None,
    oob_score=False, random_state=None, verbose=0, bootstrap=True,
    class_weight=None, warm_start=False)
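Hyperparameters can also be changed after an estimator has been created, using set_params(). A minimal sketch (the new values 200 and 5 are arbitrary); on an already-fitted estimator the new values only take effect the next time fit() is called.

```python
from sklearn.ensemble import RandomForestClassifier

rf_clf = RandomForestClassifier(n_estimators=100, criterion="entropy")

# set_params() updates hyperparameters in place and returns the estimator
rf_clf.set_params(n_estimators=200, max_depth=5)

params = rf_clf.get_params()
print(params["n_estimators"], params["max_depth"])  # 200 5
```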

Hyperparameters in Logistic Regression

Find the hyperparameters of a Logistic Regression:

from sklearn.linear_model import LogisticRegression
log_reg_clf = LogisticRegression()
print(log_reg_clf)

LogisticRegression(C=1.0, class_weight=None, dual=False, fit_intercept=True,
    intercept_scaling=1, max_iter=100, multi_class='warn', n_jobs=None,
    penalty='l2', random_state=None, solver='warn', tol=0.0001, verbose=0,
    warm_start=False)

There are fewer hyperparameters to tune with this algorithm!
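You can check this claim for your own installation by counting the entries returned by get_params() for each estimator. A minimal sketch; the exact counts vary between scikit-learn versions, so none are hard-coded here.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression

# Count the constructor hyperparameters each estimator exposes
n_rf = len(RandomForestClassifier().get_params())
n_lr = len(LogisticRegression().get_params())

print(f"RandomForestClassifier: {n_rf} hyperparameters")
print(f"LogisticRegression: {n_lr} hyperparameters")
```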

Hyperparameter Importance

Some hyperparameters are more important than others.

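Some will not help model performance. For the random forest classifier:

- n_jobs (how many CPU cores to use in parallel)

- random_state (the random seed, for reproducibility)

- verbose (how much progress information is printed)

Not all hyperparameters make sense to 'tune'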
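As an illustration of why n_jobs is not worth tuning, the sketch below fits two forests that differ only in n_jobs, with the same random_state, and compares their predictions. The synthetic data and settings are illustrative assumptions; n_jobs changes how fast the forest trains, not what it learns.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

X, y = make_classification(n_samples=300, n_features=10, random_state=1)

# Identical settings except for n_jobs (1 core vs. 2 cores)
rf_serial = RandomForestClassifier(n_estimators=50, random_state=1, n_jobs=1)
rf_parallel = RandomForestClassifier(n_estimators=50, random_state=1, n_jobs=2)
rf_serial.fit(X, y)
rf_parallel.fit(X, y)

# With a fixed random_state, parallelism does not change the fitted model
same = np.array_equal(rf_serial.predict(X), rf_parallel.predict(X))
print("Identical predictions:", same)
```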

Random Forest: Important Hyperparameters

Some important hyperparameters:

- n_estimators (high value)

- max_features (try different values)

- max_depth & min_samples_leaf (important for overfitting)

- (maybe) criterion

Remember: this is only a guide
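To see why max_depth and min_samples_leaf matter for overfitting, the sketch below compares training and test accuracy for a few settings. The dataset (with 10% label noise via flip_y) and the specific values tried are illustrative assumptions, not recommendations; a large gap between training and test accuracy is the usual sign of overfitting.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Noisy synthetic data, purely for illustration
X, y = make_classification(n_samples=1000, n_features=20, n_informative=5,
                           flip_y=0.1, random_state=7)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=7)

# From unrestricted trees to increasingly constrained ones
settings = [
    {"max_depth": None, "min_samples_leaf": 1},
    {"max_depth": 8, "min_samples_leaf": 5},
    {"max_depth": 4, "min_samples_leaf": 20},
]

results = []
for params in settings:
    rf = RandomForestClassifier(n_estimators=100, random_state=7, **params)
    rf.fit(X_train, y_train)
    train_acc = rf.score(X_train, y_train)  # accuracy on seen data
    test_acc = rf.score(X_test, y_test)     # accuracy on unseen data
    results.append((params, train_acc, test_acc))
    print(f"{params}: train={train_acc:.3f}, test={test_acc:.3f}, "
          f"gap={train_acc - test_acc:.3f}")
```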

How do you find the hyperparameters that matter?

Some resources for learning this:

- Academic papers

- Blogs and tutorials from trusted sources (like DataCamp!)

- The scikit-learn documentation

- Experience
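Alongside the online documentation, you can read the default values for the version you actually have installed, which helps when defaults change between releases. A minimal sketch using Python's standard inspect module on the estimator's constructor; help(RandomForestClassifier) prints the full docstring, including each parameter's description.

```python
import inspect

from sklearn.ensemble import RandomForestClassifier

# inspect.signature() on a class describes its __init__ parameters
signature = inspect.signature(RandomForestClassifier)
defaults = {name: p.default for name, p in signature.parameters.items()}

# Defaults as defined by your installed scikit-learn version
print("n_estimators default:", defaults["n_estimators"])
print("max_depth default:", defaults["max_depth"])
```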

Let's practice!
