Understanding a grid search output

Hyperparameter Tuning in Python

Alex Scriven

Data Scientist

Analyzing the output

Let's analyze the GridSearchCV outputs.

The GridSearchCV properties fall into three groups:

- A results log: cv_results_
- The best results: best_index_, best_params_ & best_score_
- 'Extra information': scorer_, n_splits_ & refit_time_

Accessing object properties

Properties are accessed using dot notation.

For example:

grid_search_object.property

where property is the name of the property you want to retrieve.
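The sketch below makes the dot notation concrete. It fits a small GridSearchCV and reads a few properties. The synthetic data from make_classification, the 10-tree forest and the three-hyperparameter grid are hypothetical stand-ins for the course's grid_rf_class, not its original setup.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Hypothetical data and grid (3 x 2 x 2 = 12 squares), not the course's exact setup
X, y = make_classification(n_samples=200, random_state=0)
param_grid = {"max_depth": [2, 4, 6], "min_samples_leaf": [1, 2],
              "max_features": ["sqrt", "log2"]}
grid_rf_class = GridSearchCV(
    RandomForestClassifier(n_estimators=10, random_state=42),
    param_grid, scoring="roc_auc", cv=5, return_train_score=True)

# Properties ending in "_" are only set by .fit(), so they do not exist yet
print(hasattr(grid_rf_class, "best_score_"))  # False
grid_rf_class.fit(X, y)

# Dot notation: grid_search_object.property
print(grid_rf_class.best_params_)
print(grid_rf_class.best_score_)
print(grid_rf_class.n_splits_)
```

The trailing underscore is the scikit-learn convention for attributes that are learned during fitting.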

The .cv_results_ property

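The cv_results_ property is a dictionary whose keys are column headers and whose values are columns of results.

Read this into a DataFrame to print and analyze it:

import pandas as pd

cv_results_df = pd.DataFrame(grid_rf_class.cv_results_)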

print(cv_results_df.shape)

(12, 23)

- 12 rows: one for each of the 12 squares in our grid, i.e. the 12 models we ran
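The sketch below builds the same kind of DataFrame on a hypothetical grid. The synthetic data, the forest size and the grid values are assumptions. The grid is 3 x 2 x 2, so it also produces one row per grid square, 12 in total.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Hypothetical setup: synthetic data and a 3 x 2 x 2 = 12-square grid
X, y = make_classification(n_samples=200, random_state=0)
param_grid = {"max_depth": [2, 4, 6], "min_samples_leaf": [1, 2],
              "max_features": ["sqrt", "log2"]}
grid_rf_class = GridSearchCV(
    RandomForestClassifier(n_estimators=10, random_state=42),
    param_grid, scoring="roc_auc", cv=5, return_train_score=True)
grid_rf_class.fit(X, y)

# Each key of cv_results_ becomes a column; each grid square becomes a row
cv_results_df = pd.DataFrame(grid_rf_class.cv_results_)
print(cv_results_df.shape)
print(cv_results_df.columns.tolist())
```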

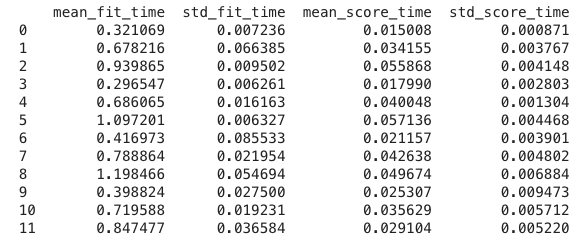

The .cv_results_ 'time' columns

The time columns refer to the time it took to fit (and score) the model.

Remember how we did 5-fold cross-validation? Each model was fit and scored 5 times, and these columns store the mean and standard deviation of those times, in seconds.
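One way to pull out only the time columns is to filter the column names on the substring "time". This is a minimal sketch on a hypothetical grid and synthetic data.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Hypothetical setup: synthetic data and a 12-square grid
X, y = make_classification(n_samples=200, random_state=0)
param_grid = {"max_depth": [2, 4, 6], "min_samples_leaf": [1, 2],
              "max_features": ["sqrt", "log2"]}
grid_rf_class = GridSearchCV(
    RandomForestClassifier(n_estimators=10, random_state=42),
    param_grid, scoring="roc_auc", cv=5, return_train_score=True)
grid_rf_class.fit(X, y)
cv_results_df = pd.DataFrame(grid_rf_class.cv_results_)

# Keep every column whose name contains "time": mean/std of fit and score times
time_cols = cv_results_df.filter(like="time")
print(time_cols.columns.tolist())
print(time_cols.round(4))
```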

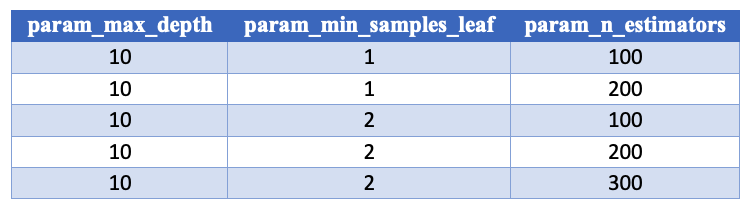

The .cv_results_ 'param_' columns

The param_ columns store the hyperparameter values tested in that row, one column per hyperparameter.
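The same filtering trick isolates the param_ columns. The "params" column does not contain "param_", so the filter skips it. As before, the data and grid here are hypothetical.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Hypothetical setup: synthetic data and a 12-square grid
X, y = make_classification(n_samples=200, random_state=0)
param_grid = {"max_depth": [2, 4, 6], "min_samples_leaf": [1, 2],
              "max_features": ["sqrt", "log2"]}
grid_rf_class = GridSearchCV(
    RandomForestClassifier(n_estimators=10, random_state=42),
    param_grid, scoring="roc_auc", cv=5, return_train_score=True)
grid_rf_class.fit(X, y)
cv_results_df = pd.DataFrame(grid_rf_class.cv_results_)

# One param_<name> column per hyperparameter in the grid
param_cols = cv_results_df.filter(like="param_")
print(param_cols)
```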

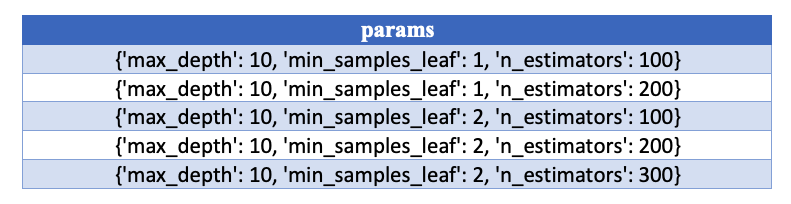

The .cv_results_ 'params' column

The params column contains a dictionary of all the parameters tested in that row:

pd.set_option("display.max_colwidth", None)  # None = never truncate; -1 is rejected by recent pandas

print(cv_results_df.loc[:, "params"])

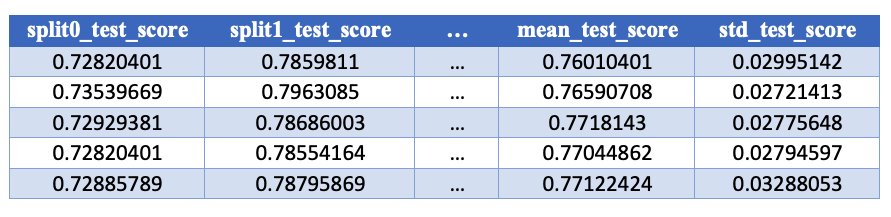
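Because every entry of params is a plain dict, the column can be expanded back into one column per hyperparameter. The sketch below does this with pd.DataFrame on a list of dicts. The data and grid are hypothetical.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Hypothetical setup: synthetic data and a 12-square grid
X, y = make_classification(n_samples=200, random_state=0)
param_grid = {"max_depth": [2, 4, 6], "min_samples_leaf": [1, 2],
              "max_features": ["sqrt", "log2"]}
grid_rf_class = GridSearchCV(
    RandomForestClassifier(n_estimators=10, random_state=42),
    param_grid, scoring="roc_auc", cv=5, return_train_score=True)
grid_rf_class.fit(X, y)
cv_results_df = pd.DataFrame(grid_rf_class.cv_results_)

# A single row's params entry is a dict such as {"max_depth": 2, ...}
first_params = cv_results_df.loc[0, "params"]
print(first_params)

# A list of dicts becomes a DataFrame with one column per dict key
params_df = pd.DataFrame(cv_results_df["params"].tolist())
print(params_df.head())
```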

The .cv_results_ 'test_score' columns

The test_score columns contain the score on the held-out fold of each cross-validation split, as well as some summary statistics:

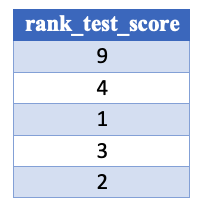
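The sketch below checks how the summary statistics relate to the per-split scores. mean_test_score is the plain mean of the split*_test_score columns. std_test_score is their population standard deviation (ddof=0). The data and grid are hypothetical.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Hypothetical setup: synthetic data and a 12-square grid
X, y = make_classification(n_samples=200, random_state=0)
param_grid = {"max_depth": [2, 4, 6], "min_samples_leaf": [1, 2],
              "max_features": ["sqrt", "log2"]}
grid_rf_class = GridSearchCV(
    RandomForestClassifier(n_estimators=10, random_state=42),
    param_grid, scoring="roc_auc", cv=5, return_train_score=True)
grid_rf_class.fit(X, y)
cv_results_df = pd.DataFrame(grid_rf_class.cv_results_)

# split0_test_score ... split4_test_score: one column per held-out fold
split_cols = cv_results_df.filter(regex=r"^split\d+_test_score$")
print(split_cols.columns.tolist())

# Recompute the summary statistics from the per-fold scores
recomputed_mean = split_cols.mean(axis=1)
recomputed_std = split_cols.std(axis=1, ddof=0)
print(np.allclose(recomputed_mean, cv_results_df["mean_test_score"]))
print(np.allclose(recomputed_std, cv_results_df["std_test_score"]))
```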

The .cv_results_ 'rank_test_score' column

The rank_test_score column ranks the rows by mean_test_score, from best (1) to worst:

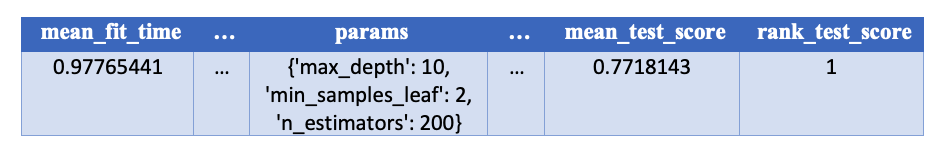
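Sorting on rank_test_score puts the grid squares in order from best to worst. Here is a minimal sketch on a hypothetical grid and synthetic data.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Hypothetical setup: synthetic data and a 12-square grid
X, y = make_classification(n_samples=200, random_state=0)
param_grid = {"max_depth": [2, 4, 6], "min_samples_leaf": [1, 2],
              "max_features": ["sqrt", "log2"]}
grid_rf_class = GridSearchCV(
    RandomForestClassifier(n_estimators=10, random_state=42),
    param_grid, scoring="roc_auc", cv=5, return_train_score=True)
grid_rf_class.fit(X, y)
cv_results_df = pd.DataFrame(grid_rf_class.cv_results_)

# Rank 1 = highest mean_test_score; sorting by rank lists the best squares first
ranked = cv_results_df.sort_values("rank_test_score")
print(ranked[["rank_test_score", "mean_test_score", "params"]].head())
```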

Extracting the best row

We can easily select the best grid square from cv_results_ using the rank_test_score column:

best_row = cv_results_df[cv_results_df["rank_test_score"] == 1]

print(best_row)
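Tied squares share the same rank, so more than one row can have rank_test_score equal to 1. The sketch below shows the filter above alongside idxmax on mean_test_score, which always returns exactly one (the first) best row. The data and grid are hypothetical.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Hypothetical setup: synthetic data and a 12-square grid
X, y = make_classification(n_samples=200, random_state=0)
param_grid = {"max_depth": [2, 4, 6], "min_samples_leaf": [1, 2],
              "max_features": ["sqrt", "log2"]}
grid_rf_class = GridSearchCV(
    RandomForestClassifier(n_estimators=10, random_state=42),
    param_grid, scoring="roc_auc", cv=5, return_train_score=True)
grid_rf_class.fit(X, y)
cv_results_df = pd.DataFrame(grid_rf_class.cv_results_)

# Boolean filter: every row ranked 1 (may be several if scores tie)
best_rows = cv_results_df[cv_results_df["rank_test_score"] == 1]
print(len(best_rows))

# idxmax returns the label of the first row with the highest mean score
best_single = cv_results_df.loc[cv_results_df["mean_test_score"].idxmax()]
print(best_single["params"], best_single["mean_test_score"])
```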

The .cv_results_ 'train_score' columns

The test_score columns are then repeated for the training scores.

Some important notes to keep in mind:

- return_train_score must be True to include the training score columns (it defaults to False).
- There is no ranking column for the training scores, as we only care about test set performance.
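With return_train_score=True, the train columns appear without a rank column. A common use is to compare mean train and test scores: a large gap hints at overfitting. This is a minimal sketch on hypothetical data and a hypothetical grid.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Hypothetical setup: synthetic data and a 12-square grid
X, y = make_classification(n_samples=200, random_state=0)
param_grid = {"max_depth": [2, 4, 6], "min_samples_leaf": [1, 2],
              "max_features": ["sqrt", "log2"]}
grid_rf_class = GridSearchCV(
    RandomForestClassifier(n_estimators=10, random_state=42),
    param_grid, scoring="roc_auc", cv=5,
    return_train_score=True)  # needed for the *_train_score columns
grid_rf_class.fit(X, y)
cv_results_df = pd.DataFrame(grid_rf_class.cv_results_)

# split0..4_train_score plus mean_train_score and std_train_score
train_cols = cv_results_df.filter(like="train_score")
print(train_cols.columns.tolist())
print("rank_train_score" in cv_results_df.columns)  # False

# Train-minus-test gap per grid square
gap = cv_results_df["mean_train_score"] - cv_results_df["mean_test_score"]
print(gap.round(3))
```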

The best grid square

Information on the best grid square is neatly summarized in the following three properties:

- best_params_: the dictionary of parameters that gave the best score.
- best_score_: the actual best score (the mean_test_score of the best row).
- best_index_: the index of the row in our cv_results_ that was the best (the row with rank_test_score of 1).

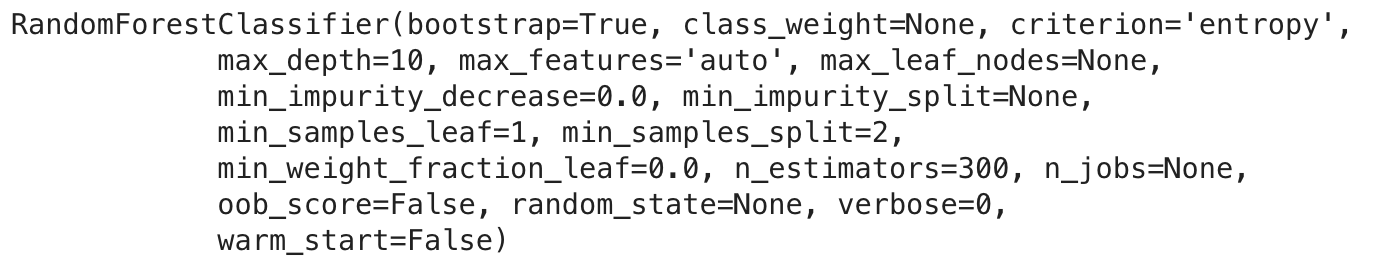
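The three properties agree with cv_results_. best_index_ picks the row, and best_params_ and best_score_ are read from that row. The sketch below checks this on a hypothetical grid with synthetic data and a single scoring metric.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Hypothetical setup: synthetic data and a 12-square grid
X, y = make_classification(n_samples=200, random_state=0)
param_grid = {"max_depth": [2, 4, 6], "min_samples_leaf": [1, 2],
              "max_features": ["sqrt", "log2"]}
grid_rf_class = GridSearchCV(
    RandomForestClassifier(n_estimators=10, random_state=42),
    param_grid, scoring="roc_auc", cv=5, return_train_score=True)
grid_rf_class.fit(X, y)

i = grid_rf_class.best_index_
results = grid_rf_class.cv_results_

# best_params_ and best_score_ are the params / mean_test_score of row i
print(grid_rf_class.best_params_ == results["params"][i])     # True
print(grid_rf_class.best_score_ == results["mean_test_score"][i])  # True
print(results["rank_test_score"][i])  # 1
```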

The best_estimator_ property

The best_estimator_ property is an estimator built with the best parameters from the grid search and refit on the whole dataset (available when refit=True, the default).

For us this is a Random Forest estimator:

type(grid_rf_class.best_estimator_)

sklearn.ensemble.forest.RandomForestClassifier

(In scikit-learn 0.22 and later, the module path appears as sklearn.ensemble._forest.)

We could also directly use this object as an estimator if we want!


print(grid_rf_class.best_estimator_)
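Because best_estimator_ is an ordinary fitted estimator, it can predict directly. GridSearchCV's own predict delegates to it. The sketch below uses hypothetical synthetic data and a hypothetical grid, and predicts on training rows purely for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Hypothetical setup: synthetic data and a 12-square grid
X, y = make_classification(n_samples=200, random_state=0)
param_grid = {"max_depth": [2, 4, 6], "min_samples_leaf": [1, 2],
              "max_features": ["sqrt", "log2"]}
grid_rf_class = GridSearchCV(
    RandomForestClassifier(n_estimators=10, random_state=42),
    param_grid, scoring="roc_auc", cv=5, return_train_score=True)
grid_rf_class.fit(X, y)

best_rf = grid_rf_class.best_estimator_

# Use it like any fitted RandomForestClassifier (training rows, illustration only)
probs = best_rf.predict_proba(X[:5])
preds = best_rf.predict(X[:5])
print(probs)

# The grid search object forwards predict() to best_estimator_
print((grid_rf_class.predict(X[:5]) == preds).all())  # True

# The refit estimator carries the winning hyperparameters
print(best_rf.get_params()["max_depth"], grid_rf_class.best_params_["max_depth"])
```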

Extra information

Some extra information is available in the following properties:

scorer_

The scorer function used on the held-out data (we set it to AUC).

n_splits_

The number of cross-validation splits (we set it to 5).

refit_time_

The number of seconds used for refitting the best model on the whole dataset.
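The sketch below reads the three extra properties on a hypothetical grid with synthetic data. scorer_ is callable as scorer(estimator, X, y), so it can be reused to score the refit model. Scoring on the training data here is for illustration only.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Hypothetical setup: synthetic data and a 12-square grid
X, y = make_classification(n_samples=200, random_state=0)
param_grid = {"max_depth": [2, 4, 6], "min_samples_leaf": [1, 2],
              "max_features": ["sqrt", "log2"]}
grid_rf_class = GridSearchCV(
    RandomForestClassifier(n_estimators=10, random_state=42),
    param_grid, scoring="roc_auc", cv=5, return_train_score=True)
grid_rf_class.fit(X, y)

print(grid_rf_class.scorer_)      # scorer built from scoring="roc_auc"
print(grid_rf_class.n_splits_)    # 5
print(grid_rf_class.refit_time_)  # seconds spent refitting on all of X, y

# Reuse the scorer on the refit model (training data, illustration only)
auc = grid_rf_class.scorer_(grid_rf_class.best_estimator_, X, y)
print(auc)
```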
