Hyperparameter Values

Hyperparameter Tuning in Python

Alex Scriven

Data Scientist


Some hyperparameters are more important than others and should be tuned first.

But which values should you try for each hyperparameter?

- Specific to each algorithm & hyperparameter

- Some best practice guidelines & tips do exist

Let's look at some top tips!

Conflicting Hyperparameter Choices

Be aware of conflicting hyperparameter choices.

LogisticRegression() has solver & penalty options that conflict with each other.

The 'newton-cg', 'sag' and 'lbfgs' solvers support only l2 penalties.

Some conflicts aren't raised as errors; one setting is just 'ignored' (from the ElasticNet documentation for the normalize hyperparameter):

This parameter is ignored when fit_intercept is set to False

Make sure to consult the scikit-learn documentation!
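As a hedged illustration of the solver & penalty conflict, the sketch below requests an l1 penalty with the lbfgs solver. The synthetic data and the helper name fit_raises are assumptions for illustration only. In commonly used scikit-learn releases the conflict surfaces as a ValueError when fit is called, but the exact behaviour and message can differ between versions.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic data, assumed purely for illustration
X, y = make_classification(n_samples=100, n_features=5, random_state=42)

def fit_raises(solver, penalty):
    """Hypothetical helper: True if this solver/penalty pair raises ValueError on fit."""
    try:
        LogisticRegression(solver=solver, penalty=penalty).fit(X, y)
    except ValueError:
        return True
    return False

# lbfgs is documented as supporting only l2 penalties
print(fit_raises('lbfgs', 'l1'))
# liblinear is a solver that does accept an l1 penalty
print(fit_raises('liblinear', 'l1'))
```

Checking a pair this way before launching a long search can save a run that would otherwise fail partway through.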

Silly Hyperparameter Values

Be aware of setting 'silly' values for different algorithms:

- Random forest with low number of trees

- Would you consider it a 'forest' with only 2 trees?

- 1 Neighbor in KNN algorithm

- Averaging the 'votes' of one person doesn't sound very robust!

- Increasing a hyperparameter by a very small amount (one extra tree in a large forest is unlikely to change much)

Spending time documenting sensible values for hyperparameters is a valuable activity.
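One possible way to document sensible values is a small lookup table that candidate values are checked against. This is only a sketch: the name SENSIBLE_RANGES, the helper check_value, and the bounds themselves are illustrative assumptions, not recommendations from the course.

```python
# Hypothetical notes on sensible ranges; the bounds are illustrative assumptions
SENSIBLE_RANGES = {
    'n_estimators': (50, 1000),  # a 'forest' needs more than a couple of trees
    'n_neighbors': (3, 100),     # a single neighbor is rarely robust
}

def check_value(name, value):
    """Return True if value lies inside the documented range for name."""
    low, high = SENSIBLE_RANGES[name]
    return low <= value <= high

# Candidate settings to sanity-check before any model is trained
candidates = {'n_estimators': 2, 'n_neighbors': 5}
for name, value in candidates.items():
    status = 'ok' if check_value(name, value) else 'outside documented range'
    print(f"{name}={value}: {status}")
```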

Automating Hyperparameter Choice

In the previous exercise, we built models as:

knn_5 = KNeighborsClassifier(n_neighbors=5)

knn_10 = KNeighborsClassifier(n_neighbors=10)

knn_20 = KNeighborsClassifier(n_neighbors=20)

This is quite inefficient. Can we do better?

Automating Hyperparameter Tuning

Try a for loop to iterate through options:

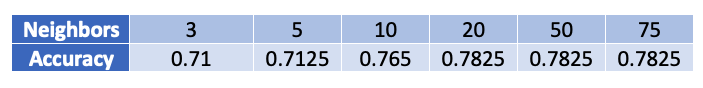

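from sklearn.neighbors import KNeighborsClassifier
from sklearn.metrics import accuracy_score

# Candidate values of n_neighbors to try
neighbors_list = [3, 5, 10, 20, 50, 75]
accuracy_list = []
for test_number in neighbors_list:
    model = KNeighborsClassifier(n_neighbors=test_number)
    predictions = model.fit(X_train, y_train).predict(X_test)
    accuracy = accuracy_score(y_test, predictions)
    accuracy_list.append(accuracy)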


We can store the results in a DataFrame to view:

results_df = pd.DataFrame({'neighbors':neighbors_list, 'accuracy':accuracy_list})

print(results_df)

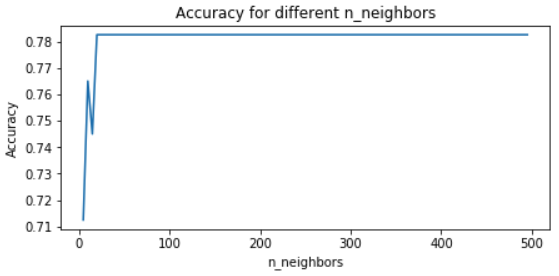
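For readers without the course data, the loop and results table can be reproduced end to end with a synthetic dataset. The data from make_classification, the split settings, and the best_row lookup are assumptions added for illustration; the accuracies will not match the course output.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Synthetic stand-in for the course data (an assumption for illustration)
X, y = make_classification(n_samples=500, n_features=10, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42)

neighbors_list = [3, 5, 10, 20, 50, 75]
accuracy_list = []
for test_number in neighbors_list:
    model = KNeighborsClassifier(n_neighbors=test_number)
    predictions = model.fit(X_train, y_train).predict(X_test)
    accuracy_list.append(accuracy_score(y_test, predictions))

results_df = pd.DataFrame({'neighbors': neighbors_list,
                           'accuracy': accuracy_list})

# Row with the highest test accuracy (ties resolve to the first occurrence)
best_row = results_df.loc[results_df['accuracy'].idxmax()]
print(results_df)
print(int(best_row['neighbors']))
```

Picking the winner with idxmax is one simple option; sorting the DataFrame by accuracy would show the runners-up as well.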

Learning Curves

Let's create a learning curve graph

We'll test many more values this time

neighbors_list = list(range(5, 500, 5))
accuracy_list = []
for test_number in neighbors_list:
    model = KNeighborsClassifier(n_neighbors=test_number)
    predictions = model.fit(X_train, y_train).predict(X_test)
    accuracy = accuracy_score(y_test, predictions)
    accuracy_list.append(accuracy)
results_df = pd.DataFrame({'neighbors':neighbors_list, 'accuracy':accuracy_list})


We can plot the larger DataFrame:

plt.plot(results_df['neighbors'], results_df['accuracy'])
# Add the labels and title
plt.gca().set(xlabel='n_neighbors', ylabel='Accuracy', title='Accuracy for different n_neighbors')
plt.show()


Our graph:

A handy trick for generating values

Python's range function does not work for decimal steps.

A handy trick uses NumPy's np.linspace(start, end, num)

- Create a number of values (num) evenly spread within an interval (start, end) that you specify.

print(np.linspace(1,2,5))

[1.   1.25 1.5  1.75 2.  ]
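The contrast between range and np.linspace can be sketched as follows. The variable names range_failed and learn_rates, and the interval 0.01 to 0.1, are assumptions chosen for illustration.

```python
import numpy as np

# range() only accepts integer arguments, so a decimal step raises TypeError
try:
    list(range(1, 2, 0.25))
    range_failed = False
except TypeError:
    range_failed = True
print(range_failed)

# Ten evenly spaced candidate values between 0.01 and 0.1, both ends included
learn_rates = np.linspace(0.01, 0.1, 10)
print(learn_rates)
```

Note that np.linspace includes the end value by default, unlike range, which stops before it.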

Let's practice!
