Gradient boosting

Ensemble Methods in Python

Román de las Heras

Data Scientist, Appodeal

Intro to gradient boosting machines

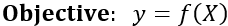

- Initial model (weak estimator): $y\sim f_1(X)$

- New model fits to residuals: $y-f_1(X)\sim f_2(X)$

- New additive model: $y\sim f_1(X)+f_2(X)$

- Repeat $n$ times or until error is small enough

- Final additive model: $$y\sim f_1(X)+f_2(X)+ \dots +f_n(X)=\sum_{i=1}^{n} f_i(X)$$
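The steps above can be sketched by hand with shallow regression trees as the weak estimators. This is only an illustration under assumptions: the synthetic data, the tree depth, and the names `n_rounds`, `models`, and `predict` are hypothetical, and no learning rate is applied, to match the plain additive form in the list.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

# Synthetic 1-D regression data (illustrative only).
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(200, 1))
y = np.sin(X[:, 0]) + rng.normal(scale=0.1, size=200)

n_rounds = 20                    # hypothetical number of boosting rounds
models = []                      # the weak estimators f_1, ..., f_n
prediction = np.zeros_like(y)    # current additive model, starts at 0
errors = []                      # training MSE after each round

for _ in range(n_rounds):
    residuals = y - prediction             # what the current model gets wrong
    tree = DecisionTreeRegressor(max_depth=2, random_state=0)
    tree.fit(X, residuals)                 # new model fits the residuals
    prediction += tree.predict(X)          # add it to the additive model
    models.append(tree)
    errors.append(np.mean((y - prediction) ** 2))


def predict(X_new):
    """Final additive model: the sum of all fitted weak estimators."""
    return sum(m.predict(X_new) for m in models)


print(f"MSE after round 1: {errors[0]:.4f}, after round {n_rounds}: {errors[-1]:.4f}")
```

Each round only has to explain what the previous rounds left over, so the training error should shrink as estimators are added.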

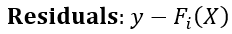

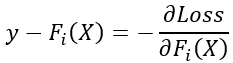

Equivalence to gradient descent

Gradient Descent:

Residuals = Negative Gradient
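For the squared-error loss, this identity can be checked directly. The symbols $L$, $F_m$, $\theta$, and $\eta$ below are notation assumed for this sketch, not taken from the slides; $F_m(X)=\sum_{i=1}^{m} f_i(X)$ is the additive model after $m$ rounds.

$$L\big(y, F_m(X)\big)=\tfrac{1}{2}\big(y-F_m(X)\big)^2 \quad\Longrightarrow\quad -\frac{\partial L}{\partial F_m(X)} = y-F_m(X)$$

$$\text{Gradient descent: } \theta_{m+1}=\theta_m-\eta\,\nabla_\theta L(\theta_m) \qquad \text{Boosting: } F_{m+1}(X)=F_m(X)+f_{m+1}(X),\quad f_{m+1}(X)\sim y-F_m(X)$$

Under this loss, fitting the next estimator to the residuals amounts to taking a step along the negative gradient, with the step made in the space of predictions rather than parameters.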

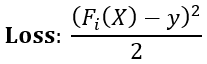

Gradient boosting classifier

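    from sklearn.ensemble import GradientBoostingClassifier

    clf_gbm = GradientBoostingClassifier(
        n_estimators=100,      # number of boosting stages
        learning_rate=0.1,     # shrinks each estimator's contribution
        max_depth=3,           # depth of each individual tree
        min_samples_split=2,   # scikit-learn default
        min_samples_leaf=1,    # scikit-learn default
        max_features=None,     # scikit-learn default (consider all features)
    )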

- `n_estimators`: default 100

- `learning_rate`: default 0.1

- `max_depth`: default 3

- `min_samples_split`

- `min_samples_leaf`

- `max_features`
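As a usage sketch, the classifier can be trained and scored as below. The synthetic dataset from `make_classification`, the 75/25 split, and the `random_state` values are assumptions made for illustration and do not come from the slides.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import train_test_split

# Synthetic binary classification data (illustrative only).
X, y = make_classification(n_samples=500, n_features=10, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42
)

clf_gbm = GradientBoostingClassifier(
    n_estimators=100,
    learning_rate=0.1,
    max_depth=3,
    random_state=42,   # fixed seed so the run is repeatable
)
clf_gbm.fit(X_train, y_train)

# Mean accuracy on the held-out split.
accuracy = clf_gbm.score(X_test, y_test)
print(f"Test accuracy: {accuracy:.3f}")
```

A smaller `learning_rate` usually calls for a larger `n_estimators`, so the two are typically tuned together.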

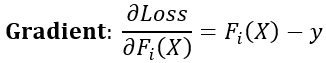

Gradient boosting regressor

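    from sklearn.ensemble import GradientBoostingRegressor

    reg_gbm = GradientBoostingRegressor(
        n_estimators=100,      # number of boosting stages
        learning_rate=0.1,     # shrinks each estimator's contribution
        max_depth=3,           # depth of each individual tree
        min_samples_split=2,   # scikit-learn default
        min_samples_leaf=1,    # scikit-learn default
        max_features=None,     # scikit-learn default (consider all features)
    )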
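A minimal usage sketch for the regressor follows. It assumes synthetic data from `make_regression` and uses `staged_predict` to look at the test error after each boosting stage; the dataset, split, and seeds are illustrative choices, not part of the slides.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

# Synthetic regression data (illustrative only).
X, y = make_regression(n_samples=500, n_features=10, noise=10.0, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42
)

reg_gbm = GradientBoostingRegressor(
    n_estimators=100,
    learning_rate=0.1,
    max_depth=3,
    random_state=42,   # fixed seed so the run is repeatable
)
reg_gbm.fit(X_train, y_train)

# staged_predict yields the ensemble's predictions after each boosting stage,
# so the list below tracks how the test error changes as trees are added.
stage_mse = [
    mean_squared_error(y_test, y_pred)
    for y_pred in reg_gbm.staged_predict(X_test)
]
print(f"MSE after 1 stage: {stage_mse[0]:.1f}, after 100 stages: {stage_mse[-1]:.1f}")
```

Plotting `stage_mse` against the stage index is one way to judge whether `n_estimators` is larger than it needs to be.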

Time to boost!
