Batch size and batch normalization

Introduction to Deep Learning with Keras

Miguel Esteban

Data Scientist & Founder

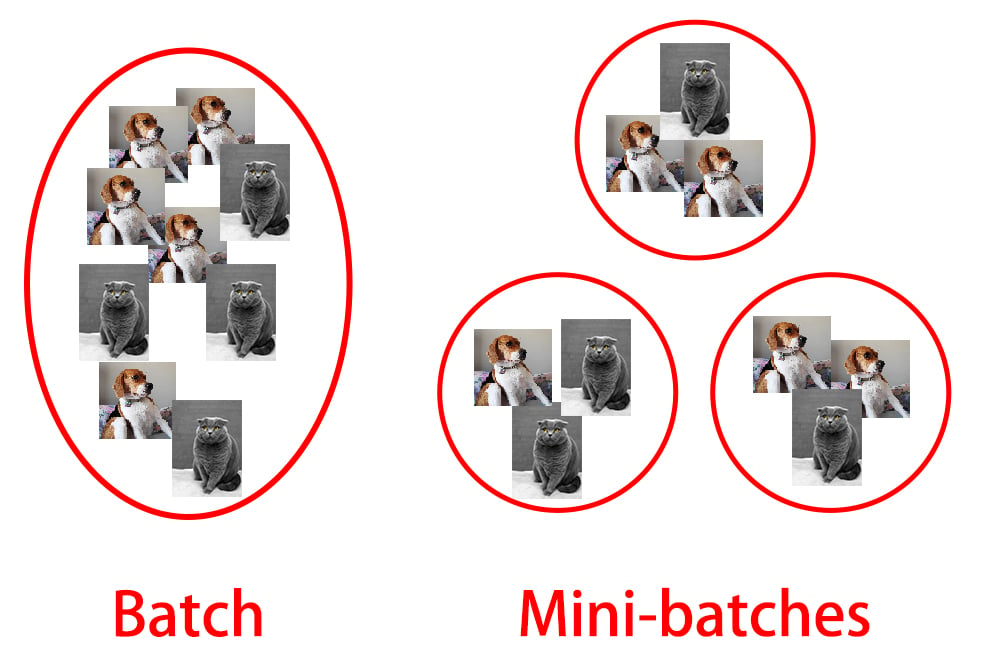

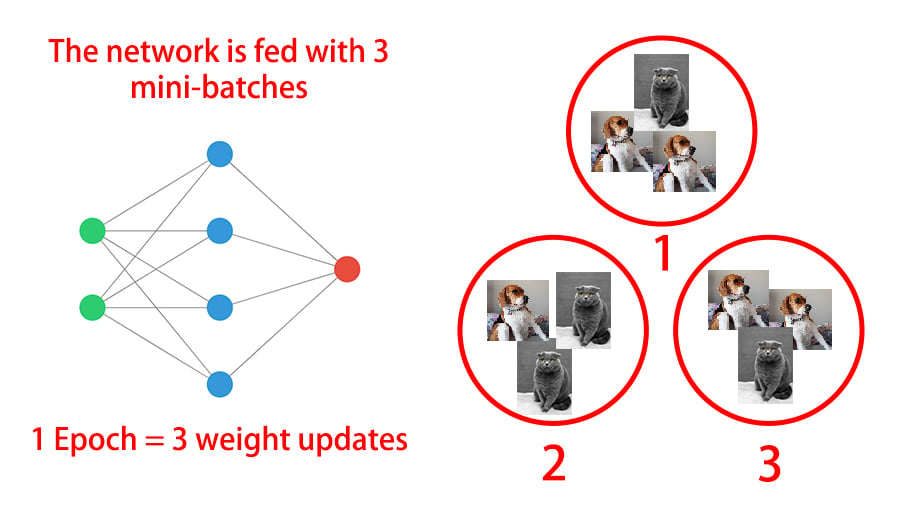

Mini-batches

Advantages

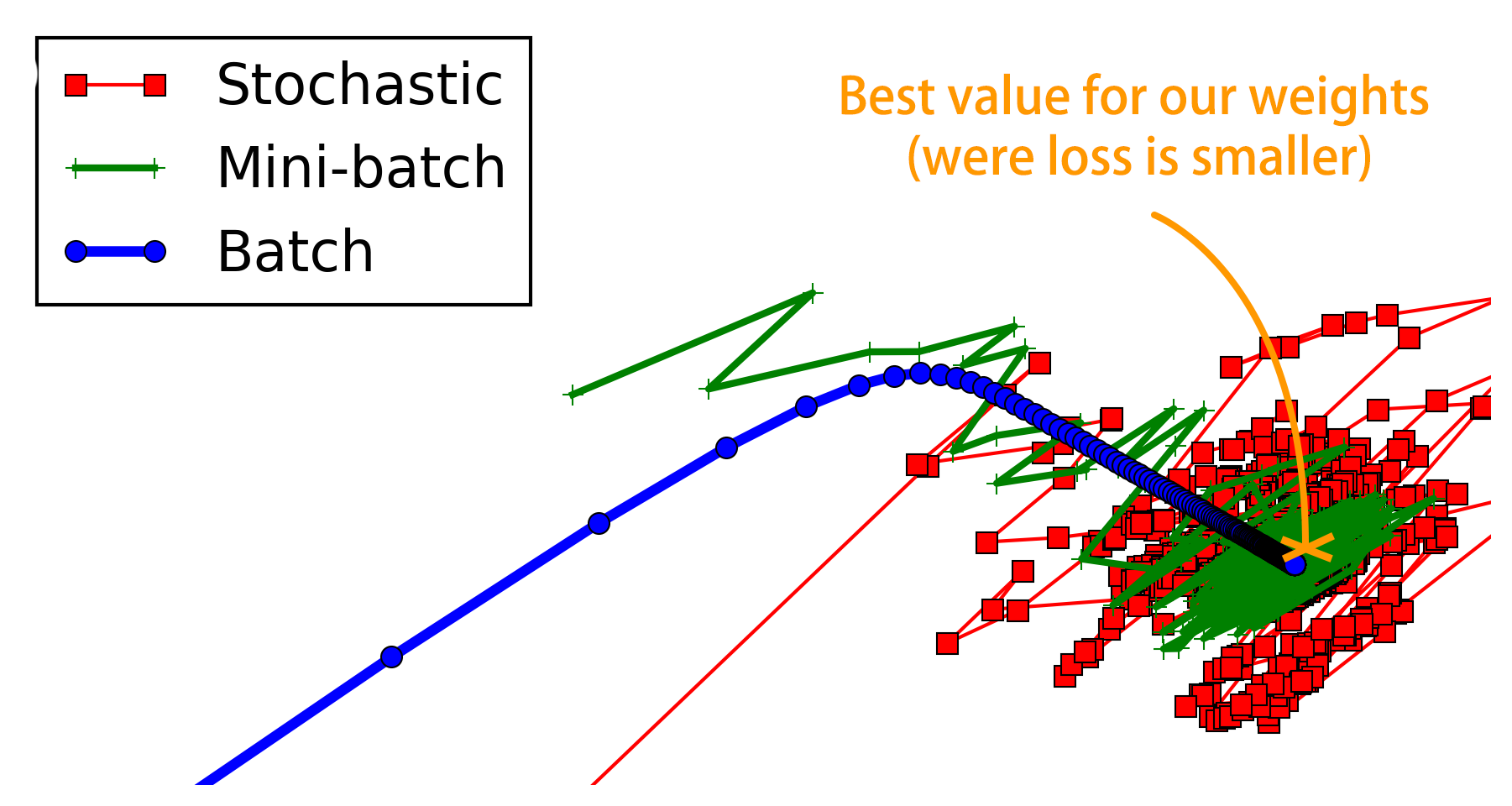

- Networks train faster (more weight updates in same amount of time)

- Less memory required, since only one batch is processed at a time, so training can scale to huge datasets

- Noise in the gradient estimates can help networks escape local minima and reach a lower error

Disadvantages

- More iterations are needed to pass over the full dataset

- Batch size becomes one more hyperparameter to tune; a good value has to be found

1 Stack Exchange
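The trade-off between "more weight updates" and "more iterations" listed above comes down to simple arithmetic. A minimal sketch, assuming one weight update per batch and a hypothetical dataset of 50,000 samples (the function name `updates_per_epoch` is made up for illustration):

```python
import math


def updates_per_epoch(n_samples: int, batch_size: int) -> int:
    """Weight updates in one pass over the data; the last batch may be smaller."""
    return math.ceil(n_samples / batch_size)


n_samples = 50_000  # hypothetical dataset size, not from the slides

# Using the full dataset as one batch gives a single update per epoch;
# smaller batches give more updates (and more iterations) per epoch.
for batch_size in (n_samples, 512, 128, 32):
    print(f"batch_size={batch_size:>6} -> {updates_per_epoch(n_samples, batch_size)} updates per epoch")
```

With these numbers, a batch size of 128 yields 391 updates per epoch, compared with a single update when the whole dataset is used as one batch.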

Batch size in Keras

# Fitting an already built and compiled model.
# batch_size sets how many samples are used per weight update (Keras defaults to 32).

model.fit(X_train, y_train, epochs=100, batch_size=128)
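To make the role of `batch_size` concrete, the sketch below shows roughly how one epoch can be split into shuffled mini-batches. It is an illustration in NumPy, not Keras's internal implementation; the helper `iterate_minibatches` and the demo arrays are hypothetical.

```python
import numpy as np


def iterate_minibatches(X, y, batch_size, rng):
    """Yield shuffled (X_batch, y_batch) pairs that cover the data exactly once."""
    order = rng.permutation(len(X))
    for start in range(0, len(X), batch_size):
        idx = order[start:start + batch_size]
        yield X[idx], y[idx]


rng = np.random.default_rng(0)
X_demo = rng.normal(size=(300, 2))      # hypothetical data: 300 samples, 2 features
y_demo = rng.integers(0, 2, size=300)   # hypothetical binary labels

# One epoch with batch_size=128: two full batches and one smaller final batch.
batch_sizes = [len(X_batch) for X_batch, _ in iterate_minibatches(X_demo, y_demo, 128, rng)]
print(batch_sizes)  # [128, 128, 44]
```

Each yielded batch would correspond to one gradient computation and one weight update during training.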

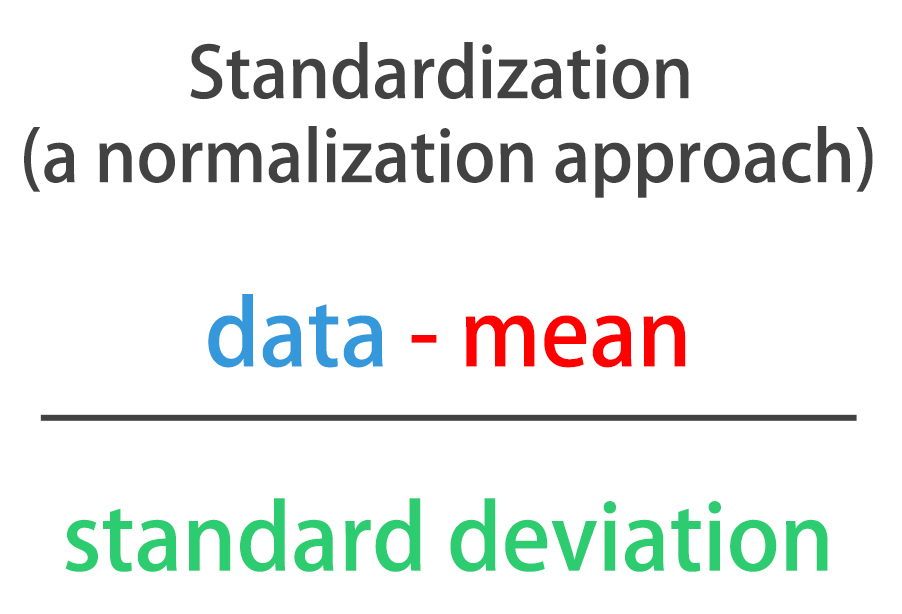

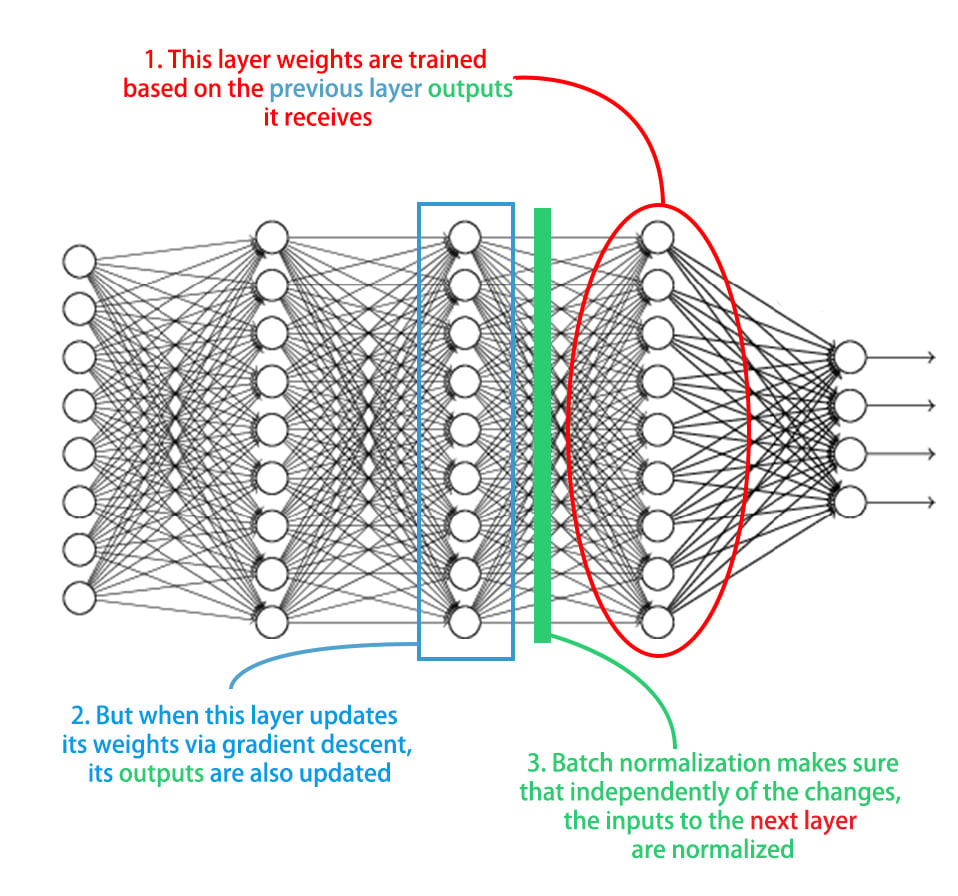

Batch normalization advantages

- Improves gradient flow

- Allows higher learning rates

- Reduces dependence on weight initializations

- Acts as an unintended form of regularization

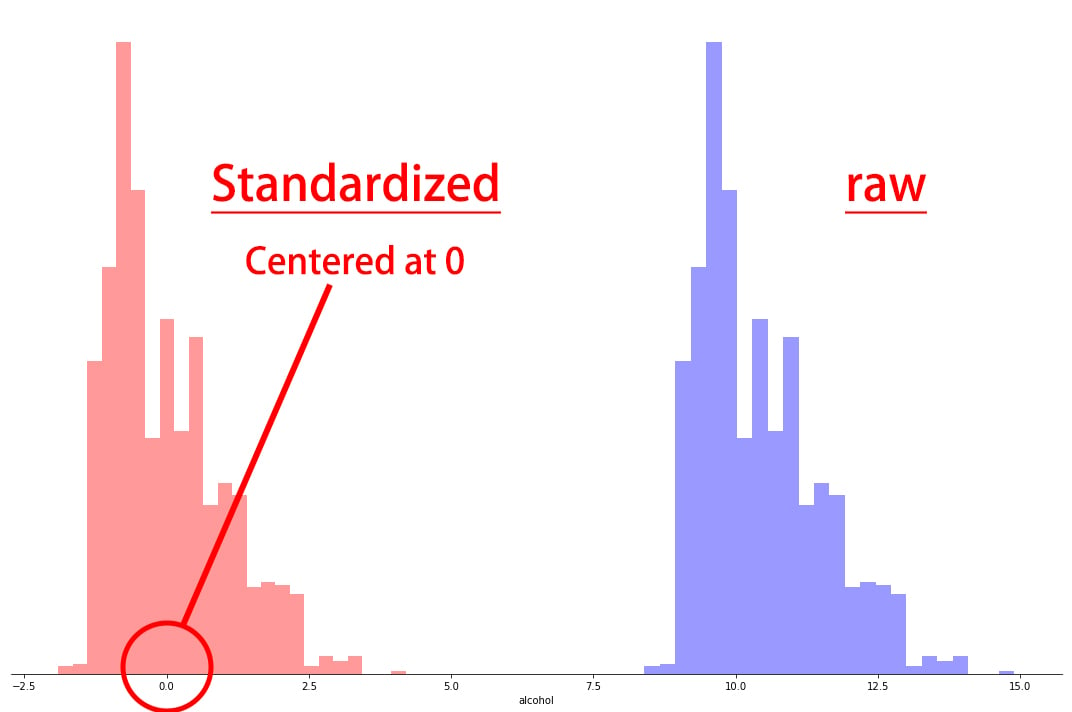

- Limits internal covariate shift
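The operation behind these advantages can be sketched in a few lines of NumPy. This is a simplified training-time forward pass, assuming per-feature statistics computed over the batch axis and learnable scale (`gamma`) and shift (`beta`) vectors; the function name, the example data, and the `eps` value of 1e-3 (chosen to match the Keras default) are illustrative assumptions. At inference time, frameworks typically use running averages of the mean and variance instead of batch statistics.

```python
import numpy as np


def batch_norm_forward(x, gamma, beta, eps=1e-3):
    """Normalize each feature over the batch axis, then scale and shift."""
    mean = x.mean(axis=0)                     # per-feature batch mean
    var = x.var(axis=0)                       # per-feature batch variance
    x_hat = (x - mean) / np.sqrt(var + eps)   # roughly zero mean, unit variance
    return gamma * x_hat + beta


rng = np.random.default_rng(0)
# Hypothetical layer outputs: a batch of 128 samples with 3 features,
# deliberately far from zero mean and unit variance.
activations = rng.normal(loc=5.0, scale=3.0, size=(128, 3))

out = batch_norm_forward(activations, gamma=np.ones(3), beta=np.zeros(3))
print(out.mean(axis=0))  # close to 0 for every feature
print(out.std(axis=0))   # close to 1 for every feature
```

Because the next layer always sees inputs on a similar scale, its weights do not have to keep adapting to shifting input distributions.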

Batch normalization in Keras

# Import the model class and the layers, including BatchNormalization
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, BatchNormalization

# Instantiate a Sequential model
model = Sequential()

# Add an input layer
model.add(Dense(3, input_shape=(2,), activation='relu'))

# Add batch normalization for the outputs of the layer above
model.add(BatchNormalization())

# Add an output layer
model.add(Dense(1, activation='sigmoid'))
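As a rough check on what the extra layer costs, the parameter count of the model above can be worked out by hand. The sketch assumes default `BatchNormalization` settings, where each normalized feature carries a learnable scale and shift plus a non-trainable moving mean and moving variance; the helper names are hypothetical.

```python
def dense_params(n_inputs: int, n_units: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return n_inputs * n_units + n_units


def batch_norm_params(n_features: int) -> tuple[int, int]:
    """(trainable, non_trainable) parameters, assuming default settings."""
    trainable = 2 * n_features       # gamma (scale) and beta (shift)
    non_trainable = 2 * n_features   # moving mean and moving variance
    return trainable, non_trainable


hidden = dense_params(2, 3)                       # Dense(3, input_shape=(2,))
bn_trainable, bn_frozen = batch_norm_params(3)    # BatchNormalization()
output = dense_params(3, 1)                       # Dense(1)

trainable_total = hidden + bn_trainable + output
total = trainable_total + bn_frozen
print(f"trainable={trainable_total}, non-trainable={bn_frozen}, total={total}")
```

Under these assumptions the model has 19 trainable and 6 non-trainable parameters, 25 in total, so batch normalization adds only a handful of parameters to this small network.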

Let's practice!
