Vanishing and exploding gradients

Recurrent Neural Networks (RNNs) for Language Modeling with Keras

David Cecchini

Data Scientist

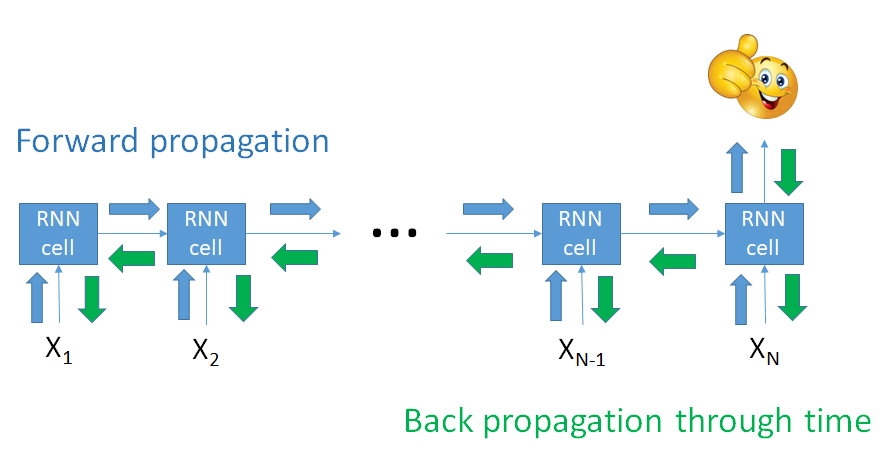

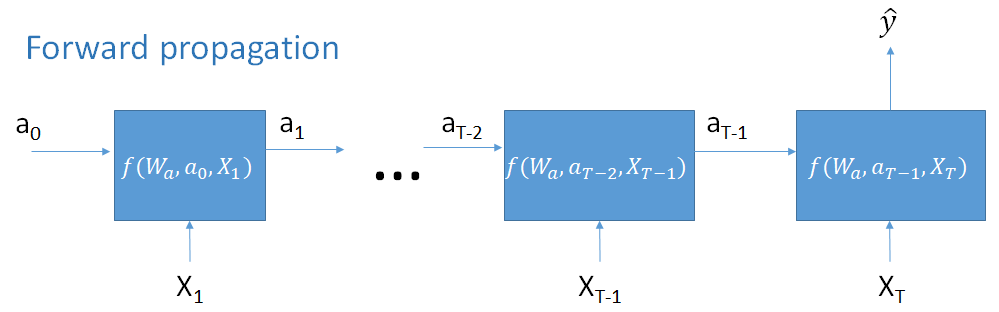

Training RNN models

Example:

$$a_2 = f(W_a, a_1, x_2) = f(W_a, f(W_a, a_0, x_1), x_2)$$

Remember that:

$$ a_T = f(W_a, a_{T-1}, x_T) $$

$a_T$ depends on $a_{T-1}$, which in turn depends on $a_{T-2}$ and $W_a$, and so on back to $a_0$!
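The nesting can be checked numerically. The sketch below is only an illustration: it assumes $f$ is a tanh of a linear map (input weights are omitted for brevity), and the sizes and random values are arbitrary choices, not taken from the course.

```python
import numpy as np

def f(W_a, a_prev, x_t):
    # Assumed form of the recurrence: tanh of the previous state mapped
    # through W_a, plus the current input (no separate input weights).
    return np.tanh(W_a @ a_prev + x_t)

rng = np.random.default_rng(0)
W_a = rng.normal(scale=0.5, size=(3, 3))   # shared recurrent weights
a_0 = np.zeros(3)                          # initial state
x_1, x_2 = rng.normal(size=3), rng.normal(size=3)

# Step by step: compute a_1 first, then a_2.
a_1 = f(W_a, a_0, x_1)
a_2 = f(W_a, a_1, x_2)

# Fully unrolled: W_a appears once per time step.
a_2_unrolled = f(W_a, f(W_a, a_0, x_1), x_2)

print(np.allclose(a_2, a_2_unrolled))
```

Because the same $W_a$ is reused at every step, its gradient collects one contribution per time step.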

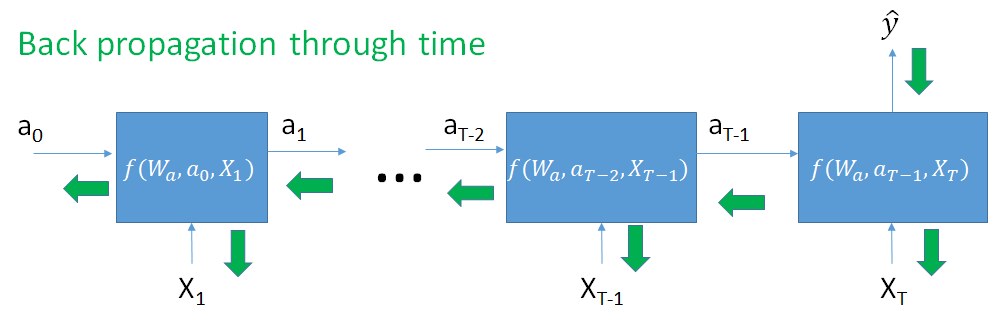

BPTT continuation

Computing the derivatives leads (in simplified form) to:

$$ \frac{\partial a_t}{\partial W_a} = (W_a)^{t-1}g(X) $$

As $t$ grows, $(W_a)^{t-1}$ can converge to 0 (vanishing gradients)

or diverge to $+\infty$ (exploding gradients)!
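A quick numerical sketch of this behavior, assuming the simplest possible case where $W_a$ is a scaled identity matrix (so its powers are easy to reason about). The helper name `power_norm` and the scales are illustrative only.

```python
import numpy as np

def power_norm(scale, steps):
    # W_a = scale * I, so (W_a)^steps = scale**steps * I.
    W_a = scale * np.eye(2)
    W_pow = np.linalg.matrix_power(W_a, steps)
    # Spectral norm: the largest factor by which W_pow can stretch a vector.
    return np.linalg.norm(W_pow, ord=2)

for scale in (0.5, 1.0, 1.5):
    norms = [power_norm(scale, k) for k in (1, 10, 50)]
    print(scale, norms)
```

With a scale below 1 the norm shrinks toward 0 as the number of steps grows, and with a scale above 1 it grows without bound. For a general matrix the same role is played by the magnitude of its largest eigenvalue.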

Solutions to the gradient problems

Some solutions are known:

Exploding gradients

- Gradient clipping / scaling
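To make the two variants concrete, here is a minimal NumPy sketch of clipping by norm (scaling) and clipping by value. The function names are hypothetical helpers written for this illustration. In Keras, the optimizers accept `clipnorm` and `clipvalue` arguments that serve the same purpose.

```python
import numpy as np

def clip_by_norm(grad, max_norm):
    # Rescale the whole gradient if its L2 norm exceeds max_norm.
    # The direction is preserved; only the length changes.
    norm = np.linalg.norm(grad)
    if norm > max_norm:
        return grad * (max_norm / norm)
    return grad

def clip_by_value(grad, limit):
    # Cap each component independently to [-limit, limit].
    # This can change the direction of the gradient.
    return np.clip(grad, -limit, limit)

g = np.array([30.0, -40.0])          # L2 norm is 50
print(clip_by_norm(g, 5.0))          # rescaled to norm 5
print(clip_by_value(g, 5.0))         # each entry capped at +/- 5
```

Scaling keeps the update pointing the same way, while value clipping is simpler but may distort the direction when only some components are large.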

Vanishing gradients

- Initialize the weight matrix W more carefully

- Use regularization

- Use ReLU instead of tanh / sigmoid / softmax

- Use LSTM or GRU cells!
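As a rough sketch of the last option, the snippet below builds a small Keras model with either an LSTM or a GRU layer. The helper `build_model`, the vocabulary size, and the layer sizes are assumptions chosen for illustration, not values from the course.

```python
import numpy as np
from tensorflow import keras

def build_model(cell="lstm", vocab_size=1000, units=32):
    # Hypothetical helper: `cell`, `vocab_size` and `units` are illustrative.
    if cell == "lstm":
        recurrent = keras.layers.LSTM(units)
    else:
        recurrent = keras.layers.GRU(units)
    return keras.Sequential([
        keras.layers.Embedding(input_dim=vocab_size, output_dim=16),
        recurrent,                                   # gated recurrent layer
        keras.layers.Dense(1, activation="sigmoid"), # e.g. a binary label
    ])

# Two dummy sequences of 10 token ids each, just to run a forward pass.
x = np.zeros((2, 10), dtype="int32")
out_lstm = build_model("lstm")(x)
out_gru = build_model("gru")(x)
print(out_lstm.shape, out_gru.shape)
```

Swapping the recurrent layer is the only change needed, since both layers take the same input and return a state of the same size.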

Let's practice!
