Introduction to RNN inside Keras

Recurrent Neural Networks (RNNs) for Language Modeling with Keras

David Cecchini

Data Scientist

What is Keras?

High-level API

Runs on top of TensorFlow 2

Easy to install and use

$ pip install tensorflow

Fast experimentation:

from tensorflow import keras

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import LSTM, Dense

keras.models

keras.models.Sequential

keras.models.Model

keras.layers

- LSTM

- GRU

- Dense

- Dropout

- Embedding

- Bidirectional

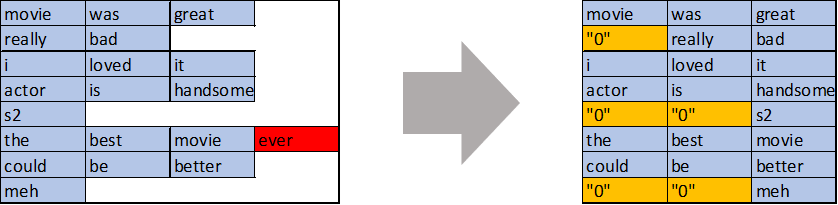

keras.preprocessing

keras.preprocessing.sequence.pad_sequences(texts, maxlen=3)
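To make the effect of `maxlen=3` concrete, here is a minimal sketch. The `texts` list is a hypothetical toy input (already converted to integer word indices), not data from the course. With the default arguments, shorter sequences are zero-padded at the start and longer ones lose their earliest items.

```python
from tensorflow.keras.preprocessing.sequence import pad_sequences

# Hypothetical toy input: three tokenized texts of different lengths
texts = [[1, 2, 3, 4, 5], [6, 7], [8]]

# Defaults: padding='pre' and truncating='pre', so zeros are added at the
# start of short sequences and long sequences keep their last 3 items
padded = pad_sequences(texts, maxlen=3)
print(padded)
# [[3 4 5]
#  [0 6 7]
#  [0 0 8]]
```

Passing `padding='post'` or `truncating='post'` moves the padding or the cut to the end of each sequence instead.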

keras.datasets

Many useful datasets

- IMDB Movie reviews

- Reuters newswire

And more!

For a complete list and usage examples, see the Keras documentation

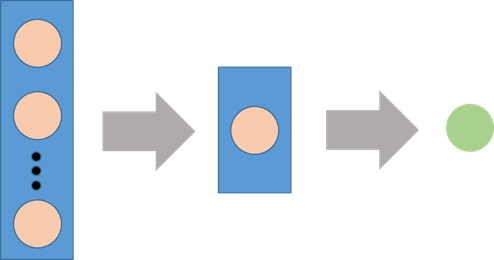

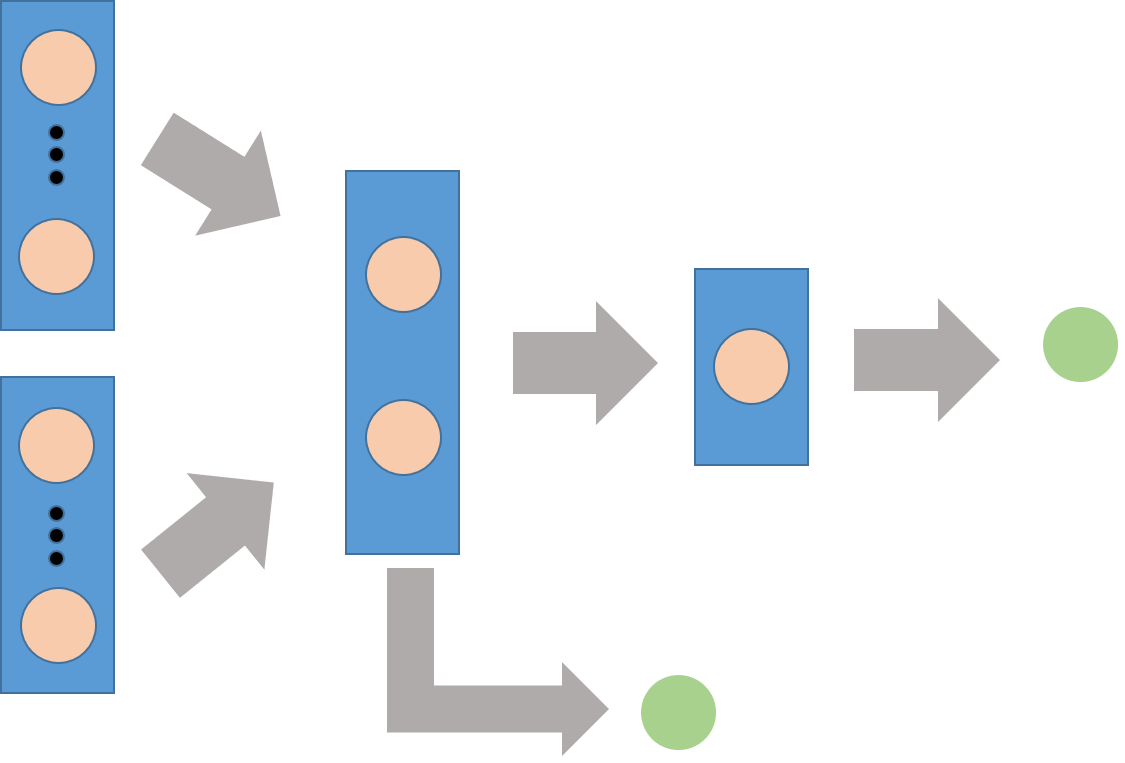

Creating a model

# Import required modules

from tensorflow import keras

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

# Instantiate the model class

model = Sequential()

# Add the layers

model.add(Dense(64, activation='relu', input_dim=100))

model.add(Dense(1, activation='sigmoid'))

# Compile the model

model.compile(optimizer='adam', loss='mean_squared_error', metrics=['accuracy'])
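As a quick sanity check on the model above, the number of trainable weights can be worked out by hand and compared with what Keras reports. The sketch below is a variation, not the slide's exact code: it declares the 100 input features with `keras.Input` rather than `input_dim`, which is assumed here to build the same two-layer network.

```python
from tensorflow import keras
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense

model = Sequential()
model.add(keras.Input(shape=(100,)))        # 100 input features
model.add(Dense(64, activation='relu'))     # 100*64 weights + 64 biases = 6464
model.add(Dense(1, activation='sigmoid'))   # 64*1 weights + 1 bias = 65

n_params = model.count_params()
print(n_params)  # 6529
```

Calling `model.summary()` prints the same counts layer by layer.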

Training the model

The method .fit() trains the model on the training set

model.fit(X_train, y_train, epochs=10, batch_size=32)

- epochs: number of full passes over the training set

- batch_size: number of samples used in each weight update step
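The two arguments interact: one epoch is one pass over the training set, and each step within it consumes `batch_size` samples and performs one weight update. A small worked example, assuming a hypothetical training set of 1000 samples:

```python
import math

n_samples = 1000   # hypothetical training set size (not from the course)
epochs = 10
batch_size = 32

# The last batch of each epoch may be smaller, so round up
steps_per_epoch = math.ceil(n_samples / batch_size)
total_updates = epochs * steps_per_epoch

print(steps_per_epoch)  # 32
print(total_updates)    # 320
```

Under these assumptions, a larger `batch_size` means fewer weight updates per epoch.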

Model evaluation and usage

Evaluate the model:

model.evaluate(X_test, y_test)

[0.3916562925338745, 0.89324]

Make predictions on new data:

model.predict(new_data)

array([[0.91483957],[0.47130653]], dtype=float32)
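Because the last layer uses a sigmoid activation, each prediction is a value between 0 and 1. A common way to turn these into class labels is to threshold them. The sketch below applies a cutoff of 0.5 to the two values shown above; the 0.5 cutoff is an assumption, and other thresholds may suit other problems.

```python
import numpy as np

# The predictions shown above, copied by hand
probs = np.array([[0.91483957], [0.47130653]], dtype=np.float32)

# Values above 0.5 become class 1, the rest class 0
labels = (probs > 0.5).astype(int)
print(labels)
# [[1]
#  [0]]
```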

Full example: IMDB Sentiment Classification

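# Import required modules

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Embedding, LSTM, Dense

# Build and compile the model

model = Sequential()

model.add(Embedding(10000, 128))

model.add(LSTM(128, dropout=0.2))

model.add(Dense(1, activation='sigmoid'))

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])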

# Training

model.fit(x_train, y_train, epochs=5)

# Evaluation

score, acc = model.evaluate(x_test, y_test)
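The example above does not show how `x_train` and `x_test` are prepared. To check what the architecture expects, the sketch below passes a small batch of random word indices through the same layers. The random batch is a synthetic stand-in (an assumption, not the IMDB data), and the model is untrained, so the output values themselves are meaningless. Only the shapes matter here.

```python
import numpy as np
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Embedding, LSTM, Dense

model = Sequential()
model.add(Embedding(10000, 128))            # word index -> 128-dim vector
model.add(LSTM(128, dropout=0.2))           # sequence -> one 128-dim vector
model.add(Dense(1, activation='sigmoid'))   # vector -> probability

# Synthetic stand-in: 4 "reviews", each 20 word indices below 10000
rng = np.random.default_rng(0)
x_fake = rng.integers(0, 10000, size=(4, 20))

probs = model.predict(x_fake, verbose=0)
print(probs.shape)  # (4, 1)
```

The input is a 2-D integer array of shape (samples, sequence length), and the output is one probability per sample.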

Time to practice!
