Text Generation Models

Recurrent Neural Networks (RNNs) for Language Modeling with Keras

David Cecchini

Data Scientist

Similar to a classification model

The Text Generation Model:

- Uses the vocabulary as classes

- The last layer applies a softmax with vocabulary size units

- Uses categorical_crossentropy as the loss function
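To make the classification analogy concrete, here is a minimal NumPy sketch of what a softmax output and the categorical cross-entropy loss compute for a single prediction. The four-character vocabulary, the raw scores, and the helper names are made-up values for illustration, not taken from the course.

```python
import numpy as np

# Hypothetical 4-character vocabulary; each entry acts as one "class".
vocab = ['a', 'b', 'c', 'd']

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    shifted = scores - np.max(scores)  # subtract the max for numerical stability
    exps = np.exp(shifted)
    return exps / exps.sum()

def categorical_crossentropy(y_true, y_pred):
    """Cross-entropy between a one-hot target and predicted probabilities."""
    return float(-np.sum(y_true * np.log(y_pred)))

# Made-up raw scores from the last layer, one per vocabulary entry.
scores = np.array([2.0, 0.5, 0.1, -1.0])
probs = softmax(scores)

# One-hot target: assume the true next character is 'a'.
y_true = np.zeros(len(vocab))
y_true[vocab.index('a')] = 1.0

loss = categorical_crossentropy(y_true, probs)
print(dict(zip(vocab, probs.round(3))), round(loss, 3))
```

Because the target is one-hot, the loss reduces to the negative log of the probability assigned to the correct vocabulary entry.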

Example model using Keras

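    from keras.models import Sequential
    from keras.layers import LSTM, Dense

    # units, chars_window, n_vocab and dropout are assumed to be defined beforehand
    model = Sequential()
    # First LSTM returns the full sequence so a second LSTM can be stacked on it
    model.add(LSTM(units, input_shape=(chars_window, n_vocab), dropout=0.15,
                   recurrent_dropout=0.15, return_sequences=True))
    model.add(LSTM(units, dropout=dropout, recurrent_dropout=0.15,
                   return_sequences=False))
    # Softmax over the vocabulary: one probability per vocabulary entry
    model.add(Dense(n_vocab, activation='softmax'))
    model.compile(loss='categorical_crossentropy', optimizer='adam')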

But not really a classification model

Differences from classification:

- Computes loss, but not performance metrics (accuracy)

  - Humans see results and evaluate performance.

  - If not good, train more epochs or add complexity to the model (add more memory cells, add layers, etc.).

- Used with generation rules according to task

  - Generate next char

  - Generate one word

  - Generate one sentence

  - Generate one paragraph
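The generation rules above can be sketched as a loop that samples one character at a time and stops according to the task. This is only an illustrative sketch: `generate_text`, `predict_proba`, and `stop_char` are hypothetical names, and the uniform "model" is a stand-in for a trained network.

```python
import numpy as np

def generate_text(predict_proba, seed, vocab, n_chars=50, stop_char=None, rng=None):
    """Generate text one character at a time.

    predict_proba: callable mapping the current text to a probability
                   vector over vocab (hypothetical stand-in for a trained model).
    stop_char:     optional character that ends generation early, e.g. ' '
                   to generate one word or '.' to generate one sentence.
    """
    if rng is None:
        rng = np.random.default_rng()
    text = seed
    for _ in range(n_chars):
        probs = np.asarray(predict_proba(text), dtype=float)
        probs = probs / probs.sum()  # renormalize to guard against rounding drift
        next_char = vocab[rng.choice(len(vocab), p=probs)]
        text += next_char
        if stop_char is not None and next_char == stop_char:
            break
    return text

# Toy stand-in model: uniform probabilities over a tiny vocabulary.
vocab = ['a', 'b', ' ', '.']

def uniform(text):
    return np.full(len(vocab), 1.0 / len(vocab))

sentence = generate_text(uniform, seed='ab', vocab=vocab, n_chars=30,
                         stop_char='.', rng=np.random.default_rng(0))
print(repr(sentence))
```

With a trained Keras model, `predict_proba` would presumably one-hot encode the last `chars_window` characters and call `model.predict`; that wrapper is left out here.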

Other applications

- Name creation

  - Baby names

  - New star names, etc.

- Generate marked text

  - LaTeX

  - Markdown

  - XML, etc.

- Programming code

- News articles

- Chatbots

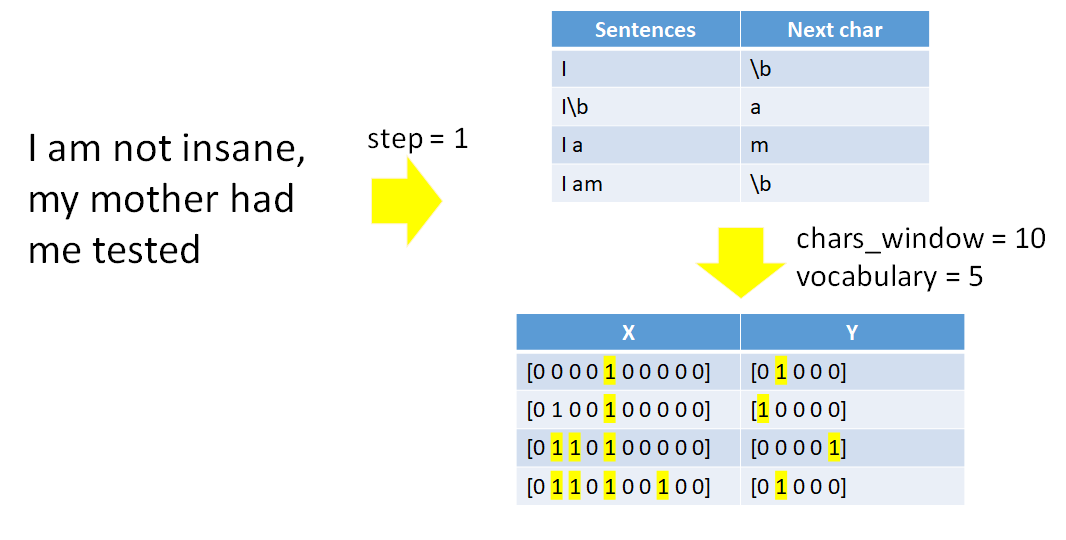

Data prep
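One plausible way to prepare data for a character-level model is to slide a fixed-size window over the text and one-hot encode each window and the character that follows it, which matches the `input_shape=(chars_window, n_vocab)` used in the example model. The function name `prepare_data`, the `step` parameter, and the sample text are assumptions for illustration, not the course's own code.

```python
import numpy as np

def prepare_data(text, chars_window, step=1):
    """Build one-hot inputs X and targets y for next-character prediction."""
    vocab = sorted(set(text))
    char_to_idx = {c: i for i, c in enumerate(vocab)}
    n_vocab = len(vocab)

    # Each window of chars_window characters is one input sample;
    # the character right after the window is its target.
    starts = range(0, len(text) - chars_window, step)
    X = np.zeros((len(starts), chars_window, n_vocab), dtype=np.float32)
    y = np.zeros((len(starts), n_vocab), dtype=np.float32)
    for n, start in enumerate(starts):
        for t, char in enumerate(text[start:start + chars_window]):
            X[n, t, char_to_idx[char]] = 1.0
        y[n, char_to_idx[text[start + chars_window]]] = 1.0
    return X, y, vocab

X, y, vocab = prepare_data("hello world", chars_window=4)
print(X.shape, y.shape)
```

Arrays shaped like this could be passed to `model.fit(X, y, ...)`; a larger `step` reduces the overlap between consecutive windows and the number of samples.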

Let's practice!
