Assessing the model's performance

Recurrent Neural Networks (RNNs) for Language Modeling with Keras

David Cecchini

Data Scientist

Accuracy alone is not very informative

A 20-class task with 80% accuracy. Is the model good?

- Can it classify all the classes correctly?

- Is the accuracy the same for each class?

- Is the model overfitting on the majority class?

Accuracy alone can't answer any of these questions!

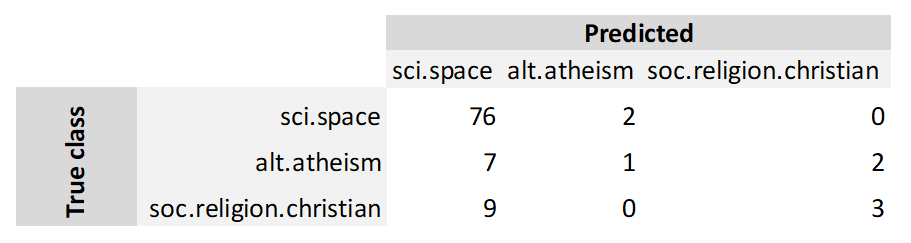
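To see why the last question matters, consider a trivial baseline that always predicts the majority class. The sketch below uses hypothetical integer labels whose class sizes (78, 10 and 12) match the three-class example used later in this section. The data and the baseline are illustrative only.

```python
import numpy as np
from sklearn.metrics import accuracy_score, recall_score

# Hypothetical labels: 0 = sci.space, 1 = alt.atheism, 2 = soc.religion.christian
y_true = np.array([0] * 78 + [1] * 10 + [2] * 12)

# Trivial baseline: always predict the majority class (0)
y_majority = np.zeros_like(y_true)

acc = accuracy_score(y_true, y_majority)
per_class_recall = recall_score(y_true, y_majority, average=None)

print(acc)               # 0.78
print(per_class_recall)  # [1. 0. 0.]
```

The baseline reaches 78% accuracy while never recognizing alt.atheism or soc.religion.christian, which is exactly what a single accuracy number hides.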

Confusion matrix

Compares the true and predicted labels for each class: each row is a true class and each column a predicted class.
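Building the matrix by hand shows what each cell counts. This is a minimal sketch with small, made-up integer labels (not the slide data). `count_confusion` is a hypothetical helper, and the last line checks it against scikit-learn's `confusion_matrix`, which uses the same row/column layout.

```python
import numpy as np
from sklearn.metrics import confusion_matrix

def count_confusion(y_true, y_pred, n_classes):
    """Hypothetical helper: rows index the true class, columns the predicted class."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1  # one more sample of true class t predicted as class p
    return cm

# Made-up toy labels, not the example data from the slides
y_true = [0, 0, 1, 2, 2, 1]
y_pred = [0, 1, 1, 2, 0, 1]

cm = count_confusion(y_true, y_pred, n_classes=3)
print(cm)
print((cm == confusion_matrix(y_true, y_pred)).all())  # True
```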

Precision

$$\text{Precision}_{\text{class}} = \frac{\text{Correct}_{\text{class}}}{\text{Predicted}_{\text{class}}}$$

In the example, the number of predictions for each class is the column sum of the confusion matrix:

$$ \text{Precision}_{\text{sci.space}} = \frac{76}{76+7+9} = 0.83 $$

$$ \text{Precision}_{\text{alt.atheism}} = \frac{1}{2+1+0} = 0.33 $$

$$ \text{Precision}_{\text{soc.religion.christian}} = \frac{3}{0+2+3} = 0.60 $$
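The same precision values fall out of the matrix directly: the diagonal divided by the column sums. A minimal NumPy sketch, hard-coding the example matrix (rows = true class, columns = predicted class, in the order sci.space, alt.atheism, soc.religion.christian):

```python
import numpy as np

# Example confusion matrix: rows = true class, columns = predicted class
cm = np.array([[76, 2, 0],
               [7, 1, 2],
               [9, 0, 3]])

correct = np.diag(cm)         # correctly classified samples of each class
predicted = cm.sum(axis=0)    # column sums: how often each class was predicted
precision = correct / predicted

print(precision.round(2))     # approx. 0.83, 0.33, 0.60
```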

Recall

$$\text{Recall}_{\text{class}} = \frac{\text{Correct}_{\text{class}}}{N_{\text{class}}}$$

In the example, the number of samples that truly belong to each class is the row sum of the confusion matrix:

$$ \text{Recall}_{\text{sci.space}} = \frac{76}{76+2+0} = 0.97 $$

$$ \text{Recall}_{\text{alt.atheism}} = \frac{1}{7+1+2} = 0.10 $$

$$ \text{Recall}_{\text{soc.religion.christian}} = \frac{3}{9+0+3} = 0.25 $$
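Recall uses the row sums of the matrix instead of the column sums. A minimal NumPy sketch on the same hard-coded example matrix:

```python
import numpy as np

# Example confusion matrix: rows = true class, columns = predicted class
cm = np.array([[76, 2, 0],
               [7, 1, 2],
               [9, 0, 3]])

correct = np.diag(cm)       # correctly classified samples of each class
actual = cm.sum(axis=1)     # row sums: samples that truly belong to each class
recall = correct / actual

print(recall.round(2))      # approx. 0.97, 0.10, 0.25
```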

F1-Score

$$\text{F1}_{\text{class}} = 2 \cdot \frac{\text{Precision}_{\text{class}} \cdot \text{Recall}_{\text{class}}}{\text{Precision}_{\text{class}} + \text{Recall}_{\text{class}}}$$

F1 is the harmonic mean of precision and recall, so it is low whenever either of them is low. In the example:

$$ \text{F1}_{\text{sci.space}} = 2 \cdot \frac{0.83 \cdot 0.97}{0.83 + 0.97} = 0.89 $$

$$ \text{F1}_{\text{alt.atheism}} = 2 \cdot \frac{0.33 \cdot 0.10}{0.33 + 0.10} = 0.15 $$

$$ \text{F1}_{\text{soc.religion.christian}} = 2 \cdot \frac{0.60 \cdot 0.25}{0.60 + 0.25} = 0.35 $$
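The per-class F1 scores can be computed the same way from the example matrix. Substituting the precision and recall definitions into the harmonic mean also gives an equivalent closed form, twice the correct count divided by (predicted count + true count), which the sketch checks numerically:

```python
import numpy as np

# Example confusion matrix: rows = true class, columns = predicted class
cm = np.array([[76, 2, 0],
               [7, 1, 2],
               [9, 0, 3]])

correct = np.diag(cm)
precision = correct / cm.sum(axis=0)
recall = correct / cm.sum(axis=1)

# Harmonic mean of precision and recall, per class
f1 = 2 * precision * recall / (precision + recall)

# Equivalent closed form: 2 * correct / (predicted + actual)
f1_direct = 2 * correct / (cm.sum(axis=0) + cm.sum(axis=1))

print(f1.round(2))                 # approx. 0.89, 0.15, 0.35
print(np.allclose(f1, f1_direct))  # True
```

Working from unrounded precision and recall avoids the small rounding drift that can appear when F1 is computed from two-decimal values.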

Sklearn confusion matrix

```python
from sklearn.metrics import confusion_matrix

# Build the confusion matrix
confusion_matrix(y_true, y_pred)
```

Output:

```
array([[76,  2,  0],
       [ 7,  1,  2],
       [ 9,  0,  3]], dtype=int64)
```
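The scikit-learn calls in this section assume `y_true` and `y_pred` already exist. One way to get arrays that reproduce the example matrix is to expand its counts, as in the sketch below. The integer encoding (0 = sci.space, 1 = alt.atheism, 2 = soc.religion.christian) is an assumption. It matters because, without a `labels` argument, `confusion_matrix` orders classes by sorted label value, so string labels would put alt.atheism first.

```python
import numpy as np
from sklearn.metrics import confusion_matrix

# Hypothetical reconstruction: expand the example counts into label arrays.
# Assumed encoding: 0 = sci.space, 1 = alt.atheism, 2 = soc.religion.christian
counts = np.array([[76, 2, 0],
                   [7, 1, 2],
                   [9, 0, 3]])

y_true, y_pred = [], []
for t in range(3):
    for p in range(3):
        n = int(counts[t, p])
        y_true += [t] * n   # n samples whose true class is t ...
        y_pred += [p] * n   # ... that were predicted as class p
y_true, y_pred = np.array(y_true), np.array(y_pred)

print(confusion_matrix(y_true, y_pred))
```

These arrays only reproduce the counts, so every metric computed from them matches the slides, but the sample order is artificial.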

Performance metrics

Metrics from sklearn

```python
# Functions of sklearn
from sklearn.metrics import confusion_matrix
from sklearn.metrics import precision_score
from sklearn.metrics import recall_score
from sklearn.metrics import f1_score
from sklearn.metrics import accuracy_score
from sklearn.metrics import classification_report
```

```python
# Accuracy
print(accuracy_score(y_true, y_pred))
```

Output:

```
0.8
```
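Accuracy can also be read off the confusion matrix: the diagonal holds every correct prediction, so accuracy is its sum divided by the total number of samples. A minimal sketch on the hard-coded example matrix:

```python
import numpy as np

# Example confusion matrix: rows = true class, columns = predicted class
cm = np.array([[76, 2, 0],
               [7, 1, 2],
               [9, 0, 3]])

# Accuracy = correctly classified samples (diagonal) / all samples
accuracy = np.trace(cm) / cm.sum()
print(accuracy)  # 0.8
```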

Pass `average=None` to the precision, recall and F1-score functions to get one score per class:

```python
print(precision_score(y_true, y_pred, average=None))
print(recall_score(y_true, y_pred, average=None))
print(f1_score(y_true, y_pred, average=None))
```

Output (rounded to two decimals):

```
[0.83 0.33 0.60]
[0.97 0.10 0.25]
[0.89 0.15 0.35]
```

Classification report

One function to measure them all:

```python
lab_names = ['sci.space', 'alt.atheism', 'soc.religion.christian']
print(classification_report(y_true, y_pred, target_names=lab_names))
```

```
                        precision    recall  f1-score   support

             sci.space       0.83      0.97      0.89        78
           alt.atheism       0.33      0.10      0.15        10
soc.religion.christian       0.60      0.25      0.35        12

             micro avg       0.80      0.80      0.80       100
             macro avg       0.59      0.44      0.47       100
          weighted avg       0.75      0.80      0.76       100
```
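The three averages at the bottom of the report can be recomputed from the per-class values. In this sketch, which hard-codes the example confusion matrix, macro averaging takes the unweighted mean over classes, and weighted averaging weights each class by its support (its number of true samples). Micro averaging pools all samples, which for single-label multiclass data equals accuracy. Recent scikit-learn releases print that row as `accuracy` instead of `micro avg`.

```python
import numpy as np

# Example confusion matrix: rows = true class, columns = predicted class
cm = np.array([[76, 2, 0],
               [7, 1, 2],
               [9, 0, 3]])

correct = np.diag(cm)
support = cm.sum(axis=1)                          # true samples per class: 78, 10, 12
precision = correct / cm.sum(axis=0)
recall = correct / support
f1 = 2 * correct / (cm.sum(axis=0) + support)     # harmonic mean of precision and recall

for name, per_class in [("precision", precision), ("recall", recall), ("f1", f1)]:
    macro = per_class.mean()                          # unweighted mean over classes
    weighted = np.average(per_class, weights=support) # mean weighted by support
    print(f"{name}: macro={macro:.2f} weighted={weighted:.2f}")

# Micro average: pool correct predictions over all classes
micro = correct.sum() / cm.sum()
print(f"micro={micro:.2f}")
```

The printed macro and weighted values line up with the `macro avg` and `weighted avg` rows of the report. The gap between them (for example 0.47 vs 0.76 for F1) shows how much the large sci.space class dominates the weighted figures.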

Let's practice!
