Introduction to language models

Recurrent Neural Networks (RNNs) for Language Modeling with Keras

David Cecchini

Data Scientist

Sentence probability

Many available models

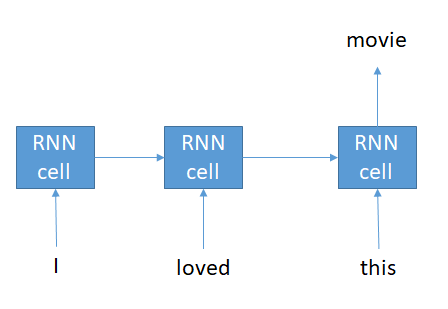

- Probability of "I loved this movie".

- Unigram

- $$P(\text{sentence}) = P(\text{I})P(\text{loved})P(\text{this})P(\text{movie})$$

- N-gram

- N = 2 (bigram): $$P(\text{sentence}) = P(\text{I})P(\text{loved} | \text{I})P(\text{this} | \text{loved})P(\text{movie} | \text{this})$$

- N = 3 (trigram): $$P(\text{sentence}) = P(\text{I})P(\text{loved} | \text{I})P(\text{this} | \text{I loved})P(\text{movie} | \text{loved this})$$
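The bigram formula above can be estimated from word counts. The following is a minimal sketch, assuming a tiny made-up corpus and plain count ratios with no smoothing; the names `corpus` and `bigram_probability` are illustrative and not part of the course material.

```python
from collections import Counter

# Hypothetical toy corpus, already lowercased and split on single spaces
corpus = [
    "i loved this movie",
    "i loved this book",
    "i hated this movie",
]

unigram_counts = Counter()
bigram_counts = Counter()
for sentence in corpus:
    words = sentence.split(' ')
    unigram_counts.update(words)
    # Count each pair of adjacent words
    bigram_counts.update(zip(words[:-1], words[1:]))

total_words = sum(unigram_counts.values())


def bigram_probability(sentence):
    """Estimate P(w1) * P(w2 | w1) * ... from raw counts (no smoothing)."""
    words = sentence.split(' ')
    prob = unigram_counts[words[0]] / total_words
    for prev, curr in zip(words[:-1], words[1:]):
        # P(curr | prev) is approximated by count(prev, curr) / count(prev)
        prob *= bigram_counts[(prev, curr)] / unigram_counts[prev]
    return prob


p = bigram_probability("i loved this movie")
print(p)
```

Because there is no smoothing, a sentence containing a word that never appears in the corpus raises a `ZeroDivisionError`, and a word pair that never appears gets probability zero. Real n-gram models usually handle both cases explicitly.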

Sentence probability (cont.)

- Skip gram

- $$P(\text{sentence}) = P(\text{context of I} | \text{I})P(\text{context of loved} | \text{loved})P(\text{context of this} | \text{this})P(\text{context of movie} | \text{movie})$$

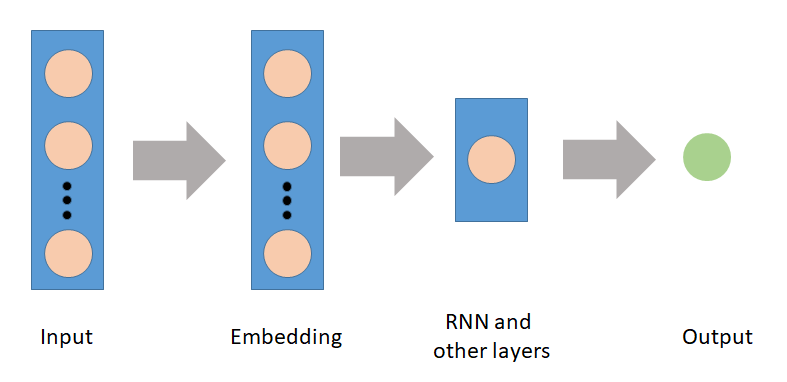
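To make "context of a word" concrete, here is a small sketch that lists the (word, context word) pairs a skip-gram model would be trained on. It assumes the context is the words within a fixed window on each side; the function name `skipgram_pairs` and the `window` parameter are illustrative choices, not something defined in the slides.

```python
def skipgram_pairs(sentence, window=1):
    """Return (target, context) pairs for every word in the sentence."""
    words = sentence.split(' ')
    pairs = []
    for i, target in enumerate(words):
        # Context positions: up to `window` words to the left and right
        start = max(0, i - window)
        end = min(len(words), i + window + 1)
        for j in range(start, end):
            if j != i:
                pairs.append((target, words[j]))
    return pairs


pairs = skipgram_pairs("i loved this movie", window=1)
for target, context in pairs:
    print(target, "->", context)
```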

- Neural Networks

- The probability of the sentence is given by a softmax function on the output layer of the network
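As a rough illustration of the softmax step, the sketch below turns a list of raw output scores into probabilities over a vocabulary. The scores and the four-word vocabulary are made up for the example; in a trained network they would come from the output layer.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    # Subtracting the maximum keeps math.exp from overflowing
    max_score = max(scores)
    exps = [math.exp(s - max_score) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


vocabulary = ["i", "loved", "this", "movie"]
scores = [0.5, 1.0, 0.1, 2.0]  # hypothetical output-layer scores

probs = softmax(scores)
# The most probable word is the one with the highest score
next_word = vocabulary[probs.index(max(probs))]
print(probs, next_word)
```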

Link to RNNs

Language models are everywhere in RNNs!

- The network itself

Link to RNNs (cont.)

- Embedding layer

Building vocabulary dictionaries

# Get unique words

unique_words = list(set(text.split(' ')))

# Create dictionary: word is key, index is value

word_to_index = {word: index for (index, word) in enumerate(unique_words)}

# Create dictionary: index is key, word is value

index_to_word = {index: word for (index, word) in enumerate(unique_words)}
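A short usage sketch of the two dictionaries, assuming a made-up `text` string. One deliberate difference from the slide code: the unique words are sorted so the indexes are the same on every run, since the iteration order of a `set` of strings can change between Python sessions.

```python
text = "i loved this movie and i loved this book"  # hypothetical input

# Sorted so that every run assigns the same index to each word
unique_words = sorted(set(text.split(' ')))

word_to_index = {word: index for index, word in enumerate(unique_words)}
index_to_word = {index: word for index, word in enumerate(unique_words)}

# Encode the text as indexes, then decode it back to words
encoded = [word_to_index[word] for word in text.split(' ')]
decoded = ' '.join(index_to_word[index] for index in encoded)

print(encoded)
print(decoded)
```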

Preprocessing input

# Initialize variables X and y
X = []
y = []

# Loop over the text: take `sentence_size` items at a time, advancing by `step`
for i in range(0, len(text) - sentence_size, step):
    X.append(text[i:i + sentence_size])
    y.append(text[i + sentence_size])

# Example (numbers are numerical indexes of vocabulary):

# Sentence is: "i loved this movie" -> (["i", "loved", "this"], "movie")

X[0],y[0] = ([10, 444, 11], 17)
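The sliding-window idea can be tried end to end on a toy input. This sketch assumes the text has already been split into a list of words and that each word is replaced by its vocabulary index; the sample sentence and the values of `sentence_size` and `step` are chosen only for illustration, so the indexes differ from the numbers in the example above.

```python
# Hypothetical input, split into a list of words
text = "i loved this movie and i loved this book".split(' ')

# Sorted vocabulary so the indexes are reproducible
word_to_index = {word: index for index, word in enumerate(sorted(set(text)))}

sentence_size = 3  # number of words in each input window
step = 2           # how far the window moves each iteration

X = []
y = []
for i in range(0, len(text) - sentence_size, step):
    # Input: indexes of `sentence_size` consecutive words
    X.append([word_to_index[word] for word in text[i:i + sentence_size]])
    # Target: index of the word that follows the window
    y.append(word_to_index[text[i + sentence_size]])

print(X)
print(y)
```

Each entry of `X` is a window of three word indexes and the matching entry of `y` is the index of the next word, which is the shape a next-word prediction model expects.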

Transforming new texts

# Create list to keep the sentences of indexes
new_text_split = []

# Loop and get the indexes from dictionary
for sentence in new_text:
    sent_split = []
    for wd in sentence.split(' '):
        ix = word_to_index[wd]
        sent_split.append(ix)
    new_text_split.append(sent_split)
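A direct dictionary lookup raises a `KeyError` when a new text contains a word that is not in the vocabulary. One common workaround, shown here only as a sketch, is to reserve an extra index for unknown words. The name `UNKNOWN_INDEX`, the small vocabulary, and the sample sentences are assumptions made for this example and are not part of the slides.

```python
# Hypothetical vocabulary built from the training text
word_to_index = {"i": 0, "loved": 1, "this": 2, "movie": 3}

# Assumption: one extra index is reserved for out-of-vocabulary words
UNKNOWN_INDEX = len(word_to_index)

new_text = ["i loved this movie", "i loved this film"]

new_text_split = []
for sentence in new_text:
    # dict.get falls back to UNKNOWN_INDEX instead of raising KeyError
    sent_split = [word_to_index.get(wd, UNKNOWN_INDEX) for wd in sentence.split(' ')]
    new_text_split.append(sent_split)

print(new_text_split)
```

If this approach is used, the model's vocabulary size has to account for the extra index.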

Let's practice!
