Introduction to the course

Recurrent Neural Networks (RNNs) for Language Modeling with Keras

David Cecchini

Data Scientist

Text data is available online

Applications of machine learning to text data

Four applications:

- Sentiment analysis

- Multi-class classification

- Text generation

- Neural machine translation

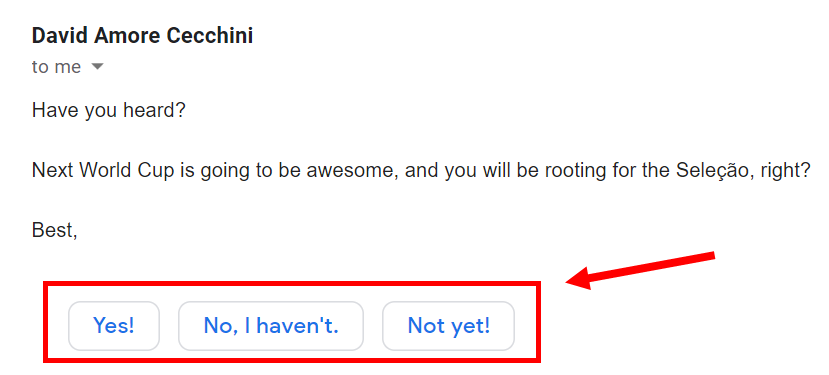

Sentiment analysis

Multi-class classification

Text generation

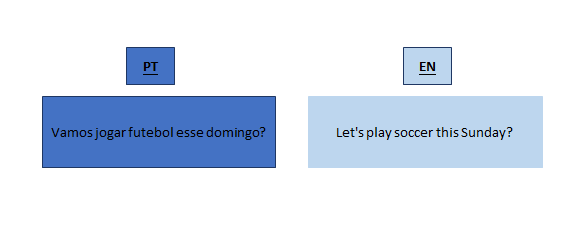

Neural machine translation

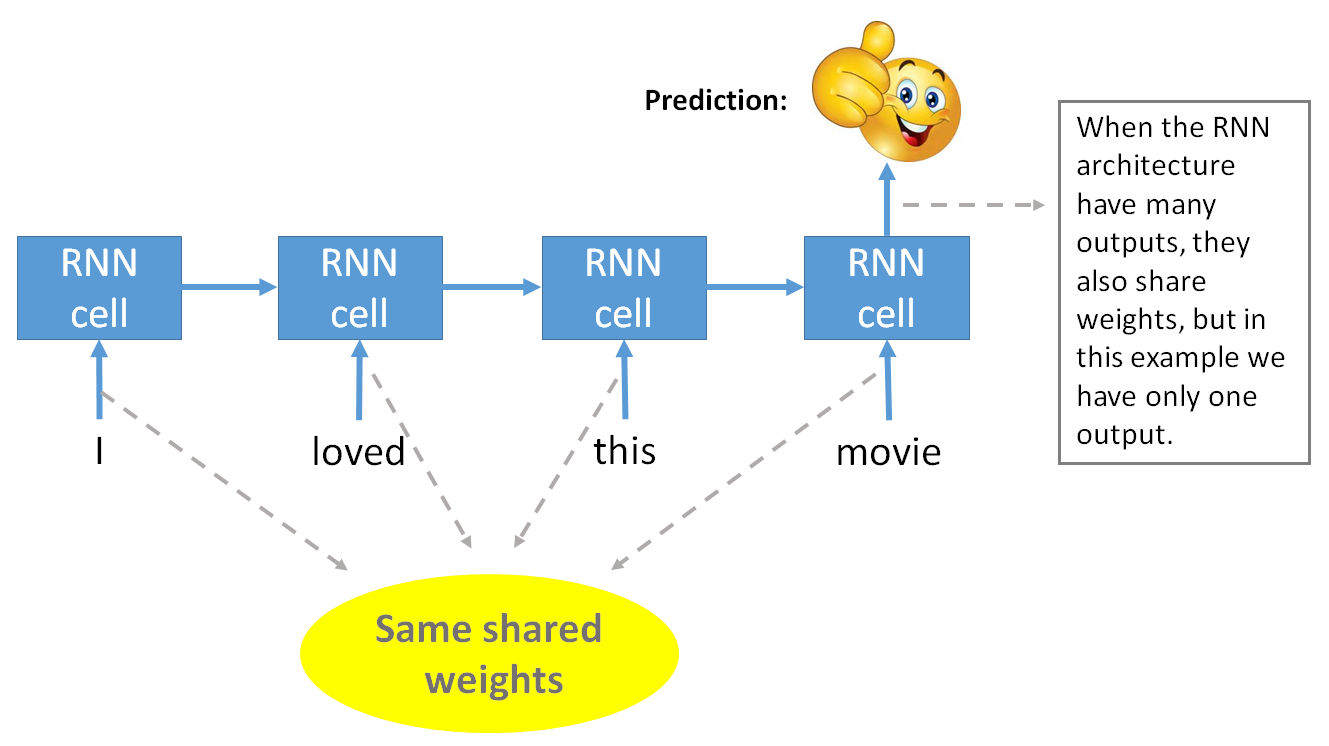

Recurrent Neural Networks

Sequence to sequence models

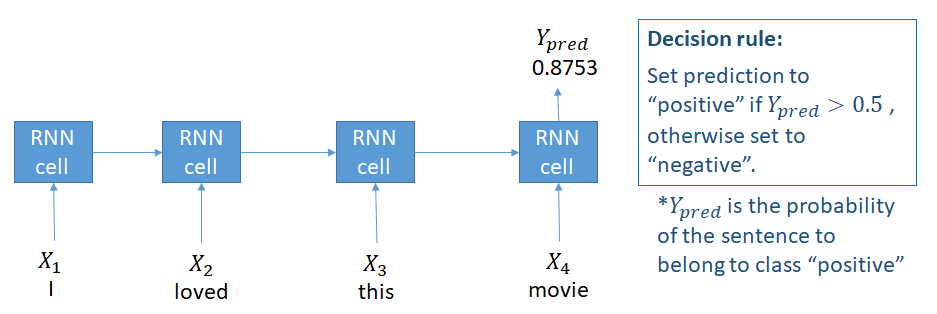

Many to one: classification
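
A minimal sketch of the many-to-one pattern, assuming a toy recurrent cell with a scalar hidden state. The function names and weight values are hypothetical and chosen only for illustration; the course itself uses Keras layers. The whole sequence is read step by step, and only the final hidden state produces an output.

```python
import math

def rnn_step(x, h, w_x, w_h, b):
    """One recurrent step: mix the current input with the previous state."""
    return math.tanh(w_x * x + w_h * h + b)

def many_to_one(inputs, w_x=0.5, w_h=0.8, b=0.0, w_out=2.0):
    """Read the full sequence, then emit a single probability."""
    h = 0.0  # initial hidden state
    for x in inputs:
        h = rnn_step(x, h, w_x, w_h, b)
    # Sigmoid applied to the final state only: one output per sequence.
    return 1.0 / (1.0 + math.exp(-w_out * h))

# Hypothetical numeric inputs standing in for encoded words.
score = many_to_one([1.0, -0.5, 2.0])
print(score)
```

Because the state is carried from step to step, reordering the inputs generally changes the result, which is what lets this kind of model take word order into account.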

Sequence to sequence models

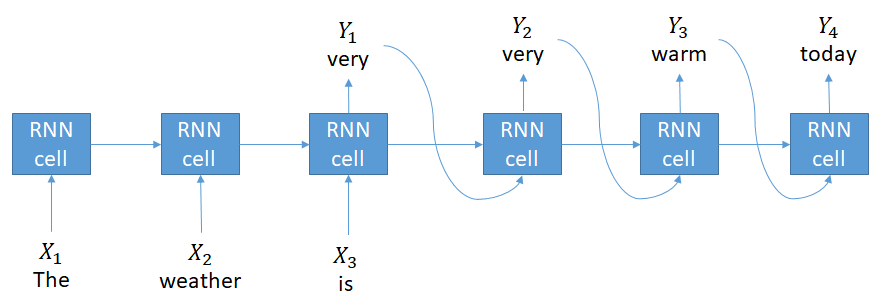

Many to many: text generation
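
For contrast, a rough sketch of the many-to-many pattern under the same toy assumptions (scalar state, hypothetical weights, not the Keras API): the loop is the same, but an output is kept at every time step instead of only at the end.

```python
import math

def many_to_many(inputs, w_x=0.5, w_h=0.8, w_out=2.0):
    """Emit one output per input step."""
    h = 0.0  # initial hidden state
    outputs = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h)  # update the state
        outputs.append(w_out * h)         # keep an output for this step
    return outputs

# Hypothetical numeric inputs standing in for encoded tokens.
outputs = many_to_many([1.0, -0.5, 2.0])
print(len(outputs))  # one output per input
```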

Sequence to sequence models

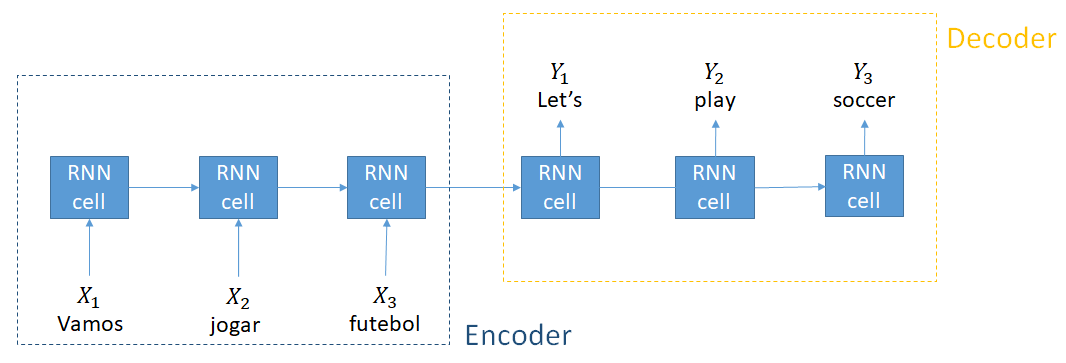

Many to many: neural machine translation

Sequence to sequence models

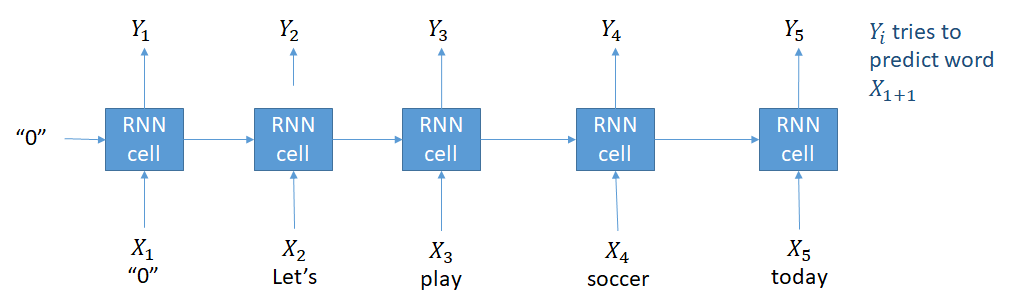

Many to many: language model
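
A language model assigns a probability to each next word given the words before it, and the probability of a sentence is the product of those per-step probabilities. The sketch below illustrates that calculation; the vocabulary and the per-step scores are made up for the example and do not come from a trained model.

```python
import math

def softmax(scores):
    """Turn raw scores into a probability distribution."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

vocab = ["i", "like", "cats"]  # hypothetical vocabulary
# Hypothetical scores over the vocabulary, one list per time step.
step_scores = [
    [2.0, 0.1, 0.1],
    [0.1, 1.5, 0.3],
    [0.2, 0.1, 1.8],
]
sentence = ["i", "like", "cats"]

# Sum log-probabilities of the observed word at each step.
log_prob = 0.0
for scores, word in zip(step_scores, sentence):
    probs = softmax(scores)
    log_prob += math.log(probs[vocab.index(word)])

sentence_prob = math.exp(log_prob)
print(sentence_prob)
```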

Let's practice!
