Accessing private objects in S3

Introduction to AWS Boto in Python

Maksim Pecherskiy

Data Engineer

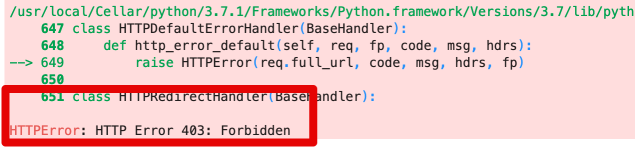

Downloading a private file

df = pd.read_csv('https://gid-staging.s3.amazonaws.com/potholes.csv')

Downloading private files

Download File

s3.download_file(
    Filename='potholes_local.csv',
    Bucket='gid-staging',
    Key='2019/potholes_private.csv')

Read From Disk

pd.read_csv('./potholes_local.csv')
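
The download-then-read steps above can be wrapped in one helper. This is a minimal sketch rather than the course's own code: `download_to_temp` is a hypothetical name, and `s3` is assumed to be a client created with `boto3.client('s3')` whose credentials are allowed to read the object.

```python
import tempfile
from pathlib import Path


def download_to_temp(s3, bucket, key):
    """Download s3://bucket/key into a new temporary directory.

    `s3` is assumed to be a boto3 S3 client (hypothetical helper).
    Returns the local path of the downloaded file.
    """
    local_dir = Path(tempfile.mkdtemp())
    # Keep only the file name, dropping any "folder" prefix in the key
    local_path = local_dir / key.rsplit('/', 1)[-1]
    s3.download_file(Filename=str(local_path), Bucket=bucket, Key=key)
    return local_path
```

With pandas imported as `pd`, a call such as `pd.read_csv(download_to_temp(s3, 'gid-staging', '2019/potholes_private.csv'))` would then read the local copy.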

Accessing private files

Use '.get_object()'

obj = s3.get_object(Bucket='gid-requests', Key='2019/potholes.csv')

print(obj)

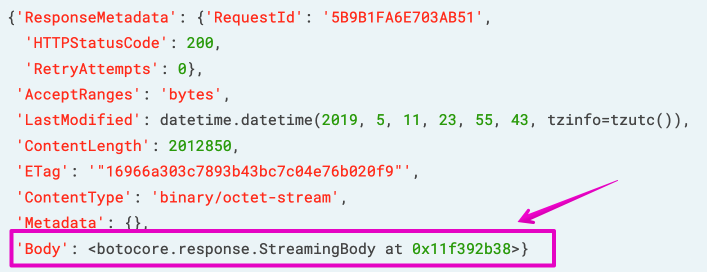
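
The response printed above is a dictionary of metadata plus a `Body` entry, a file-like `StreamingBody` that is consumed as it is read. The sketch below shows what reading it by hand might look like without pandas. `body_to_rows` is a hypothetical helper, and the object is assumed to be a UTF-8 CSV file with a header row.

```python
import csv
import io


def body_to_rows(obj):
    """Read the 'Body' of a get_object response into a list of dicts."""
    raw_bytes = obj['Body'].read()       # bytes of the whole object
    text = raw_bytes.decode('utf-8')     # assumption: UTF-8 encoded CSV
    return list(csv.DictReader(io.StringIO(text)))
```

Each returned row maps column names to string values; pandas does the same reading and parsing in a single `pd.read_csv(obj['Body'])` call.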

Accessing private files

Get the object

obj = s3.get_object(
    Bucket='gid-requests',
    Key='2019/potholes.csv')

Read StreamingBody into Pandas

pd.read_csv(obj['Body'])

Pre-signed URLs

- Expire after a certain timeframe

- Great for temporary access

Example

https://?AWSAccessKeyId=12345&Signature=rBmnrwutb6VkJ9hE8Uub%2BBYA9mY%3D&Expires=1557624801
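
The `Expires` parameter in a URL like the example is a Unix timestamp. As a rough sketch using only the standard library, the query string can be parsed to see when the link stops working. `presigned_expiry` is a hypothetical helper, and it assumes a URL that carries an `Expires` field, as the example does.

```python
from datetime import datetime, timezone
from urllib.parse import parse_qs, urlsplit


def presigned_expiry(url):
    """Return the expiry time (UTC) encoded in a presigned URL's query string."""
    params = parse_qs(urlsplit(url).query)
    # parse_qs returns a list of values per key; take the first one
    expires_epoch = int(params['Expires'][0])
    return datetime.fromtimestamp(expires_epoch, tz=timezone.utc)


# The example URL from the slide, copied as-is
example = ('https://?AWSAccessKeyId=12345'
           '&Signature=rBmnrwutb6VkJ9hE8Uub%2BBYA9mY%3D&Expires=1557624801')
print(presigned_expiry(example).isoformat())
```

Comparing the result with `datetime.now(timezone.utc)` tells you whether a link has already expired.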

Pre-signed URLs

Upload a file

s3.upload_file(
    Filename='./potholes.csv',
    Key='potholes.csv',
    Bucket='gid-requests')

Pre-signed URLs

Generate Presigned URL

share_url = s3.generate_presigned_url(
    ClientMethod='get_object',
    ExpiresIn=3600,
    Params={'Bucket': 'gid-requests', 'Key': 'potholes.csv'}
)

Open in Pandas

pd.read_csv(share_url)

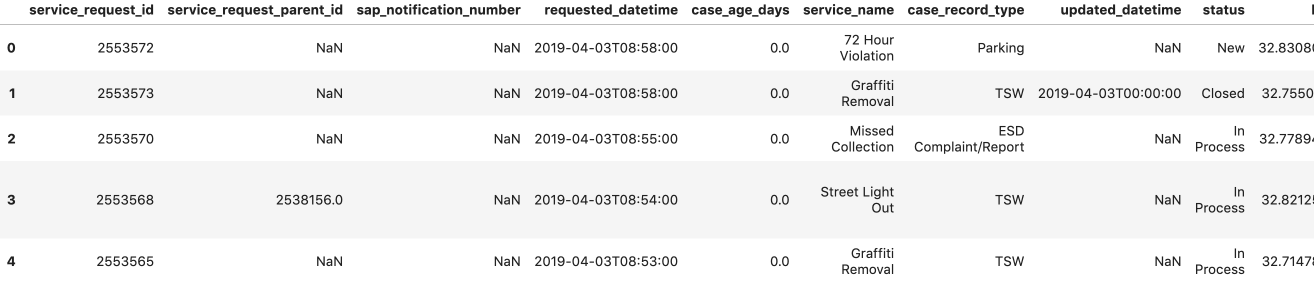
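
The `ExpiresIn` argument used above is given in seconds, so 3600 means one hour. A small wrapper can make that unit explicit. This is only a sketch: `make_share_url` and its `hours` parameter are hypothetical, and `s3` is assumed to be a boto3 S3 client.

```python
def make_share_url(s3, bucket, key, hours=1):
    """Return a presigned GET URL for bucket/key that lasts `hours` hours."""
    return s3.generate_presigned_url(
        ClientMethod='get_object',
        ExpiresIn=int(hours * 3600),  # boto3 expects seconds
        Params={'Bucket': bucket, 'Key': key},
    )
```

For example, `make_share_url(s3, 'gid-requests', 'potholes.csv', hours=24)` would request a link valid for one day.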

Load multiple files into one DataFrame

# Create list to hold our DataFrames
df_list = []
# Request the list of CSVs from S3 with prefix
response = s3.list_objects(
    Bucket='gid-requests',
    Prefix='2019/')
# Get response contents
request_files = response['Contents']

Load multiple files into one DataFrame

# Iterate over each object
for file in request_files:
    obj = s3.get_object(Bucket='gid-requests', Key=file['Key'])
    # Read it as DataFrame
    obj_df = pd.read_csv(obj['Body'])
    # Append DataFrame to list
    df_list.append(obj_df)

Load multiple files into one DataFrame

# Concatenate all the DataFrames in the list
df = pd.concat(df_list)
# Preview the DataFrame
df.head()
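
When no object matches the prefix, the listing response has no `Contents` entry, so `response['Contents']` raises a `KeyError`. A prefix can also match objects that are not CSV files. A defensive sketch of the key-selection step might look like this, where `csv_keys` is a hypothetical helper and `response` is assumed to be the dictionary returned by `s3.list_objects()`:

```python
def csv_keys(response):
    """Return the keys of CSV objects from a list_objects response."""
    contents = response.get('Contents', [])  # missing when nothing matched
    return [item['Key'] for item in contents
            if item['Key'].lower().endswith('.csv')]
```

The loop over files could then iterate over `csv_keys(response)` and pass each key to `s3.get_object()`.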

Review - Accessing private objects in S3

Download then open

s3.download_file()

Open directly

s3.get_object()

Generate presigned URL

s3.generate_presigned_url()

Review - Sharing URLs

Public Files: Public Object URL

Generate using .format()

'https://{bucket}.s3.amazonaws.com/{key}'

Private Files: Presigned URL

Generate using .generate_presigned_url()

'https://?AWSAccessKeyId=12345&Signature=rBmnrwutb6VkJ9hE8Uub%2BBYA9mY%3D&Expires=1557624801'
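
For the public-file case in this review, building the URL with `.format()` can be sketched as follows. The helper name `public_object_url` is hypothetical, the pattern shown is the common `s3.amazonaws.com` virtual-hosted form, and the key is assumed to contain no characters that need URL encoding.

```python
def public_object_url(bucket, key):
    """Build the URL of an object; it only resolves if the object is public."""
    return 'https://{bucket}.s3.amazonaws.com/{key}'.format(bucket=bucket, key=key)


print(public_object_url('gid-requests', '2019/potholes.csv'))
```

For a private object the same URL returns an access error, which is the case where a presigned URL is needed instead.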

Let's practice!
