Training the model with Teacher Forcing

Machine Translation with Keras

Thushan Ganegedara

Data Scientist and Author

Model training in detail

- Model training requires:

- A loss function (e.g. categorical crossentropy)

- An optimizer (e.g. Adam)


To compute the loss, the following items are required:

- Probabilistic predictions generated using inputs ([batch_size, seq_len, vocab_size])

  - e.g. [[0.11,...,0.81,0.04], [0.05,...,0.01, 0.93], ..., [0.78,..., 0.03,0.01]]

- Actual onehot encoded French targets ([batch_size, seq_len, vocab_size])

  - e.g. [[0, ..., 1, 0], [0, ..., 0, 1],..., [0, ..., 1, 0]]

- Crossentropy: difference between the targets and predicted words

The loss is passed to an optimizer, which changes the model parameters to minimize the loss.
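As a rough illustration of the loss computation described above, the following NumPy sketch computes categorical crossentropy by hand for arrays of shape [batch_size, seq_len, vocab_size]. The function name, the clipping constant, and the toy arrays are assumptions made for this example; Keras computes the loss internally during training.

```python
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean crossentropy over every (sentence, time step) position.

    Hypothetical helper for illustration only, not the Keras implementation.
    """
    # Clip predictions so that log(0) never occurs
    y_pred = np.clip(y_pred, eps, 1.0)
    # The onehot target selects -log(probability of the correct word)
    per_word = -np.sum(y_true * np.log(y_pred), axis=-1)
    return per_word.mean()

# Toy data: batch_size=1, seq_len=2, vocab_size=3
y_true = np.array([[[0., 1., 0.], [0., 0., 1.]]])
y_good = np.array([[[0.1, 0.8, 0.1], [0.05, 0.05, 0.9]]])  # mostly correct
y_bad = np.array([[[0.8, 0.1, 0.1], [0.9, 0.05, 0.05]]])   # mostly wrong

loss_good = categorical_crossentropy(y_true, y_good)
loss_bad = categorical_crossentropy(y_true, y_bad)
print("good:", loss_good, "bad:", loss_bad)
```

Predictions that put high probability on the correct words yield a lower loss, which is the quantity the optimizer tries to reduce.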

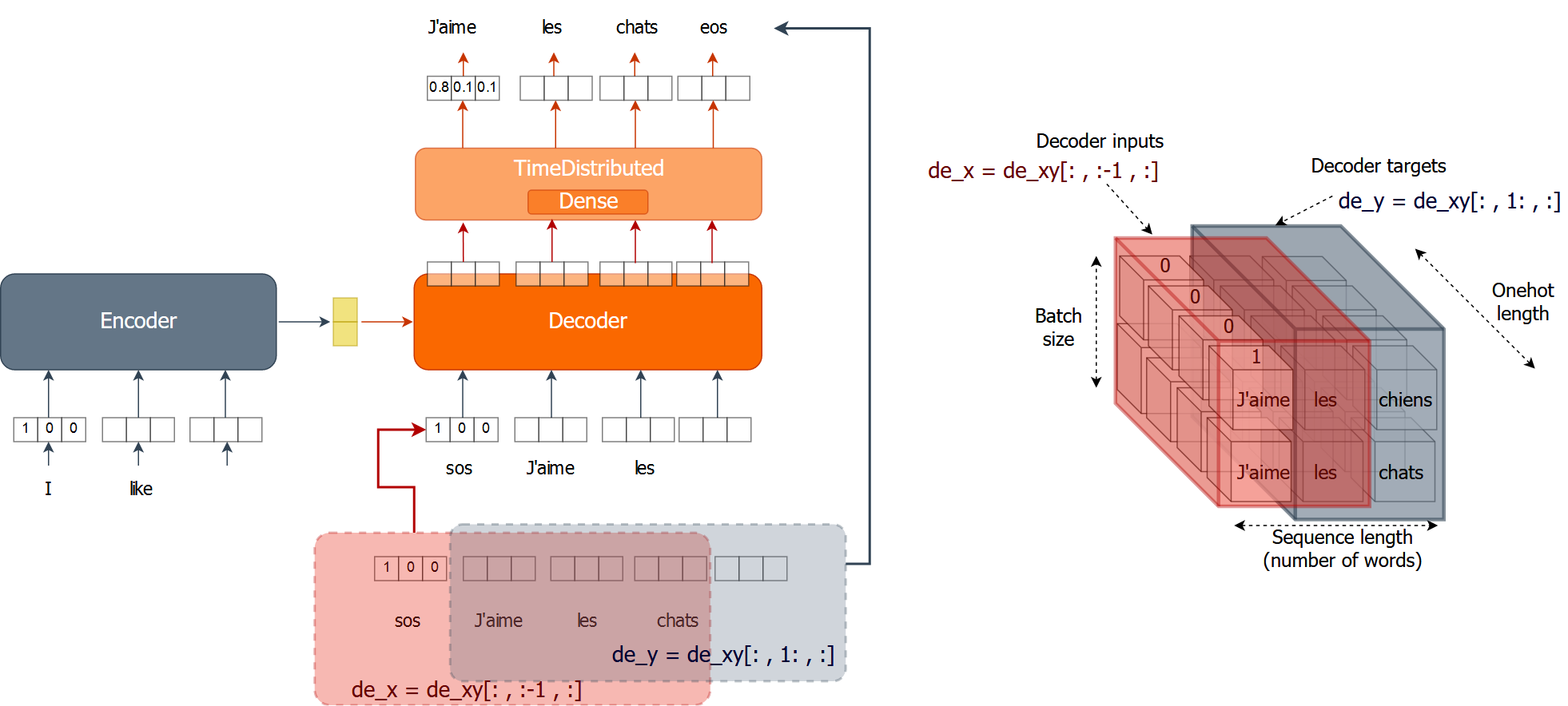

Training the model with Teacher Forcing

    n_epochs, bsize = 3, 250
    for ei in range(n_epochs):
        for i in range(0, data_size, bsize):
            # Encoder inputs, decoder inputs and outputs
            en_x = sents2seqs('source', en_text[i:i+bsize], onehot=True, reverse=True)
            de_xy = sents2seqs('target', fr_text[i:i+bsize], onehot=True)
            # Separating decoder inputs and outputs
            de_x = de_xy[:, :-1, :]
            de_y = de_xy[:, 1:, :]
            # Training and evaluating on a single batch
            nmt_tf.train_on_batch([en_x, de_x], de_y)
            res = nmt_tf.evaluate([en_x, de_x], de_y, batch_size=bsize, verbose=0)
            print("{} => Train Loss:{}, Train Acc: {}".format(ei+1, res[0], res[1]*100.0))

Array slicing in detail

de_x = de_xy[:,:-1,:]

de_y = de_xy[:,1:,:]
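The two slices above can be checked on a small example. The sketch below builds a hypothetical onehot batch with one sentence of four words (the word IDs 0 to 3 are made up for illustration) and shows that de_x drops the last time step while de_y drops the first, so each decoder input is paired with the next word as its target.

```python
import numpy as np

# Hypothetical toy batch: 1 sentence, 4 time steps, vocabulary of 4 words
word_ids = np.array([[0, 1, 2, 3]])
de_xy = np.eye(4)[word_ids]          # onehot, shape (1, 4, 4)

de_x = de_xy[:, :-1, :]              # all time steps except the last
de_y = de_xy[:, 1:, :]               # all time steps except the first

# Convert back to word IDs to make the one-step offset visible
print(de_x.argmax(axis=-1))          # [[0 1 2]]
print(de_y.argmax(axis=-1))          # [[1 2 3]]
```

At every position, the target in de_y is the word that follows the corresponding input in de_x, which is what teacher forcing requires.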

Creating training and validation data

    import numpy as np

    train_size, valid_size = 800, 200
    # Creating data indices
    inds = np.arange(len(en_text))
    np.random.shuffle(inds)
    # Separating train and valid indices
    train_inds = inds[:train_size]
    valid_inds = inds[train_size:train_size+valid_size]
    # Extracting train and valid data
    tr_en = [en_text[ti] for ti in train_inds]
    tr_fr = [fr_text[ti] for ti in train_inds]
    v_en = [en_text[vi] for vi in valid_inds]
    v_fr = [fr_text[vi] for vi in valid_inds]
    print('Training (EN):\n', tr_en[:2], '\nTraining (FR):\n', tr_fr[:2])
    # Print the validation sentences (v_en, v_fr), not the training ones
    print('\nValid (EN):\n', v_en[:2], '\nValid (FR):\n', v_fr[:2])

Valid (EN): ['she saw that rusty blue truck .', 'france is usually snowy during autumn , ...']

Valid (FR): ['elle a vu ce camion bleu rouillé .', 'la france est généralement enneigée en automne ...']
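One property worth checking in a split like this is that the training and validation sets do not share any sentences and that English and French sentences stay aligned. The sketch below repeats the same index-shuffling approach on a small made-up corpus; the placeholder sentences, the sizes, and the fixed random seed are assumptions for illustration.

```python
import numpy as np

# Hypothetical toy corpus standing in for en_text / fr_text
en_text = ["en sentence {}".format(i) for i in range(10)]
fr_text = ["fr sentence {}".format(i) for i in range(10)]
train_size, valid_size = 8, 2

np.random.seed(0)                    # fixed seed only to make the demo repeatable
inds = np.arange(len(en_text))
np.random.shuffle(inds)
train_inds = inds[:train_size]
valid_inds = inds[train_size:train_size + valid_size]

tr_en = [en_text[ti] for ti in train_inds]
tr_fr = [fr_text[ti] for ti in train_inds]

# No index should appear in both sets
overlap = set(train_inds.tolist()) & set(valid_inds.tolist())
# Each English sentence should still be paired with its French counterpart
aligned = all(e.split()[-1] == f.split()[-1] for e, f in zip(tr_en, tr_fr))
print("overlap:", overlap, "aligned:", aligned)
```

Because the same shuffled indices are used for both languages, the sentence pairs remain aligned after the split.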

Training with validation

    for ei in range(n_epochs):
        # Train on every batch of the training data
        for i in range(0, train_size, bsize):
            en_x = sents2seqs('source', tr_en[i:i+bsize], onehot=True, reverse=True)
            de_xy = sents2seqs('target', tr_fr[i:i+bsize], onehot=True)
            de_x, de_y = de_xy[:, :-1, :], de_xy[:, 1:, :]
            nmt_tf.train_on_batch([en_x, de_x], de_y)
        # Evaluate on the validation data once per epoch
        v_en_x = sents2seqs('source', v_en, onehot=True, reverse=True)
        v_de_xy = sents2seqs('target', v_fr, onehot=True)
        v_de_x, v_de_y = v_de_xy[:, :-1, :], v_de_xy[:, 1:, :]
        res = nmt_tf.evaluate([v_en_x, v_de_x], v_de_y, batch_size=valid_size, verbose=0)
        print("Epoch {} => Loss:{}, Val Acc: {}".format(ei+1, res[0], res[1]*100.0))

Epoch 1 => Loss:4.784221172332764, Val Acc: 1.4999999664723873

Epoch 2 => Loss:4.716882228851318, Val Acc: 44.458332657814026

Epoch 3 => Loss:4.63267183303833, Val Acc: 47.333332896232605

Let's train!
