Introduction to Teacher Forcing

Machine Translation with Keras

Thushan Ganegedara

Data Scientist and Author

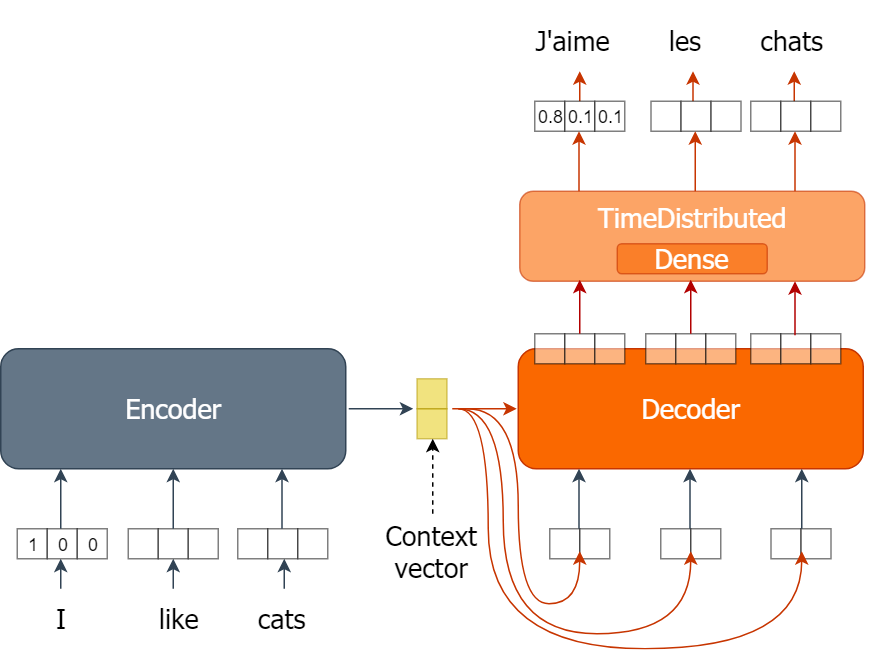

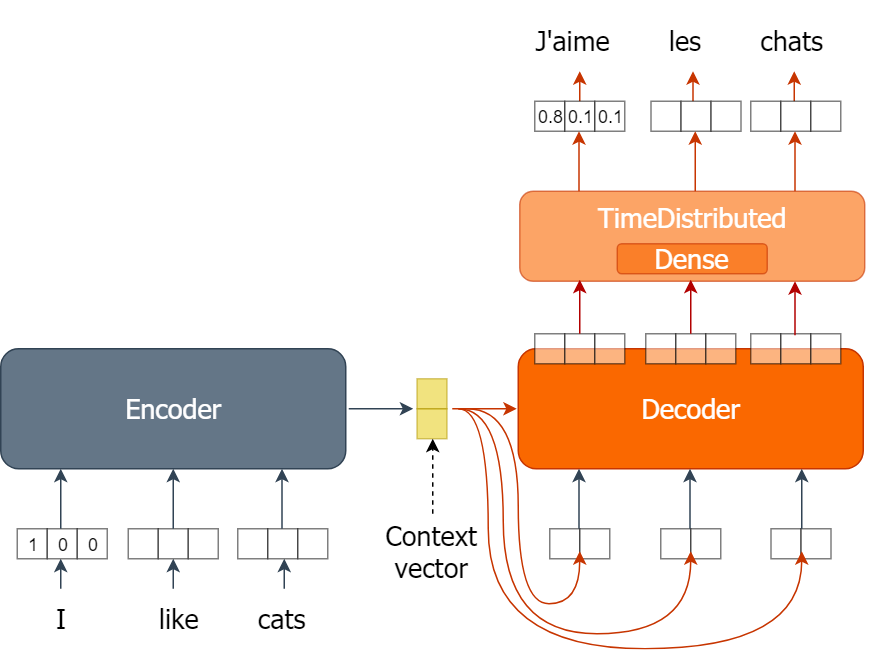

The previous machine translator model

- The previous model
  - Encoder GRU
    - Consumes English words
    - Outputs a context vector
  - Decoder GRU
    - Consumes the context vector
    - Outputs a sequence of GRU outputs
  - Decoder Prediction layer
    - Consumes the sequence of GRU outputs
    - Outputs prediction probabilities for French words
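The three components listed above can be sketched in Keras roughly as follows. This is only an illustration under assumptions: the sizes are made-up toy values, and feeding the context vector to the decoder through `RepeatVector` is one plausible way to wire it up, not something the slide states.

```python
from tensorflow.keras import layers
from tensorflow.keras.models import Model

# Hypothetical toy sizes (not from the slides)
en_len, en_vocab = 5, 12   # English sequence length and vocabulary size
fr_len, fr_vocab = 6, 14   # French sequence length and vocabulary size
hsize = 8                  # GRU hidden size

# Encoder GRU: consumes English words, outputs a context vector (final state)
en_inputs = layers.Input(shape=(en_len, en_vocab))
en_out, en_state = layers.GRU(hsize, return_state=True)(en_inputs)

# Decoder GRU: consumes the context vector at every step (assumed wiring)
de_inputs = layers.RepeatVector(fr_len)(en_state)
de_out = layers.GRU(hsize, return_sequences=True)(de_inputs, initial_state=en_state)

# Decoder prediction layer: probabilities over French words at each step
de_pred = layers.TimeDistributed(
    layers.Dense(fr_vocab, activation='softmax'))(de_out)

nmt = Model(inputs=en_inputs, outputs=de_pred)
```

Note that this model has a single input (the English sentence); the decoder never sees any French words during training.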

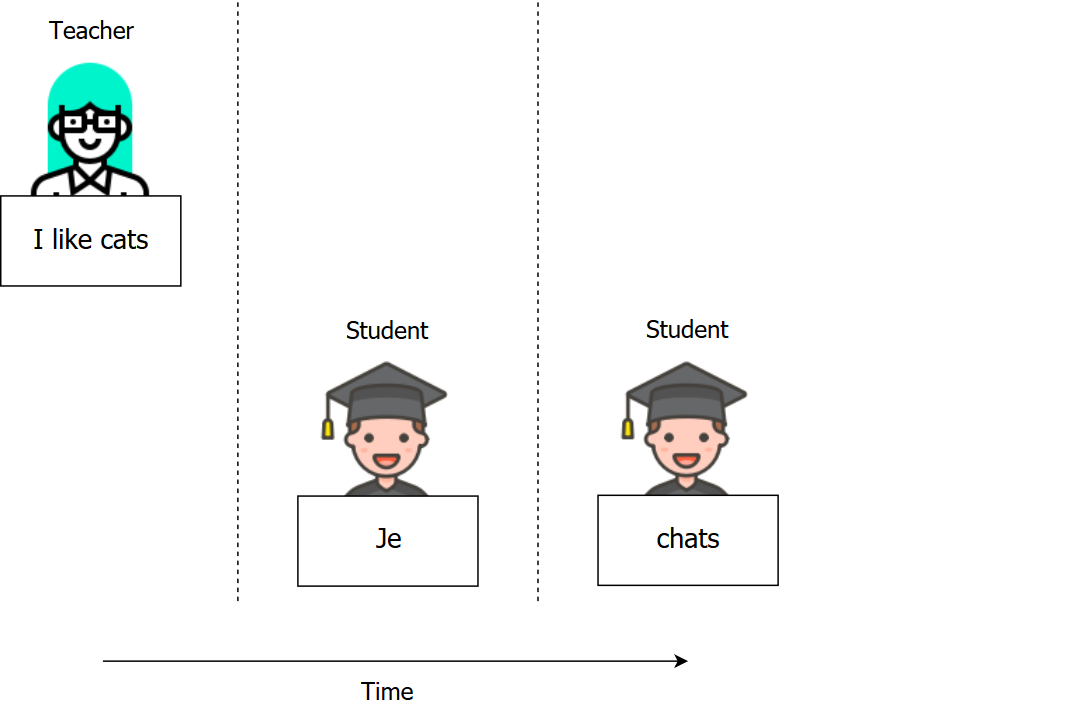

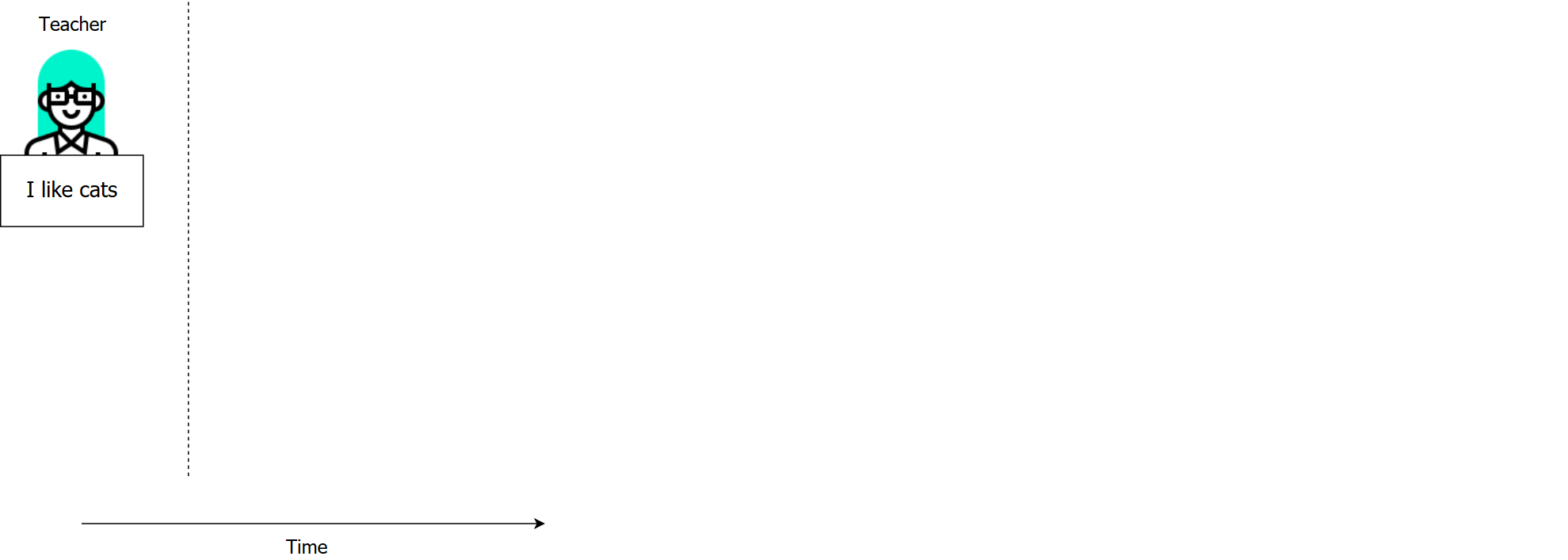

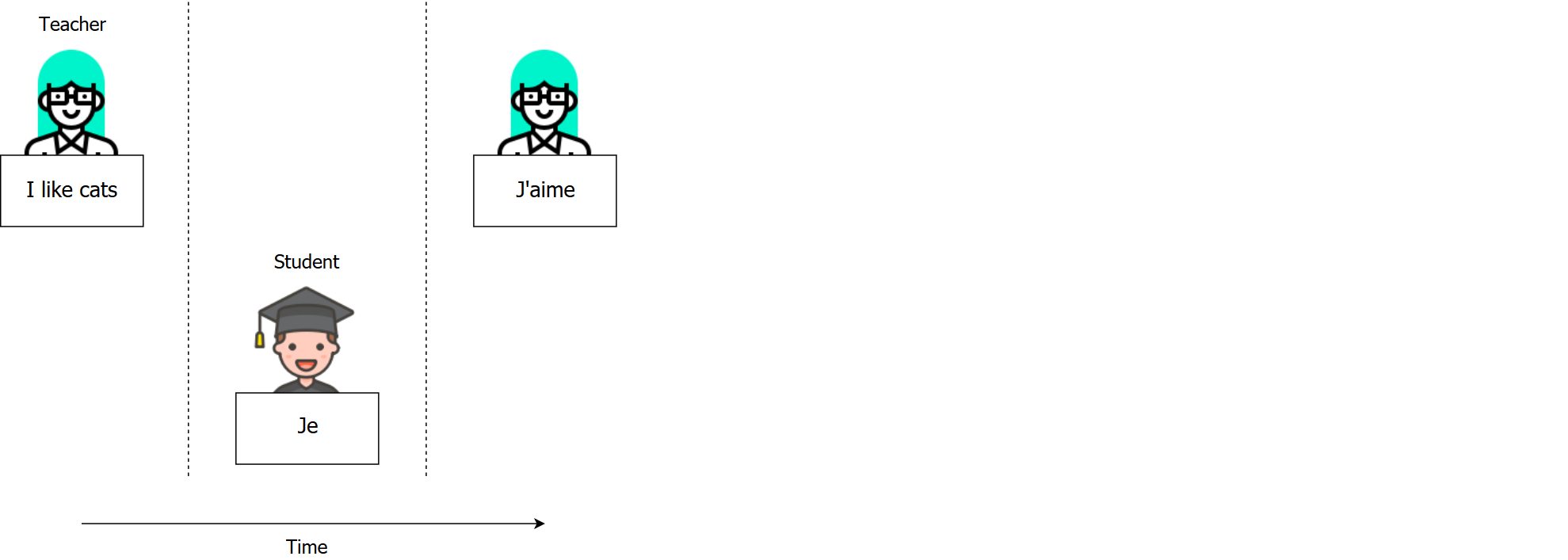

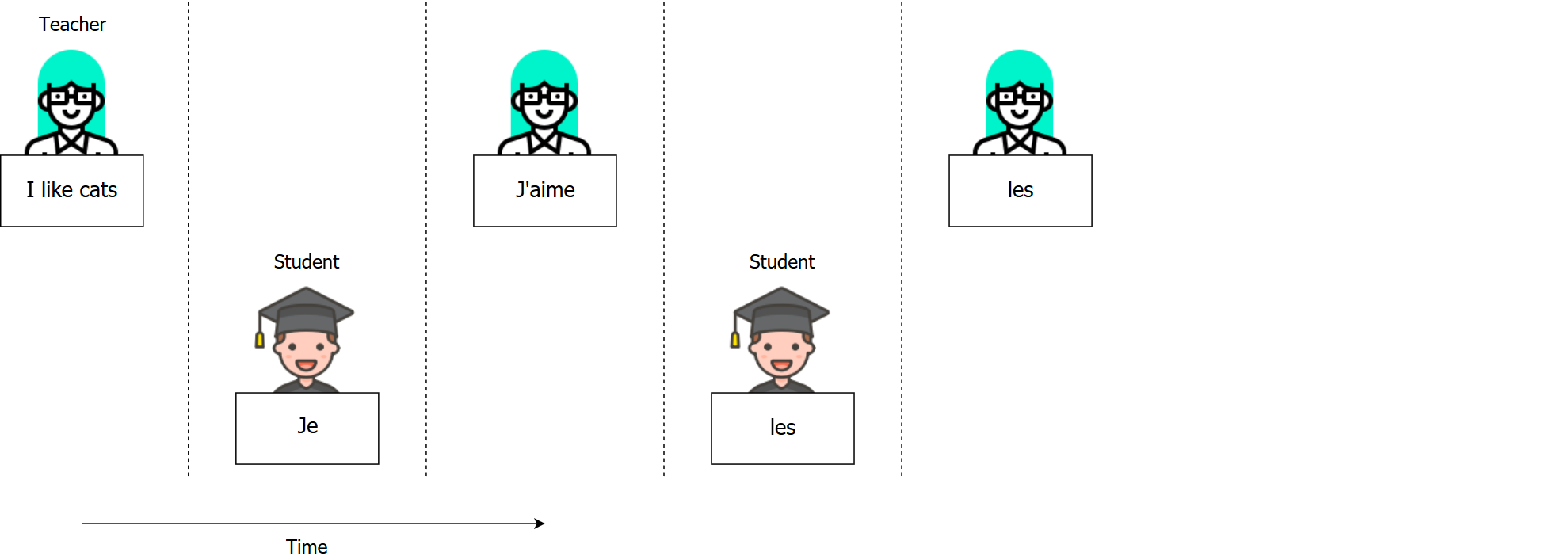

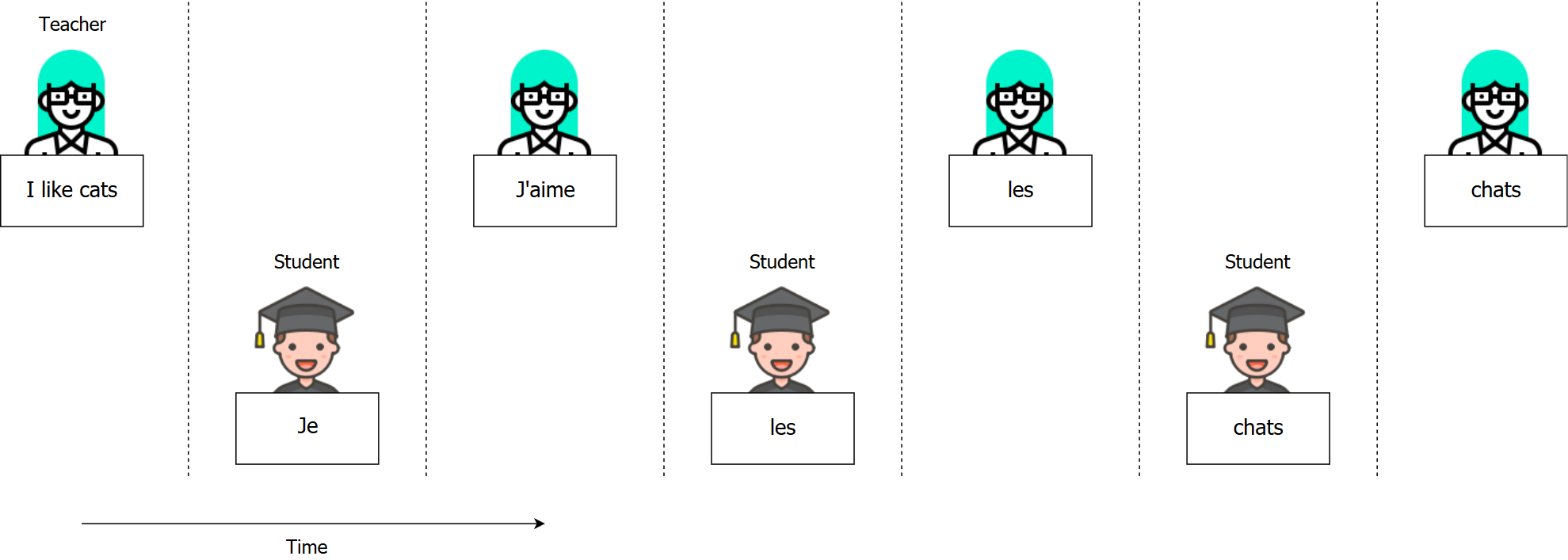

Analogy: Training without Teacher Forcing


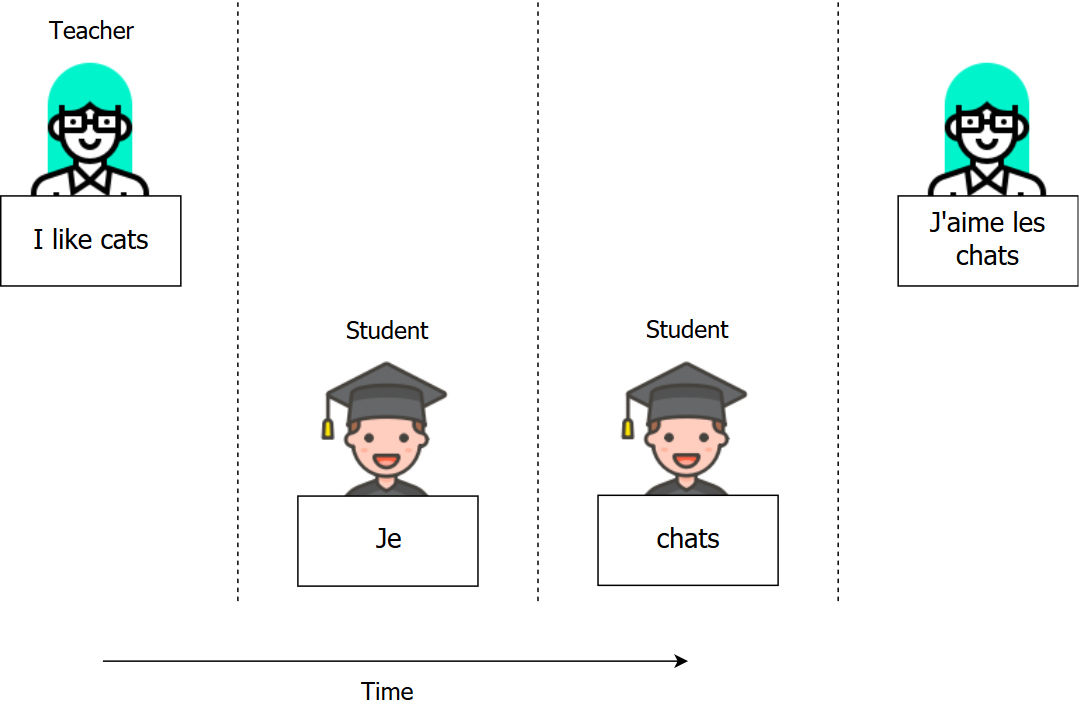

Analogy: Training with Teacher Forcing


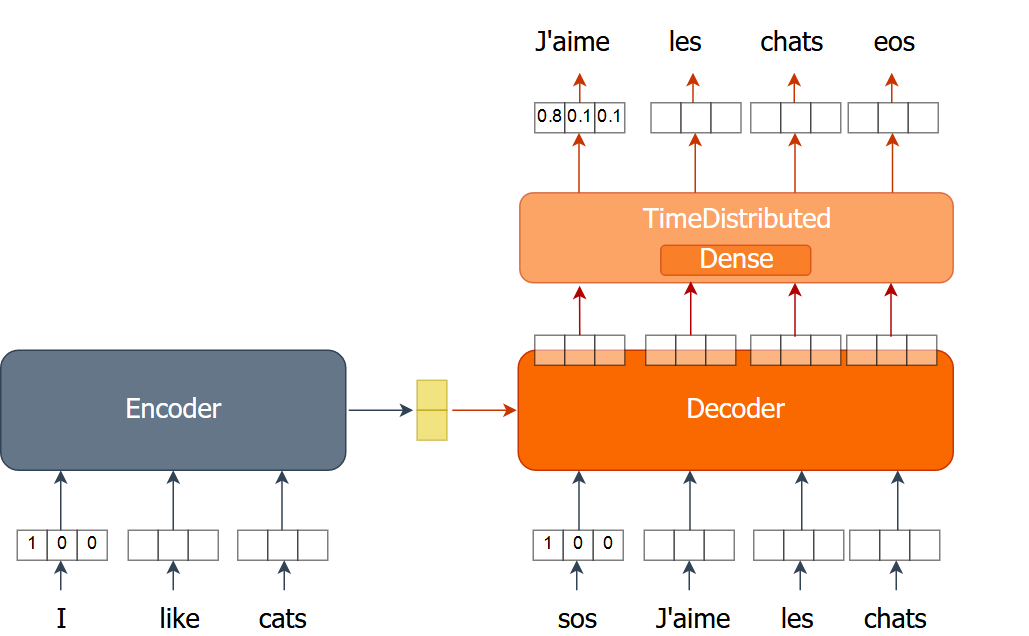

The previous machine translator model

- The previous model

- Teacher-forced model

Implementing the model with Teacher Forcing

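- Encoder

      from tensorflow.keras import layers

      en_inputs = layers.Input(shape=(en_len, en_vocab))
      # return_state=True also returns the final hidden state (the context vector)
      en_gru = layers.GRU(hsize, return_state=True)
      en_out, en_state = en_gru(en_inputs)

- Decoder GRU

      # fr_len-1 time steps: the decoder is fed every French word except the last
      de_inputs = layers.Input(shape=(fr_len-1, fr_vocab))
      de_gru = layers.GRU(hsize, return_sequences=True)
      de_out = de_gru(de_inputs, initial_state=en_state)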

Inputs and outputs

- Encoder input - e.g. I, like, dogs

- Decoder input - e.g. J'aime, les

- Decoder output - e.g. les, chiens
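To make the offset between decoder input and decoder output concrete, here is a small sketch in plain Python using the example sentence above. The variable names are illustrative and not taken from the course code.

```python
# Toy sentence pair from the example, already split into tokens
en_words = ["I", "like", "dogs"]
fr_words = ["J'aime", "les", "chiens"]

encoder_input = en_words
decoder_input = fr_words[:-1]   # every French word except the last
decoder_target = fr_words[1:]   # every French word except the first

# At each step the decoder is given the true previous word
# and is trained to predict the next one.
for step, (given, expected) in enumerate(zip(decoder_input, decoder_target)):
    print(f"step {step}: fed {given}, should predict {expected}")
```

The input and target are the same sequence shifted by one position, which is why the decoder input has `fr_len-1` time steps.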

Implementing the model with Teacher Forcing

- Encoder

      en_inputs = layers.Input(shape=(en_len, en_vocab))
      en_gru = layers.GRU(hsize, return_state=True)
      en_out, en_state = en_gru(en_inputs)

- Decoder GRU

      de_inputs = layers.Input(shape=(fr_len-1, fr_vocab))
      de_gru = layers.GRU(hsize, return_sequences=True)
      de_out = de_gru(de_inputs, initial_state=en_state)

- Decoder Prediction

      de_dense = layers.TimeDistributed(layers.Dense(fr_vocab, activation='softmax'))
      de_pred = de_dense(de_out)

Compiling the model

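    from tensorflow.keras.models import Model

    # Two inputs: the English sentence and the (shifted) French sentence
    nmt_tf = Model(inputs=[en_inputs, de_inputs], outputs=de_pred)
    nmt_tf.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['acc'])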
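As a sanity check, the pieces can be assembled end to end and trained for one epoch on random data. This is a minimal sketch, not the course's training script: the sizes, the `random_onehot` helper, and the random data are all hypothetical stand-ins for the real preprocessed sentences.

```python
import numpy as np
from tensorflow.keras import layers
from tensorflow.keras.models import Model

# Hypothetical toy sizes (not from the slides)
en_len, en_vocab = 5, 12
fr_len, fr_vocab = 6, 14
hsize = 8

# Encoder
en_inputs = layers.Input(shape=(en_len, en_vocab))
en_gru = layers.GRU(hsize, return_state=True)
en_out, en_state = en_gru(en_inputs)

# Decoder GRU, fed the French words and initialised with the encoder state
de_inputs = layers.Input(shape=(fr_len - 1, fr_vocab))
de_gru = layers.GRU(hsize, return_sequences=True)
de_out = de_gru(de_inputs, initial_state=en_state)

# Decoder prediction layer
de_dense = layers.TimeDistributed(layers.Dense(fr_vocab, activation='softmax'))
de_pred = de_dense(de_out)

nmt_tf = Model(inputs=[en_inputs, de_inputs], outputs=de_pred)
nmt_tf.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['acc'])

rng = np.random.default_rng(0)

def random_onehot(n, length, vocab):
    """Hypothetical helper: random one-hot sequences of shape (n, length, vocab)."""
    ids = rng.integers(0, vocab, size=(n, length))
    return np.eye(vocab, dtype='float32')[ids]

en_x = random_onehot(32, en_len, en_vocab)
de_xy = random_onehot(32, fr_len, fr_vocab)
de_x, de_y = de_xy[:, :-1, :], de_xy[:, 1:, :]   # decoder inputs and targets

# The model takes both the English input and the shifted French input
nmt_tf.fit([en_x, de_x], de_y, batch_size=8, epochs=1, verbose=0)
pred = nmt_tf.predict([en_x[:2], de_x[:2]], verbose=0)
print(pred.shape)
```

With random data the accuracy is meaningless; the point is only that the shapes line up and that `fit` receives a list of two inputs.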

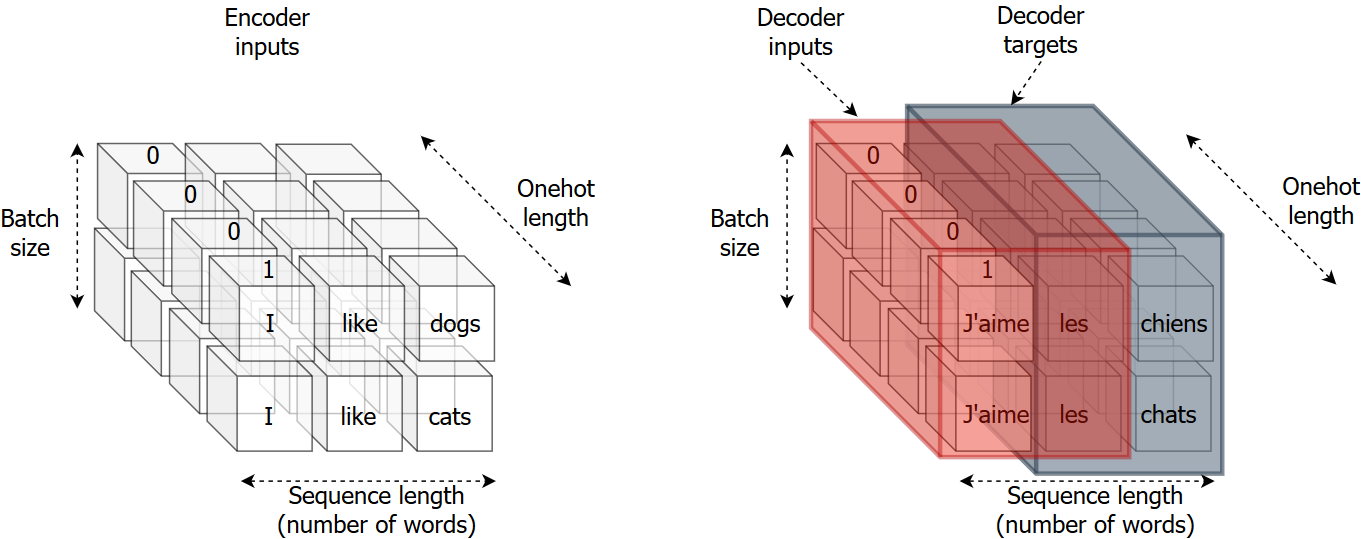

Preprocessing data

Encoder

- Inputs - All English words (one-hot encoded)

      en_x = sents2seqs('source', en_text, onehot=True, reverse=True)

Decoder

    de_xy = sents2seqs('target', fr_text, onehot=True)

- Inputs - All French words except the last word (one-hot encoded)

      de_x = de_xy[:,:-1,:]

- Outputs/Targets - All French words except the first word (one-hot encoded)

      de_y = de_xy[:,1:,:]
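The slicing can be checked on a tiny array. In the sketch below, `toy_onehot` is a hypothetical stand-in for `sents2seqs(..., onehot=True)`; the real helper is defined elsewhere in the course and presumably also handles tokenisation and padding, which this version does not.

```python
import numpy as np

def toy_onehot(sentences, word2id, seq_len):
    """Hypothetical stand-in for sents2seqs(..., onehot=True).

    Returns an array of shape (n_sentences, seq_len, vocab_size).
    """
    out = np.zeros((len(sentences), seq_len, len(word2id)), dtype="float32")
    for i, sent in enumerate(sentences):
        for t, word in enumerate(sent.split()[:seq_len]):
            out[i, t, word2id[word]] = 1.0
    return out

# Made-up toy data and vocabulary
fr_text = ["j'aime les chiens", "j'aime les chats"]
word2id = {"j'aime": 0, "les": 1, "chiens": 2, "chats": 3}
fr_len = 3

de_xy = toy_onehot(fr_text, word2id, fr_len)   # shape (2, 3, 4)
de_x = de_xy[:, :-1, :]   # all words except the last  -> shape (2, 2, 4)
de_y = de_xy[:, 1:, :]    # all words except the first -> shape (2, 2, 4)

print(de_x.shape, de_y.shape)
```

Both slices act on the time axis (the second dimension), so `de_x` and `de_y` keep the same batch size and vocabulary size and differ only by a one-step shift.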

Let's practice!
