Using word embeddings for machine translation

Machine Translation with Keras

Thushan Ganegedara

Data Scientist and Author

Introduction to word embeddings

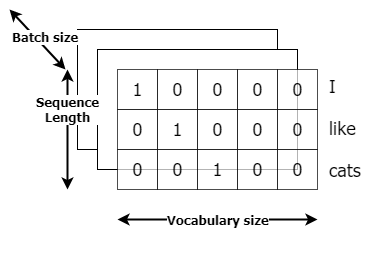

One-hot encoded vectors

cat_vector = np.array([[1, 0, 0, 0, ..., 0]])
dog_vector = np.array([[0, 1, 0, 0, ..., 0]])
window_vector = np.array([[0, 0, 1, 0, ..., 0]])

Word vectors

cat_vector = np.array([[0.393, -0.263, 0.086, 0.011, -0.322, ..., 0.388]])
dog_vector = np.array([[0.399, -0.300, 0.047, -0.059, -0.111, ..., 0.037]])
window_vector = np.array([[0.133, 0.149, -0.307, 0.090, -0.143, ..., 0.526]])
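To connect the two representations: a word vector can be read as one row of an embedding matrix, and multiplying a one-hot row vector by that matrix selects exactly that row. The sketch below assumes a hypothetical three-word vocabulary and a small random matrix `E` standing in for trained vectors; it illustrates the idea and does not reproduce the vectors shown above.

```python
import numpy as np

# Hypothetical toy vocabulary; a word's ID is its position in this list
vocab = ["cat", "dog", "window"]
emb_dim = 4  # toy size; real word vectors are much longer

rng = np.random.default_rng(0)
# Hypothetical embedding matrix: one row (word vector) per vocabulary word
E = rng.normal(size=(len(vocab), emb_dim))

def one_hot(word_id, vocab_size):
    """Return a (1, vocab_size) one-hot row vector."""
    v = np.zeros((1, vocab_size))
    v[0, word_id] = 1.0
    return v

dog_id = vocab.index("dog")
# Multiplying the one-hot vector by E keeps only the row for "dog"...
via_matmul = one_hot(dog_id, len(vocab)) @ E
# ...which equals indexing E directly, i.e. an embedding lookup
via_lookup = E[[dog_id]]

print(np.allclose(via_matmul, via_lookup))  # True
```

Indexing is what embedding layers do in practice, since it avoids building the large one-hot vectors at all.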

Similarity between word vectors

import numpy as np
from sklearn.metrics.pairwise import cosine_similarity

cat_vector = np.array([[0.393,-0.263,0.086,0.011,-0.322,...,0.388]])

dog_vector = np.array([[0.399,-0.300,0.047,-0.059,-0.111,...,0.037]])

window_vector = np.array([[0.133,0.149,-0.307,0.090,-0.143,...,0.526]])

cosine_similarity(cat_vector, dog_vector)

0.601

cosine_similarity(cat_vector, window_vector)

0.323
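Cosine similarity is the dot product of two vectors divided by the product of their lengths; sklearn's `cosine_similarity` computes this for every pair of rows and returns a 2-D array, which is why the vectors above are shaped `[[...]]`. The minimal NumPy sketch below (the helper `cosine_sim` and all values are hypothetical) also shows why one-hot vectors cannot express similarity: two different words never share a non-zero position, so their cosine similarity is always 0.

```python
import numpy as np

def cosine_sim(a, b):
    """Cosine similarity of two 1-D vectors: dot product over product of norms."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

# One-hot vectors of two different words have no overlapping non-zero entry,
# so their dot product, and hence their cosine similarity, is 0
cat_oh = np.array([1.0, 0.0, 0.0])
dog_oh = np.array([0.0, 1.0, 0.0])
print(cosine_sim(cat_oh, dog_oh))  # 0.0

# Hypothetical dense vectors (made-up values, not real GloVe vectors)
a = np.array([0.4, -0.3, 0.1])
b = np.array([0.4, -0.2, 0.0])
print(round(cosine_sim(a, b), 3))
```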

1 GloVe project: https://nlp.stanford.edu/projects/glove/

Implementing embeddings for the encoder

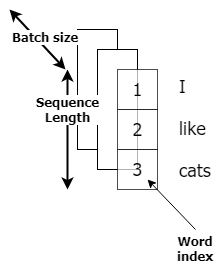

- Without an embedding layer

en_inputs = Input(shape=(en_len, en_vocab))  # each word is a one-hot vector of length en_vocab


- With an embedding layer

en_inputs = Input(shape=(en_len,))  # each word is a single integer ID
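The difference between the two inputs is easiest to see in the array shapes. A minimal sketch with hypothetical sizes (`batch`, `en_len` and `en_vocab` are chosen only for illustration): without an embedding layer each word arrives as a one-hot vector, so the input holds `en_vocab` times more numbers than the sequence of word IDs that an embedding layer consumes.

```python
import numpy as np

# Hypothetical sizes, chosen only for illustration
batch, en_len, en_vocab = 2, 5, 1000

rng = np.random.default_rng(0)
# With an embedding layer: each sentence is a sequence of word IDs
ids = rng.integers(0, en_vocab, size=(batch, en_len))

# Without an embedding layer: each word ID becomes a one-hot vector of length en_vocab
onehot = np.eye(en_vocab)[ids]

print(ids.shape)                # (2, 5)
print(onehot.shape)             # (2, 5, 1000)
print(onehot.size // ids.size)  # 1000, i.e. en_vocab times more values
```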

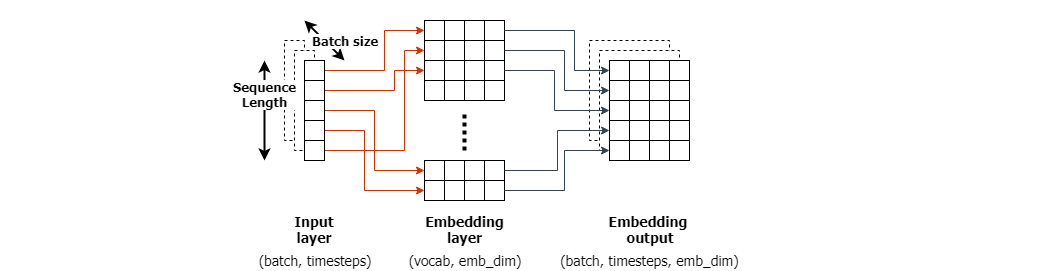


en_inputs = Input(shape=(en_len,))

# Map each of the en_vocab word IDs to a trainable 96-dimensional word vector
en_emb = Embedding(en_vocab, 96, input_length=en_len)(en_inputs)
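The `Embedding` layer is a trainable lookup table with one 96-dimensional row per vocabulary word, so a batch of shape `(batch, en_len)` becomes `(batch, en_len, 96)`. The sketch below assumes TensorFlow 2 is installed and uses made-up sizes; it omits `input_length`, which recent Keras releases deprecate.

```python
import numpy as np
import tensorflow as tf

# Hypothetical sizes; 96 matches the embedding size used on the slide
en_len, en_vocab, emb_dim = 10, 50, 96

emb_layer = tf.keras.layers.Embedding(en_vocab, emb_dim)
ids = np.random.randint(0, en_vocab, size=(2, en_len))  # batch of 2 ID sequences

vectors = emb_layer(ids)
print(vectors.shape)  # (2, 10, 96)

# Each output vector is the row of the layer's weight matrix for that word ID
weights = emb_layer.get_weights()[0]  # shape (en_vocab, emb_dim)
print(np.allclose(vectors.numpy()[0, 0], weights[ids[0, 0]]))  # True
```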

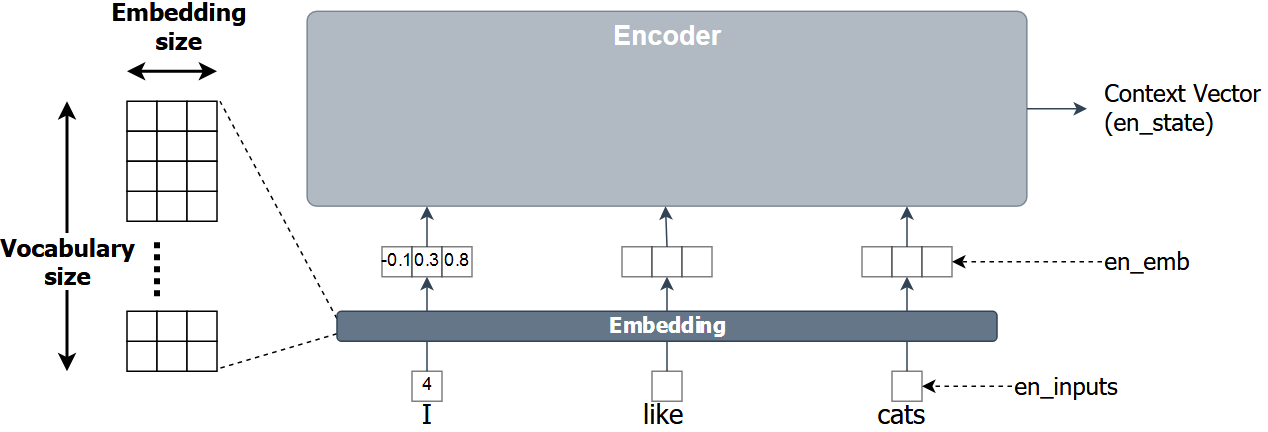

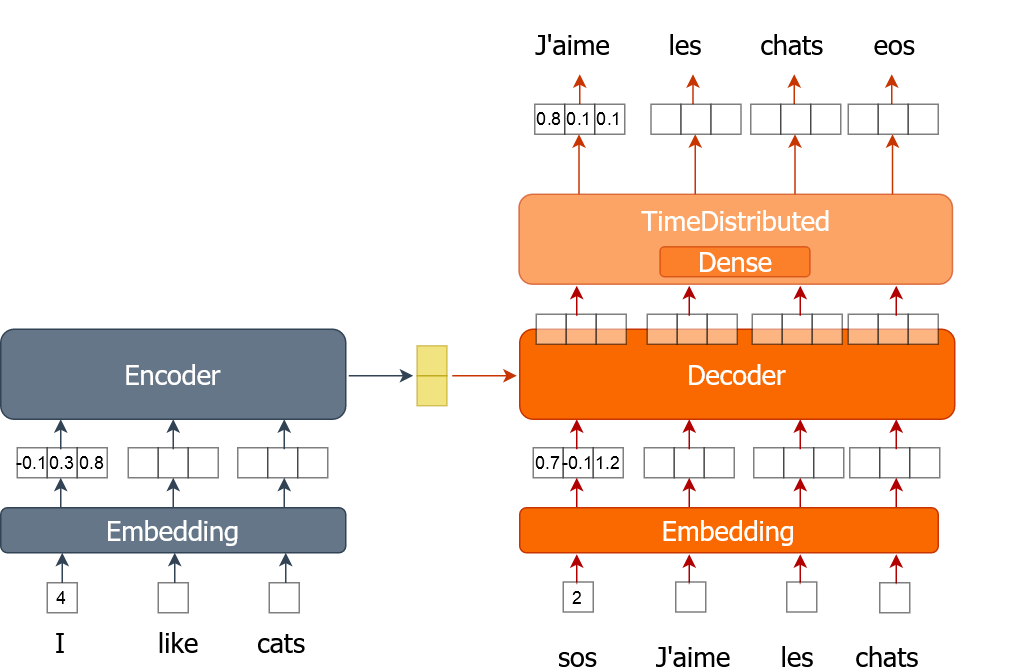

Implementing the encoder with embedding

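from tensorflow.keras.layers import Input, Embedding, GRU

en_inputs = Input(shape=(en_len,))

en_emb = Embedding(en_vocab, 96, input_length=en_len)(en_inputs)

# return_state=True also returns the GRU's final hidden state, used to initialize the decoder
en_out, en_state = GRU(hsize, return_state=True)(en_emb)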
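One detail worth checking: with `return_state=True` but without `return_sequences=True`, the GRU returns its last output and its final hidden state, and for a GRU these are the same vector. The sketch wraps the encoder in a `Model` to inspect this; TensorFlow 2 and the sizes `en_len`, `en_vocab` and `hsize` are assumptions for illustration.

```python
import numpy as np
from tensorflow.keras.layers import Input, Embedding, GRU
from tensorflow.keras.models import Model

# Hypothetical sizes for illustration only
en_len, en_vocab, hsize = 10, 50, 48

en_inputs = Input(shape=(en_len,))
en_emb = Embedding(en_vocab, 96)(en_inputs)
# return_state=True makes the GRU also return its final hidden state
en_out, en_state = GRU(hsize, return_state=True)(en_emb)
encoder = Model(en_inputs, [en_out, en_state])

ids = np.random.randint(0, en_vocab, size=(3, en_len))
out, state = encoder.predict(ids, verbose=0)
print(out.shape, state.shape)  # (3, 48) (3, 48)
# Without return_sequences, the GRU's output is its last hidden state
print(np.allclose(out, state))  # True
```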

Implementing the decoder with embedding

de_inputs = Input(shape=(fr_len-1,))  # target word IDs without the last word

de_emb = Embedding(fr_vocab, 96, input_length=fr_len-1)(de_inputs)

# The decoder GRU starts from the encoder's final state and returns an output at every step
de_out, _ = GRU(hsize, return_sequences=True, return_state=True)(
    de_emb, initial_state=en_state)
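The snippets stop at the decoder GRU, while the training loop calls a model named `nmt_emb`. One possible way to finish it is sketched below with hypothetical sizes. The output layer (a `TimeDistributed` softmax `Dense` over the French vocabulary), the `adam` optimizer and the categorical cross-entropy loss are assumptions, not taken from the slides; accuracy is tracked so that `evaluate` returns `[loss, accuracy]`, matching the `res[0]` and `res[1]` used in the loop.

```python
import numpy as np
from tensorflow.keras.layers import Input, Embedding, GRU, Dense, TimeDistributed
from tensorflow.keras.models import Model

# Hypothetical sizes for illustration only
en_len, en_vocab = 10, 50
fr_len, fr_vocab = 12, 60
hsize = 48

# Encoder: word IDs -> embeddings -> final GRU state
en_inputs = Input(shape=(en_len,))
en_emb = Embedding(en_vocab, 96)(en_inputs)
en_out, en_state = GRU(hsize, return_state=True)(en_emb)

# Decoder: target word IDs (minus the last word), initialized with the encoder state
de_inputs = Input(shape=(fr_len - 1,))
de_emb = Embedding(fr_vocab, 96)(de_inputs)
de_out, _ = GRU(hsize, return_sequences=True, return_state=True)(
    de_emb, initial_state=en_state)

# Assumed output layer: a softmax over the French vocabulary at every time step
de_pred = TimeDistributed(Dense(fr_vocab, activation='softmax'))(de_out)

nmt_emb = Model(inputs=[en_inputs, de_inputs], outputs=de_pred)
nmt_emb.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

en_x = np.random.randint(0, en_vocab, size=(4, en_len))
de_x = np.random.randint(0, fr_vocab, size=(4, fr_len - 1))
print(nmt_emb.predict([en_x, de_x], verbose=0).shape)  # (4, 11, 60)
```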

Training the model

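for ei in range(3):
    for i in range(0, train_size, bsize):
        # Encoder inputs: source word IDs (not one-hot), with the source sentences reversed
        en_x = sents2seqs('source', tr_en[i:i+bsize], onehot=False, reverse=True)
        # Decoder inputs: target word IDs without the last word
        de_xy = sents2seqs('target', tr_fr[i:i+bsize], onehot=False)
        de_x = de_xy[:, :-1]
        # Decoder targets: one-hot target words without the first word
        de_xy_oh = sents2seqs('target', tr_fr[i:i+bsize], onehot=True)
        de_y = de_xy_oh[:, 1:, :]
        nmt_emb.train_on_batch([en_x, de_x], de_y)
    # After each epoch, report loss and accuracy on the last batch
    res = nmt_emb.evaluate([en_x, de_x], de_y, batch_size=bsize, verbose=0)
    print("{} => Loss:{}, Train Acc: {}".format(ei+1, res[0], res[1]*100.0))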
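The slicing in the loop implements teacher forcing: the decoder reads the target sentence without its last word (hence the `fr_len-1` input length) and learns to predict the same sentence shifted by one word. The toy sketch below replaces `sents2seqs`, the course helper that is not defined in this section, with a hand-written array of word IDs; the values, the vocabulary size and the choice of 0 as padding are hypothetical.

```python
import numpy as np

# Hypothetical padded batch of target word IDs (0 = padding), standing in for
# the output of sents2seqs('target', ..., onehot=False)
de_xy = np.array([[5, 8, 2, 9, 0],
                  [5, 3, 7, 9, 0]])
fr_vocab = 10  # hypothetical vocabulary size

# Decoder input: every word except the last one
de_x = de_xy[:, :-1]
# Decoder target: every word except the first one, one-hot encoded
de_xy_oh = np.eye(fr_vocab)[de_xy]
de_y = de_xy_oh[:, 1:, :]

print(de_x.shape, de_y.shape)  # (2, 4) (2, 4, 10)
# At step t the decoder sees word t and must predict word t+1
print(de_x[0], de_y[0].argmax(axis=-1))  # [5 8 2 9] [8 2 9 0]
```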
