Implementing the full encoder-decoder model

Machine Translation with Keras

Thushan Ganegedara

Data Scientist and Author

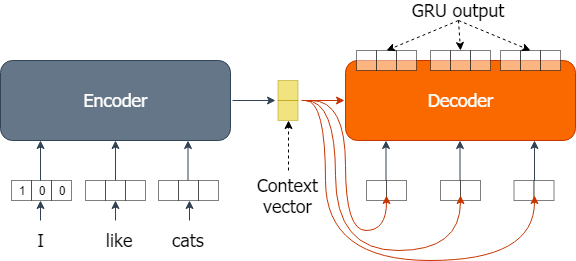

What you implemented so far

- The encoder consumes the English (i.e., source) input

- The encoder produces the context vector

- The decoder consumes the context vector, repeated once per output time step

- The decoder outputs the GRU output sequence

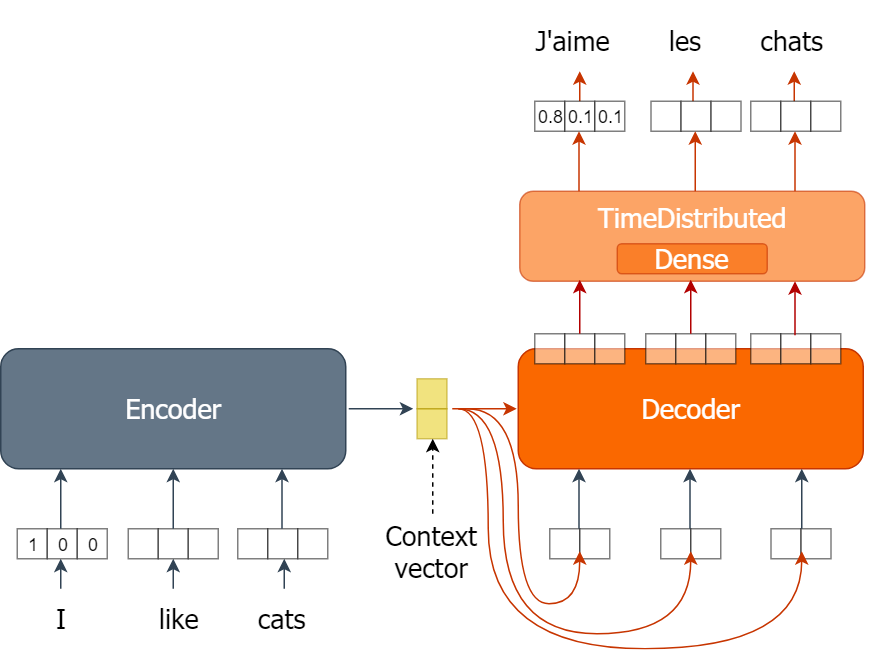

Top part of the decoder

- Implemented with TimeDistributed and Dense layers.
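The following is a minimal numpy sketch of what a softmax `Dense` layer wrapped in `TimeDistributed` computes. It illustrates the arithmetic only and is not Keras's own implementation: the same kernel `W` and bias `b` are applied to the decoder output at every time step. The sizes, the random weights, and the `softmax` helper are assumptions made for this example.

```python
import numpy as np

# Hypothetical sizes for this sketch
batch, fr_len, hsize, fr_vocab = 2, 4, 3, 6
rng = np.random.default_rng(0)

de_out = rng.normal(size=(batch, fr_len, hsize))  # stand-in for the decoder GRU output
W = rng.normal(size=(hsize, fr_vocab))            # one Dense kernel shared by all steps
b = rng.normal(size=fr_vocab)                     # one Dense bias shared by all steps

def softmax(z):
    # Subtract the row maximum for numerical stability
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

# Apply the same Dense + softmax to each time step separately
per_step = np.stack([softmax(de_out[:, t] @ W + b) for t in range(fr_len)], axis=1)

# The same computation for all time steps in one batched matrix product
all_steps = softmax(de_out @ W + b)

print(per_step.shape)                            # (2, 4, 6)
print(np.allclose(per_step, all_steps))          # True
print(np.allclose(all_steps.sum(axis=-1), 1.0))  # True
```

Because the weights are shared, the per-step loop and the batched product agree, and every time step ends up with a probability distribution over the French vocabulary.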

Implementing the full model

Encoder

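from tensorflow import keras
from tensorflow.keras.layers import Input, GRU, RepeatVector
en_inputs = Input(shape=(en_len, en_vocab))
en_gru = GRU(hsize, return_state=True)
en_out, en_state = en_gru(en_inputs)  # en_state is the context vector

Decoder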

de_inputs = RepeatVector(fr_len)(en_state)
de_gru = GRU(hsize, return_sequences=True)
de_out = de_gru(de_inputs, initial_state=en_state)
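A minimal sketch of the encoder and decoder GRU, assuming TensorFlow 2 with its bundled Keras, made-up sizes, and random one-hot input. The hypothetical `probe` model exists only to expose the intermediate tensors so their shapes can be inspected.

```python
import numpy as np
from tensorflow import keras

# Hypothetical sizes chosen only for this sketch
en_len, en_vocab, fr_len, hsize = 5, 10, 7, 8

# Encoder: the final GRU state is the context vector
en_inputs = keras.layers.Input(shape=(en_len, en_vocab))
en_out, en_state = keras.layers.GRU(hsize, return_state=True)(en_inputs)

# Decoder input: the context vector copied once per French time step
de_inputs = keras.layers.RepeatVector(fr_len)(en_state)
de_gru = keras.layers.GRU(hsize, return_sequences=True)
de_out = de_gru(de_inputs, initial_state=en_state)

# Hypothetical probe model that returns the intermediate tensors
probe = keras.models.Model(inputs=en_inputs,
                           outputs=[en_out, en_state, de_inputs, de_out])

rng = np.random.default_rng(0)
ids = rng.integers(0, en_vocab, size=(3, en_len))  # made-up word IDs
x = np.eye(en_vocab, dtype="float32")[ids]         # one-hot, (3, en_len, en_vocab)

out, state, repeated, seq = probe.predict(x, verbose=0)
print(state.shape, repeated.shape, seq.shape)  # (3, 8) (3, 7, 8) (3, 7, 8)

# Without return_sequences, the GRU output is its last hidden state
print(np.allclose(out, state))  # True
# Every decoder input step is a copy of the context vector
print(np.allclose(repeated, state[:, None, :]))  # True
```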

Implementing the full model

- The softmax prediction layer

de_dense = keras.layers.Dense(fr_vocab, activation='softmax')

de_dense_time = keras.layers.TimeDistributed(de_dense)

de_pred = de_dense_time(de_out)  # (batch, fr_len, fr_vocab) probabilities
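The prediction layer can be checked on its own with a sketch like the one below. A random tensor stands in for the decoder GRU output `de_out`; the sizes and the hypothetical `head` model are assumptions for illustration.

```python
import numpy as np
from tensorflow import keras

# Hypothetical sizes for this sketch
fr_len, hsize, fr_vocab = 7, 8, 12

# Stand-in for the decoder GRU output de_out: (batch, fr_len, hsize)
de_out = keras.layers.Input(shape=(fr_len, hsize))

de_dense = keras.layers.Dense(fr_vocab, activation='softmax')
de_dense_time = keras.layers.TimeDistributed(de_dense)
de_pred = de_dense_time(de_out)  # (batch, fr_len, fr_vocab)

head = keras.models.Model(inputs=de_out, outputs=de_pred)

h = np.random.default_rng(2).normal(size=(2, fr_len, hsize)).astype("float32")
probs = head.predict(h, verbose=0)
print(probs.shape)  # (2, 7, 12)

# Each time step holds a probability distribution over the French vocabulary
print(np.allclose(probs.sum(axis=-1), 1.0, atol=1e-5))  # True

# The most likely word ID per step gives one predicted ID sequence per sentence
word_ids = probs.argmax(axis=-1)
print(word_ids.shape)  # (2, 7)
```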

Compiling the model

Defining the full model

nmt = keras.models.Model(inputs=en_inputs, outputs=de_pred)
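One way to sanity-check the assembled model is to compare `count_params()` with a hand count. This sketch assumes Keras's default GRU setting `reset_after=True`, under which a GRU with `h` units and `n_in` input features has a kernel of shape `(n_in, 3h)`, a recurrent kernel of shape `(h, 3h)`, and a bias of shape `(2, 3h)`. The helper `gru_params` and all sizes are hypothetical.

```python
from tensorflow import keras

# Hypothetical sizes for this sketch
en_len, en_vocab = 5, 10
fr_len, fr_vocab = 7, 12
hsize = 8

en_inputs = keras.layers.Input(shape=(en_len, en_vocab))
en_out, en_state = keras.layers.GRU(hsize, return_state=True)(en_inputs)

de_inputs = keras.layers.RepeatVector(fr_len)(en_state)
de_out = keras.layers.GRU(hsize, return_sequences=True)(de_inputs, initial_state=en_state)
de_pred = keras.layers.TimeDistributed(
    keras.layers.Dense(fr_vocab, activation='softmax'))(de_out)

nmt = keras.models.Model(inputs=en_inputs, outputs=de_pred)
print(tuple(nmt.outputs[0].shape))  # (None, 7, 12)

def gru_params(n_in, h):
    # kernel (n_in, 3h) + recurrent kernel (h, 3h) + bias (2, 3h), assuming reset_after=True
    return 3 * h * (n_in + h) + 6 * h

# Encoder GRU + decoder GRU + one Dense layer shared across all time steps
expected = (gru_params(en_vocab, hsize) + gru_params(hsize, hsize)
            + hsize * fr_vocab + fr_vocab)
print(expected, nmt.count_params() == expected)  # 1020 True
```

With these sizes the encoder GRU has 480 parameters, the decoder GRU 432, and the Dense layer 108. `TimeDistributed` adds none of its own, because it reuses one Dense layer at every step.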

Compiling the model

nmt.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['acc'])
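The short training run sketched below, under assumed sizes, shows what `categorical_crossentropy` expects: one-hot French targets with the same `(batch, fr_len, fr_vocab)` shape as the softmax output. The data is random, so the run only demonstrates that the pipeline executes. It does not show that the model learns to translate.

```python
import numpy as np
from tensorflow import keras

# Hypothetical sizes and random data for this sketch; no real corpus is used
en_len, en_vocab, fr_len, fr_vocab, hsize = 5, 10, 7, 12, 8
n = 32
rng = np.random.default_rng(3)

def one_hot(ids, vocab):
    return np.eye(vocab, dtype="float32")[ids]

en_x = one_hot(rng.integers(0, en_vocab, size=(n, en_len)), en_vocab)  # (n, en_len, en_vocab)
fr_y = one_hot(rng.integers(0, fr_vocab, size=(n, fr_len)), fr_vocab)  # (n, fr_len, fr_vocab)

# Same architecture as in this lesson
en_inputs = keras.layers.Input(shape=(en_len, en_vocab))
en_out, en_state = keras.layers.GRU(hsize, return_state=True)(en_inputs)
de_inputs = keras.layers.RepeatVector(fr_len)(en_state)
de_out = keras.layers.GRU(hsize, return_sequences=True)(de_inputs, initial_state=en_state)
de_pred = keras.layers.TimeDistributed(
    keras.layers.Dense(fr_vocab, activation='softmax'))(de_out)
nmt = keras.models.Model(inputs=en_inputs, outputs=de_pred)

# Targets are one-hot and shaped exactly like the model's softmax output
nmt.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['acc'])
history = nmt.fit(en_x, fr_y, batch_size=16, epochs=2, verbose=0)
print(history.history["loss"])  # one loss value per epoch
```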

Let's practice!
