Dense and TimeDistributed layers

Machine Translation with Keras

Thushan Ganegedara

Data Scientist and Author

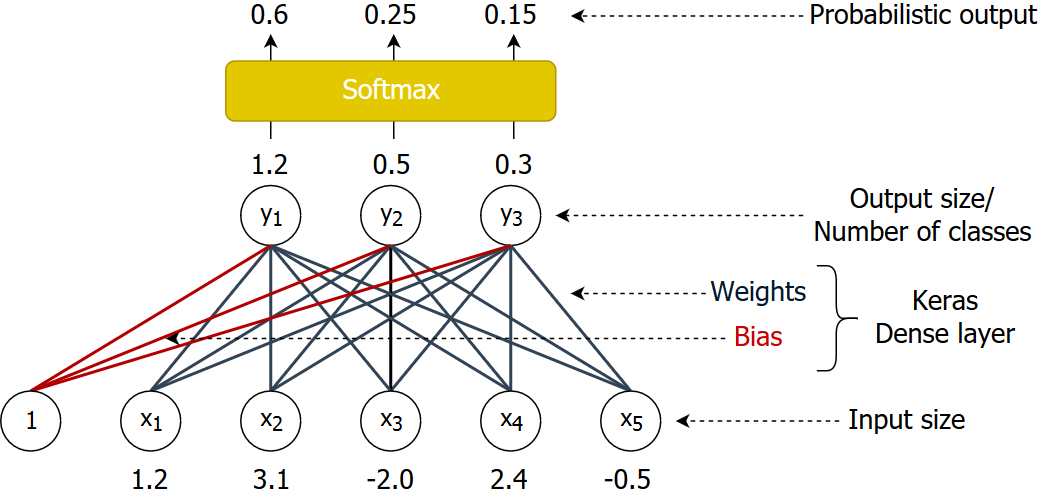

Introduction to the Dense layer

- Takes an input vector and converts it into a probabilistic prediction.

- y = Weights.x + Bias, followed by an activation (softmax here) that turns the scores into probabilities
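The formula above can be sketched in plain NumPy for a single input vector. This is only an illustration: the weight and bias values are made up, and the `softmax` helper is written out by hand rather than taken from Keras. It follows the Keras convention of a kernel with shape (input size, units), so the product is written as `x @ W`.

```python
import numpy as np

def softmax(z):
    # Subtract the max for numerical stability; the result is unchanged.
    e = np.exp(z - np.max(z, axis=-1, keepdims=True))
    return e / np.sum(e, axis=-1, keepdims=True)

# Hypothetical weights and bias for a layer with 3 inputs and 3 outputs.
W = np.array([[0.2, -0.1, 0.0],
              [0.0, 0.3, -0.2],
              [0.1, 0.0, 0.1]])
b = np.array([0.0, 0.1, -0.1])

x = np.array([1.0, 6.0, 8.0])   # one input vector
y = softmax(x @ W + b)          # probabilities over the 3 classes

print(y, y.sum())
```

Whatever values the weights take, the softmax guarantees that the entries of `y` are positive and sum to 1.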

Understanding the Dense layer

Defining and using a Dense layer

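from tensorflow.keras.layers import Input, Dense
from tensorflow.keras.models import Model
dense = Dense(3, activation='softmax')  # 3 output classes
inp = Input(shape=(3,))  # input vectors of size 3
pred = dense(inp)
model = Model(inputs=inp, outputs=pred)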

Defining a Dense layer with custom initialization

from tensorflow.keras.layers import Dense
from tensorflow.keras.initializers import RandomNormal
init = RandomNormal(mean=0.0, stddev=0.05, seed=6000)
dense = Dense(3, activation='softmax',
              kernel_initializer=init, bias_initializer=init)
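To make concrete what such an initializer fills in, here is a rough NumPy stand-in, assuming a layer with 3 inputs and 3 units. The names `kernel`, `bias`, `input_size`, and `units` are illustrative, and NumPy's generator is not the one Keras uses, so the actual numbers will differ from those produced by `RandomNormal`.

```python
import numpy as np

input_size, units = 3, 3

# NumPy stand-in for RandomNormal(mean=0.0, stddev=0.05, seed=6000).
# NumPy and Keras use different generators, so the values will not match.
rng = np.random.default_rng(6000)
kernel = rng.normal(loc=0.0, scale=0.05, size=(input_size, units))
bias = rng.normal(loc=0.0, scale=0.05, size=(units,))

print(kernel.shape, bias.shape)
```

The kernel has one row per input feature and one column per output unit, while the bias has one entry per unit.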

Inputs and outputs of the Dense layer

- Dense softmax layer
  - Takes a (batch size, input size) array
    - e.g. x = [[1, 6, 8], [8, 9, 10]] # a 2x3 array
  - Produces a (batch size, num classes) array
    - e.g. Number of classes = 4
    - e.g. y = [[0.1, 0.3, 0.4, 0.2], [0.2, 0.5, 0.1, 0.2]] # a 2x4 array
  - Output for each sample is a probability distribution over the classes
    - Each row sums to 1 (summing across the class columns)
    - Can get the class for each sample using np.argmax(y, axis=-1)
      - e.g. np.argmax(y, axis=-1) produces [2, 1]
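The two properties of the output listed above can be checked directly on the example array. This is a small NumPy sketch; `row_sums` is just an illustrative variable name.

```python
import numpy as np

# (batch size, num classes) = (2, 4)
y = np.array([[0.1, 0.3, 0.4, 0.2],
              [0.2, 0.5, 0.1, 0.2]])

row_sums = y.sum(axis=-1)         # one sum per sample, each close to 1
classes = np.argmax(y, axis=-1)   # most probable class per sample

print(row_sums)
print(classes)
```

Using `axis=-1` means the reduction always runs over the class dimension, whatever the number of leading dimensions.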

Understanding the TimeDistributed layer

- Allows Dense layers to process time-series inputs by applying the same Dense layer to every time step

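from tensorflow.keras.layers import Input, Dense, TimeDistributed
from tensorflow.keras.models import Model
dense_time = TimeDistributed(Dense(3, activation='softmax'))
inp = Input(shape=(2, 3))  # sequence length 2, input size 3
pred = dense_time(inp)
model = Model(inputs=inp, outputs=pred)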

Inputs and outputs of the TimeDistributed layer

- Takes a (batch size, sequence length, input size) array

x = [[[1, 6], [8, 2], [1, 2]],
     [[8, 9], [10, 8], [1, 0]]] # a 2x3x2 array

- Produces a (batch size, sequence length, num classes) array
  - e.g. Number of classes = 3

y = [[[0.1, 0.5, 0.4], [0.8, 0.1, 0.1], [0.6, 0.2, 0.2]],
     [[0.2, 0.5, 0.3], [0.2, 0.5, 0.3], [0.2, 0.8, 0.0]]] # a 2x3x3 array

- Output for each time step of each sample is a probability distribution over the classes

- Can get the class for each time step of each sample using np.argmax(y, axis=-1)
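The shape change described above can be imitated in NumPy to show what a time-distributed Dense layer does conceptually: the same weights are applied at every time step. This is a sketch, not the Keras implementation. The weights `W` and `b` are randomly generated placeholders and `softmax` is a hand-written helper.

```python
import numpy as np

def softmax(z):
    # Subtract the max for numerical stability; the result is unchanged.
    e = np.exp(z - np.max(z, axis=-1, keepdims=True))
    return e / np.sum(e, axis=-1, keepdims=True)

# (batch size, sequence length, input size) = (2, 3, 2)
x = np.array([[[1, 6], [8, 2], [1, 2]],
              [[8, 9], [10, 8], [1, 0]]], dtype=float)

rng = np.random.default_rng(0)            # hypothetical weights
W = rng.normal(0.0, 0.05, size=(2, 3))    # (input size, num classes)
b = np.zeros(3)

# Apply the same dense transform to each time step separately.
per_step = np.stack(
    [softmax(x[:, t, :] @ W + b) for t in range(x.shape[1])], axis=1)

# Equivalent: matrix multiplication broadcasts over the leading axes.
all_at_once = softmax(x @ W + b)

print(per_step.shape)
```

Both routes give a (2, 3, 3) array in which every time step of every sample holds its own probability distribution.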

Slicing data on time dimension

import numpy as np
y = np.array([[[0.1, 0.5, 0.4], [0.8, 0.1, 0.1], [0.6, 0.2, 0.2]],
              [[0.2, 0.5, 0.3], [0.2, 0.5, 0.3], [0.2, 0.8, 0.0]]]) # a 2x3x3 array
classes = np.argmax(y, axis=-1) # a 2x3 array
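As a small illustration of slicing on the time dimension, the sketch below picks out a single time step from both arrays. The variable names `probs_t` and `classes_t` are illustrative; note that `y` has to be a NumPy array for this kind of indexing to work.

```python
import numpy as np

y = np.array([[[0.1, 0.5, 0.4], [0.8, 0.1, 0.1], [0.6, 0.2, 0.2]],
              [[0.2, 0.5, 0.3], [0.2, 0.5, 0.3], [0.2, 0.8, 0.0]]])
classes = np.argmax(y, axis=-1)   # shape (2, 3)

t = 1                             # second time step
probs_t = y[:, t, :]              # shape (2, 3): one distribution per sample
classes_t = classes[:, t]         # shape (2,): one class per sample

print(probs_t.shape, classes_t)
```

The first index keeps all samples in the batch, the second fixes the time step, and the last keeps all classes.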

Iterating through time-distributed data

for t in range(3):
    # Get the t-th time-dimension slice of y and classes
    for prob, c in zip(y[:,t,:], classes[:,t]):
        print("Prob:", prob, ", Class:", c)

Prob: [0.1 0.5 0.4] , Class: 1

Prob: [0.2 0.5 0.3] , Class: 1

Prob: [0.8 0.1 0.1] , Class: 0

...

Let's practice!
