Non-negative matrix factorization (NMF)

Unsupervised Learning in Python

Benjamin Wilson

Director of Research at lateral.io

Non-negative matrix factorization

- NMF = "non-negative matrix factorization"

- Dimension reduction technique

- NMF models are interpretable (unlike PCA)

- Easy to interpret means easy to explain!

- However, all sample features must be non-negative (>= 0)

Interpretable parts

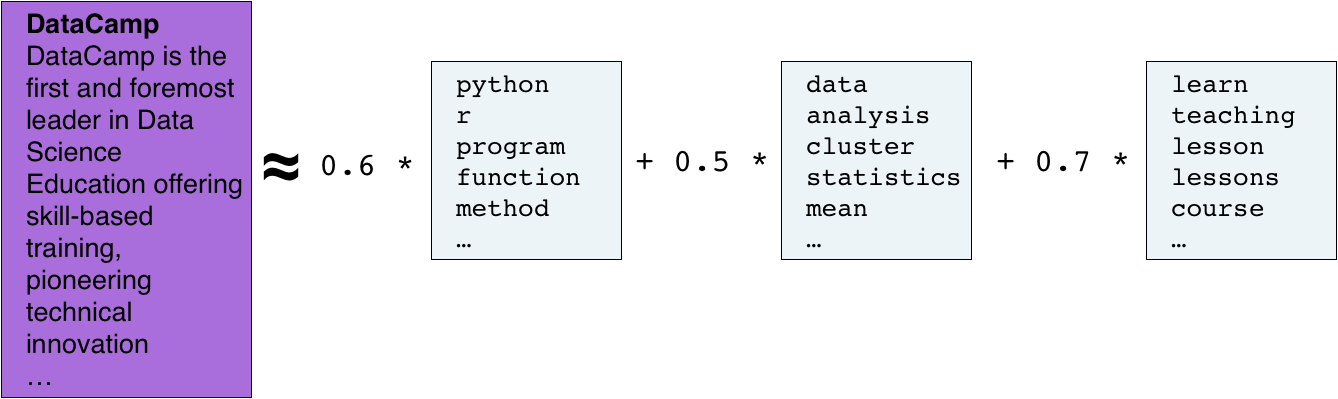

- NMF expresses documents as combinations of topics (or "themes")

Interpretable parts

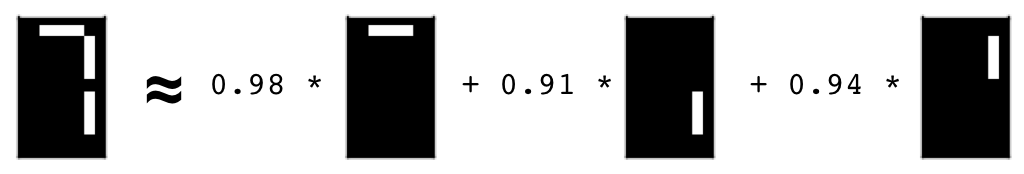

- NMF expresses images as combinations of patterns

Using scikit-learn NMF

- Follows the fit()/transform() pattern

- Must specify number of components, e.g. NMF(n_components=2)

- Works with NumPy arrays and with csr_matrix
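The three points above can be sketched as follows. The data here is hypothetical (random non-negative values standing in for real samples), and `random_state` and `max_iter` are set only to keep the run reproducible; they are not part of the original slides.

```python
import numpy as np
from scipy.sparse import csr_matrix
from sklearn.decomposition import NMF

# Hypothetical non-negative data: 6 samples, 4 features
rng = np.random.default_rng(0)
samples = rng.random((6, 4))

# Dense NumPy array: fit, then transform
model = NMF(n_components=2, random_state=0, max_iter=1000)
model.fit(samples)
dense_features = model.transform(samples)

# The same data stored as a csr_matrix goes through the same two calls
sparse_samples = csr_matrix(samples)
sparse_model = NMF(n_components=2, random_state=0, max_iter=1000)
sparse_features = sparse_model.fit(sparse_samples).transform(sparse_samples)

print(dense_features.shape)   # (6, 2): one row per sample, one column per component
print(sparse_features.shape)  # (6, 2)
```

Unlike PCA, there is no sensible default for the number of components, so `n_components` is passed explicitly.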

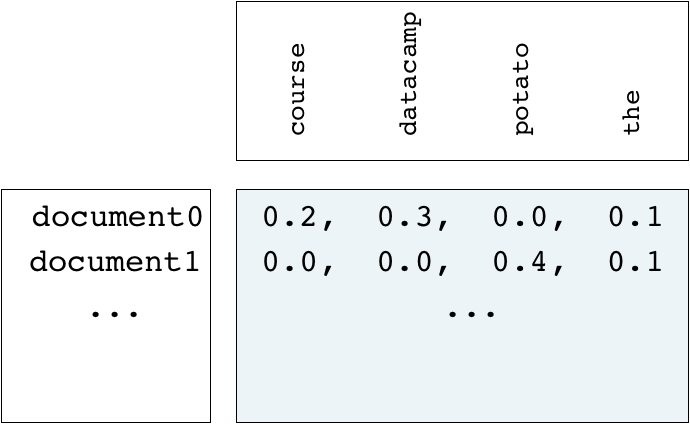

Example word-frequency array

- Word frequency array, 4 words, many documents

- Measure presence of words in each document using "tf-idf"

- "tf" = frequency of word in document

- "idf" reduces influence of frequent words
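One possible way to build such an array is scikit-learn's `TfidfVectorizer`. The three toy documents below are made up for illustration, and the exact tf-idf weighting is whatever the vectorizer's defaults apply, which may differ from the array shown in the slides.

```python
from sklearn.feature_extraction.text import TfidfVectorizer

# Hypothetical toy corpus (not from the slides)
documents = [
    "cats chase mice",
    "dogs chase cats",
    "mice eat cheese",
]

tfidf = TfidfVectorizer()
samples = tfidf.fit_transform(documents)  # sparse matrix, one row per document

print(samples.shape)             # (3, 6): 3 documents, 6 distinct words
print(sorted(tfidf.vocabulary_)) # the words, in column order

# "chase" occurs in two documents, "cheese" in one,
# so the idf weight of "chase" is the smaller of the two
idf_chase = tfidf.idf_[tfidf.vocabulary_["chase"]]
idf_cheese = tfidf.idf_[tfidf.vocabulary_["cheese"]]
print(idf_chase < idf_cheese)    # True
```

All tf-idf values are non-negative, so the result can be passed to NMF directly.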

Example usage of NMF

samples is the word-frequency array

from sklearn.decomposition import NMF

model = NMF(n_components=2)

model.fit(samples)

NMF(n_components=2)

nmf_features = model.transform(samples)

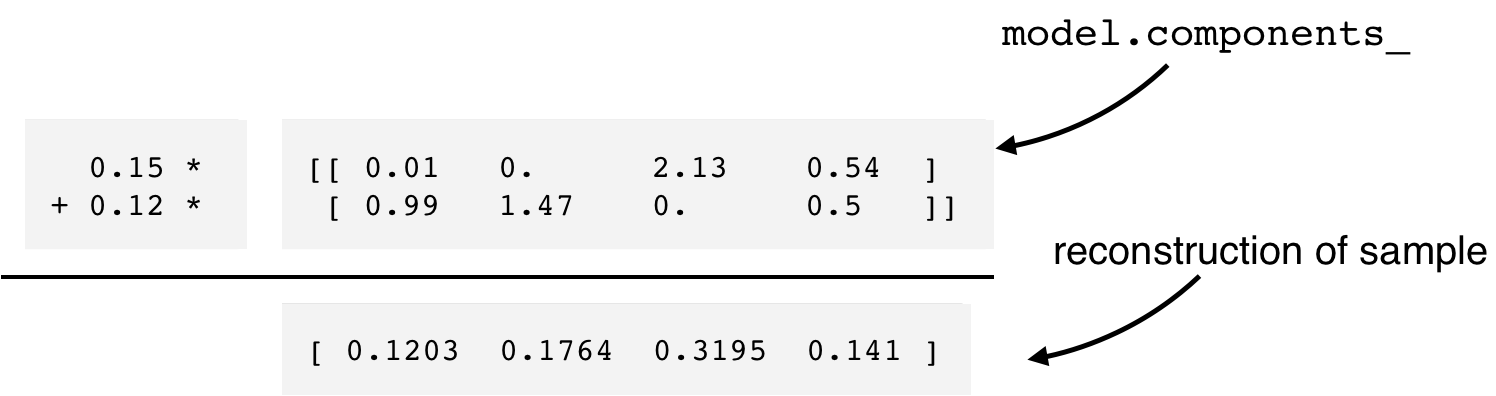

NMF components

- NMF has components

- ... just like PCA has principal components

- Each component has the same dimension as a sample (one entry per feature)

- Entries are non-negative

print(model.components_)

[[ 0.01 0. 2.13 0.54]

[ 0.99 1.47 0. 0.5 ]]
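To make the comparison with PCA concrete, here is a minimal sketch on hypothetical random non-negative data (not the word-frequency array from the slides). It checks that both models store one component per row, with one entry per feature, and that only the NMF components are guaranteed to be non-negative.

```python
import numpy as np
from sklearn.decomposition import NMF, PCA

# Hypothetical non-negative data: 20 samples, 4 features
rng = np.random.default_rng(0)
samples = rng.random((20, 4))

nmf = NMF(n_components=2, random_state=0, max_iter=1000).fit(samples)
pca = PCA(n_components=2).fit(samples)

# Both have shape (n_components, n_features)
print(nmf.components_.shape)  # (2, 4)
print(pca.components_.shape)  # (2, 4)

# NMF components are non-negative; PCA components generally are not
print((nmf.components_ >= 0).all())
print((pca.components_ < 0).any())
```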

NMF features

- NMF feature values are non-negative

- Can be used to reconstruct the samples

- ... combine feature values with components

print(nmf_features)

[[ 0. 0.2 ]

[ 0.19 0. ]

...

[ 0.15 0.12]]

Reconstruction of a sample

print(samples[i,:])

[ 0.12 0.18 0.32 0.14]

print(nmf_features[i,:])

[ 0.15 0.12]

Sample reconstruction

- Multiply components by feature values, and add up

- Can also be expressed as a product of matrices

- This is the "Matrix Factorization" in "NMF"
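The reconstruction can be checked with the numbers printed on the earlier slides. The sketch below copies `model.components_`, the feature values of sample `i`, and the sample itself as literal arrays (the variable names are illustrative), then computes the weighted sum both ways.

```python
import numpy as np

# Values copied from the printed output on the earlier slides
components = np.array([[0.01, 0.0, 2.13, 0.54],
                       [0.99, 1.47, 0.0, 0.5]])
features_i = np.array([0.15, 0.12])
sample_i = np.array([0.12, 0.18, 0.32, 0.14])

# Multiply each component by its feature value, and add up
reconstruction = features_i[0] * components[0] + features_i[1] * components[1]

# The same thing written as a matrix product
reconstruction_matmul = features_i @ components

print(np.round(reconstruction, 2))  # agrees with sample_i to two decimals
```

Applied to all samples at once, the product `nmf_features @ model.components_` approximates the whole `samples` array.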

NMF fits to non-negative data only

- Word frequencies in each document

- Images encoded as arrays

- Audio spectrograms

- Purchase histories on e-commerce sites

- ... and many more!
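A quick check before fitting can catch data that violates this requirement. The helper `is_non_negative` and the two small arrays below are hypothetical; the sketch also assumes that scikit-learn's `NMF.fit` rejects negative input with a `ValueError`, which is the behaviour of the versions this was written against.

```python
import numpy as np
from sklearn.decomposition import NMF

def is_non_negative(X):
    """Return True if every entry of X is >= 0 (hypothetical helper)."""
    return bool((np.asarray(X) >= 0).all())

good = np.array([[0.0, 1.0], [2.0, 0.5]])
bad = np.array([[0.0, -1.0], [2.0, 0.5]])  # contains a negative entry

print(is_non_negative(good))  # True
print(is_non_negative(bad))   # False

# Fitting on data with negative entries is expected to fail
try:
    NMF(n_components=1).fit(bad)
    raised = False
except ValueError:
    raised = True
print(raised)
```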

Let's practice!
