Differentially private machine learning models

Data Privacy and Anonymization in Python

Rebeca Gonzalez

Data Engineer

Sharing data safely

Companies with similar data can share information to improve their products and services.

- This includes machine learning (ML) models

Differentially private machine learning models

- SaaS company that has multiple online stores as partners.

- When a new partner joins, it might take months before it collects enough data of its own

- With differential privacy (DP), the SaaS company can encourage partners to share data


Machine learning and privacy

- Datasets contain sensitive information

- Adversaries can exploit the output of machine learning algorithms

Differentially private machine learning models

- Follow the corresponding data distributions

- Can guarantee privacy to individuals

Differentially private classification models

    # Import the scikit-learn naive Bayes classifier
    from sklearn.naive_bayes import GaussianNB

    # Import the differentially private naive Bayes classifier under an alias
    # so that it does not shadow the scikit-learn class
    from diffprivlib.models import GaussianNB as dp_GaussianNB

Non-private classifier

    from sklearn.naive_bayes import GaussianNB

    # Build the non-private classifier
    nonprivate_clf = GaussianNB()

    # Fit the model to the data
    nonprivate_clf.fit(X_train, y_train)

    print("The accuracy of the non-private model is", nonprivate_clf.score(X_test, y_test))

The accuracy of the non-private model is 0.8333333333333334

Differentially private classifier

    from diffprivlib.models import GaussianNB as dp_GaussianNB

    # Build the private classifier with an empty constructor
    private_clf = dp_GaussianNB()

    # Fit the model to the data and see the score
    private_clf.fit(X_train, y_train)

    print("The accuracy of the private model is", private_clf.score(X_test, y_test))

The accuracy of the private model is 0.7

PrivacyLeakWarning: Bounds have not been specified and will be calculated on the data provided. This will result in additional privacy leakage. To ensure differential privacy and no additional privacy leakage, specify bounds for each dimension.

Avoid privacy leakage
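
To see why bounds calculated on the data leak information, consider the minimal sketch below. It uses a made-up single-feature dataset (the `incomes` values are purely illustrative) and shows that the data-derived min and max change as soon as one record is added, while bounds fixed from domain knowledge stay the same.

```python
# Hypothetical toy data: one feature, e.g. yearly income in thousands.
incomes = [32, 41, 38, 45, 29]

# Bounds computed from the data are exact functions of individual records.
bounds_without = (min(incomes), max(incomes))

# Add a single outlier record and recompute the bounds.
incomes_with_outlier = incomes + [950]
bounds_with = (min(incomes_with_outlier), max(incomes_with_outlier))

print(bounds_without)  # (29, 45)
print(bounds_with)     # (29, 950)

# Bounds chosen from domain knowledge (an assumed plausible range here)
# do not depend on who is in the dataset.
domain_bounds = (0, 1000)
```

Anyone who sees the data-derived upper bound learns the exact value of the most extreme record, which is the kind of leakage the warning refers to.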

To avoid privacy leakage, pass a bounds argument so that the model does not calculate the min and max values from the data. It can be:

- A tuple of the form (min, max), where min and max are either:

  - Scalars covering the min/max of the entire data

    - Example: (0,100)

  - Arrays for min and max values of each column in the data

    - Example: ([0,1,0,2],[10,80,5,70])
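
The two forms of bounds listed above can be sketched in plain Python. The helpers `expand_bounds` and `clip_row` are hypothetical names written for this illustration and are not part of diffprivlib. The sketch assumes that values falling outside the bounds are clipped to them, which is a common way for such bounds to be enforced.

```python
from numbers import Real


def expand_bounds(bounds, n_features):
    """Return (lower, upper) lists with one entry per column (hypothetical helper)."""
    lower, upper = bounds
    # A scalar bound applies to every column.
    if isinstance(lower, Real):
        lower = [lower] * n_features
    if isinstance(upper, Real):
        upper = [upper] * n_features
    lower, upper = list(lower), list(upper)
    if len(lower) != n_features or len(upper) != n_features:
        raise ValueError("bounds must match the number of columns")
    if any(lo > hi for lo, hi in zip(lower, upper)):
        raise ValueError("each lower bound must not exceed its upper bound")
    return lower, upper


def clip_row(row, lower, upper):
    """Clip each value of a row into its column's [lower, upper] range."""
    return [min(max(v, lo), hi) for v, lo, hi in zip(row, lower, upper)]


# Scalar form: the same min/max for all four columns.
scalar = expand_bounds((0, 100), n_features=4)
print(scalar)  # ([0, 0, 0, 0], [100, 100, 100, 100])

# Array form: one min and one max per column.
per_column = expand_bounds(([0, 1, 0, 2], [10, 80, 5, 70]), n_features=4)

# Values outside the bounds are pulled back to the nearest bound.
clipped = clip_row([12, 50, -3, 70], *per_column)
print(clipped)  # [10, 50, 0, 70]
```

Bounds that are too narrow distort the data through clipping, while very wide bounds generally mean more noise is needed for the same epsilon, so the bounds should reflect a realistic range for each column.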

Avoid privacy leakage

    # Set the bounds to cover at least the min and max values
    bounds = (X_train.min(axis=0) - 1, X_train.max(axis=0) + 1)

    # Build the classifier with epsilon of 0.5
    dp_clf = dp_GaussianNB(epsilon=0.5, bounds=bounds)

    # Fit the model to the data and see the score
    dp_clf.fit(X_train, y_train)

    print("The accuracy of the private model is", dp_clf.score(X_test, y_test))

The accuracy of the private model is 0.807000

More on adding bounds

    # Import random module
    import random

    # Set the bounds to the min and max of the data plus some noise
    # (one random offset per column; 12 columns here)
    bounds = (X_train.min(axis=0) - random.sample(range(0, 30), 12),
              X_train.max(axis=0) + random.sample(range(0, 30), 12))

    # Build the classifier with epsilon of 0.5
    dp_clf = dp_GaussianNB(epsilon=0.5, bounds=bounds)

    # Fit the model to the data and see the score
    dp_clf.fit(X_train, y_train)

    print("The accuracy of private classifier with bounds is", dp_clf.score(X_test, y_test))

The accuracy of private classifier with bounds is 0.7544444444

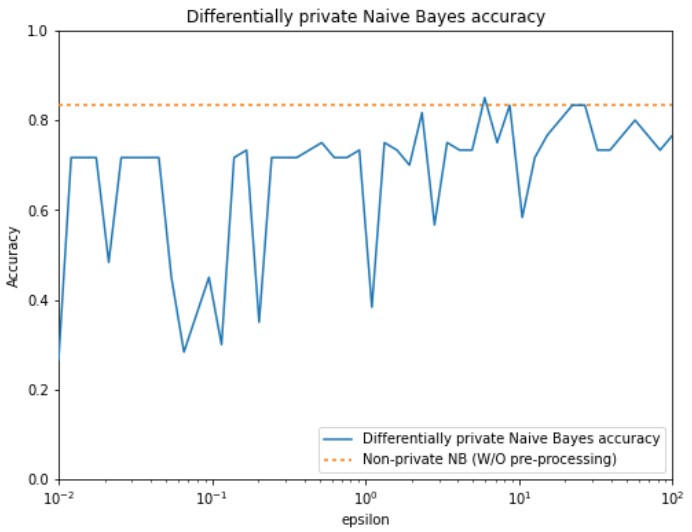

Different epsilon values
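
The effect of epsilon can be illustrated with a generic Laplace mechanism, where the noise scale is sensitivity divided by epsilon. This is a minimal sketch of that general idea, not a description of how diffprivlib's GaussianNB adds noise internally. The function names and the sensitivity of 1.0 are assumptions made for the example.

```python
import random


def laplace_scale(sensitivity, epsilon):
    """Noise scale of the Laplace mechanism: sensitivity / epsilon."""
    return sensitivity / epsilon


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def mean_abs_noise(epsilon, sensitivity=1.0, n=10_000, seed=0):
    """Average magnitude of the noise added for a given epsilon."""
    rng = random.Random(seed)
    scale = laplace_scale(sensitivity, epsilon)
    return sum(abs(laplace_noise(scale, rng)) for _ in range(n)) / n


# Smaller epsilon means stronger privacy and larger noise.
for eps in (0.1, 0.5, 1.0, 5.0):
    print(f"epsilon={eps}: scale={laplace_scale(1.0, eps)}, "
          f"mean |noise|={mean_abs_noise(eps):.2f}")
```

Lower epsilon values give stronger privacy guarantees but typically lower accuracy, so in practice it helps to try several values and compare the resulting scores.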

Let's create privacy-preserving models!
