Estimating performance with cross validation

Modeling with tidymodels in R

David Svancer

Data Scientist

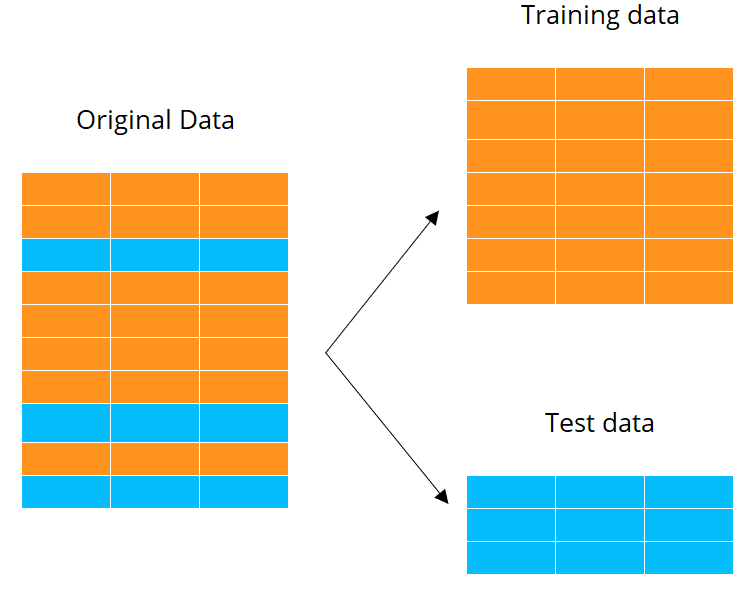

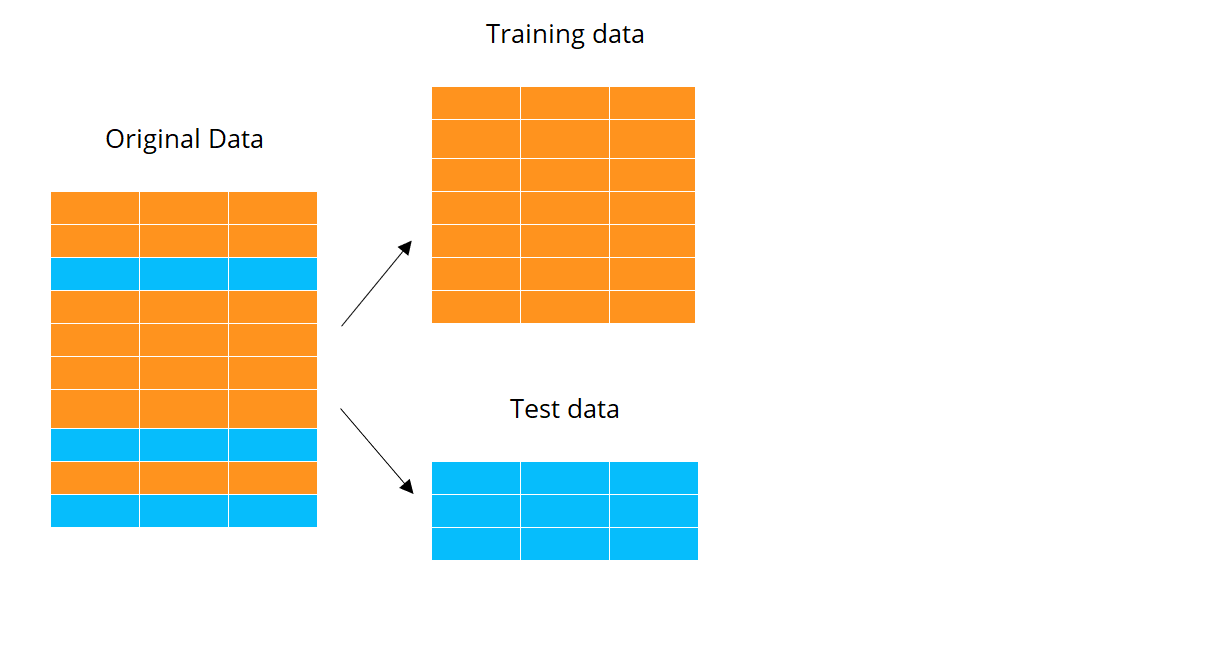

Training and test datasets

Creating training and test datasets is the first step in the modeling process

- Guards against overfitting

- Training data is used for model fitting

- Test data is used for model evaluation

Downside

- Only one estimate of model performance
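A minimal base R sketch of a single training/test split shows why only one estimate comes out of it. The simulated data frame df, its outcome purchased, and the 75/25 ratio are assumptions for illustration, not the course data.

```r
# Hypothetical data: 1,000 rows, one predictor, a binary outcome
set.seed(42)
df <- data.frame(x = rnorm(1000))
df$purchased <- factor(ifelse(df$x + rnorm(1000) > 0, "yes", "no"))

# Randomly reserve 25% of the rows as the test set (assumed ratio)
test_idx <- sample(nrow(df), size = 0.25 * nrow(df))
training <- df[-test_idx, ]
test <- df[test_idx, ]

# One split: 750 training rows, 250 test rows, so one performance estimate
c(training = nrow(training), test = nrow(test))
```

Any model fit on training and scored on test yields exactly one number, which is the downside noted above.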

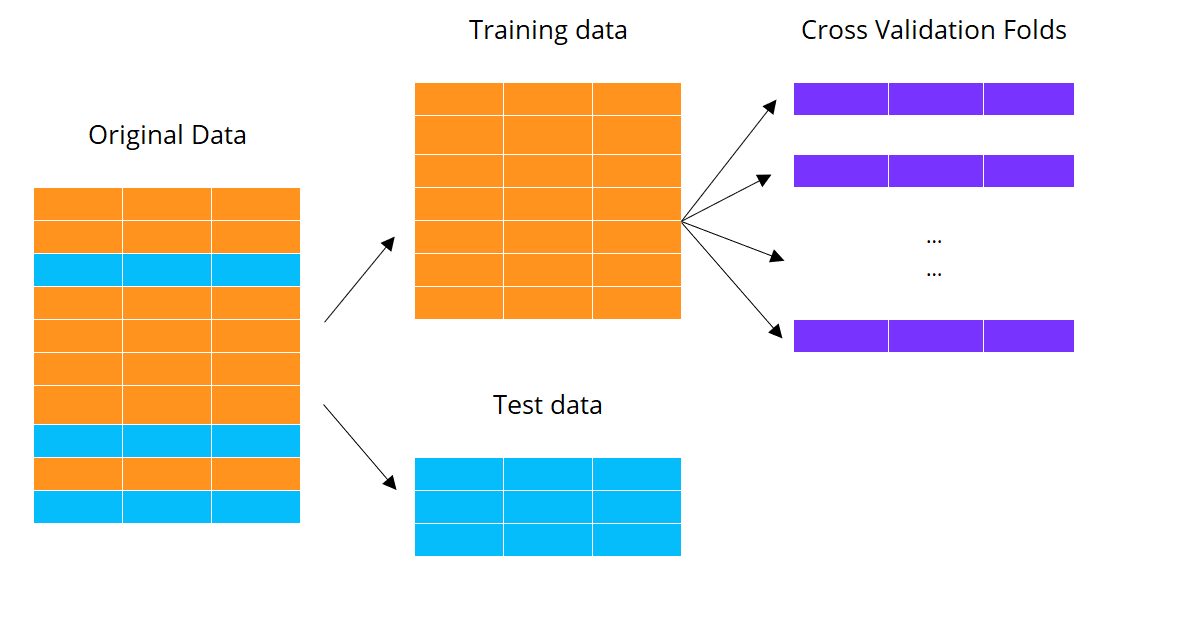

K-fold cross validation

Resampling technique for exploring model performance

- Provides K estimates of model performance during the model fitting process


- Training data is randomly partitioned into K sets of roughly equal size

- Folds are used to perform K iterations of model fitting and evaluation
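The random partition into K roughly equal sets can be sketched in base R by recycling fold labels and shuffling them. The training set size of 996 is inferred from the vfold_cv() output later in this section (896 + 100). The seed only echoes the course's set.seed(214) and will not reproduce the folds vfold_cv() creates.

```r
# Assumed sizes: 996 training rows split into 10 folds
n <- 996
k <- 10

set.seed(214)
# Recycle the labels 1..k across all n rows, then shuffle them randomly
fold_id <- sample(rep(1:k, length.out = n))

# Fold sizes differ by at most one row (here 100 or 99)
table(fold_id)
```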

Machine learning with cross validation

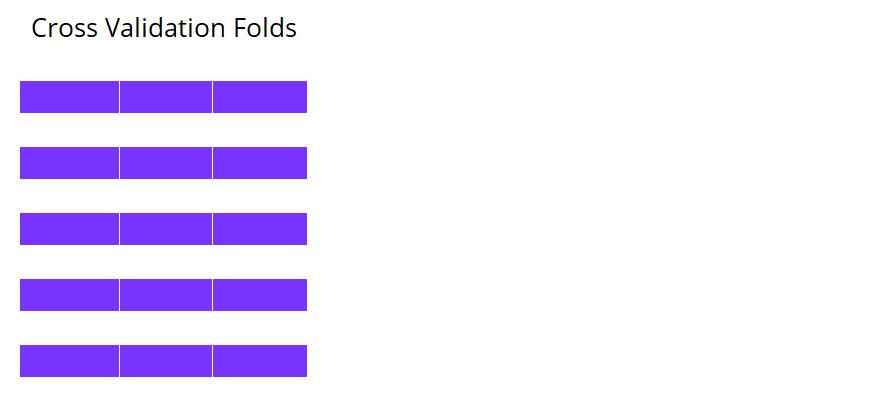

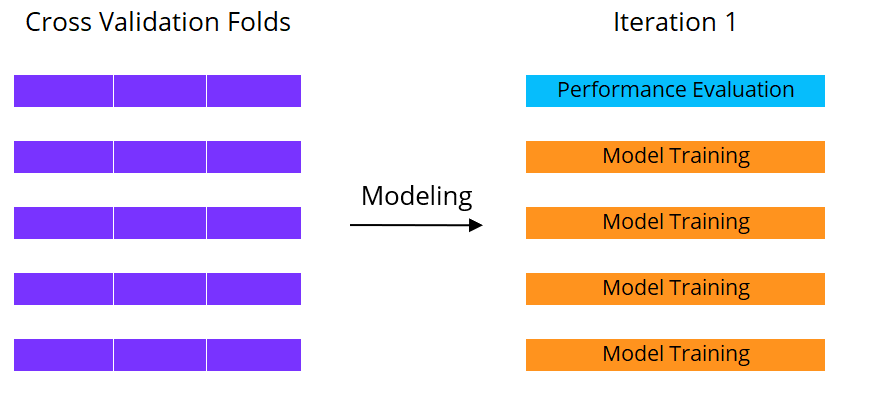

Performing 5-fold cross validation

- Five iterations of model training and evaluation


- Iteration 1

- Fold 1 reserved for model evaluation and folds 2 through 5 for model training

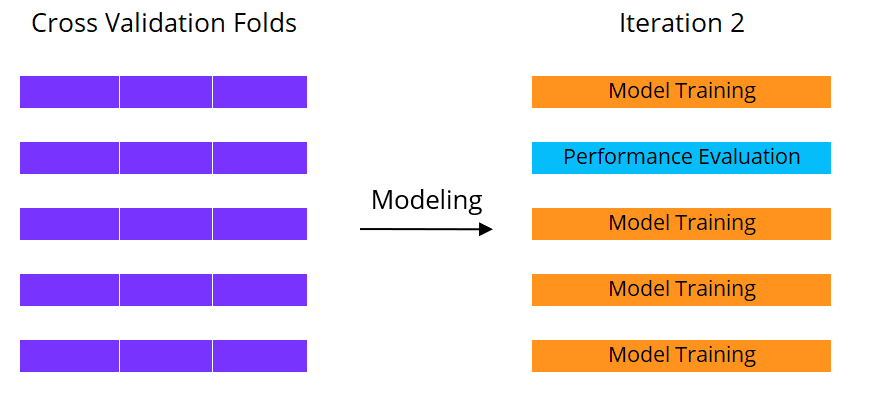


- Iteration 2

- Fold 2 reserved for model evaluation and folds 1, 3, 4, and 5 for model training

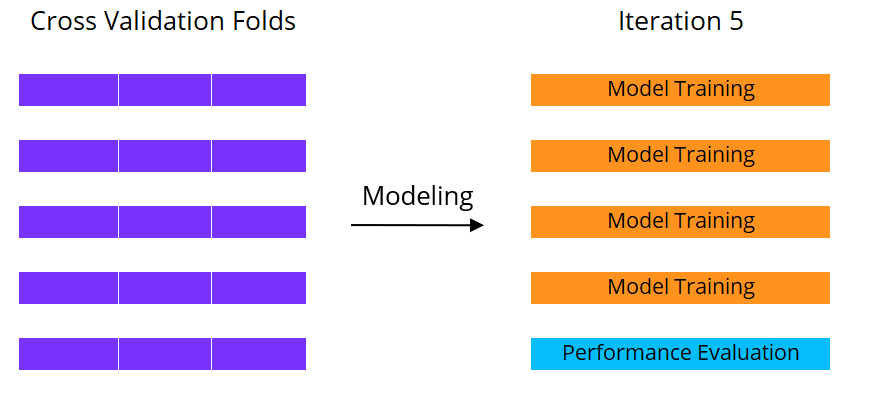


Five estimates of model performance in total
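The rotation through the five folds can be sketched in base R. The 20-row training set is hypothetical; the point is that each fold is held out for evaluation exactly once while the remaining four folds are used for training.

```r
# Hypothetical example: 5 folds over 20 training rows
set.seed(1)
k <- 5
n <- 20
fold_id <- sample(rep(1:k, length.out = n))

held_out <- integer(0)
for (i in 1:k) {
  assess_rows   <- which(fold_id == i)  # fold i reserved for evaluation
  analysis_rows <- which(fold_id != i)  # all other folds used for training
  stopifnot(length(intersect(assess_rows, analysis_rows)) == 0)
  held_out <- c(held_out, assess_rows)
  cat(sprintf("Iteration %d: train on %d rows, evaluate on %d rows\n",
              i, length(analysis_rows), length(assess_rows)))
}
# Across the k iterations, every row is used for evaluation exactly once
```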

Creating cross validation folds

The vfold_cv() function

- Training data

- Number of folds, v

- Stratification variable, strata

- Execute set.seed() before vfold_cv() for reproducibility

- splits: list column with data split objects for creating the folds

set.seed(214)
leads_folds <- vfold_cv(leads_training,
                        v = 10,
                        strata = purchased)
leads_folds

# 10-fold cross-validation using stratification
# A tibble: 10 x 2
   splits            id
   <list>            <chr>
 1 <split [896/100]> Fold01
 2 <split [896/100]> Fold02
 3 <split [896/100]> Fold03
 . ................  ......
 9 <split [897/99]>  Fold09
10 <split [897/99]>  Fold10
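vfold_cv() handles stratification internally. As a rough illustration of what stratifying on purchased aims for, this base R sketch shuffles fold labels separately within each outcome class, so every fold keeps about the same class balance. The simulated outcome and its 40/60 split are assumptions, and this is one simple stratification scheme, not rsample's actual implementation.

```r
# Hypothetical outcome: 996 rows, roughly 40% "yes" (assumed, not the course data)
set.seed(214)
purchased <- factor(sample(c("yes", "no"), 996, replace = TRUE, prob = c(0.4, 0.6)))
k <- 10

# Assign folds separately within each outcome class
fold_id <- integer(length(purchased))
for (lvl in levels(purchased)) {
  rows <- which(purchased == lvl)
  fold_id[rows] <- sample(rep(1:k, length.out = length(rows)))
}

# Proportion of "yes" in each fold stays close to the overall proportion
round(tapply(purchased == "yes", fold_id, mean), 3)
```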

Model training with cross validation

The fit_resamples() function

- Train a parsnip model or workflow object

- Provide cross validation folds, resamples

- Optional custom metric function, metrics

- Default is accuracy and ROC AUC for classification models

Each metric is estimated 10 times

- One estimate per fold

- Average value in mean column

leads_rs_fit <- leads_wkfl %>%
  fit_resamples(resamples = leads_folds,
                metrics = leads_metrics)

leads_rs_fit %>% collect_metrics()

# A tibble: 3 x 5
  .metric .estimator  mean     n std_err
  <chr>   <chr>      <dbl> <int>   <dbl>
1 roc_auc binary     0.823    10  0.0147
2 sens    binary     0.786    10  0.0203
3 spec    binary     0.855    10  0.0159
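The fit-and-evaluate loop behind fit_resamples() can be sketched in base R to make "one estimate per fold, then average" concrete. This sketch swaps in glm() logistic regression and accuracy on simulated data; the data, the predictors x1 and x2, and the 0.5 cutoff are hypothetical. Computing std_err as the standard deviation of the fold estimates divided by the square root of n is an assumption that the summary table later in this section is consistent with.

```r
# Hypothetical simulated data standing in for leads_training
set.seed(214)
n <- 996
training <- data.frame(x1 = rnorm(n), x2 = rnorm(n))
training$purchased <- factor(ifelse(training$x1 - 0.5 * training$x2 + rnorm(n) > 0,
                                    "yes", "no"))

k <- 10
fold_id <- sample(rep(1:k, length.out = n))

# One accuracy estimate per fold: fit on k - 1 folds, evaluate on the held-out fold
fold_accuracy <- sapply(1:k, function(i) {
  analysis <- training[fold_id != i, ]
  assessment <- training[fold_id == i, ]
  fit <- glm(purchased ~ x1 + x2, data = analysis, family = binomial)
  prob_yes <- predict(fit, newdata = assessment, type = "response")  # P(purchased = "yes")
  pred <- ifelse(prob_yes > 0.5, "yes", "no")
  mean(pred == assessment$purchased)
})

# Summaries analogous to the mean, n and std_err columns
c(mean = mean(fold_accuracy), n = length(fold_accuracy),
  std_err = sd(fold_accuracy) / sqrt(length(fold_accuracy)))
```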

Detailed cross validation results

The collect_metrics() function

- Passing summarize = FALSE will provide all metric estimates for every cross validation fold

- 30 total combinations (3 metrics x 10 folds)

- .metric column identifies the metric

- .estimate column gives the estimated value for each fold

rs_metrics <- leads_rs_fit %>%
  collect_metrics(summarize = FALSE)

rs_metrics

# A tibble: 30 x 4
  id     .metric .estimator .estimate
  <chr>  <chr>   <chr>          <dbl>
1 Fold01 sens    binary         0.861
2 Fold01 spec    binary         0.891
3 Fold01 roc_auc binary         0.885
4 Fold02 sens    binary         0.778
5 Fold02 spec    binary         0.969
6 Fold02 roc_auc binary         0.885
# ... with 24 more rows

Summarizing cross validation results

The collect_metrics() function returns a tibble

- Results can be summarized with dplyr

- Start with rs_metrics

- Form groups by .metric values

- Calculate summary statistics with summarize()

rs_metrics %>%
  group_by(.metric) %>%
  summarize(min = min(.estimate),
            median = median(.estimate),
            max = max(.estimate),
            mean = mean(.estimate),
            sd = sd(.estimate))

# A tibble: 3 x 6
  .metric   min median   max  mean     sd
  <chr>   <dbl>  <dbl> <dbl> <dbl>  <dbl>
1 roc_auc 0.758  0.806 0.885 0.823 0.0466
2 sens    0.667  0.792 0.861 0.786 0.0642
3 spec    0.810  0.843 0.969 0.855 0.0502

Cross validation methodology

Models trained with fit_resamples() are not able to provide predictions on new data sources

- The predict() function does not accept resample objects

Purpose of fit_resamples()

- Explore and compare the performance profile of different model types

- Select best performing model type and focus on model fitting efforts

predict(leads_rs_fit,
        new_data = leads_test)

Error in UseMethod("predict") :
  no applicable method for 'predict' applied to an object of class "c('resample_results', 'tune_results', 'tbl_df', 'tbl', 'data.frame')"
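Once cross validation has pointed to a model type, a single model is fit on the full training data, and that fitted object is what predict() accepts. In tidymodels this step is fitting the workflow with fit() or last_fit(); the base R analogue below uses glm() on simulated data, and all names (df, x1, x2, final_fit) are hypothetical.

```r
# Hypothetical simulated data with a 750/250 training/test split
set.seed(214)
n <- 1000
df <- data.frame(x1 = rnorm(n), x2 = rnorm(n))
df$purchased <- factor(ifelse(df$x1 - 0.5 * df$x2 + rnorm(n) > 0, "yes", "no"))

test_idx <- sample(n, size = 250)
training <- df[-test_idx, ]
test <- df[test_idx, ]

# A single fit on all training rows gives a model object that predict() accepts
final_fit <- glm(purchased ~ x1 + x2, data = training, family = binomial)
prob_yes <- predict(final_fit, newdata = test, type = "response")
test_pred <- factor(ifelse(prob_yes > 0.5, "yes", "no"),
                    levels = levels(test$purchased))

mean(test_pred == test$purchased)  # test-set accuracy of the final model
```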

Let's cross validate!
