Visualizing model performance

Modeling with tidymodels in R

David Svancer

Data Scientist

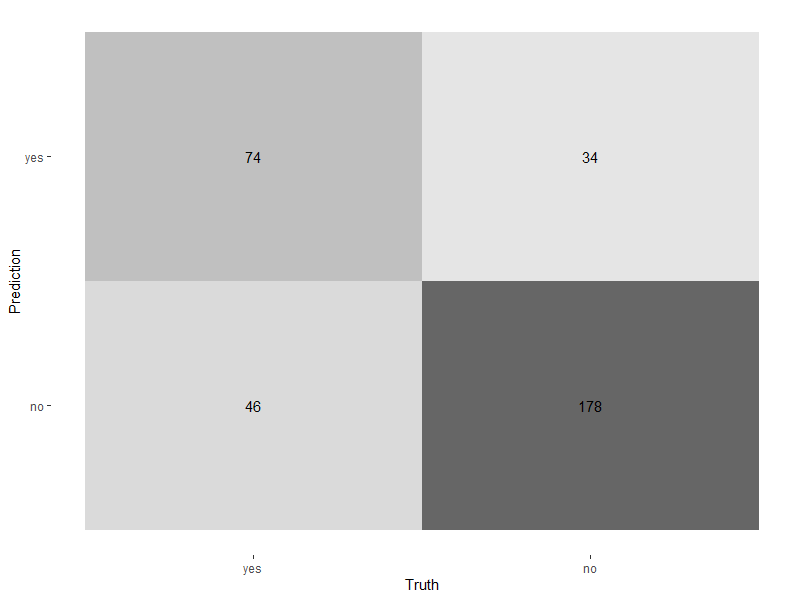

Plotting the confusion matrix

Heatmap with autoplot()

- Pass the confusion matrix object into autoplot()

- Set type to 'heatmap'

- Visualize the most prevalent counts

conf_mat(leads_results, truth = purchased, estimate = .pred_class) %>%

  autoplot(type = 'heatmap')

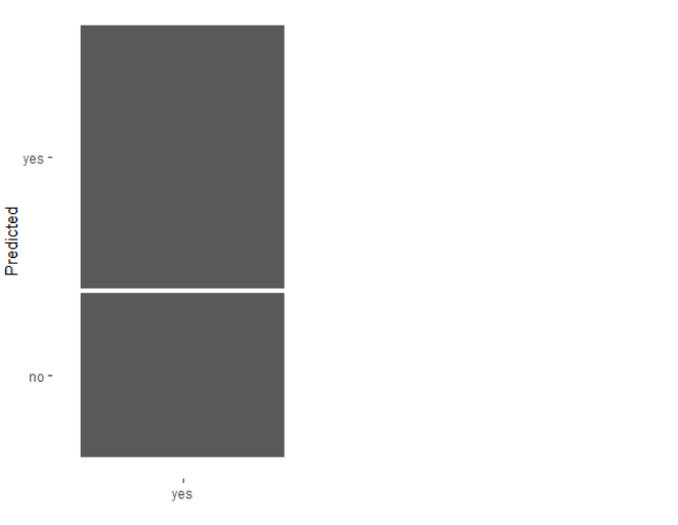

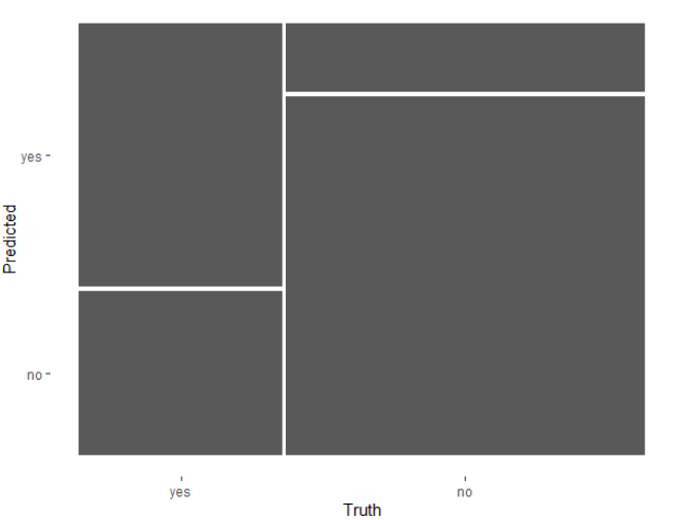

Mosaic plot

Mosaic with autoplot()

- Set type to 'mosaic'

- Each vertical bar represents 100% of the actual outcome values in that column

- Visually displays sensitivity and specificity

conf_mat(leads_results,

truth = purchased,

estimate = .pred_class) %>%

autoplot(type = 'mosaic')
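The bar segments in a mosaic plot correspond to column proportions of the confusion matrix. The following is a minimal base R sketch of that calculation, not the yardstick implementation. It assumes only the first ten rows of leads_results printed on the probability thresholds slide, so the numbers are illustrative and will differ from the full test set.

```r
# Illustrative data: the first ten rows of leads_results printed in this deck.
# 'yes' is listed first so it is treated as the positive class.
purchased <- factor(c("no", "yes", "no", "no", "yes", "no", "yes", "no", "no", "yes"),
                    levels = c("yes", "no"))
pred_class <- factor(c("no", "yes", "no", "no", "yes", "no", "no", "no", "no", "yes"),
                     levels = c("yes", "no"))

# Confusion matrix counts: predictions in rows, actual outcomes in columns
counts <- table(Prediction = pred_class, Truth = purchased)

# Each column sums to 1, mirroring the 100% vertical bars in the mosaic plot
col_props <- prop.table(counts, margin = 2)

# Sensitivity: share of actual 'yes' predicted 'yes'
sensitivity <- as.numeric(col_props["yes", "yes"])
# Specificity: share of actual 'no' predicted 'no'
specificity <- as.numeric(col_props["no", "no"])

print(col_props)
```

In this small sample, three of the four actual purchasers are predicted correctly and all six non-purchasers are predicted correctly.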

Probability thresholds

The default probability threshold in binary classification is 0.5

- If the estimated probability of the positive class is greater than or equal to 0.5, the positive class is predicted

- If .pred_yes is greater than or equal to 0.5, then .pred_class is set to 'yes' by the predict() function in tidymodels

leads_results

# A tibble: 332 x 4

purchased .pred_class .pred_yes .pred_no

<fct> <fct> <dbl> <dbl>

1 no no 0.134 0.866

2 yes yes 0.729 0.271

3 no no 0.133 0.867

4 no no 0.0916 0.908

5 yes yes 0.598 0.402

6 no no 0.128 0.872

7 yes no 0.112 0.888

8 no no 0.169 0.831

9 no no 0.158 0.842

10 yes yes 0.520 0.480

# ... with 322 more rows
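The thresholding rule can be sketched in a few lines of base R. This is an illustration only: classify_at() is a hypothetical helper, not a tidymodels function, and the probabilities are the first five .pred_yes values from the printout above.

```r
# First five .pred_yes values from the leads_results printout
pred_yes <- c(0.134, 0.729, 0.133, 0.0916, 0.598)

# Hypothetical helper: predict 'yes' when the probability meets the threshold
classify_at <- function(prob, threshold = 0.5) {
  factor(ifelse(prob >= threshold, "yes", "no"), levels = c("yes", "no"))
}

# Default threshold of 0.5 reproduces .pred_class for these rows
default_class <- classify_at(pred_yes)

# A stricter threshold turns the 0.598 prediction into 'no'
strict_class <- classify_at(pred_yes, threshold = 0.7)

print(default_class)
print(strict_class)
```

Raising the threshold makes the model more conservative about predicting the positive class, which is the trade-off explored on the following slides.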

Exploring performance across thresholds

How does a classification model perform across a range of thresholds?

- Take the unique probability thresholds in the .pred_yes column of the test dataset results

- Calculate specificity and sensitivity for each

| threshold | specificity | sensitivity |
|---|---|---|
| 0 | 0 | 1 |
| 0.11 | 0.01 | 0.98 |
| 0.15 | 0.05 | 0.97 |
| ... | ... | ... |
| 0.84 | 0.89 | 0.08 |
| 0.87 | 0.94 | 0.02 |
| 0.91 | 0.99 | 0 |
| 1 | 1 | 0 |

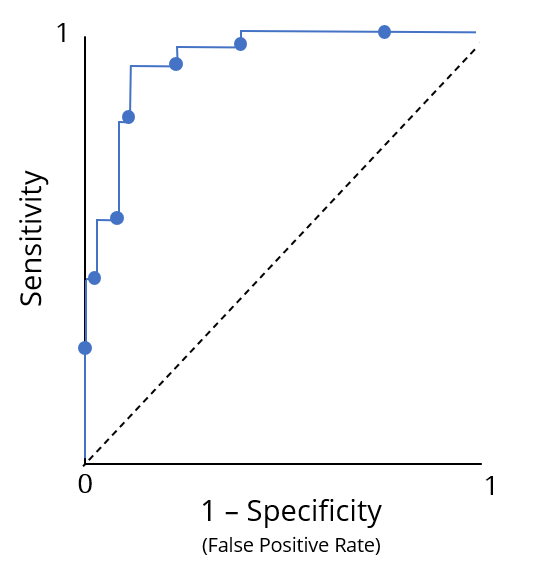
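A table like the one above can be approximated by hand. The sketch below is base R, with a hypothetical helper threshold_metrics() and only the ten rows of leads_results printed earlier, so its values will not match the table computed on the full test set.

```r
# Illustrative data: the ten rows of leads_results printed earlier
purchased <- c("no", "yes", "no", "no", "yes", "no", "yes", "no", "no", "yes")
pred_yes  <- c(0.134, 0.729, 0.133, 0.0916, 0.598, 0.128, 0.112, 0.169, 0.158, 0.520)

# Hypothetical helper: specificity and sensitivity at one threshold
threshold_metrics <- function(truth, prob, threshold, positive = "yes") {
  predicted_positive <- prob >= threshold
  actual_positive <- truth == positive
  data.frame(
    threshold = threshold,
    # True negatives divided by all actual negatives
    specificity = sum(!predicted_positive & !actual_positive) / sum(!actual_positive),
    # True positives divided by all actual positives
    sensitivity = sum(predicted_positive & actual_positive) / sum(actual_positive)
  )
}

# Evaluate a handful of thresholds and stack the results
thresholds <- c(0, 0.15, 0.5, 1)
metrics <- do.call(
  rbind,
  lapply(thresholds, function(t) threshold_metrics(purchased, pred_yes, t))
)

print(metrics)
```

As the threshold rises from 0 to 1, specificity climbs from 0 to 1 while sensitivity falls from 1 to 0, the same pattern as in the table.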

Visualizing performance across thresholds

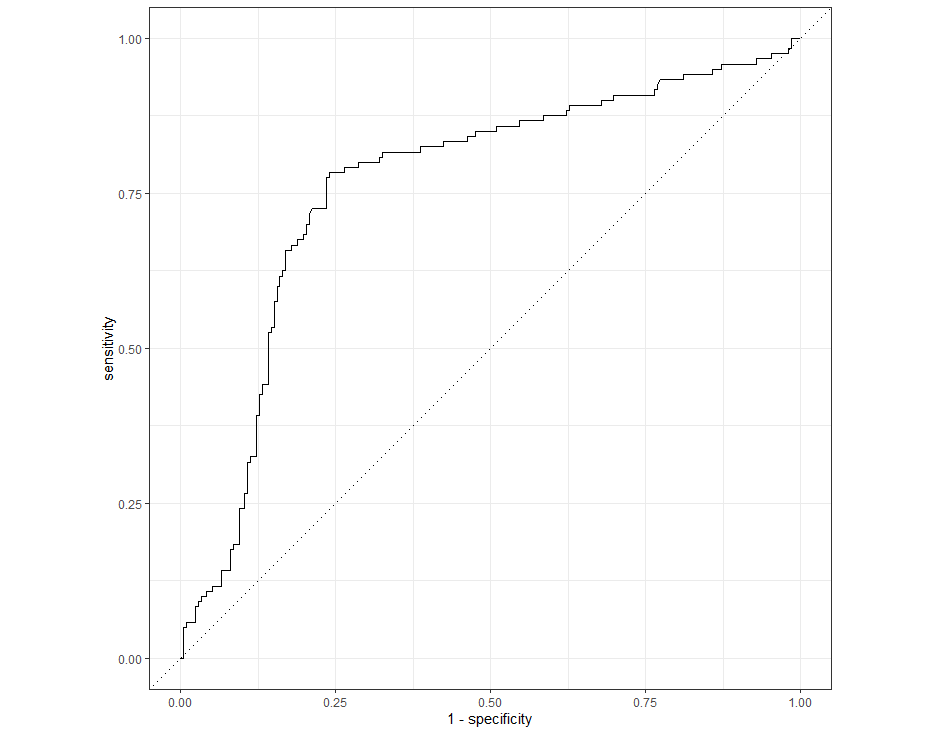

Receiver operating characteristic (ROC) curve

- Used to visualize performance across probability thresholds

- Sensitivity vs. (1 - specificity) across unique thresholds in test set results

- Proportion correct among actual positives vs. proportion incorrect among actual negatives

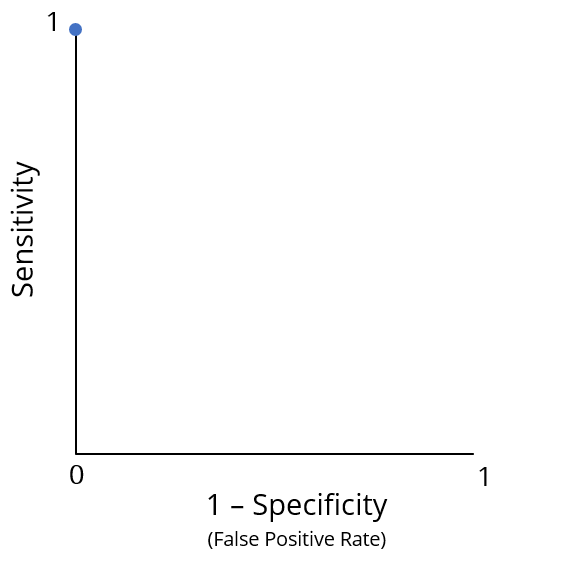

ROC curves

Optimal performance is at the point (0, 1)

- Ideally, a classification model produces points close to the upper-left corner across all thresholds

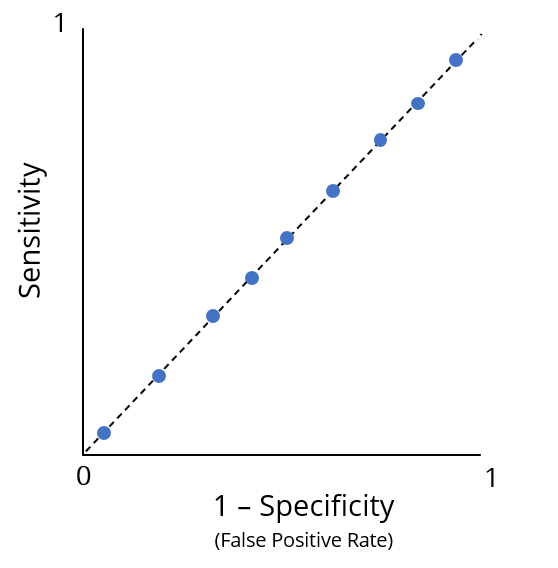

Poor performance

- Sensitivity and (1 - specificity) are equal across all thresholds

- Corresponds to a classification model that predicts outcomes based on the result of randomly flipping a fair coin

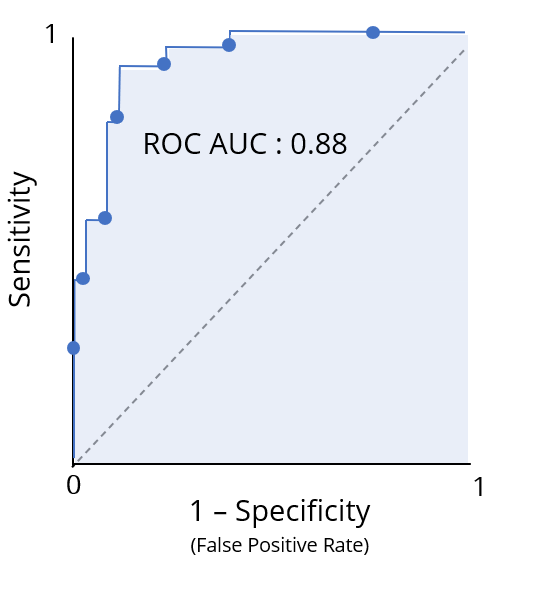
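The diagonal line for a random model can be checked with a small simulation. This is a sketch under stated assumptions: the scores are uniform random numbers standing in for the coin flip, the outcome labels are made up, and the seed and sample size are arbitrary.

```r
set.seed(42)

# Simulated outcomes: 10,000 actual positives and 10,000 actual negatives
n <- 10000
truth <- rep(c("yes", "no"), each = n)

# Random scores that carry no information about the outcome
score <- runif(2 * n)

thresholds <- c(0.25, 0.5, 0.75)

# Sensitivity: share of actual positives scored at or above the threshold
sens <- sapply(thresholds, function(t) mean(score[truth == "yes"] >= t))

# 1 - specificity: share of actual negatives scored at or above the threshold
fpr <- sapply(thresholds, function(t) mean(score[truth == "no"] >= t))

random_roc <- data.frame(
  threshold = thresholds,
  sensitivity = sens,
  one_minus_specificity = fpr
)

print(random_roc)
```

At every threshold the two columns are approximately equal, so the points fall near the line where sensitivity equals (1 - specificity).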

Summarizing the ROC curve

The area under the ROC curve (ROC AUC) captures the ROC curve information of a classification model in a single number

ROC AUC can be interpreted as a letter grade of classification performance

- A - [0.9, 1]

- B - [0.8, 0.9)

- C - [0.7, 0.8)

- D - [0.6, 0.7)

- F - [0.5, 0.6)
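The grading scale above maps directly onto interval binning. A minimal base R sketch follows; roc_grade() is a hypothetical helper, not part of yardstick, and values below 0.5 are left as NA because the scale does not cover them.

```r
# Hypothetical helper: convert a ROC AUC value into the letter grade scale above
roc_grade <- function(auc) {
  cut(
    auc,
    breaks = c(0.5, 0.6, 0.7, 0.8, 0.9, 1),
    labels = c("F", "D", "C", "B", "A"),
    right = FALSE,          # intervals are closed on the left: [0.5, 0.6), ...
    include.lowest = TRUE   # with right = FALSE this closes the top interval: [0.9, 1]
  )
}

grades <- roc_grade(c(0.55, 0.763, 0.9, 1))
print(grades)
```

For example, an AUC of 0.763 falls in [0.7, 0.8) and receives a C.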

Calculating performance across thresholds

The roc_curve() function

- Takes a results tibble as the first argument

- truth column with true outcome categories

- Column with estimated probabilities for the positive class (.pred_yes in the leads_results tibble)

- Returns a tibble with specificity and sensitivity for all unique thresholds in .pred_yes

leads_results %>%

roc_curve(truth = purchased, .pred_yes)

# A tibble: 331 x 3

.threshold specificity sensitivity

<dbl> <dbl> <dbl>

1 -Inf 0 1

2 0.0871 0 1

3 0.0888 0.00472 1

4 0.0893 0.00943 1

5 0.0896 0.0142 1

6 0.0902 0.0142 0.992

7 0.0916 0.0142 0.983

8 0.0944 0.0189 0.983

# ... with 323 more rows

Plotting the ROC curve

Passing the results of roc_curve() to the autoplot() function returns an ROC curve plot

leads_results %>%

roc_curve(truth = purchased, .pred_yes) %>%

autoplot()

Calculating ROC AUC

The roc_auc() function from yardstick will calculate the ROC AUC

- Tibble of model results

- truth column

- Column with estimated probabilities for the positive class

roc_auc(leads_results,

truth = purchased,

.pred_yes)

# A tibble: 1 x 3

.metric .estimator .estimate

<chr> <chr> <dbl>

1 roc_auc binary 0.763
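One way to build intuition for this number: ROC AUC equals the probability that a randomly chosen actual positive receives a higher estimated probability than a randomly chosen actual negative. The base R sketch below computes that directly. It is not how yardstick is implemented, and it uses only the ten rows of leads_results printed earlier, so it will not reproduce the 0.763 obtained on the full test set.

```r
# Illustrative data: the ten rows of leads_results printed earlier
purchased <- c("no", "yes", "no", "no", "yes", "no", "yes", "no", "no", "yes")
pred_yes  <- c(0.134, 0.729, 0.133, 0.0916, 0.598, 0.128, 0.112, 0.169, 0.158, 0.520)

pos <- pred_yes[purchased == "yes"]  # probabilities for actual positives
neg <- pred_yes[purchased == "no"]   # probabilities for actual negatives

# Compare every positive with every negative; ties count as half a win
wins <- outer(pos, neg, ">") + 0.5 * outer(pos, neg, "==")

# Share of positive-negative pairs ranked correctly
auc_pairs <- mean(wins)

print(auc_pairs)
```

Here 19 of the 24 positive-negative pairs are ordered correctly, giving about 0.79 for this ten-row sample.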

Let's practice!
