Optimize the boosted ensemble

Machine Learning with Tree-Based Models in R

Sandro Raabe

Data Scientist

Starting point: untuned performance

```r
collect_metrics(cv_results)
```

```
# A tibble: 1 x 3
  .metric .mean     n
  <chr>   <dbl> <int>
1 roc_auc 0.951     5
```

- ROC AUC of about 0.95: not bad for an untuned model!
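`collect_metrics()` summarizes resampling results: `.mean` is the metric averaged over the resamples and `n` is the number of resamples (here, 5 folds). The minimal base-R sketch below illustrates that aggregation. It computes ROC AUC with the Mann-Whitney rank formula on five simulated folds and then averages them. The helper `auc_rank()` and the simulated scores are hypothetical illustrations, not the course data or yardstick's implementation.

```r
# Hypothetical helper: ROC AUC via the Mann-Whitney rank statistic.
# labels: logical vector, TRUE marks the positive class.
auc_rank <- function(scores, labels) {
  r <- rank(scores)            # average ranks, so ties count as 0.5
  n_pos <- sum(labels)
  n_neg <- sum(!labels)
  (sum(r[labels]) - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)
}

set.seed(42)
n_folds <- 5
# One AUC per simulated fold (positives score higher on average).
fold_auc <- vapply(seq_len(n_folds), function(k) {
  labels <- rep(c(TRUE, FALSE), each = 50)
  scores <- rnorm(100, mean = ifelse(labels, 1.5, 0))
  auc_rank(scores, labels)
}, numeric(1))

# Same shape as the summary above: metric name, mean over folds, fold count.
data.frame(.metric = "roc_auc", .mean = mean(fold_auc), n = n_folds)
```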

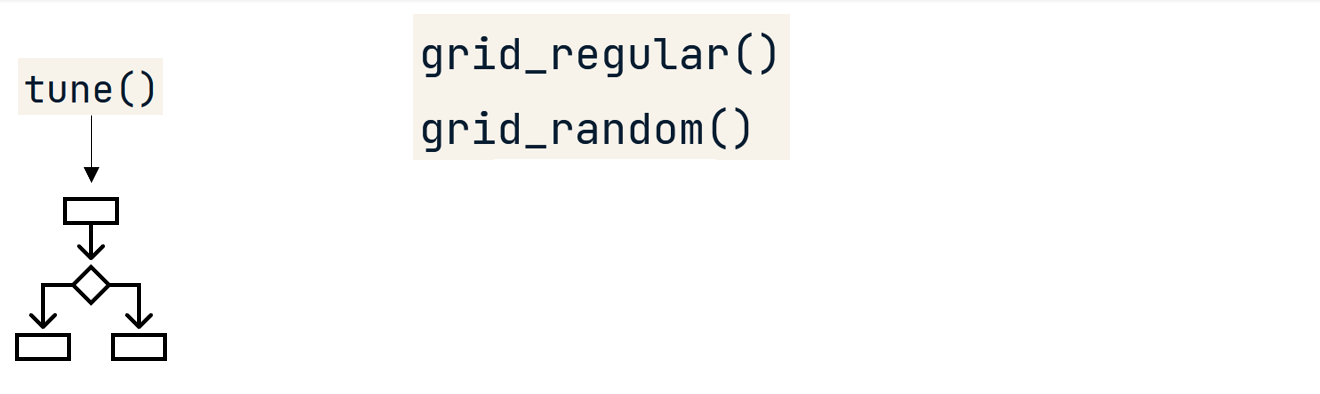

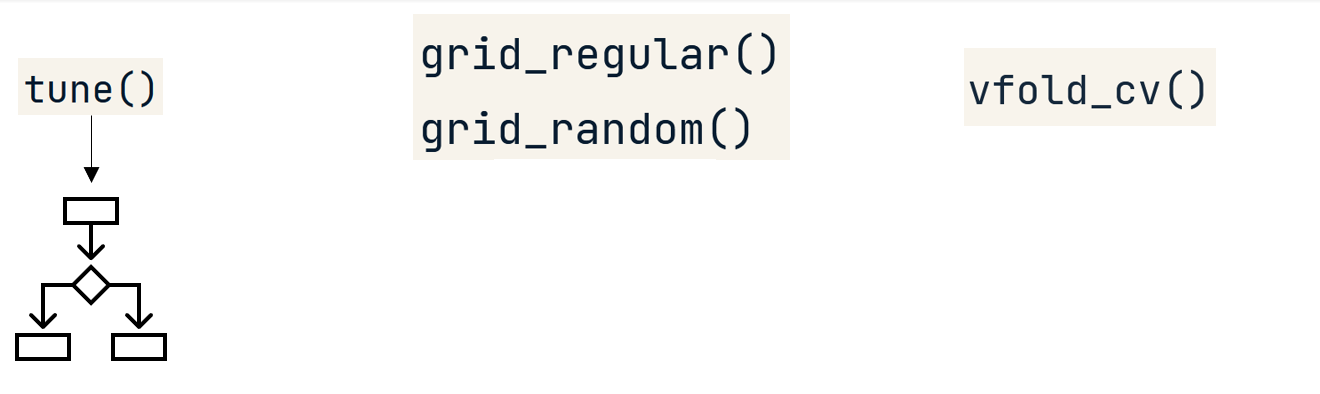

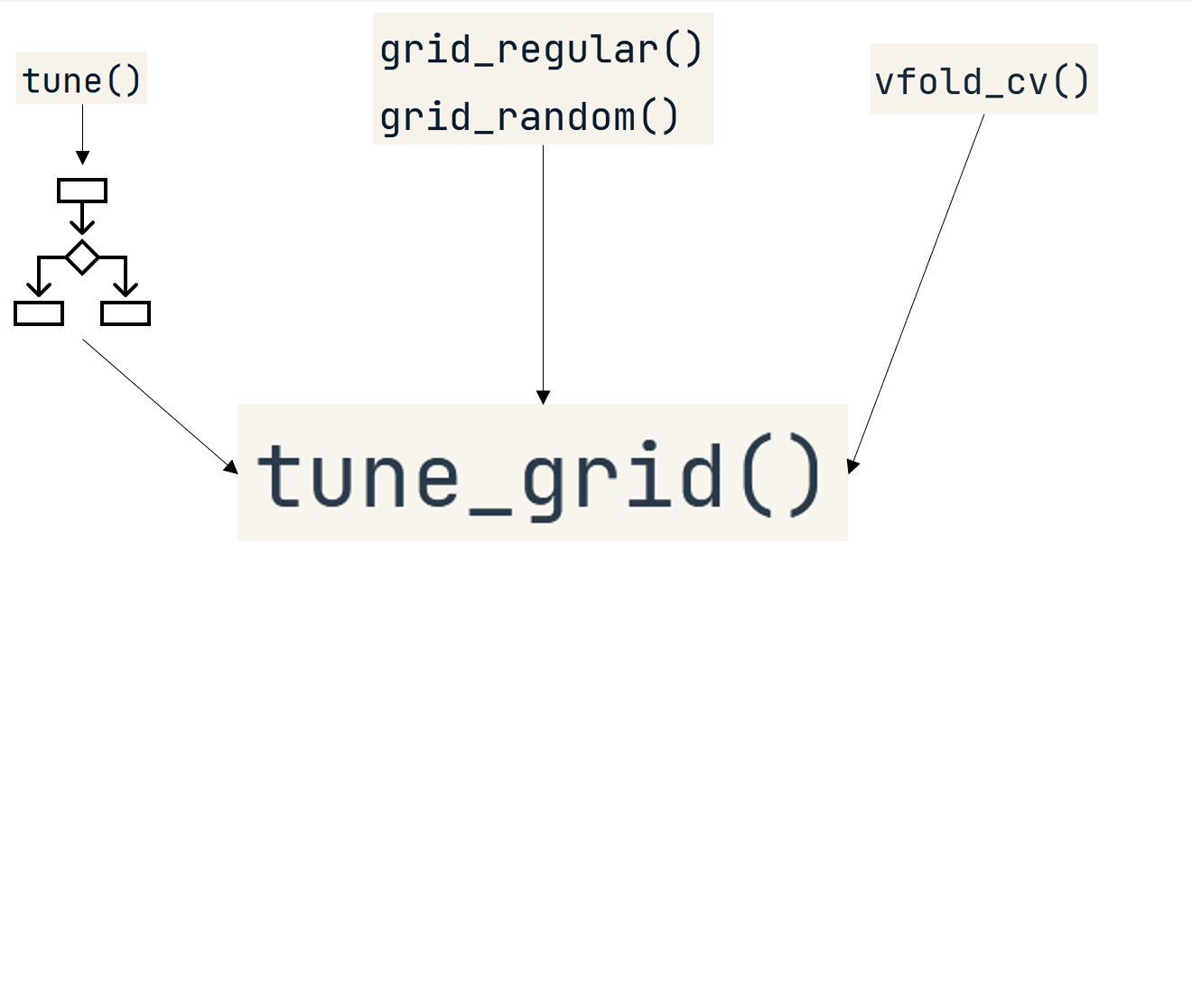

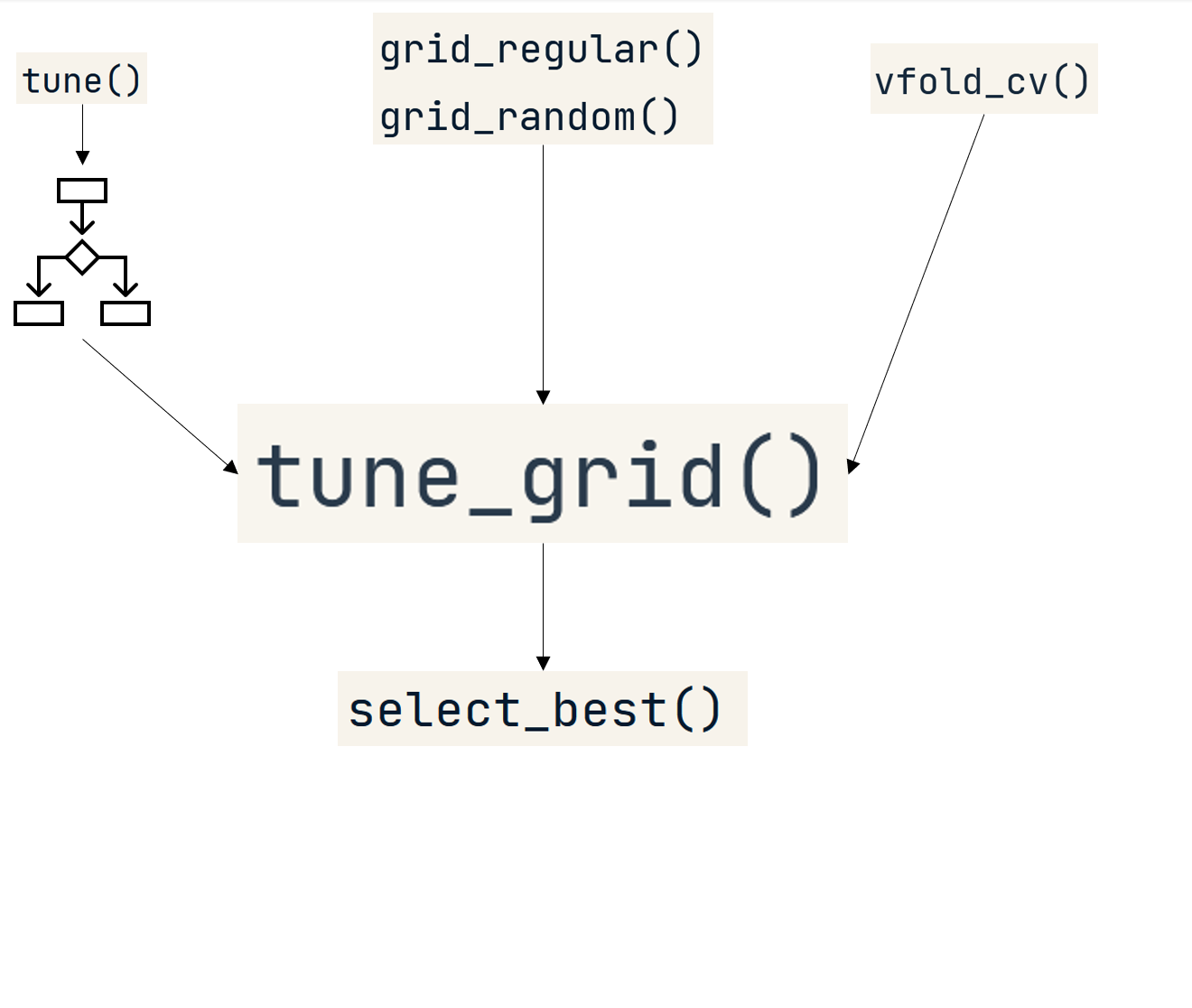

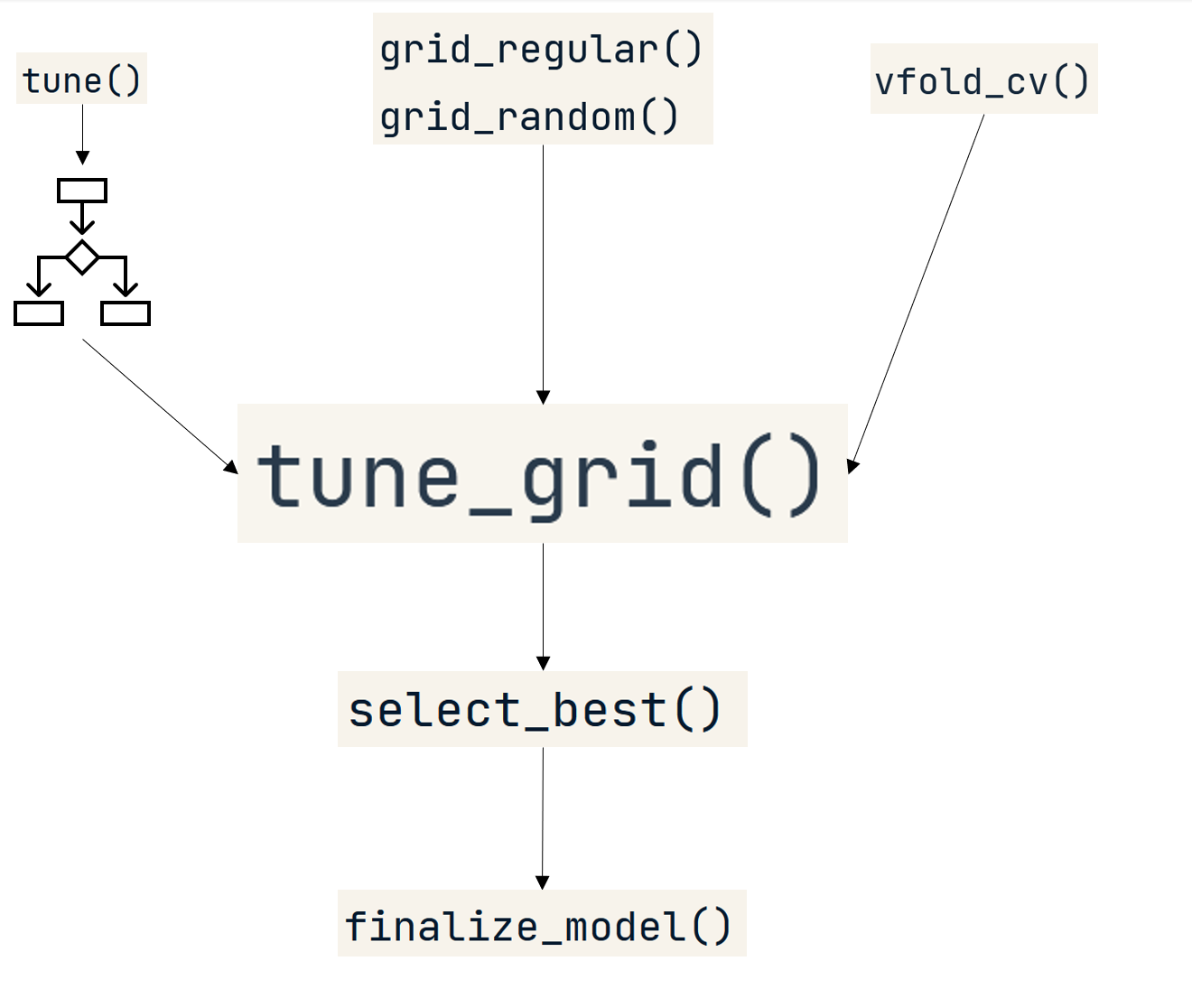

Tuning workflow


Step 1: Create the tuning spec

```r
library(tidymodels)

# Create the specification with placeholders
boost_spec <- boost_tree(
    trees = 500,
    learn_rate = tune(),
    tree_depth = tune(),
    sample_size = tune()) %>%
  set_mode("classification") %>%
  set_engine("xgboost")
boost_spec
```

```
Boosted Tree Model Specification (classification)

Main Arguments:
  trees = 500
  tree_depth = tune()
  learn_rate = tune()
  sample_size = tune()

Computational engine: xgboost
```

- The specification is built as usual
- Major difference: use `tune()` to create placeholders for the values to be tuned

Step 2: Create the tuning grid

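```r
# Create a regular grid
# (newer tidymodels releases use extract_parameter_set_dials(boost_spec)
#  in place of parameters(boost_spec))
tunegrid_boost <- grid_regular(parameters(boost_spec), levels = 2)
tunegrid_boost
```

```
# A tibble: 8 x 3
  tree_depth   learn_rate sample_size
       <int>        <dbl>       <dbl>
1          1 0.0000000001         0.1
2         15 0.0000000001         0.1
3          1 0.1                  0.1
4         15 0.1                  0.1
5          1 0.0000000001         1
6         15 0.0000000001         1
7          1 0.1                  1
8         15 0.1                  1
```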
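`grid_regular()` places `levels` evenly spaced values on each parameter's range and crosses them. With `levels = 2`, that gives the two endpoints of every range and 2^3 = 8 combinations. The base-R sketch below rebuilds the same eight rows with `expand.grid()`, using the ranges visible in the output above (note that `learn_rate` is spaced on the log10 scale). It mirrors the result, not dials' internal code.

```r
# Sketch: a regular grid with levels = 2 is the Cartesian product of each
# parameter's two endpoints. Ranges are read off the tibble shown above.
levels_per_param <- list(
  tree_depth  = c(1L, 15L),      # integer tree depth
  learn_rate  = 10^c(-10, -1),   # endpoints of the log10 range: 1e-10, 0.1
  sample_size = c(0.1, 1)        # proportion of rows sampled per tree
)

# expand.grid() varies the first column fastest, matching the row order above.
regular <- expand.grid(levels_per_param)
nrow(regular)   # 2^3 combinations
regular
```

With `levels = 3`, the same product would have 3^3 = 27 rows. This is why regular grids become expensive quickly as more parameters are tuned.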

```r
# Create a random grid
grid_random(parameters(boost_spec), size = 8)
```

```
# A tibble: 8 x 3
  tree_depth    learn_rate sample_size
       <int>         <dbl>       <dbl>
1         11 0.0000000249        0.858
2         12 0.00000000392       0.856
3         15 0.0000000131        0.220
4         15 0.0000216           0.125
5         10 0.00000000537       0.759
6         14 0.0395              0.270
7          2 0.000000828         0.904
8          9 0.0000254           0.473
```
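The random grid's `learn_rate` values span many orders of magnitude because the parameter lives on a log10 scale. The sketch below assumes that each parameter is drawn uniformly on its own (transformed) scale, using the same ranges as the regular grid. It approximates the behavior of `grid_random()` rather than reproducing its code or its output.

```r
# Sketch of random-grid sampling (assumption: uniform draws on each
# parameter's own scale; learn_rate's scale is log10).
set.seed(123)
size <- 8
random_grid <- data.frame(
  tree_depth  = sample(1:15, size, replace = TRUE),       # integers 1..15
  learn_rate  = 10^runif(size, min = -10, max = -1),      # log-uniform
  sample_size = runif(size, min = 0.1, max = 1)           # uniform proportion
)
random_grid
```

Drawing `learn_rate` uniformly on the raw scale between 1e-10 and 0.1 instead would put about 90% of the draws above 0.01, which would leave the small learning rates almost unexplored.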

Step 3: The tuning

Arguments for tune_grid():

- Dummy specification containing the `tune()` placeholders
- Model formula
- Resamples/folds
- Parameter grid
- Metric list, created with `metric_set()`

Function call:

```r
# Tune along the grid
tune_results <- tune_grid(boost_spec,
                          still_customer ~ .,
                          resamples = vfold_cv(customers_train, v = 6),
                          grid = tunegrid_boost,
                          metrics = metric_set(roc_auc))
```
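Conceptually, `tune_grid()` fits one model per grid row and fold, scores it on the held-out fold, and averages the scores for each row. The base-R sketch below shows only that loop. `grid_search_cv()` and the scorer `toy_score()` are hypothetical stand-ins: the scorer ignores the data so that the sketch runs end to end. The real function does considerably more.

```r
# Hypothetical sketch of grid search with v-fold cross-validation.
# fit_and_score(params, train_rows, test_rows) stands in for "fit with these
# hyperparameters on train_rows, then compute the metric on test_rows".
grid_search_cv <- function(grid, n_rows, v, fit_and_score) {
  folds <- sample(rep(seq_len(v), length.out = n_rows))  # random fold ids
  grid$mean_metric <- vapply(seq_len(nrow(grid)), function(i) {
    params <- grid[i, , drop = FALSE]
    fold_scores <- vapply(seq_len(v), function(k) {
      fit_and_score(params,
                    train_rows = which(folds != k),
                    test_rows  = which(folds == k))
    }, numeric(1))
    mean(fold_scores)          # average over the v held-out folds
  }, numeric(1))
  grid$n <- v
  grid
}

# Toy scorer (hypothetical): rewards larger learn_rate and depth, ignores data.
toy_score <- function(params, train_rows, test_rows) {
  0.5 + params$learn_rate + 0.001 * params$tree_depth
}

set.seed(1)
grid <- expand.grid(tree_depth = c(1L, 15L), learn_rate = c(1e-10, 0.1))
res <- grid_search_cv(grid, n_rows = 120, v = 6, fit_and_score = toy_score)
res[order(-res$mean_metric), ]
```

For the call above, 8 grid rows × 6 folds means 48 model fits, each with 500 trees.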

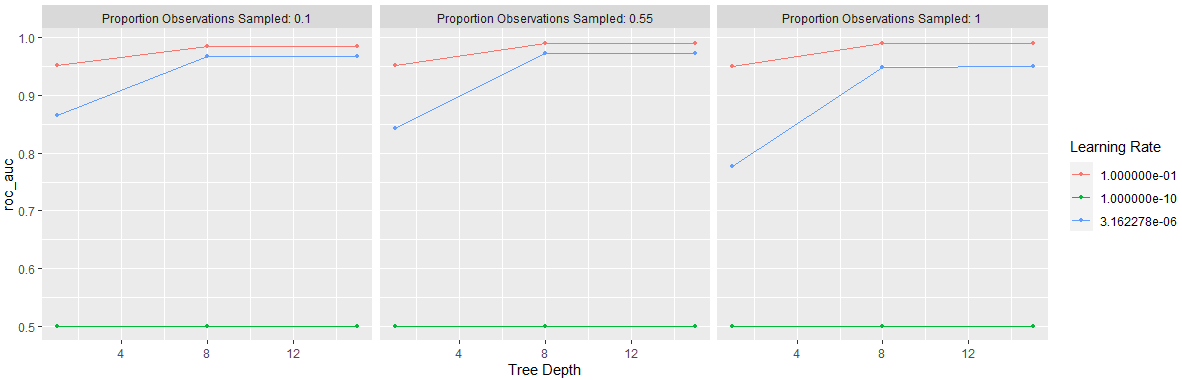

Visualize the result

```r
# Plot the results
autoplot(tune_results)
```

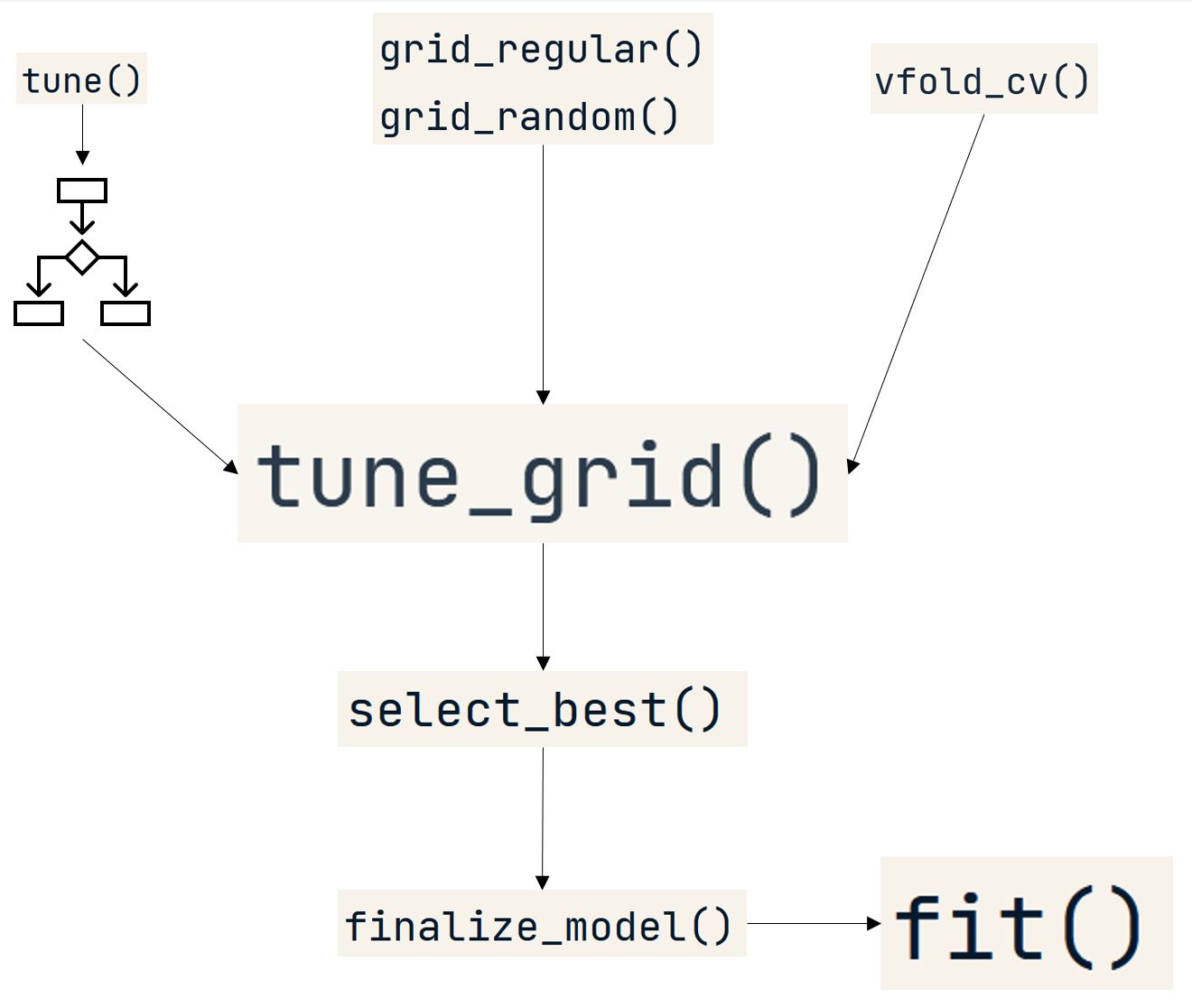

Step 4: Finalize the model

```r
# Select the final hyperparameters
best_params <- select_best(tune_results, metric = "roc_auc")
best_params
```

```
# A tibble: 1 x 4
  tree_depth learn_rate sample_size .config
       <int>      <dbl>       <dbl> <chr>
1          8        0.1        0.55 Model17
```

```r
# Finalize the specification
final_spec <- finalize_model(boost_spec, best_params)
final_spec
```

```
Boosted Tree Model Specification

Main Arguments:
  trees = 500
  tree_depth = 8
  learn_rate = 0.1
  sample_size = 0.55

Computational engine: xgboost
```
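`select_best()` picks the row with the best mean metric, and `finalize_model()` replaces the `tune()` placeholders with those values. The sketch below imitates both steps with a plain list standing in for the model specification. The list layout, the `"tune()"` marker string, the helper names and the results table are all hypothetical.

```r
# Hypothetical stand-in for a model spec: "tune()" marks a placeholder.
spec <- list(trees = 500, tree_depth = "tune()",
             learn_rate = "tune()", sample_size = "tune()")

# Pick the row with the highest value in the metric column.
select_best_row <- function(results, metric_col) {
  results[which.max(results[[metric_col]]), , drop = FALSE]
}

# Replace every placeholder that has a matching column in `best`.
finalize_spec <- function(spec, best) {
  for (p in names(spec)) {
    if (identical(spec[[p]], "tune()") && p %in% names(best)) {
      spec[[p]] <- best[[p]]
    }
  }
  spec
}

# Hypothetical tuning results (mean ROC AUC per configuration).
results <- data.frame(tree_depth   = c(1L, 8L, 15L),
                      learn_rate   = c(1e-10, 0.1, 0.1),
                      sample_size  = c(0.1, 0.55, 1),
                      mean_roc_auc = c(0.50, 0.97, 0.96))
best  <- select_best_row(results, "mean_roc_auc")
final <- finalize_spec(spec, best)
str(final)
```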

Last step: Train the final model

```r
# Train the final model on the training data
final_model <- final_spec %>%
  fit(formula = still_customer ~ ., data = customers_train)
final_model
```

```
Fit time:  2.3s
##### xgb.Booster
raw: 343.8 Kb
nfeatures : 37
evaluation_log:
 iter training_error
    1       0.046403
  100       0.002592
```

Your turn!
