Bias-variance tradeoff

Machine Learning with Tree-Based Models in R

Sandro Raabe

Data Scientist

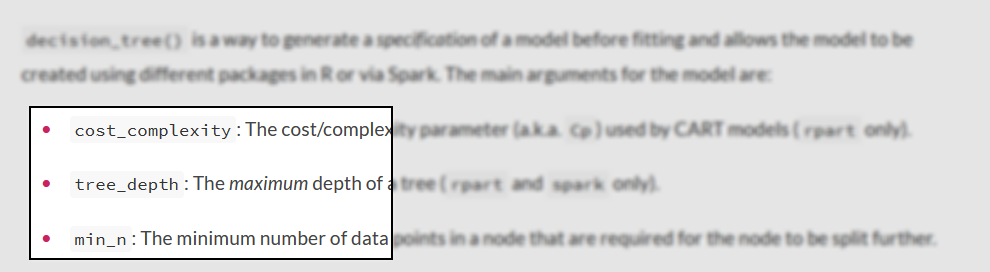

Hyperparameters

- Chosen by the modeler, not estimated when the model is fit

- e.g. tree_depth: check the documentation!

```r
?decision_tree
```
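For the default rpart engine, parsnip passes tree_depth to rpart's maxdepth control parameter. The minimal sketch below calls rpart directly on simulated data that stands in for training_data (an assumption; the course dataset is not reproduced). It shows that the value chosen by the modeler caps how deep the fitted tree can grow. The helper tree_depth_of() is hypothetical.

```r
library(rpart)

# Simulated stand-in for training_data (hypothetical, not the course data)
set.seed(1)
training_data <- data.frame(x1 = runif(300, 0, 10), x2 = runif(300, 0, 10))
training_data$final_grade <- sin(training_data$x1) + 0.3 * training_data$x2 +
  rnorm(300, sd = 0.5)

# Hypothetical helper: rpart numbers nodes so that node k has children
# 2k and 2k + 1, hence a node's depth (root = 0) is floor(log2(k))
tree_depth_of <- function(fit) {
  node_ids <- as.integer(rownames(fit$frame))
  max(floor(log2(node_ids)))
}

# Same model family, two hyperparameter values chosen by the modeler;
# cp = 0 and minsplit = 2 keep other controls from stopping growth early
shallow_fit <- rpart(final_grade ~ ., data = training_data,
                     control = rpart.control(maxdepth = 2, cp = 0, minsplit = 2))
deep_fit <- rpart(final_grade ~ ., data = training_data,
                  control = rpart.control(maxdepth = 15, cp = 0, minsplit = 2))

tree_depth_of(shallow_fit)  # at most 2
tree_depth_of(deep_fit)     # greater than 2
```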

Simple model

```r
library(tidymodels)  # attaches parsnip (decision_tree) and yardstick (mae)

simple_spec <- decision_tree(tree_depth = 2) %>%
  set_mode("regression")

simple_spec %>% fit(final_grade ~ .,
                    data = training_data)
```

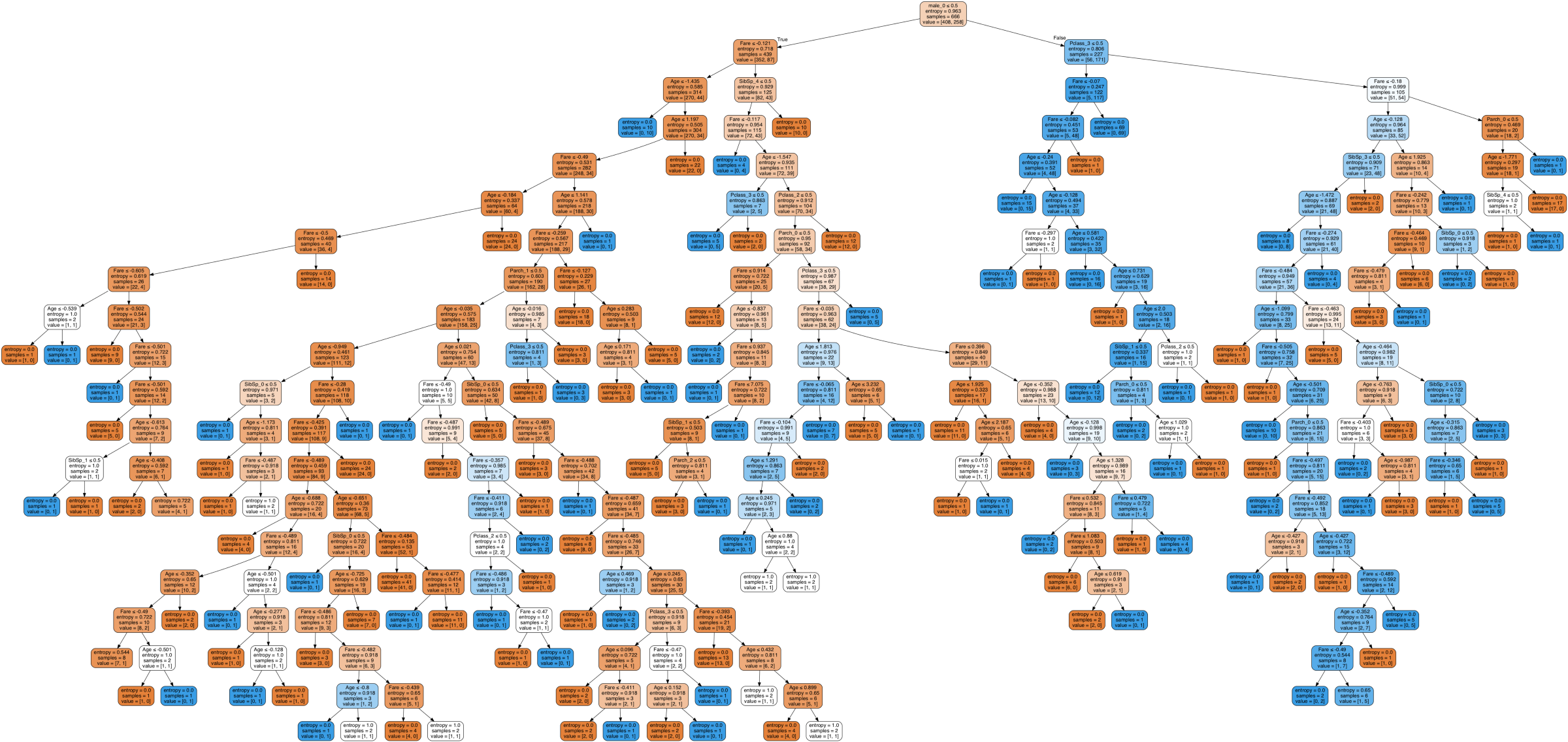
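Why is a depth-2 tree "simple"? Every split is binary, so a tree of depth d has at most 2^d leaves, and a regression tree predicts a single constant (the leaf mean) in each leaf. This sketch again uses rpart directly on simulated data (both assumptions) and checks that the depth-2 model can output at most four distinct grades.

```r
library(rpart)

# Simulated stand-in for training_data (hypothetical)
set.seed(2)
training_data <- data.frame(x1 = runif(300, 0, 10), x2 = runif(300, 0, 10))
training_data$final_grade <- sin(training_data$x1) + 0.3 * training_data$x2 +
  rnorm(300, sd = 0.5)

# Depth-2 regression tree, comparable to decision_tree(tree_depth = 2)
simple_fit <- rpart(final_grade ~ ., data = training_data,
                    control = rpart.control(maxdepth = 2))

# One prediction value per leaf, so at most 2^2 = 4 distinct predictions
n_leaves <- sum(simple_fit$frame$var == "<leaf>")
n_distinct_preds <- length(unique(predict(simple_fit, training_data)))
c(leaves = n_leaves, distinct_predictions = n_distinct_preds)
```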

Complex model

```r
complex_spec <- decision_tree(tree_depth = 15) %>%
  set_mode("regression")

complex_spec %>% fit(final_grade ~ .,
                     data = training_data)
```
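By the same arithmetic, a depth-15 tree may have up to 2^15 leaves, far more than the rows of a typical training set, so it can carve out leaves that hold only one or two students. The hedged sketch below counts the leaves and the average number of training rows per leaf. It uses simulated data, and cp = 0 with minsplit = 2 is an assumption chosen so that depth is the main limit on growth.

```r
library(rpart)

# Simulated stand-in for training_data (hypothetical)
set.seed(3)
training_data <- data.frame(x1 = runif(300, 0, 10), x2 = runif(300, 0, 10))
training_data$final_grade <- sin(training_data$x1) + 0.3 * training_data$x2 +
  rnorm(300, sd = 0.5)

# Deep tree; cp = 0 and minsplit = 2 let the depth limit be the main brake
complex_fit <- rpart(final_grade ~ ., data = training_data,
                     control = rpart.control(maxdepth = 15, cp = 0, minsplit = 2))

# frame$n holds the number of training rows that reach each node
leaves <- complex_fit$frame[complex_fit$frame$var == "<leaf>", ]
n_leaves <- nrow(leaves)
avg_rows_per_leaf <- mean(leaves$n)
c(leaves = n_leaves, avg_rows_per_leaf = avg_rows_per_leaf)
```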

Complex model - overfitting - high variance

Predictions on the training set: well done!

```r
mae(train_results,
    estimate = .pred,
    truth = final_grade)
```

```
# A tibble: 1 x 3
  .metric .estimator .estimate
  <chr>   <chr>          <dbl>
1 mae     standard       0.204
```

Predictions on the test set: not even close!

```r
mae(test_results,
    estimate = .pred,
    truth = final_grade)
```

```
# A tibble: 1 x 3
  .metric .estimator .estimate
  <chr>   <chr>          <dbl>
1 mae     standard       0.947
```

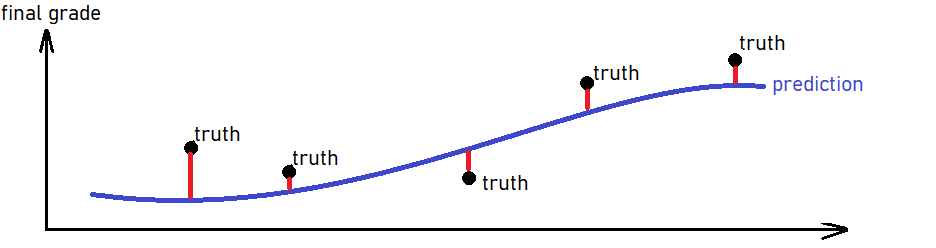
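The MAE returned by mae() is the mean absolute difference between truth and prediction, (1/n) Σ |y_i - ŷ_i|. Computing it by hand for a deep tree on a simulated train/test split should reproduce the overfitting pattern: a tiny training error and a much larger test error. The data, the 300/100 split, and the helper mae_manual() are all hypothetical.

```r
library(rpart)

# Simulated data split into training and test sets (hypothetical)
set.seed(4)
sim <- data.frame(x1 = runif(400, 0, 10), x2 = runif(400, 0, 10))
sim$final_grade <- sin(sim$x1) + 0.3 * sim$x2 + rnorm(400, sd = 0.5)
train_idx <- sample(nrow(sim), 300)
training_data <- sim[train_idx, ]
testing_data  <- sim[-train_idx, ]

# Hypothetical helper: mean absolute error, the quantity yardstick::mae() reports
mae_manual <- function(truth, estimate) mean(abs(truth - estimate))

complex_fit <- rpart(final_grade ~ ., data = training_data,
                     control = rpart.control(maxdepth = 15, cp = 0, minsplit = 2))

train_mae <- mae_manual(training_data$final_grade, predict(complex_fit, training_data))
test_mae  <- mae_manual(testing_data$final_grade,  predict(complex_fit, testing_data))
c(train = train_mae, test = test_mae)
```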

Simple model - underfitting - high bias

Large errors on both the training and the test set:

```r
bind_rows(training = mae(train_results, estimate = .pred, truth = final_grade),
          test = mae(test_results, estimate = .pred, truth = final_grade),
          .id = "dataset")
```

```
# A tibble: 2 x 4
  dataset  .metric .estimator .estimate
  <chr>    <chr>   <chr>          <dbl>
1 training mae     standard       0.754
2 test     mae     standard       0.844
```

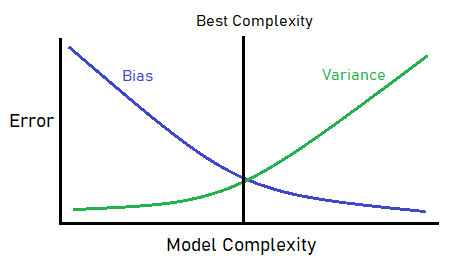
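The underfitting signature is a large error on both sets with only a small gap between them, while the overfitting signature is a small training error with a large gap. The sketch below puts both models side by side in a table shaped like the bind_rows(..., .id = "dataset") result above. It uses base R instead of dplyr so it runs without extra packages; the simulated data and the helpers mae_manual() and fit_depth() are hypothetical.

```r
library(rpart)

# Simulated data split into training and test sets (hypothetical)
set.seed(5)
sim <- data.frame(x1 = runif(400, 0, 10), x2 = runif(400, 0, 10))
sim$final_grade <- sin(sim$x1) + 0.3 * sim$x2 + rnorm(400, sd = 0.5)
train_idx <- sample(nrow(sim), 300)
training_data <- sim[train_idx, ]
testing_data  <- sim[-train_idx, ]

mae_manual <- function(truth, estimate) mean(abs(truth - estimate))

# Hypothetical helper: fit a regression tree limited only by depth
fit_depth <- function(depth) {
  rpart(final_grade ~ ., data = training_data,
        control = rpart.control(maxdepth = depth, cp = 0, minsplit = 2))
}
simple_fit  <- fit_depth(2)
complex_fit <- fit_depth(15)

# One row per dataset and model, like bind_rows(..., .id = "dataset")
results <- expand.grid(dataset = c("training", "test"),
                       model = c("simple", "complex"),
                       stringsAsFactors = FALSE)
results$mae <- mapply(function(ds, m) {
  fit  <- if (m == "simple") simple_fit else complex_fit
  data <- if (ds == "training") training_data else testing_data
  mae_manual(data$final_grade, predict(fit, data))
}, results$dataset, results$model)
results
```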

The bias-variance tradeoff

- Simple models -> high bias

- Complex models -> high variance

- Lowering one usually raises the other: a tradeoff between bias and variance

- Build models around the sweet spot, where the error on new data is lowest
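The sweet spot can be located empirically by sweeping the hyperparameter and tracking both errors. In this hedged sketch (simulated data again; the depth grid 1 to 12 is an arbitrary choice), the training MAE should fall steadily as depth grows, while the test MAE typically falls at first and then rises once the tree starts fitting noise. Reading the best depth off the test set is done here only to show the tradeoff; cross-validation, used in the next section, is the safer way to choose it.

```r
library(rpart)

# Simulated data split into training and test sets (hypothetical)
set.seed(6)
sim <- data.frame(x1 = runif(400, 0, 10), x2 = runif(400, 0, 10))
sim$final_grade <- sin(sim$x1) + 0.3 * sim$x2 + rnorm(400, sd = 0.5)
train_idx <- sample(nrow(sim), 300)
training_data <- sim[train_idx, ]
testing_data  <- sim[-train_idx, ]

mae_manual <- function(truth, estimate) mean(abs(truth - estimate))

# Fit one tree per depth and record training and test MAE
depths <- 1:12
errors <- t(sapply(depths, function(d) {
  fit <- rpart(final_grade ~ ., data = training_data,
               control = rpart.control(maxdepth = d, cp = 0, minsplit = 2))
  c(depth = d,
    train = mae_manual(training_data$final_grade, predict(fit, training_data)),
    test  = mae_manual(testing_data$final_grade,  predict(fit, testing_data)))
}))
errors <- as.data.frame(errors)
errors

# Depth with the lowest test error in this simulation
errors$depth[which.min(errors$test)]
```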

Detecting overfitting

Out-of-sample/CV:

```r
collect_metrics(cv_fits)
```

```
# A tibble: 1 x 3
  .metric  mean     n
1 mae     2.432     5
```

- High CV error, compared with a small in-sample error

- Overfit / high variance

- Reduce complexity!

In-sample:

```r
mae(training_pred,
    estimate = .pred,
    truth = final_grade)
```

```
# A tibble: 1 x 2
  .metric .estimate
1 mae         0.228
```

- Small training error

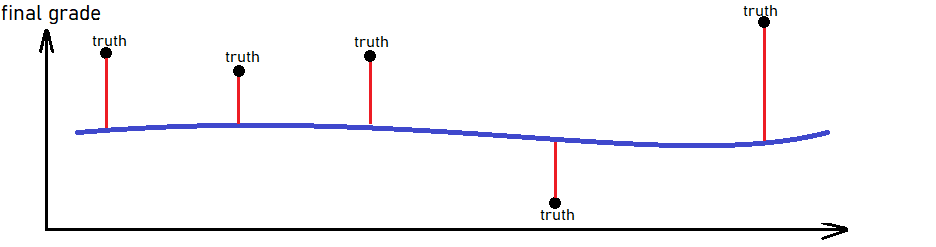
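collect_metrics(cv_fits) reports the MAE averaged over the resampling folds (n = 5 here). In tidymodels the folds usually come from vfold_cv() and the fits from fit_resamples(). The base-R sketch below (simulated data and random fold assignment are assumptions) does the same by hand for a deep tree: it fits on four folds, scores the held-out fold, and averages. It then compares that CV error with the in-sample error of the same model.

```r
library(rpart)

# Simulated stand-in for training_data (hypothetical)
set.seed(7)
training_data <- data.frame(x1 = runif(300, 0, 10), x2 = runif(300, 0, 10))
training_data$final_grade <- sin(training_data$x1) + 0.3 * training_data$x2 +
  rnorm(300, sd = 0.5)

mae_manual <- function(truth, estimate) mean(abs(truth - estimate))
deep_control <- rpart.control(maxdepth = 15, cp = 0, minsplit = 2)

# Randomly assign each row to one of 5 equally sized folds
k <- 5
fold_id <- sample(rep(1:k, length.out = nrow(training_data)))

# Out-of-sample MAE: fit on k - 1 folds, evaluate on the held-out fold
fold_mae <- sapply(1:k, function(i) {
  fit <- rpart(final_grade ~ ., data = training_data[fold_id != i, ],
               control = deep_control)
  held_out <- training_data[fold_id == i, ]
  mae_manual(held_out$final_grade, predict(fit, held_out))
})
cv_mae <- mean(fold_mae)

# In-sample MAE of the same model fitted to all rows
full_fit <- rpart(final_grade ~ ., data = training_data, control = deep_control)
train_mae <- mae_manual(training_data$final_grade, predict(full_fit, training_data))

c(cv = cv_mae, training = train_mae)  # a large gap signals overfitting
```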

Detecting underfitting

In-sample:

```r
mae(training_pred, estimate = .pred, truth = final_grade)
```

```
# A tibble: 1 x 2
  .metric .estimate
  <chr>       <dbl>
1 mae         2.432
```

- Large in-sample/training error

- Underfit / high bias

- Increase complexity!
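The two checks can be condensed into a rough rule of thumb. The function diagnose_fit() below is hypothetical, and its thresholds (target_mae, gap_ratio) are arbitrary values the modeler has to pick for the problem at hand; it only encodes the logic of these slides and is not a standard test. The example calls reuse the numbers shown above with an assumed acceptable MAE of 1.

```r
# Hypothetical rule of thumb; thresholds are illustrative, not standard
diagnose_fit <- function(train_mae, cv_mae, target_mae, gap_ratio = 2) {
  if (train_mae > target_mae) {
    # Large in-sample error: the model cannot even fit the training data
    "underfit / high bias: increase complexity"
  } else if (cv_mae > target_mae && cv_mae > gap_ratio * train_mae) {
    # Small in-sample error but large CV error: the model memorized noise
    "overfit / high variance: reduce complexity"
  } else {
    "near the sweet spot"
  }
}

diagnose_fit(train_mae = 0.228, cv_mae = 2.432, target_mae = 1)  # overfit
diagnose_fit(train_mae = 2.432, cv_mae = NA, target_mae = 1)     # underfit
diagnose_fit(train_mae = 0.5, cv_mae = 0.6, target_mae = 1)      # sweet spot
```

The CV value is only consulted when the training error is acceptable, which is why the underfitting call can pass NA.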

Let's trade off!
