Task graphs and scheduling methods

Parallel Programming with Dask in Python

James Fulton

Climate Informatics Researcher

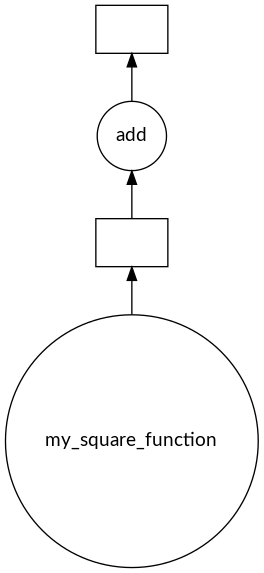

Visualizing a task graph

# Create 2 delayed objects
delayed_num1 = delayed(my_square_function)(3)
delayed_num2 = delayed(my_square_function)(4)
# Add them
result = delayed_num1 + delayed_num2
# Plot the task graph
result.visualize()

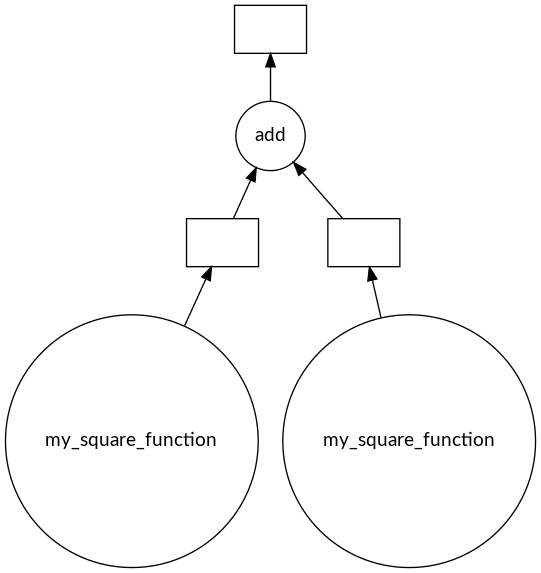

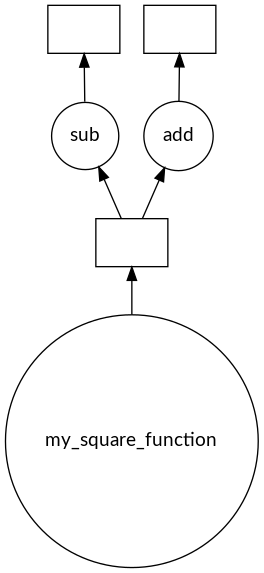
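A self-contained version of the snippet above might look like the following sketch. The slide does not show the body of `my_square_function`, so the definition here is an assumption. `visualize()` also needs the optional graphviz dependency, so this sketch computes the result instead of plotting it.

```python
from dask import delayed


def my_square_function(x):
    # Assumed implementation; the slide does not show the function body
    return x ** 2


# Create 2 delayed objects (nothing is executed yet)
delayed_num1 = delayed(my_square_function)(3)
delayed_num2 = delayed(my_square_function)(4)

# Adding delayed objects creates a third task that depends on both
result = delayed_num1 + delayed_num2

# compute() runs the task graph: 3**2 + 4**2
total = result.compute()
print(total)  # 25
```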

Overlapping task graph

delayed_intermediate = delayed(my_square_function)(3)

# These two results both use delayed_intermediate

delayed_result1 = delayed_intermediate - 5

delayed_result2 = delayed_intermediate + 4

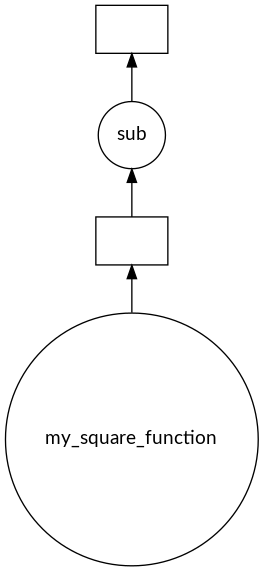

Overlapping task graph

delayed_result1.visualize()

delayed_result2.visualize()

Overlapping task graph

# Plot the task graph

dask.visualize(delayed_result1, delayed_result2)

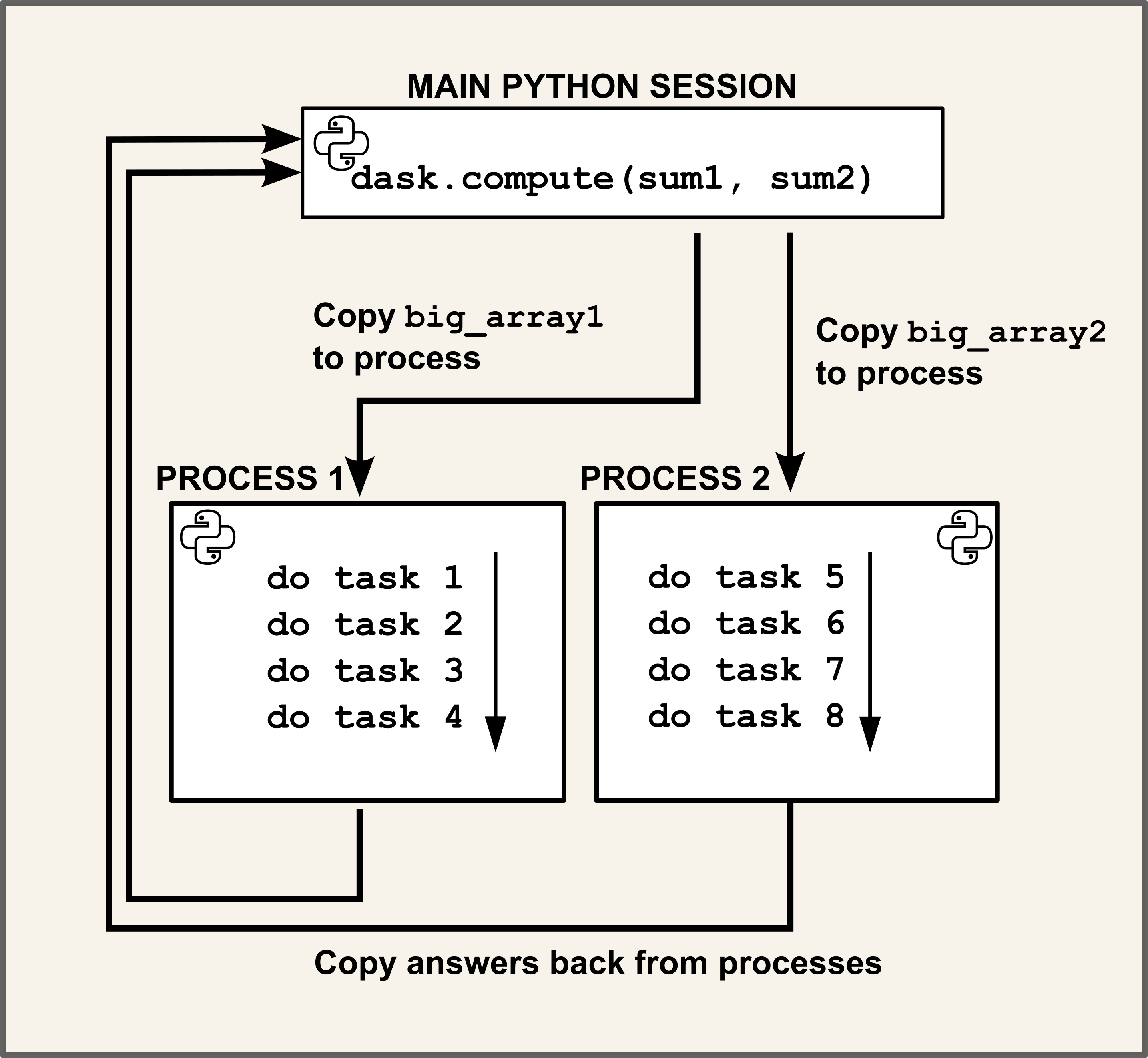

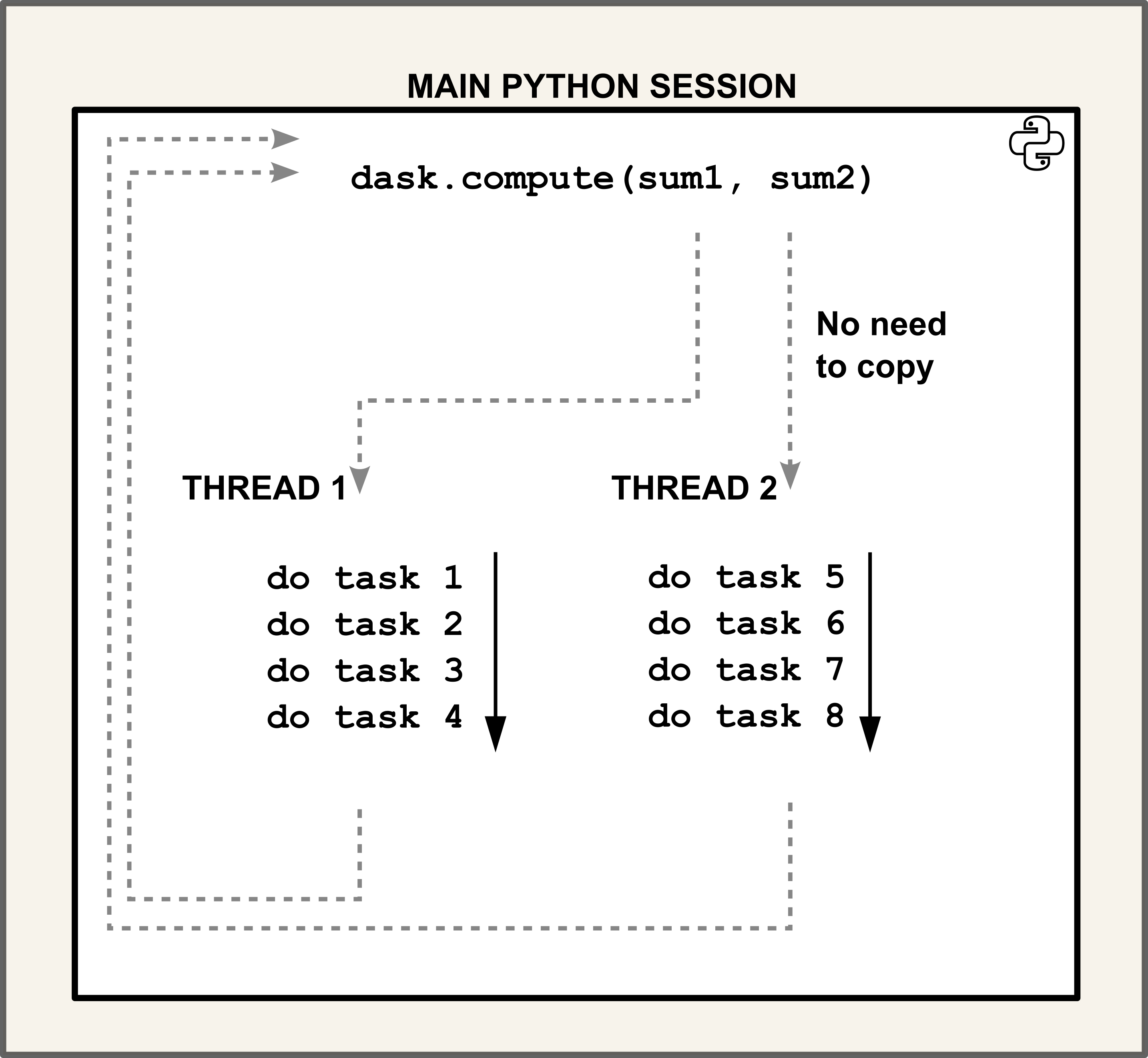
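One practical consequence of the combined graph is that the shared task only needs to run once. The sketch below illustrates this by counting executions. The `calls` list and the side effect inside `my_square_function` are assumptions added purely for the demonstration.

```python
import dask
from dask import delayed

calls = []  # records each execution of the shared task


def my_square_function(x):
    # Assumed implementation, with a side effect so executions can be counted
    calls.append(x)
    return x ** 2


delayed_intermediate = delayed(my_square_function)(3)
delayed_result1 = delayed_intermediate - 5
delayed_result2 = delayed_intermediate + 4

# Computing both together: the shared task runs once
result1, result2 = dask.compute(
    delayed_result1, delayed_result2, scheduler="threads"
)
calls_together = len(calls)

# Computing separately: each call re-runs the shared task
calls.clear()
delayed_result1.compute(scheduler="threads")
delayed_result2.compute(scheduler="threads")
calls_separately = len(calls)

print(result1, result2, calls_together, calls_separately)  # 4 13 1 2
```

The threaded scheduler is used so that the counter lives in the same memory as the main session.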

Multi-threading vs. parallel processing

Moving data

Parallel processing

- Processes have their own RAM space

Multi-threading

- Threads use the same RAM space

Multi-threading vs. parallel processing

# Run a sum on two big arrays

sum1 = delayed(np.sum)(big_array1)

sum2 = delayed(np.sum)(big_array2)

# Compute using processes

dask.compute(sum1, sum2, scheduler='processes')

- Slow using parallel processing: each big array must be copied to a worker process

Multi-threading vs. parallel processing

# Run a sum on two big arrays

sum1 = delayed(np.sum)(big_array1)

sum2 = delayed(np.sum)(big_array2)

# Compute using threads

dask.compute(sum1, sum2, scheduler='threads')

- Fast using multi-threading: threads read the arrays directly from shared memory
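To get a feel for the cost of moving data, the sketch below measures how many bytes one array occupies once serialized, which is roughly what has to be shipped to a separate process. The array sizes are hypothetical stand-ins for the slide's `big_array1` and `big_array2`. Plain `pickle` is used only as an illustration, and Dask's actual serialization path may differ.

```python
import pickle

import dask
import numpy as np
from dask import delayed

# Hypothetical stand-ins for the big arrays on the slide
big_array1 = np.ones(1_000_000)
big_array2 = np.ones(1_000_000)

# A separate process cannot see this memory, so the array must be
# serialized and copied before the worker can use it
payload_bytes = len(pickle.dumps(big_array1))
print(f"array: {big_array1.nbytes} bytes, pickled: {payload_bytes} bytes")

sum1 = delayed(np.sum)(big_array1)
sum2 = delayed(np.sum)(big_array2)

# Threads share memory with the main session, so nothing is copied
total1, total2 = dask.compute(sum1, sum2, scheduler="threads")
print(total1, total2)
```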

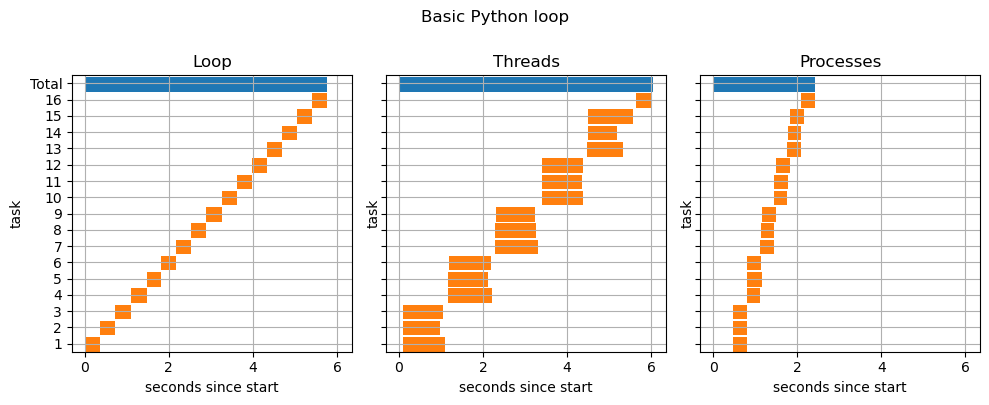

The GIL

Global interpreter lock (GIL): only one thread can execute Python code at a time

def sum_to_n(n):
    """Sums numbers from 0 to n"""
    total = 0
    for i in range(n + 1):
        total += i
    return total

- Multi-threading won't help here

- Parallel processing will

sum1 = delayed(sum_to_n)(1000)

sum2 = delayed(sum_to_n)(1000)
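A rough way to check the multi-threading claim is to time the same two tasks on a single thread and on the threaded scheduler. This is a sketch, not a benchmark: the value of `n` is an assumption, chosen larger than the slide's 1000 so the run time is measurable, and the timings will vary by machine. On a standard CPython build, the two timings would be expected to be similar.

```python
import time

import dask
from dask import delayed


def sum_to_n(n):
    """Sums numbers from 0 to n"""
    total = 0
    for i in range(n + 1):
        total += i
    return total


n = 2_000_000  # assumed size, larger than the slide's 1000
sum1 = delayed(sum_to_n)(n)
sum2 = delayed(sum_to_n)(n)

timings = {}
results = {}
# "synchronous" runs every task in the main thread, one after another
for scheduler in ("synchronous", "threads"):
    start = time.perf_counter()
    results[scheduler] = dask.compute(sum1, sum2, scheduler=scheduler)
    timings[scheduler] = time.perf_counter() - start

for name, seconds in timings.items():
    print(f"{name}: {seconds:.3f} s")
```

Adding `"processes"` to the tuple would test the second claim. In a script, that call usually needs to sit under an `if __name__ == "__main__":` guard on platforms where worker processes are started as fresh interpreters.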

Example timings - GIL

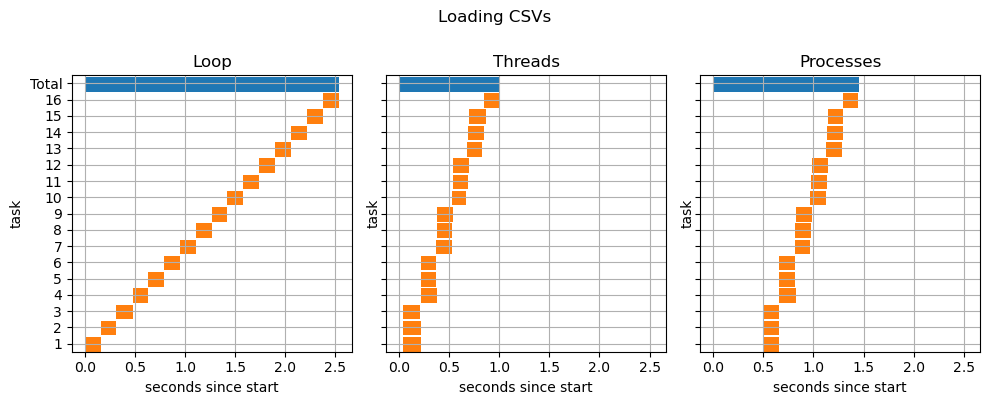

Functions which release the GIL

- E.g. the pd.read_csv() function releases the GIL

df1 = delayed(pd.read_csv)('file1.csv')

df2 = delayed(pd.read_csv)('file2.csv')
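The snippet above can be tried end to end with the sketch below. The two CSV files and their contents are hypothetical and are written to a temporary directory only so that the example is self-contained.

```python
import os
import tempfile

import dask
import pandas as pd
from dask import delayed

# Write two small hypothetical CSV files so the example is self-contained
tmp_dir = tempfile.mkdtemp()
paths = []
for i in (1, 2):
    path = os.path.join(tmp_dir, f"file{i}.csv")
    pd.DataFrame({"a": range(3), "b": range(3, 6)}).to_csv(path, index=False)
    paths.append(path)

# Delay both reads; no file is opened yet
df1 = delayed(pd.read_csv)(paths[0])
df2 = delayed(pd.read_csv)(paths[1])

# Load both files with the threaded scheduler
loaded1, loaded2 = dask.compute(df1, df2, scheduler="threads")
print(loaded1.shape, loaded2.shape)  # (3, 2) (3, 2)
```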

Example timings - Loading data

Summary

Threads

- Are very fast to initiate

- Share memory space with main session

- No memory transfer needed

- Limited by the GIL, which allows only one thread to execute Python code at a time

Processes

- Take time and memory to set up

- Have separate memory pools

- Very slow to transfer data between themselves and to the main Python session

- Each has its own GIL, so processes don't need to take turns executing the code
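In practice, the trade-offs above come down to which scheduler a computation runs on. The sketch below shows two ways of choosing one: per call, or for a whole block through `dask.config.set`. The `double` function is a hypothetical task used only for illustration.

```python
import dask
from dask import delayed


def double(x):
    # Hypothetical task used only for illustration
    return 2 * x


tasks = [delayed(double)(i) for i in range(4)]

# Option 1: choose the scheduler for a single call
per_call = dask.compute(*tasks, scheduler="threads")

# Option 2: choose it for every compute inside a block
with dask.config.set(scheduler="synchronous"):
    in_block = dask.compute(*tasks)

print(per_call, in_block)  # (0, 2, 4, 6) (0, 2, 4, 6)
```

Passing `"processes"` in either place selects process-based execution instead. Whether that helps depends on how much data has to be moved and on whether the tasks hold the GIL.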

Let's practice!
