Training and test sets

Forecasting in R

Rob J. Hyndman

Professor of Statistics at Monash University

Training and test sets

- The test set must not be used for any aspect of calculating forecasts

- Build forecasts using the training set

- A model that fits the training data well will not necessarily forecast well

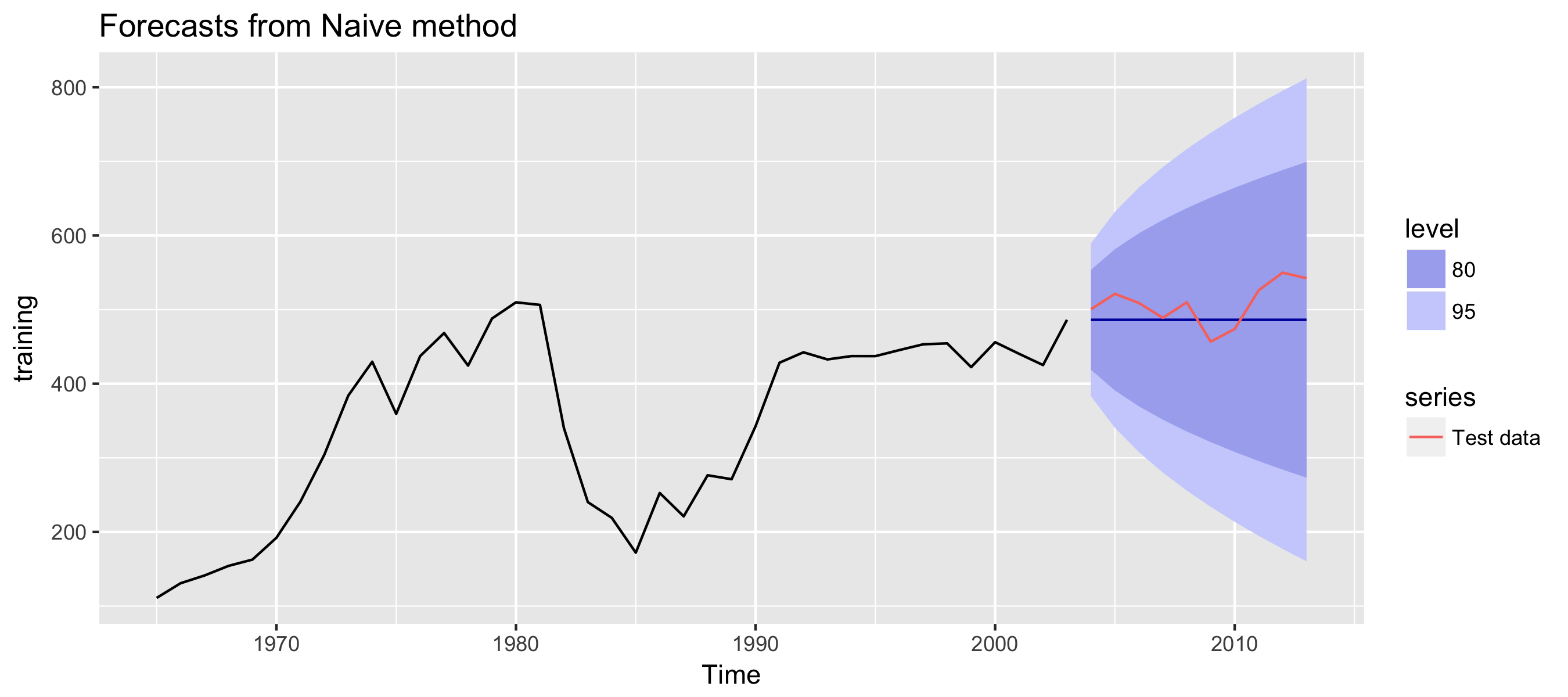

Example: Saudi Arabian oil production

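```r
library(fpp2)  # assumed source of the oil data; also loads forecast and ggplot2

# Hold out 2004 onwards as the test set
training <- window(oil, end = 2003)
test <- window(oil, start = 2004)

# Naive forecasts for the next 10 years, built from the training set only
fc <- naive(training, h = 10)
autoplot(fc) + autolayer(test, series = "Test data")
```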

Forecast errors

Forecast "error" = the difference between an observed value and its forecast in the test set.

Forecast errors $\neq$ residuals, which:

- are errors on the training set (vs. the test set)

- are based on one-step forecasts (vs. multi-step forecasts)

Compute accuracy using the forecast errors on the test data.
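To make the distinction between forecast errors and residuals concrete, here is a base-R sketch on a made-up toy series (the numbers are invented for illustration, not taken from the oil data). It uses the naive method so that no packages are needed: each forecast is the last training observation, and each one-step fitted value is simply the previous observation, so the training residuals are the first differences of the training data.

```r
# Toy annual series (invented numbers, for illustration only)
y <- ts(c(10, 12, 11, 15, 14, 16, 18, 17), start = 2000)

training <- window(y, end = 2005)   # 2000-2005
test     <- window(y, start = 2006) # 2006-2007

# Naive method: every future value is forecast by the last training observation
h <- length(test)
last_obs <- training[length(training)]
fc_mean <- ts(rep(last_obs, h), start = start(test))

# Forecast errors: test observations minus multi-step forecasts
fc_errors <- test - fc_mean

# Residuals: one-step errors on the training set
# (for the naive method, fitted value = previous observation)
res <- diff(training)
```

Here the forecast errors are 2 and 1 (test set, forecasts 1 and 2 steps ahead), while the residuals are 2, -1, 4, -1, 2 (training set, all one step ahead).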

Measures of forecast accuracy

- Observation: $y_t$

- Forecast: $\hat{y}_t$

- Forecast error: $e_t = y_t - \hat{y}_t$

| Accuracy measure | Calculation |
|---|---|
| Mean absolute error | $\text{MAE} = \text{avg}(\lvert e_t \rvert)$ |
| Mean squared error | $\text{MSE} = \text{avg}(e_t^2)$ |
| Mean absolute percentage error | $\text{MAPE} = 100 \times \text{avg}\left(\left\lvert \frac{e_t}{y_t} \right\rvert\right)$ |
| Mean absolute scaled error | $\text{MASE} = \frac{\text{MAE}}{Q}$, where $Q$ is a scaling constant |
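The formulas in the table translate almost directly into base R. The sketch below uses `accuracy_measures()`, a hypothetical helper written for illustration (it is not part of the forecast package). It assumes a non-seasonal series and takes $Q$ to be the training-set mean absolute error of one-step naive forecasts, the usual scaling for non-seasonal data.

```r
# Hypothetical helper: the accuracy measures from the table above
# obs: test-set observations, fc: their forecasts, training: training data
accuracy_measures <- function(obs, fc, training) {
  e <- obs - fc                   # forecast errors e_t = y_t - yhat_t
  q <- mean(abs(diff(training)))  # Q: in-sample MAE of one-step naive forecasts
                                  # (assumes non-seasonal data)
  c(
    MAE  = mean(abs(e)),
    MSE  = mean(e^2),
    MAPE = 100 * mean(abs(e / obs)),
    MASE = mean(abs(e)) / q
  )
}

# Usage with invented toy numbers: errors are 2 and 1, Q = 2
m <- accuracy_measures(obs = c(18, 17), fc = c(16, 16),
                       training = c(10, 12, 11, 15, 14, 16))
# MAE = 1.5, MSE = 2.5, MAPE about 8.5, MASE = 0.75
```

Note that MAPE divides by $y_t$, so it is undefined when an observation is zero, while MASE stays well defined as long as the training data are not constant.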

The accuracy() command

```r
accuracy(fc, test)
```

```
                 ME  RMSE   MAE   MPE   MAPE   MASE   ACF1 Theil's U
Training set  9.874 52.56 39.43 2.507 12.571 1.0000 0.1802        NA
Test set     21.602 35.10 29.98 3.964  5.778 0.7603 0.4030     1.185
```
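As a quick check on how the MASE row is scaled, assume (as is standard for non-seasonal data) that $Q$ is the training-set MAE of one-step naive forecasts. For the naive method, the training residuals are exactly those one-step naive errors, so $Q$ equals the training MAE of 39.43 and the training-set MASE is 1. The test-set MASE is then the ratio of the two MAE values:

$$\text{MASE}_{\text{test}} = \frac{\text{MAE}_{\text{test}}}{Q} = \frac{29.98}{39.43} \approx 0.760$$

The output also reports RMSE rather than MSE; RMSE is $\sqrt{\text{MSE}}$, which puts it on the same scale as the data.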
