Feature engineering

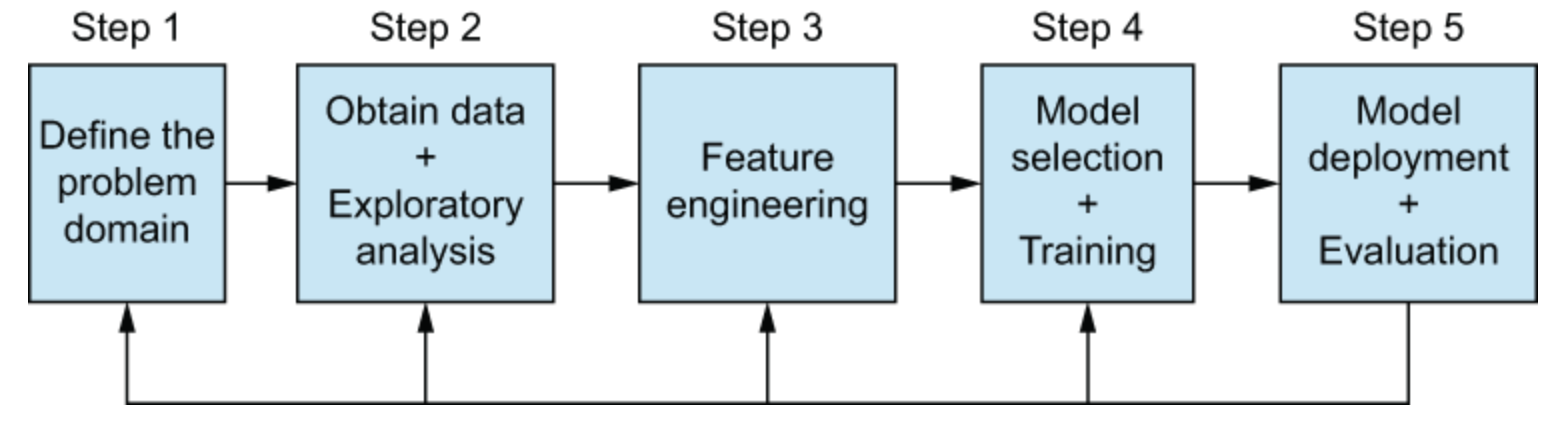

Developing Machine Learning Models for Production

Sinan Ozdemir

Data Scientist and Author

Introduction to feature engineering

- Transforming training data to maximize ML pipeline performance

- Reducing computational complexity

- Examples:

  - Aggregating data from multiple sources

  - Constructing new features

  - Applying feature transformations

1 https://www.manning.com/books/feature-engineering-bookcamp

Aggregating data from multiple sources

- Combining data from different datasets

- Using multiple types of data (e.g. numerical and categorical)

This helps by:

- Improving the accuracy of models

- Enabling the use of more complex models

Example of data aggregation

    import pandas as pd
    from sklearn.base import BaseEstimator, TransformerMixin

    class DataAggregator(BaseEstimator, TransformerMixin):
        def __init__(self):
            pass

        def fit(self, X, y=None):
            return self  # nothing to fit

        def transform(self, X, y=None):
            # Load data from multiple sources
            data1 = pd.read_csv('data1.csv')
            data2 = pd.read_csv('data2.csv')
            data3 = pd.read_csv('data3.csv')
            # Combine data from all sources (including X) into a single data frame
            # by stacking rows; ignore_index avoids duplicate row labels
            aggregated_data = pd.concat(
                [X, data1, data2, data3], axis=0, ignore_index=True)
            return aggregated_data  # Return aggregated data
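Sources that describe the same entities are often combined on a shared key instead of being stacked row-wise. The sketch below is one possible illustration, not the course's method: the `orders` and `customers` tables and all of their column names are hypothetical.

```python
import pandas as pd

# Hypothetical tables: numerical order data and categorical customer data
orders = pd.DataFrame({
    "customer_id": [1, 2, 2, 3],
    "amount": [20.0, 35.5, 12.0, 50.0],
})
customers = pd.DataFrame({
    "customer_id": [1, 2, 3],
    "segment": ["retail", "wholesale", "retail"],
})

# Summarize orders to one row per customer
order_stats = (
    orders.groupby("customer_id")["amount"]
    .agg(total_amount="sum", n_orders="count")
    .reset_index()
)

# Left join keeps every customer and aligns rows on the shared key
features = customers.merge(order_stats, on="customer_id", how="left")
print(features)
```

A key-based join keeps one row per entity, so any labels indexed by that entity stay aligned with the features.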

Feature construction

- Creating new features from existing features or other pre-existing data

- Improving model performance

- Enhancing model interpretability

Example of feature construction

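    from sklearn.base import BaseEstimator, TransformerMixin

    class FeatureConstructor(BaseEstimator, TransformerMixin):
        def __init__(self):
            pass

        def fit(self, X, y=None):
            # Learn the column means from the training data only
            self.mean_values_ = X[['col1', 'col2']].mean()
            return self

        def transform(self, X, y=None):
            X = X.copy()  # avoid mutating the caller's data frame
            # Create new features by centering each column on its training mean
            X['mean_col1'] = X['col1'] - self.mean_values_['col1']
            X['mean_col2'] = X['col2'] - self.mean_values_['col2']
            return X  # Return the augmented data set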
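Constructed features are often simple combinations of existing columns, such as ratios or parts of a date. A minimal sketch, assuming a hypothetical data frame whose column names (`signup_date`, `total_spend`, `n_orders`) are invented for illustration:

```python
import pandas as pd

# Hypothetical raw data
df = pd.DataFrame({
    "signup_date": pd.to_datetime(["2023-01-15", "2023-06-30"]),
    "total_spend": [120.0, 300.0],
    "n_orders": [4, 10],
})

# assign() returns a new data frame and leaves df unchanged
constructed = df.assign(
    avg_order_value=df["total_spend"] / df["n_orders"],  # ratio feature
    signup_month=df["signup_date"].dt.month,             # date-part feature
)
print(constructed)
```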

Feature transformations

Transforming existing features in place

- Normalizing data distributions

- Removing outliers

- Improving model accuracy and performance

    from sklearn.datasets import load_breast_cancer
    from sklearn.linear_model import LogisticRegression
    from sklearn.model_selection import train_test_split
    from sklearn.preprocessing import StandardScaler
    from sklearn.metrics import accuracy_score

    data = load_breast_cancer()  # Load dataset
    X, y = data.data, data.target
    model = LogisticRegression(random_state=42)  # instantiate a model

    # Split data into train and test sets
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.3, random_state=42)

    model.fit(X_train, y_train)  # Fit logistic regression model without scaling
    y_pred_no_scaling = model.predict(X_test)
    acc_no_scaling = accuracy_score(y_test, y_pred_no_scaling)

    scaler = StandardScaler()
    X_train_scaled = scaler.fit_transform(X_train)
    X_test_scaled = scaler.transform(X_test)

    model.fit(X_train_scaled, y_train)  # Fit logistic regression model with scaling
    y_pred_with_scaling = model.predict(X_test_scaled)
    acc_with_scaling = accuracy_score(y_test, y_pred_with_scaling)

    # Compare accuracies of models with and without scaling
    print(f"Accuracy without scaling: {acc_no_scaling}")  # 0.971
    print(f"Accuracy with scaling: {acc_with_scaling}")  # 0.982
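Scaling is only one kind of transformation. The sketch below illustrates two others from the list above, reshaping a skewed distribution and limiting outliers, on a small invented array. The 5th and 95th percentile bounds are an arbitrary assumption; in practice such bounds would be learned from training data only.

```python
import numpy as np

# Hypothetical right-skewed feature with one extreme value
values = np.array([1.0, 2.0, 3.0, 4.0, 1000.0])

# log1p computes log(1 + x), which compresses the long right tail
log_values = np.log1p(values)

# Clip values to the 5th-95th percentile range to limit the effect of outliers
low, high = np.percentile(values, [5, 95])
clipped = np.clip(values, low, high)

print(log_values)
print(clipped)
```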

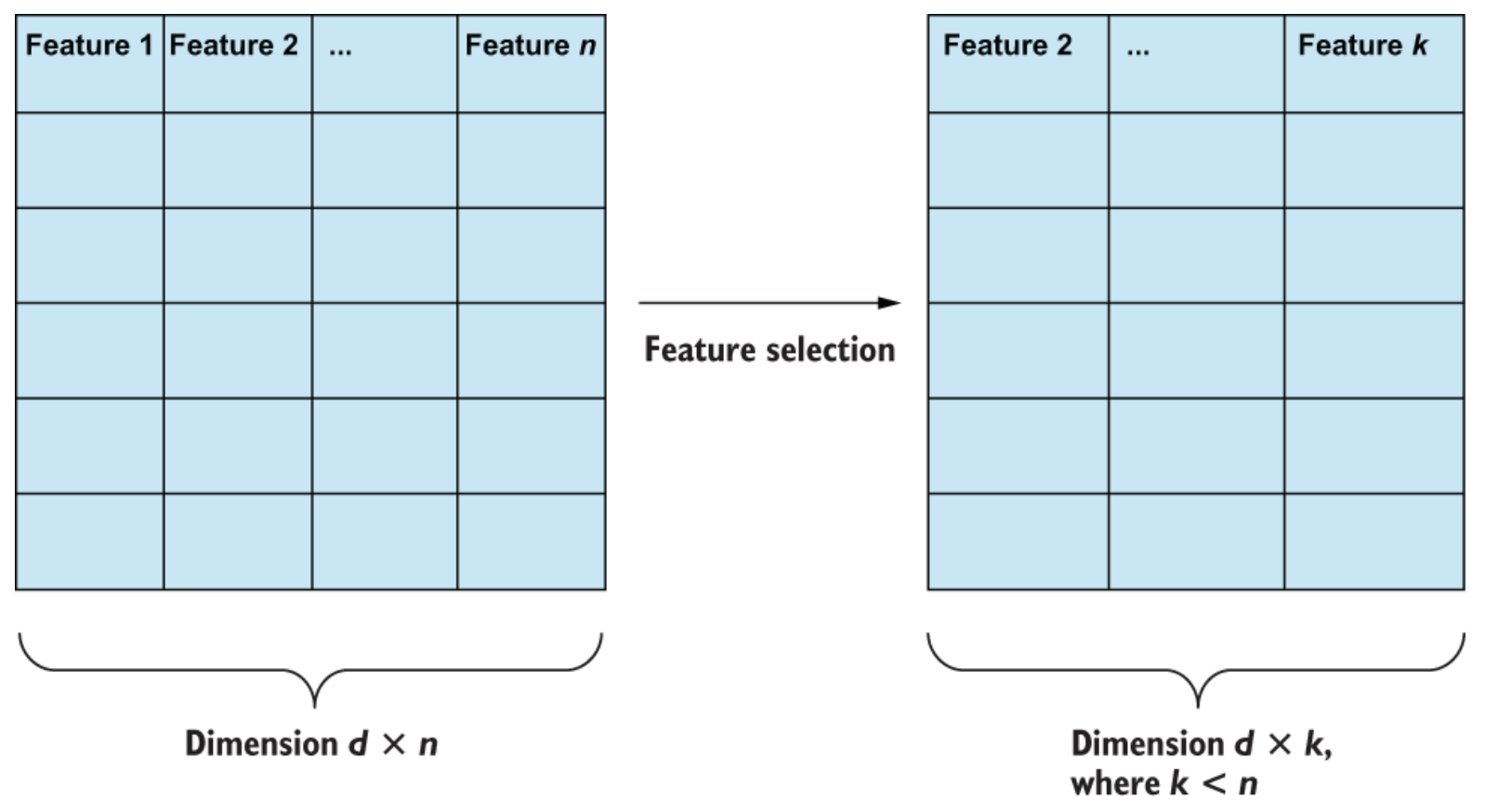

Feature selection

Selecting a subset of features from a larger set of features by removing redundant and irrelevant features

- Reduces overfitting

- Enhances model accuracy and performance

- Improves model interpretability
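One way to select features is univariate scoring with scikit-learn's `SelectKBest`. A minimal sketch on the breast cancer dataset, where the ANOVA F-test (`f_classif`) and `k=10` are illustrative choices and not tuned values:

```python
from sklearn.datasets import load_breast_cancer
from sklearn.feature_selection import SelectKBest, f_classif

data = load_breast_cancer()
X, y = data.data, data.target

# Score each feature against the target and keep the 10 highest-scoring ones
selector = SelectKBest(score_func=f_classif, k=10)
X_selected = selector.fit_transform(X, y)

# get_support() returns a boolean mask over the original columns
selected_names = data.feature_names[selector.get_support()]
print(X.shape, "->", X_selected.shape)
print(list(selected_names))
```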


Example of feature engineering (continued)

    import pandas as pd
    from sklearn.preprocessing import StandardScaler
    from sklearn.feature_selection import SelectKBest, f_classif
    from sklearn.pipeline import Pipeline

    pipeline = Pipeline([  # Define feature engineering pipeline
        ('aggregate', DataAggregator()),  # Aggregate data from multiple sources
        ('construction', FeatureConstructor()),  # Feature Construction
        ('scaler', StandardScaler()),  # Feature Transformation
        # Feature Selection: f_classif accepts the negative values that scaling produces
        ('select', SelectKBest(f_classif, k=10)),
    ])

    # Fit and transform data using pipeline; the selector is supervised, so y is
    # required, with one label per row that reaches the selector
    X_transformed = pipeline.fit_transform(X, y)
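For a version that runs end to end without external CSV files, the sketch below chains scaling, selection, and a classifier on the breast cancer dataset and evaluates the whole pipeline with cross-validation. The step names, `k=10`, `max_iter=1000`, and `cv=5` are assumptions made for illustration.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)

pipeline = Pipeline([
    ("scaler", StandardScaler()),              # feature transformation
    ("select", SelectKBest(f_classif, k=10)),  # feature selection
    ("model", LogisticRegression(max_iter=1000, random_state=42)),
])

# Each fold refits the scaler and selector on its own training split,
# so no statistics from the held-out split leak into preprocessing
scores = cross_val_score(pipeline, X, y, cv=5)
print(f"Mean CV accuracy: {scores.mean():.3f}")
```

Keeping preprocessing inside the pipeline means the same fitted steps are applied at training and prediction time.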

Learn more about feature engineering

Let's practice!
