Packaging ML models

Developing Machine Learning Models for Production

Sinan Ozdemir

Data Scientist, Entrepreneur, and Author

Why packaging matters

- Optimize performance

- Ensure compatibility

- Make models easier to deploy

Packaging Methods

- Serialization: simple and lightweight; some formats are language-agnostic, though pickle is Python-only

- Environment packaging: capture entire software environments

- Containerization: a portable, reproducible, and isolated environment

How to package ML models

Serialization - storing and retrieving an ML model

Environment packaging - a consistent and reproducible environment for the ML model

Containerization - packaging the model, dependencies, and the environment in a single "container"

Serializing scikit-learn models

Serializing an sklearn model using pickle:

import pickle

model = ...  # Train the scikit-learn model

# Serialize the model to a file
with open('model.pkl', 'wb') as f:
    pickle.dump(model, f)

# Load the serialized model from the file
with open('model.pkl', 'rb') as f:
    model = pickle.load(f)
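A quick round-trip check can confirm that a serialized model behaves like the original. The sketch below is illustrative only: the parameter dictionary and the `predict` helper are hypothetical stand-ins for a fitted estimator, which would be passed to pickle in the same way. Note that unpickling runs code stored in the file, so only load pickles from sources you trust.

```python
import pickle

# Hypothetical stand-in for a trained model: linear-model parameters.
# A fitted scikit-learn estimator would be pickled the same way.
model = {"coef": [0.5, -1.0], "intercept": 2.0}


def predict(params, row):
    """Compute intercept + dot(coef, row) for one feature row."""
    return params["intercept"] + sum(c * x for c, x in zip(params["coef"], row))


# Serialize to bytes in memory, then restore a separate copy
blob = pickle.dumps(model)
restored = pickle.loads(blob)

# The restored copy should give the same prediction as the original
sample = [4.0, 1.0]
print(predict(model, sample), predict(restored, sample))  # prints "3.0 3.0"
```

Comparing predictions on a few known inputs before and after serialization is a cheap sanity check to run before packaging the file any further.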

Serializing an sklearn model in HDF5 format:

import io

import h5py
import joblib
import numpy as np

model = ...  # Train the scikit-learn model

# Serialize the model to bytes, then store the bytes in an HDF5 file
buffer = io.BytesIO()
joblib.dump(model, buffer)
with h5py.File('model.h5', 'w') as f:
    f.create_dataset('model_weights',
                     data=np.frombuffer(buffer.getvalue(), dtype=np.uint8))

# Load the serialized model from the HDF5 file
with h5py.File('model.h5', 'r') as f:
    model = joblib.load(io.BytesIO(f['model_weights'][:].tobytes()))

Serializing PyTorch and TensorFlow models

Serializing a PyTorch model:

import torch

# Train a PyTorch model and store it in a variable
trained_model = ...

# Serialize the trained model's parameters to a file
serialized_model_path = 'model.pt'
torch.save(trained_model.state_dict(), serialized_model_path)

# Load the serialized parameters into a model with the same architecture
loaded_model = ...  # Initialize the model
loaded_model.load_state_dict(torch.load(serialized_model_path))

Serializing a TensorFlow model:

import tensorflow as tf

# Train a TensorFlow model
trained_model = ...

# Save the trained model to a directory
saved_model_directory = 'model/'
tf.saved_model.save(trained_model, saved_model_directory)

# Load the saved model from the directory
loaded_model = tf.saved_model.load(saved_model_directory)

ML environment packaging with Docker

- Ensure the environment provides everything the model needs to run

- Tools such as virtualenv create consistent and reproducible environments

- Docker containers are self-contained units that are easily deployable
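One way to capture an environment is to record the exact version of every installed package, which is what `pip freeze > requirements.txt` does from the command line. The sketch below does something similar from Python using only the standard library. The function names and the default output path are illustrative assumptions, not part of the course material.

```python
from importlib.metadata import distributions


def pinned_requirements():
    """Return 'name==version' pins for every installed distribution."""
    pins = {}
    for dist in distributions():
        name = dist.metadata.get("Name")
        if name:  # skip distributions whose metadata lacks a name
            pins[name.lower()] = f"{name}=={dist.version}"
    # Order by lowercased package name for a stable file
    return [pins[key] for key in sorted(pins)]


def write_requirements(path="requirements.txt"):
    """Write the pins to a file; the default path is only an example."""
    lines = pinned_requirements()
    with open(path, "w", encoding="utf-8") as f:
        f.write("\n".join(lines) + "\n")
    return lines
```

Running this inside the virtual environment used for training yields a file that can later be installed with `pip install -r requirements.txt`.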

Example Dockerfile

# Use the Python 3.8 slim image as the base image
FROM python:3.8-slim

# Set the working directory
WORKDIR /app

# Copy the requirements.txt file into the image
COPY requirements.txt .

# Install the model's dependent packages
RUN pip install -r requirements.txt

# Copy the ML model and its supporting files into the container
COPY model/ .

# Tell the container how to start up
ENTRYPOINT ["python", "run_model.py"]
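The Dockerfile's ENTRYPOINT runs `run_model.py`, which the slides do not show. Below is a minimal sketch of what such a script might contain. It assumes a pickled model whose path comes from a hypothetical `MODEL_PATH` environment variable (defaulting to `model.pkl`) and feature rows passed as a JSON string in the first command-line argument. The real script may work quite differently.

```python
import json
import os
import pickle
import sys


def load_model(path):
    """Load a pickled model; only unpickle files from a trusted source."""
    with open(path, "rb") as f:
        return pickle.load(f)


def predict_from_json(model, payload):
    """Parse a JSON list of feature rows and return predictions as JSON."""
    rows = json.loads(payload)
    predictions = model.predict(rows)
    # NumPy arrays are not JSON-serializable, so convert them to lists
    if hasattr(predictions, "tolist"):
        predictions = predictions.tolist()
    return json.dumps(list(predictions))


def main(argv):
    # MODEL_PATH is a hypothetical variable name chosen for this sketch
    model = load_model(os.environ.get("MODEL_PATH", "model.pkl"))
    print(predict_from_json(model, argv[1]))
    return 0


# Run only when invoked as a script with a JSON argument
if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv))
```

Arguments given after the image name in `docker run` are appended to the ENTRYPOINT, so under these assumptions the JSON rows would be passed there.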

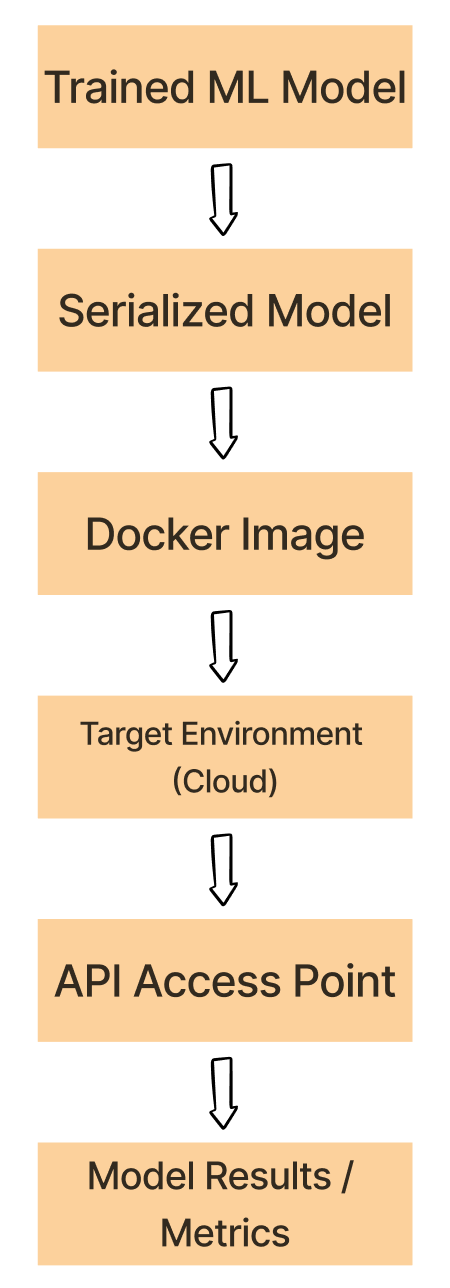

Experiment -> Docker workflow

Serialize the trained ML model using a format such as pickle, HDF5, or a PyTorch state_dict.

Containerize the serialized ML model, its dependencies, and the environment.

Deploy the Docker image to a target environment such as a cloud platform.

Run a Docker container from the deployed image to start the ML model.

Use the model within the container through an API or some other access point.

Let's practice!
