Improving model performance

Introduction to Deep Learning with PyTorch

Jasmin Ludolf

Senior Data Science Content Developer, DataCamp

Steps to maximize performance

1. Overfit the training set
   - Can we solve the problem?
   - Set a performance baseline

2. Reduce overfitting
   - Increase performance on the validation set

3. Fine-tune hyperparameters
   - Achieve the best possible performance

Step 1: overfit the training set

Modify the training loop to overfit a single data point

    # Grab one batch and reuse it on every iteration
    features, labels = next(iter(dataloader))
    for i in range(1000):
        outputs = model(features)
        loss = criterion(outputs, labels)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

- Should reach 1.0 accuracy and a loss close to 0

Then scale up to the entire training set

- Keep default hyperparameters
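A minimal, self-contained sketch of Step 1. The toy data, the small network, and the choice of Adam with its default settings are assumptions made for illustration; only the loop itself follows the slide.

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Hypothetical toy data: 64 samples, 8 features, 3 classes
X = torch.randn(64, 8)
y = torch.randint(0, 3, (64,))
dataloader = DataLoader(TensorDataset(X, y), batch_size=4, shuffle=False)

# Hypothetical small classifier, optimizer left at its defaults
model = nn.Sequential(nn.Linear(8, 32), nn.ReLU(), nn.Linear(32, 3))
criterion = nn.CrossEntropyLoss()
optimizer = optim.Adam(model.parameters())

# Reuse one fixed batch instead of iterating over the whole dataloader
features, labels = next(iter(dataloader))
for i in range(1000):
    outputs = model(features)
    loss = criterion(outputs, labels)
    if i == 0:
        initial_loss = loss.item()
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

# Check how well the model memorized that single batch
with torch.no_grad():
    predictions = model(features).argmax(dim=1)
    accuracy = (predictions == labels).float().mean().item()
final_loss = loss.item()
print(f"initial loss={initial_loss:.4f}, final loss={final_loss:.4f}, accuracy={accuracy:.2f}")
```

If the model cannot memorize even one batch, the problem is more likely a bug in the model or the loop than a lack of data.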

Step 2: reduce overfitting

Goal: maximize the validation accuracy

Experiment with:

- Dropout

- Data augmentation

- Weight decay

- Reducing model capacity
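Three of these options can be sketched in a few lines of PyTorch. The layer sizes, dropout probability, learning rate, and weight decay value below are illustrative assumptions, and `make_model` and `count_parameters` are hypothetical helpers. Data augmentation is left out because it depends on the type of data.

```python
import torch
import torch.nn as nn
import torch.optim as optim


def make_model(hidden_size: int, dropout_p: float) -> nn.Sequential:
    """Hypothetical helper: hidden_size controls capacity, dropout_p controls dropout."""
    return nn.Sequential(
        nn.Linear(8, hidden_size),
        nn.ReLU(),
        nn.Dropout(p=dropout_p),  # randomly zeroes activations in train mode only
        nn.Linear(hidden_size, 3),
    )


def count_parameters(model: nn.Module) -> int:
    return sum(p.numel() for p in model.parameters())


# Reducing model capacity: fewer hidden units means fewer parameters
large = make_model(hidden_size=128, dropout_p=0.0)
small = make_model(hidden_size=16, dropout_p=0.5)
print(count_parameters(large), count_parameters(small))

# Weight decay: set through the optimizer's weight_decay argument
optimizer = optim.SGD(small.parameters(), lr=0.01, weight_decay=1e-4)

# Dropout is switched off in eval mode, so repeated predictions match
x = torch.randn(4, 8)
small.eval()
with torch.no_grad():
    eval_a = small(x)
    eval_b = small(x)
```

Remember to call `model.train()` before training and `model.eval()` before validation so that dropout behaves as intended.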


- Keep track of each hyperparameter and validation accuracy
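One simple way to keep track is a list of records, one per experiment. This is only a sketch: `log_experiment` is a hypothetical helper, and the accuracy values are made-up placeholders, not measured results. In practice they would come from the validation loop.

```python
experiments = []


def log_experiment(hyperparameters, validation_accuracy):
    """Store one experiment: its hyperparameters plus the validation accuracy."""
    experiments.append({**hyperparameters, "validation_accuracy": validation_accuracy})


# Placeholder values for illustration only
log_experiment({"dropout": 0.0, "weight_decay": 0.0}, 0.71)
log_experiment({"dropout": 0.2, "weight_decay": 1e-4}, 0.78)
log_experiment({"dropout": 0.5, "weight_decay": 1e-2}, 0.74)

# Pick the configuration with the highest validation accuracy
best = max(experiments, key=lambda record: record["validation_accuracy"])
print(best)
```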

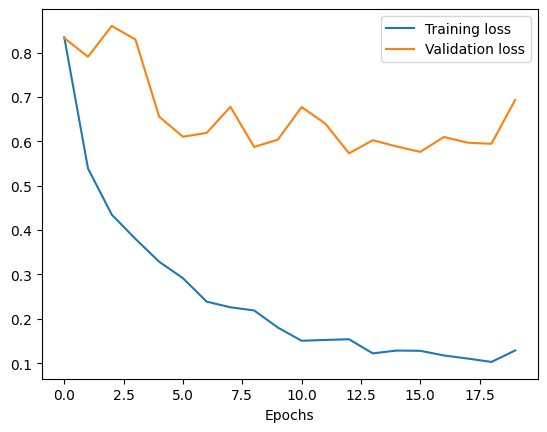

Step 2: reduce overfitting


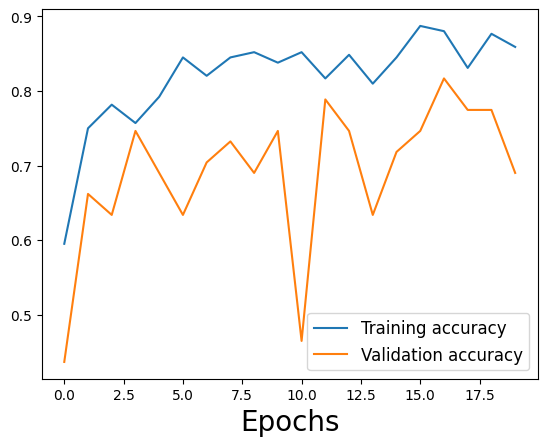

Original model overfitting training data


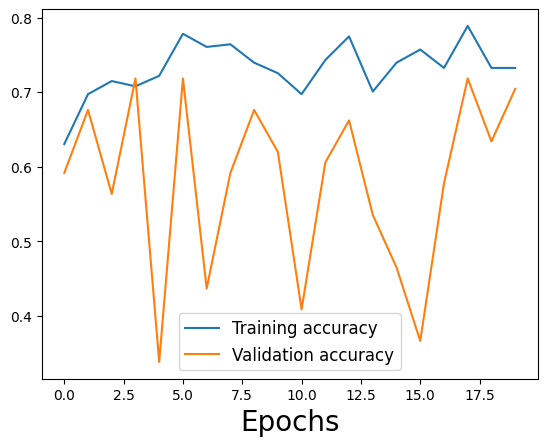

Updated model with too much regularization

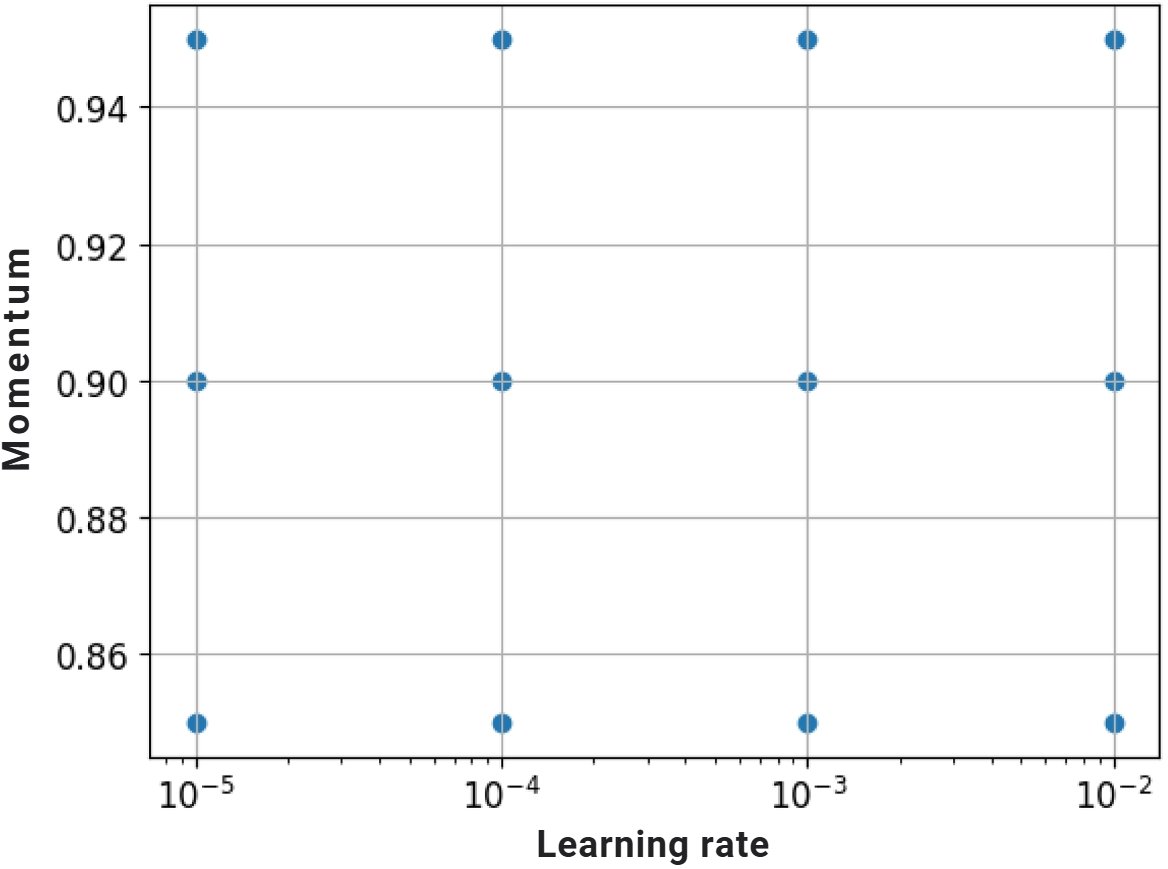

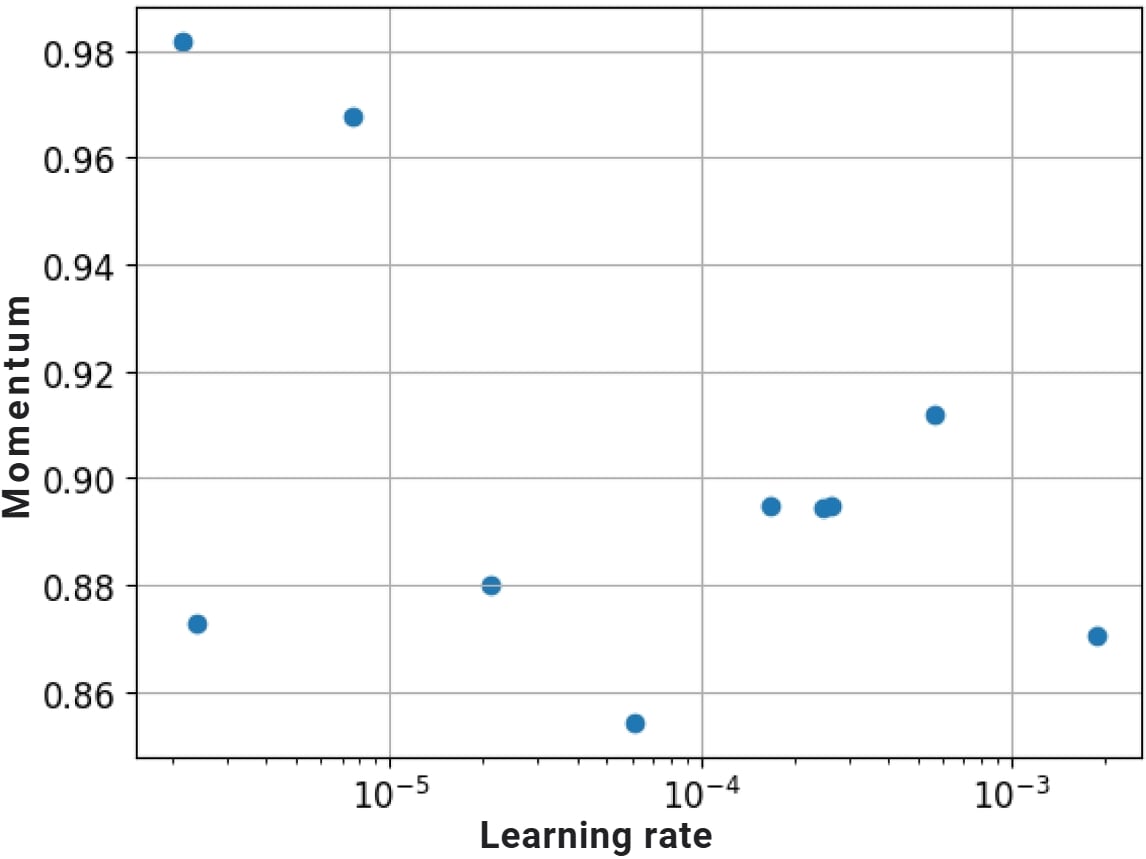
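The two situations in the plots can be told apart by comparing training and validation accuracy. The sketch below is a rough heuristic, not a standard rule: `describe_fit` is a hypothetical helper and both thresholds are arbitrary assumptions that would need adjusting per problem.

```python
def describe_fit(train_acc, val_acc, gap_threshold=0.10, low_threshold=0.70):
    """Rough label for a model based on its final accuracies (thresholds are assumptions)."""
    gap = train_acc - val_acc
    if gap > gap_threshold:
        # Training accuracy far above validation accuracy
        return "overfitting"
    if train_acc < low_threshold:
        # Both accuracies low, e.g. after too much regularization
        return "underfitting"
    return "balanced"


print(describe_fit(0.99, 0.75))
print(describe_fit(0.62, 0.60))
print(describe_fit(0.85, 0.82))
```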

Step 3: fine-tune hyperparameters

- Grid search

      for factor in range(2, 6):
          lr = 10 ** -factor

- Random search

      import numpy as np

      factor = np.random.uniform(2, 6)
      lr = 10 ** -factor
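Both searches can feed the same evaluation loop. The sketch below assumes a toy linear regression problem, a hypothetical `validation_loss` helper, and four random draws; only the way the learning rates are generated comes from the snippets above.

```python
import numpy as np
import torch
import torch.nn as nn
import torch.optim as optim

torch.manual_seed(0)
np.random.seed(0)

# Hypothetical toy regression data with a known linear relationship
X_train, X_val = torch.randn(128, 4), torch.randn(64, 4)
true_w = torch.tensor([[1.0], [-2.0], [0.5], [3.0]])
y_train, y_val = X_train @ true_w, X_val @ true_w


def validation_loss(lr, epochs=50):
    """Train a fresh linear model with the given learning rate, return validation MSE."""
    model = nn.Linear(4, 1)
    criterion = nn.MSELoss()
    optimizer = optim.SGD(model.parameters(), lr=lr)
    for _ in range(epochs):
        optimizer.zero_grad()
        loss = criterion(model(X_train), y_train)
        loss.backward()
        optimizer.step()
    with torch.no_grad():
        return criterion(model(X_val), y_val).item()


# Grid search: evenly spaced exponents, giving 1e-2, 1e-3, 1e-4, 1e-5
grid_lrs = [10 ** -factor for factor in range(2, 6)]

# Random search: exponents drawn uniformly between 2 and 6
random_lrs = [10 ** -float(np.random.uniform(2, 6)) for _ in range(4)]

results = {lr: validation_loss(lr) for lr in grid_lrs + random_lrs}
best_lr = min(results, key=results.get)
print(f"best learning rate: {best_lr:.6f}")
```

Sampling the exponent rather than the learning rate itself spreads the candidates evenly across orders of magnitude.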

Let's practice!
