Learning rate and momentum

Introduction to Deep Learning with PyTorch

Jasmin Ludolf

Senior Data Science Content Developer, DataCamp

Updating weights with SGD

- Training a neural network = solving an optimization problem.

Stochastic Gradient Descent (SGD) optimizer

```python
import torch.optim as optim
sgd = optim.SGD(model.parameters(), lr=0.01, momentum=0.95)  # model: an existing nn.Module
```

- Two arguments:
  - learning rate: controls the step size
  - momentum: adds inertia to help avoid getting stuck in local minima

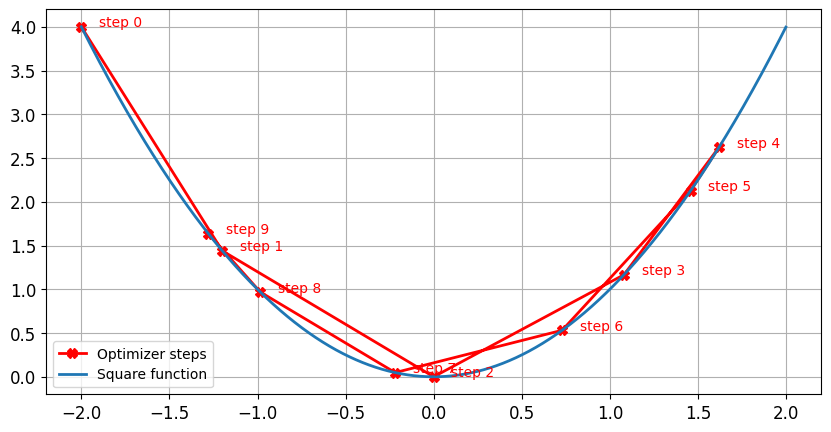

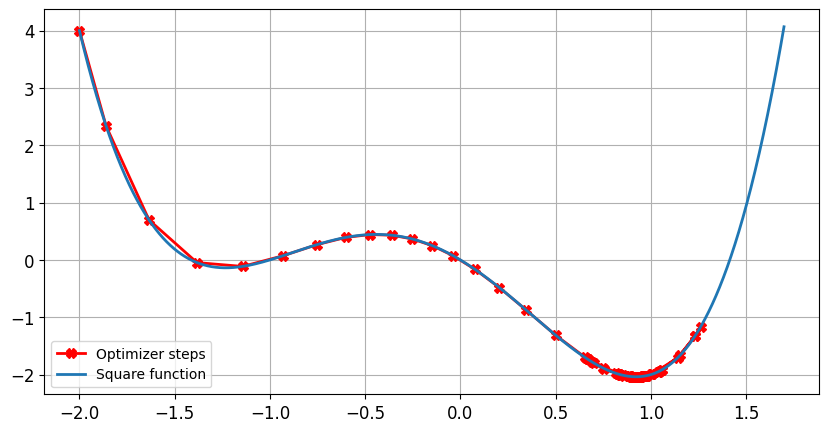
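A minimal sketch of how the optimizer is used in a single training step. The toy setup (an `nn.Linear(3, 1)` model, `nn.MSELoss`, and random data) is hypothetical; only `optim.SGD` with `lr=0.01` and `momentum=0.95` comes from the slide.

```python
import torch
import torch.nn as nn
import torch.optim as optim

torch.manual_seed(0)

# Hypothetical toy setup: a linear model and random regression data
model = nn.Linear(3, 1)
criterion = nn.MSELoss()
sgd = optim.SGD(model.parameters(), lr=0.01, momentum=0.95)

features = torch.randn(8, 3)
targets = torch.randn(8, 1)

weights_before = model.weight.detach().clone()

# One optimization step
sgd.zero_grad()                             # clear gradients left over from a previous step
loss = criterion(model(features), targets)  # forward pass
loss.backward()                             # compute gradients of the loss w.r.t. the weights
sgd.step()                                  # update the weights using lr and momentum

weights_after = model.weight.detach().clone()
print("loss:", loss.item())
```

On the very first step the momentum buffer holds only the current gradient, so the update is exactly `-lr * grad`; from the second step on, past gradients start to accumulate.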

Impact of the learning rate: optimal learning rate

- Step size shrinks near the minimum, where the gradient approaches zero

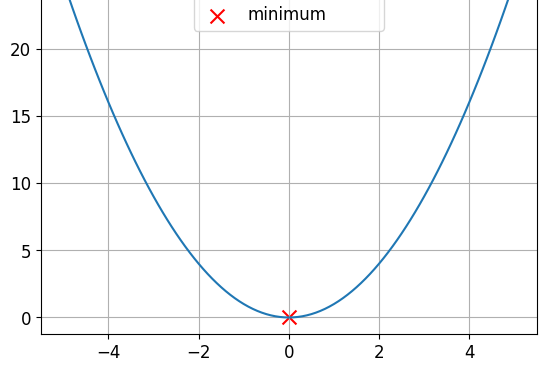
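Because each update is the learning rate times the gradient, the steps shrink on their own as the gradient shrinks. A minimal sketch, assuming a hypothetical loss f(x) = x**2 (gradient 2x), a start at x = 2.0 and lr = 0.1: every step is 80% of the previous one.

```python
def grad(x):
    # Derivative of the hypothetical loss f(x) = x**2
    return 2 * x

def step_sizes(x, lr, n_steps):
    """Run plain gradient descent and record the size of each update."""
    sizes = []
    for _ in range(n_steps):
        step = lr * grad(x)  # update = learning rate * gradient
        x = x - step
        sizes.append(abs(step))
    return x, sizes

x_final, sizes = step_sizes(x=2.0, lr=0.1, n_steps=10)
print([round(s, 4) for s in sizes])
```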

Impact of the learning rate: small learning rate

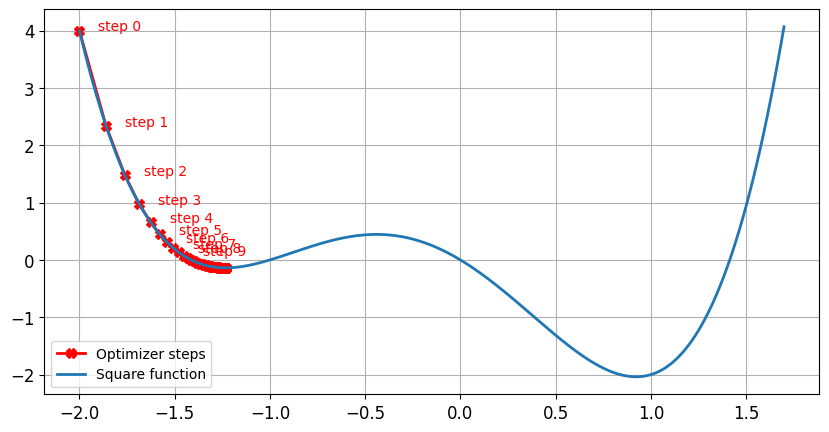

Impact of the learning rate: high learning rate

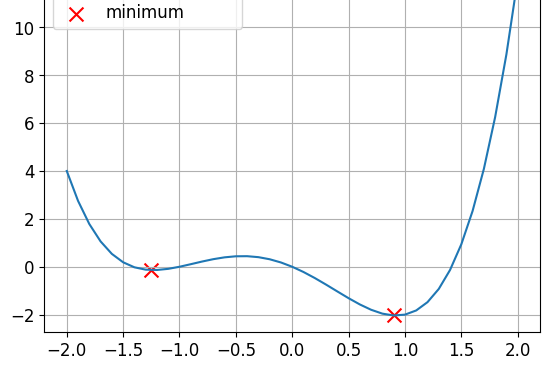
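The three cases can be reproduced on a toy problem. A minimal sketch, assuming the same kind of hypothetical loss f(x) = x**2 (minimum at x = 0), a start at x = 2.0 and 20 steps: a very small learning rate barely moves, a moderate one gets close to the minimum, and a too-high one overshoots so that every step lands further from the minimum (here each update multiplies x by 1 - 2 * lr).

```python
def grad(x):
    # Derivative of the hypothetical loss f(x) = x**2 (minimum at x = 0)
    return 2 * x

def final_x(lr, x=2.0, n_steps=20):
    """Run plain gradient descent and return where it ends up."""
    for _ in range(n_steps):
        x = x - lr * grad(x)
    return x

for lr in (0.001, 0.1, 1.1):
    print(f"lr={lr}: x after 20 steps = {final_x(lr):.4f}")
```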

Convex and non-convex functions

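- Convex function: any local minimum is also a global minimum

- Non-convex function: can have several local minima

- Neural network loss functions are generally non-convex with respect to the weights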
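On a non-convex function, where plain gradient descent ends up depends on where it starts. A minimal sketch, assuming a hypothetical loss (x**2 - 1)**2 + 0.3 * x with one minimum near x = -1 and a shallower local minimum near x = +1: starting at x = 2.0 gets stuck in the local minimum, while starting at x = -2.0 reaches the lower one.

```python
def loss(x):
    # Hypothetical non-convex loss with two minima, near x = -1 and x = +1
    return (x**2 - 1) ** 2 + 0.3 * x

def grad(x):
    # Derivative of loss(x)
    return 4 * x**3 - 4 * x + 0.3

def gradient_descent(x, lr=0.01, n_steps=500):
    """Plain gradient descent (no momentum) from starting point x."""
    for _ in range(n_steps):
        x = x - lr * grad(x)
    return x

x_right = gradient_descent(2.0)   # starts to the right of the hump near x = 0
x_left = gradient_descent(-2.0)   # starts to the left of the hump

print(f"start  2.0 -> x = {x_right:.3f}, loss = {loss(x_right):.3f}")
print(f"start -2.0 -> x = {x_left:.3f}, loss = {loss(x_left):.3f}")
```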

Without momentum

lr = 0.01, momentum = 0: after 100 steps, minimum found for x = -1.23 and y = -0.14

With momentum

lr = 0.01, momentum = 0.9: after 100 steps, minimum found for x = 0.92 and y = -2.04
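To make "inertia" concrete, here is a minimal sketch of the momentum update in the form documented for PyTorch's `torch.optim.SGD` (with its defaults `dampening=0`, `nesterov=False`): a velocity `v` accumulates past gradients, and the parameter moves along `v`. The toy loss f(x) = x**2 and the helper names are hypothetical; this does not reproduce the slide's experiment.

```python
def grad(x):
    # Derivative of the hypothetical loss f(x) = x**2
    return 2 * x

def sgd_momentum(x, lr, momentum, n_steps):
    """Gradient descent with a velocity term:
    v <- momentum * v + grad(x);  x <- x - lr * v
    """
    velocity = 0.0
    trajectory = [x]
    for _ in range(n_steps):
        velocity = momentum * velocity + grad(x)  # accumulate past gradients
        x = x - lr * velocity                     # step along the velocity
        trajectory.append(x)
    return trajectory

plain = sgd_momentum(2.0, lr=0.1, momentum=0.0, n_steps=2)
heavy = sgd_momentum(2.0, lr=0.1, momentum=0.9, n_steps=2)
print("momentum 0.0:", plain)
print("momentum 0.9:", heavy)
```

Both runs take the same first step (to 1.6), but on the second step the momentum run still carries 0.9 of the first gradient, so it reaches 0.92 instead of 1.28. The same carried-over velocity is what can push the optimizer past a shallow local minimum.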

Summary

| Learning Rate | Momentum |
|---|---|
| Controls the step size | Controls the inertia |
| Too high → poor performance | Helps escape local minima |
| Too low → slow training | Too small → optimizer gets stuck |
| Typical range: 0.0001 ($10^{-4}$) to 0.01 ($10^{-2}$) | Typical range: 0.85 to 0.99 |
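One common way to explore these ranges (not covered in the slides) is random search. A minimal sketch, assuming a hypothetical helper `sample_hyperparameters`: the learning rate is sampled on a log scale, since its typical range spans two orders of magnitude, and momentum is sampled uniformly.

```python
import random

def sample_hyperparameters(rng):
    """Draw one candidate (lr, momentum) from the typical ranges in the summary."""
    # Sample the exponent uniformly so 1e-4..1e-3 and 1e-3..1e-2 are equally likely
    lr = 10 ** rng.uniform(-4, -2)
    momentum = rng.uniform(0.85, 0.99)
    return lr, momentum

rng = random.Random(42)
candidates = [sample_hyperparameters(rng) for _ in range(5)]
for lr, momentum in candidates:
    print(f"lr={lr:.5f}, momentum={momentum:.3f}")
```

Each pair could then be passed to `optim.SGD(model.parameters(), lr=lr, momentum=momentum)` and the resulting models compared on validation loss.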

Let's practice!
