spaCy basics

Natural Language Processing with spaCy

Azadeh Mobasher

Principal Data Scientist

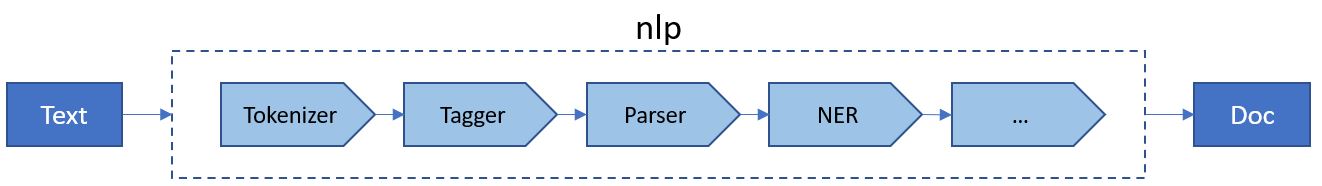

spaCy NLP pipeline

```python
import spacy

# Load the small English pipeline and run it on a string
nlp = spacy.load("en_core_web_sm")
doc = nlp("Here's my spaCy pipeline.")
```

- Import spaCy
- Use `spacy.load()` to return `nlp`, a `Language` object
  - The `Language` object is the text processing pipeline
- Apply `nlp()` on any text to get a `Doc` container
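The relationship between `nlp` and `Doc` described above can be checked directly. This is a minimal sketch, assuming spaCy v3 is installed. It uses `spacy.blank("en")`, a tokenizer-only English pipeline, as a stand-in for `en_core_web_sm` so that no model download is needed; that substitution is an assumption made for illustration and is not part of the original slides.

```python
import spacy
from spacy.language import Language
from spacy.tokens import Doc

# Assumption: a blank English pipeline stands in for en_core_web_sm.
nlp = spacy.blank("en")
doc = nlp("Here's my spaCy pipeline.")

# nlp is a Language object; calling it on text returns a Doc container.
print(isinstance(nlp, Language))
print(isinstance(doc, Doc))

# The Doc keeps the original text intact.
print(doc.text)
```

The same checks should hold for a pipeline returned by `spacy.load()`, since loaded pipelines are also `Language` objects.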

spaCy NLP pipeline

spaCy applies some processing steps using its Language class:

Container objects in spaCy

- There are multiple data structures to represent text data in spaCy:

| Name | Description |
|---|---|
| Doc | A container for accessing linguistic annotations of text |
| Span | A slice from a Doc object |
| Token | An individual token, i.e. a word, punctuation, whitespace, etc. |
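The three containers in the table can be obtained from one another by indexing and slicing. The sketch below assumes spaCy v3 and again uses a blank English pipeline (an assumption for illustration, since only tokenization is needed here); the example sentence is made up.

```python
import spacy
from spacy.tokens import Doc, Span, Token

# Assumption: tokenizer-only stand-in pipeline, no trained model required.
nlp = spacy.blank("en")
doc = nlp("spaCy processes text quickly.")

token = doc[0]    # indexing a Doc gives a single Token
span = doc[1:3]   # slicing a Doc gives a Span

print(type(doc).__name__, type(span).__name__, type(token).__name__)
print(token.text)
print(span.text)
```

A `Span` is a view onto its `Doc`, so slicing does not copy the underlying text.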

Pipeline components

- The spaCy language processing pipeline always depends on the loaded model and its capabilities.

| Component | Name | Description |
|---|---|---|
| Tokenizer | Tokenizer | Segment text into tokens and create Doc object |
| Tagger | Tagger | Assign part-of-speech tags |
| Lemmatizer | Lemmatizer | Reduce the words to their root forms |
| EntityRecognizer | NER | Detect and label named entities |
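To see which components a given pipeline actually contains, `nlp.pipe_names` can be inspected. The sketch below assumes spaCy v3 and starts from a blank English pipeline (an illustrative choice, not from the slides), then adds one built-in component to show that the list reflects what has been loaded or added.

```python
import spacy

# Assumption: start from a blank pipeline rather than a trained model.
nlp = spacy.blank("en")

# The tokenizer is always present and is not listed among the components.
before = list(nlp.pipe_names)
print(before)

# Add a built-in component by its registered name.
nlp.add_pipe("sentencizer")
after = list(nlp.pipe_names)
print(after)
```

A trained pipeline such as `en_core_web_sm` would report its own set of component names here, which is why the available annotations depend on the loaded model.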

Pipeline components

Each component has unique features to process text

- Language

- DependencyParser

- Sentencizer

Tokenization

- Always the first operation

- All the other operations require tokens

- Tokens can be words, numbers and punctuation

```python
import spacy

nlp = spacy.load("en_core_web_sm")
doc = nlp("Tokenization splits a sentence into its tokens.")

# Each Token exposes its raw text via .text
print([token.text for token in doc])
```

['Tokenization', 'splits', 'a', 'sentence', 'into', 'its', 'tokens', '.']
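Since tokens can be words, numbers, or punctuation, token attributes can be used to tell them apart. This is a minimal sketch assuming spaCy v3; it uses a blank English pipeline because `is_punct` and `like_num` are lexical attributes that need no trained model, and the example sentence is invented for illustration.

```python
import spacy

# Assumption: tokenizer-only pipeline; lexical flags work without a model.
nlp = spacy.blank("en")
doc = nlp("She bought 3 apples, then left.")

tokens = [token.text for token in doc]
punctuation = [token.text for token in doc if token.is_punct]
numbers = [token.text for token in doc if token.like_num]

print(tokens)
print(punctuation)
print(numbers)
```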

Sentence segmentation

- More complex than tokenization

- Is a part of the `DependencyParser` component

```python
import spacy

nlp = spacy.load("en_core_web_sm")
text = "We are learning NLP. This course introduces spaCy."
doc = nlp(text)

# doc.sents yields one Span per detected sentence
for sent in doc.sents:
    print(sent.text)
```

We are learning NLP.

This course introduces spaCy.
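When no dependency parser is available, the rule-based `Sentencizer` component can also set sentence boundaries. The sketch below assumes spaCy v3 and a blank English pipeline; it is a different technique from the parser-based segmentation shown above, offered only as an illustration of the `Sentencizer` listed among the pipeline components.

```python
import spacy

# Assumption: blank pipeline with only the rule-based sentencizer added.
nlp = spacy.blank("en")
nlp.add_pipe("sentencizer")

doc = nlp("We are learning NLP. This course introduces spaCy.")

# The sentencizer splits on sentence-final punctuation such as "."
sentences = [sent.text for sent in doc.sents]
print(sentences)
```

Punctuation rules are fast but less robust than the parser on text with abbreviations or irregular punctuation.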

Lemmatization

- A lemma is the base form of a token

- The lemma of eats and ate is eat

- Improves accuracy of language models

```python
import spacy

nlp = spacy.load("en_core_web_sm")
doc = nlp("We are seeing her after one year.")

# token.lemma_ holds the base form as a string
print([(token.text, token.lemma_) for token in doc])
```

[('We', 'we'), ('are', 'be'), ('seeing', 'see'), ('her', 'she'),

('after', 'after'), ('one', 'one'), ('year', 'year'), ('.', '.')]

Let's practice!
