spaCy pipelines

Natural Language Processing with spaCy

Azadeh Mobasher

Principal Data Scientist

spaCy pipelines

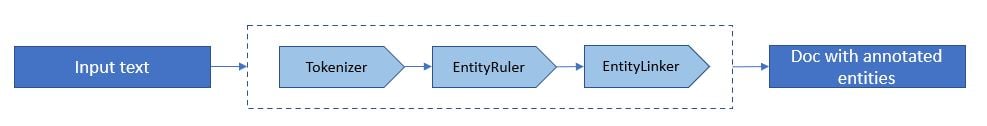

- spaCy first tokenizes the text to produce a Doc object

- The Doc is then processed in several steps of the processing pipeline

import spacy

nlp = spacy.load("en_core_web_sm")

doc = nlp(example_text)
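The two stages above can be sketched by running them by hand. This is a minimal illustration, not what the slide's code does internally in every detail: it assumes a blank English pipeline with only a sentencizer (so no trained model is required), and `sample_text` is a made-up input.

```python
import spacy
from spacy.tokens import Doc

# Assumption: a blank English pipeline plus a sentencizer, so no model download is needed.
nlp = spacy.blank("en")
nlp.add_pipe("sentencizer")

sample_text = "spaCy builds a Doc first. Pipes then annotate it."  # hypothetical input

# Step 1: the tokenizer turns the raw text into a Doc.
doc = nlp.make_doc(sample_text)

# Step 2: each pipeline component receives the Doc and returns it with added annotations.
for name, component in nlp.pipeline:
    doc = component(doc)

tokens = [token.text for token in doc]
sentences = [sent.text for sent in doc.sents]

print(nlp.pipe_names)
print(tokens)
print(sentences)
```

Calling `nlp(sample_text)` performs roughly these same two steps in a single call.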

spaCy pipelines

- A pipeline is a sequence of pipes, or actors on data

- A spaCy NER pipeline:

  - Tokenization

  - Named entity identification

  - Named entity classification

print([ent.text for ent in doc.ents])
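To see the identification and classification steps without a trained model, a rule-based stand-in can be used. The sketch below swaps the statistical NER component of en_core_web_sm for spaCy's entity_ruler on a blank pipeline. The patterns, labels, and sentence are invented for illustration.

```python
import spacy

# Assumption: a blank pipeline with a rule-based entity_ruler,
# standing in for the trained "ner" component of en_core_web_sm.
nlp = spacy.blank("en")
ruler = nlp.add_pipe("entity_ruler")

# Hypothetical patterns: each one identifies a span and assigns it a label.
ruler.add_patterns([
    {"label": "ORG", "pattern": "Acme Corp"},
    {"label": "GPE", "pattern": "Berlin"},
])

doc = nlp("Acme Corp opened an office in Berlin.")

# Identification gives the span text, classification gives the label.
entities = [(ent.text, ent.label_) for ent in doc.ents]
print(entities)
```

With a trained pipeline, the same `doc.ents` loop applies, but the spans and labels come from the model's predictions rather than fixed patterns.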

Adding pipes

- sentencizer: a spaCy pipeline component for sentence segmentation.

import time

text = " ".join(["This is a test sentence."]*10000)

en_core_sm_nlp = spacy.load("en_core_web_sm")

start_time = time.time()

doc = en_core_sm_nlp(text)

print(f"Finished processing with en_core_web_sm model in {round((time.time() - start_time)/60.0 , 5)} minutes")

>>> Finished processing with en_core_web_sm model in 0.09332 minutes

Adding pipes

- Create a blank model and add a sentencizer pipe:

blank_nlp = spacy.blank("en")

blank_nlp.add_pipe("sentencizer")

start_time = time.time()

doc = blank_nlp(text)

print(f"Finished processing with blank model in {round((time.time() - start_time)/60.0 , 5)} minutes")

>>> Finished processing with blank model in 0.00091 minutes

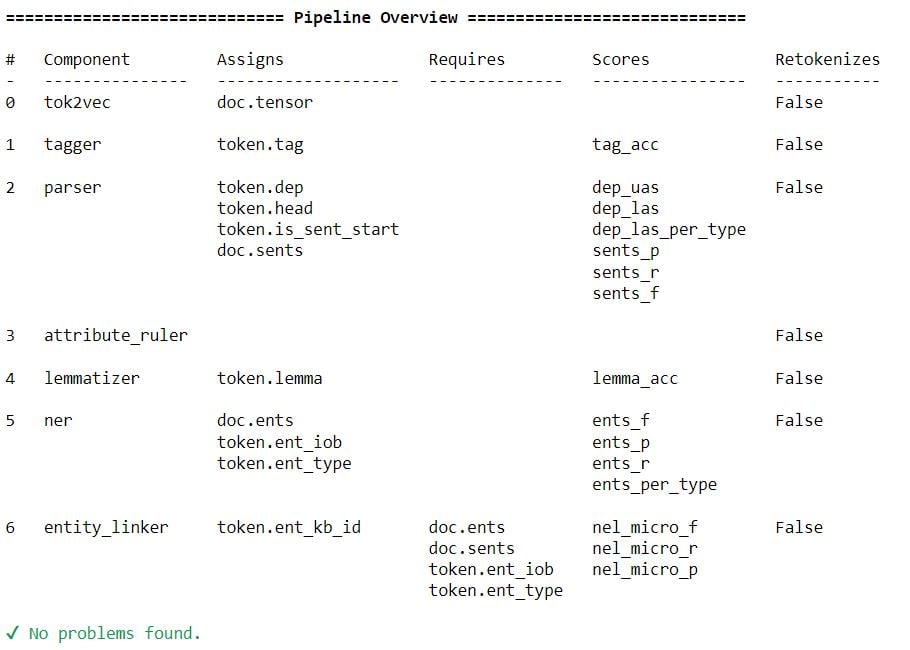
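A quick way to check that the blank pipeline still segments sentences is to count them. The sketch below is a smaller variant of the timing experiment: it assumes 100 repetitions instead of 10000 and uses `time.perf_counter()`, and the variable names are illustrative.

```python
import time

import spacy

# Assumption: 100 repetitions keep the example fast; the slides use 10000.
text = " ".join(["This is a test sentence."] * 100)

blank_nlp = spacy.blank("en")
blank_nlp.add_pipe("sentencizer")

start_time = time.perf_counter()
doc = blank_nlp(text)
elapsed_seconds = time.perf_counter() - start_time

# The sentencizer sets sentence boundaries, so doc.sents is available.
sentence_count = len(list(doc.sents))

print(f"Segmented {sentence_count} sentences in {elapsed_seconds:.5f} seconds")
```

Because the blank pipeline runs only the tokenizer and the sentencizer, it skips the tagger, parser, and NER work that the full en_core_web_sm pipeline performs.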

Analyzing pipeline components

- nlp.analyze_pipes() analyzes a spaCy pipeline to determine:

  - Attributes that pipeline components set

  - Scores a component produces during training

  - Presence of all required attributes

- Setting pretty to True will print a table instead of only returning the structured data.

import spacy

nlp = spacy.load("en_core_web_sm")

analysis = nlp.analyze_pipes(pretty=True)
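The returned structure can also be inspected directly. The sketch below assumes a blank pipeline with only a sentencizer, so it runs without a trained model. The "summary" and "problems" keys reflect spaCy v3 and may differ in other versions.

```python
import spacy

# Assumption: a blank pipeline with a single sentencizer component.
nlp = spacy.blank("en")
nlp.add_pipe("sentencizer")

# Without pretty=True, the analysis is returned as a dictionary and nothing is printed.
analysis = nlp.analyze_pipes()

# Per-component summary: what it assigns, requires, and scores.
sentencizer_summary = analysis["summary"]["sentencizer"]
print(sentencizer_summary["assigns"])
print(sentencizer_summary["requires"])
print(sentencizer_summary["scores"])

# Problems lists required attributes that no earlier component sets.
print(analysis["problems"]["sentencizer"])
```

An empty problems list for a component means every attribute it requires is set by an earlier component in the pipeline.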


Let's practice!
