Overview of Text Classification

Deep Learning for Text with PyTorch

Shubham Jain

Instructor

Text classification defined

- Assigning labels to text

- Giving meaning to words and sentences

- Organizing and giving structure to unstructured data

Applications:

- Analyzing customer sentiment in reviews

- Detecting spam in emails

- Tagging news articles with relevant topics

Types: binary, multi-class, multi-label

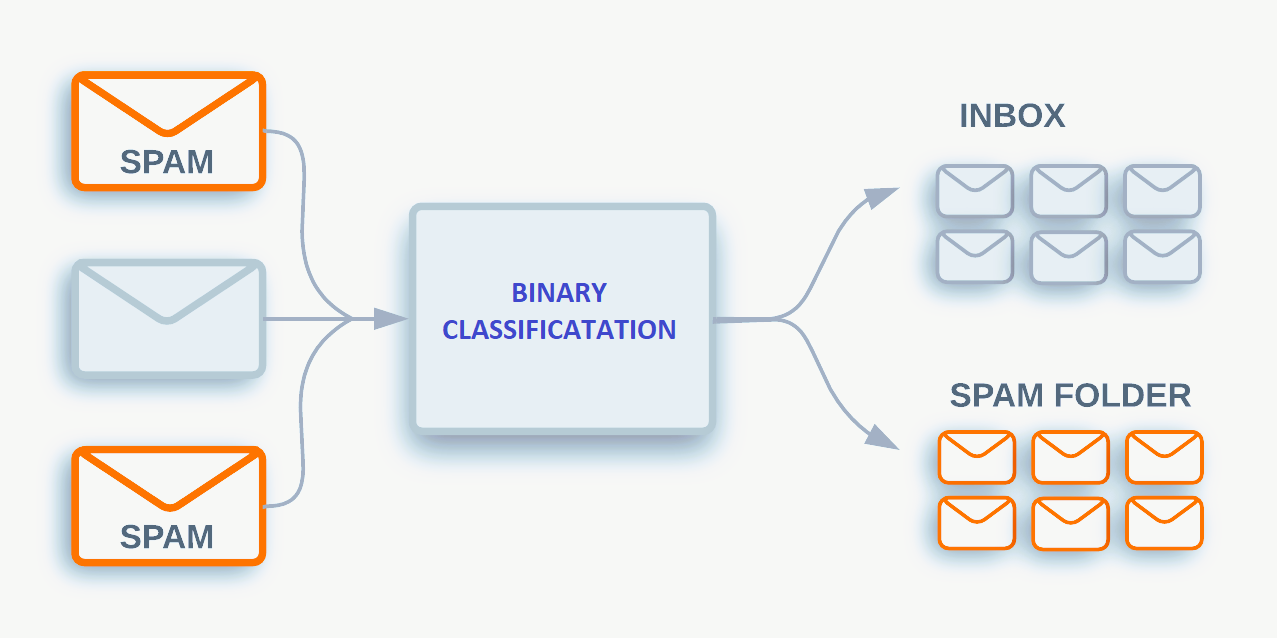

Binary classification

- Sorting into two categories

- Example: email spam detection

- Emails can be classified as 'spam' or 'not spam'
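A minimal sketch of how a binary decision is often made from a model's raw score, assuming a sigmoid and a 0.5 threshold. The logits are made-up values standing in for a hypothetical spam model's output, not results from a trained model.

```python
import torch

# Hypothetical raw scores (logits) for three emails; the values are invented.
logits = torch.tensor([2.0, -1.0, 0.0])

# Sigmoid squashes each score into a probability between 0 and 1
probs = torch.sigmoid(logits)

# Assumed decision rule: probability above 0.5 means 'spam'
labels = (probs > 0.5).long()
names = ["spam" if label == 1 else "not spam" for label in labels.tolist()]
print(names)  # ['spam', 'not spam', 'not spam']
```

The 0.5 threshold is a common default and can be tuned when false positives and false negatives have different costs.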

Image source: https://storage.googleapis.com/gweb-cloudblog-publish/images/image4_v2LFcq0.max-1200x1200.png

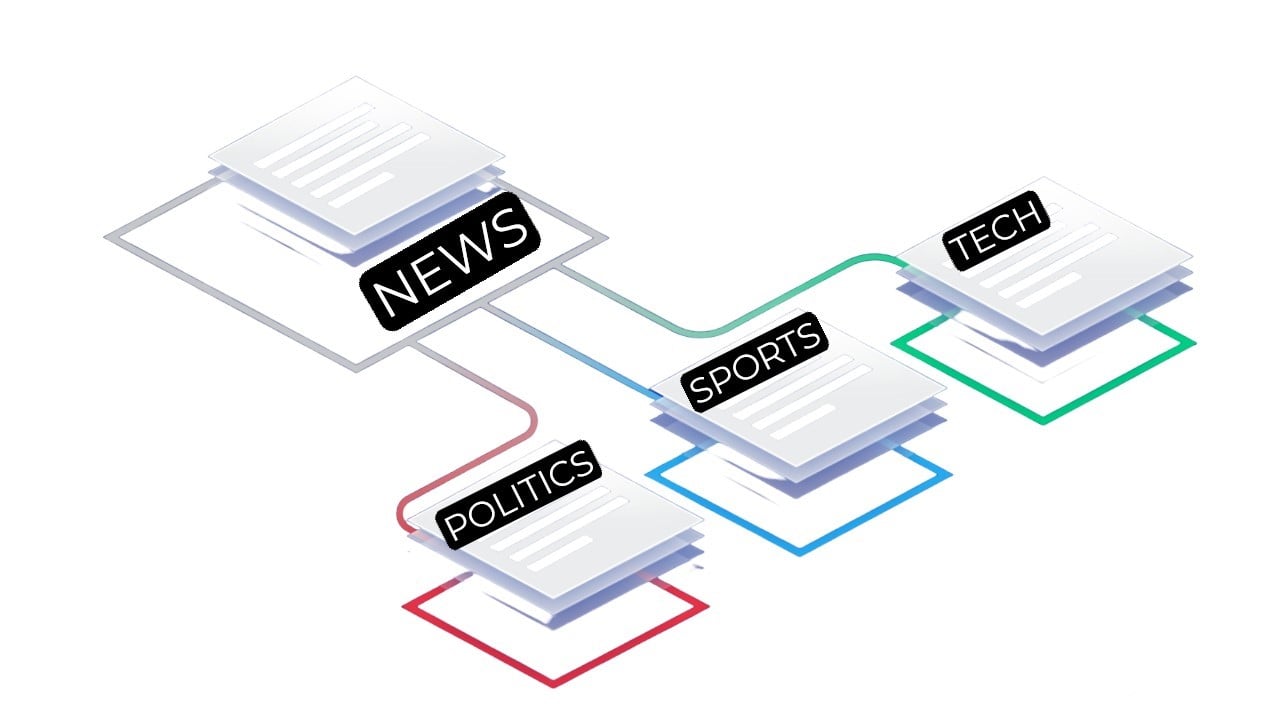

Multi-class classification

- Sorting into multiple categories

- Example: news articles can be sorted into categories such as:

  - Politics

  - Sports

  - Technology
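A short sketch of the multi-class case, assuming the model emits one score per category and the highest score wins. The logits are invented for illustration; exactly one category is chosen per article.

```python
import torch

categories = ["Politics", "Sports", "Technology"]

# Hypothetical logits for two articles (one row per article, one column per category)
logits = torch.tensor([[0.1, 2.0, -1.0],
                       [1.5, 0.2, 0.3]])

# Softmax turns each row into probabilities that sum to 1
probs = torch.softmax(logits, dim=1)

# The predicted class is the column with the highest probability
predicted = probs.argmax(dim=1)
labels = [categories[i] for i in predicted.tolist()]
print(labels)  # ['Sports', 'Politics']
```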

Multi-label classification

- Each text can be assigned multiple labels

- Example: a book can belong to multiple genres at once:

  - Action

  - Adventure

  - Fantasy
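A sketch of the multi-label case, assuming a multi-hot target vector and an independent sigmoid per label. The logits and the target are made-up values; unlike multi-class, several labels can be active for the same text.

```python
import torch
import torch.nn.functional as F

genres = ["Action", "Adventure", "Fantasy"]

# Multi-hot target: this hypothetical book is tagged Action and Fantasy
target = torch.tensor([1.0, 0.0, 1.0])

# Hypothetical logits, one independent score per genre
logits = torch.tensor([1.2, -0.5, 3.0])

# Each label gets its own probability; they do not need to sum to 1
probs = torch.sigmoid(logits)
predicted = [g for g, p in zip(genres, probs.tolist()) if p > 0.5]
print(predicted)  # ['Action', 'Fantasy']

# Binary cross-entropy is applied per label and averaged
loss = F.binary_cross_entropy_with_logits(logits, target)
```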

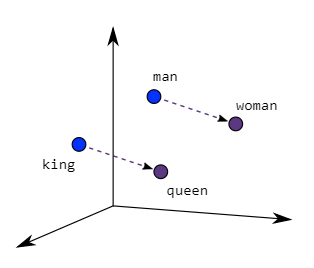

What are word embeddings?

- Earlier encoding techniques are a good first step

- But they often create too many features and can't capture similarity between words

- Word embeddings map words to numerical vectors

- Examples of semantic relationships:

  - King and queen

  - Man and woman
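A toy illustration of what "semantic relationship" means in vector terms. The 2-D vectors are hand-made so that the arithmetic is easy to follow; they are an assumption for this sketch, not learned embeddings. Real embeddings have many more dimensions and only approximate this pattern.

```python
import torch
import torch.nn.functional as F

# Hand-made toy vectors (NOT learned): first axis ~ "royalty", second ~ "gender"
vectors = {
    "man":   torch.tensor([1.0, 0.0]),
    "woman": torch.tensor([1.0, 1.0]),
    "king":  torch.tensor([3.0, 0.0]),
    "queen": torch.tensor([3.0, 1.0]),
}

# The offset from 'man' to 'woman' is the same as from 'king' to 'queen'
analogy = vectors["king"] - vectors["man"] + vectors["woman"]

# Find the word whose vector points in the most similar direction
similarity = {
    word: F.cosine_similarity(analogy, vec, dim=0).item()
    for word, vec in vectors.items()
}
closest = max(similarity, key=similarity.get)
print(closest)  # queen
```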

Word to index mapping

- Example:

  - "King" -> 1

  - "Queen" -> 2

- Compact and computationally efficient

- Follows tokenization in the pipeline
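A minimal sketch of building a word-to-index mapping after tokenization, assuming simple whitespace tokenization and indexes assigned in order of first appearance. Indexes start at 0 here, which is a choice for this sketch; some pipelines reserve low indexes for padding or unknown words.

```python
sentence = "the king and the queen"

# Assumed tokenization: split on whitespace
tokens = sentence.split()

# Assign each new word the next free index
word_to_idx = {}
for token in tokens:
    if token not in word_to_idx:
        word_to_idx[token] = len(word_to_idx)

# Replace every token with its index
encoded = [word_to_idx[token] for token in tokens]

print(word_to_idx)  # {'the': 0, 'king': 1, 'and': 2, 'queen': 3}
print(encoded)      # [0, 1, 2, 0, 3]
```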

Word embeddings in PyTorch

torch.nn.Embedding:

- Creates word vectors from indexes

- Input: Indexes for ['The', 'cat', 'sat', 'on', 'the', 'mat']

Embedding for 'the': tensor([-0.4689, 0.3164, -0.2971, -0.1291, 0.4064])

Embedding for 'cat': tensor([-0.0978, -0.4764, 0.0476, 0.1044, -0.3976])

Embedding for 'sat': tensor([ 0.2731, 0.4431, 0.1275, 0.1434, -0.4721])

Using torch.nn.Embedding

    import torch
    from torch import nn

    words = ["The", "cat", "sat", "on", "the", "mat"]
    # Map each word to an integer index ("The" and "the" get separate indexes)
    word_to_idx = {word: i for i, word in enumerate(words)}
    inputs = torch.LongTensor([word_to_idx[w] for w in words])
    # One randomly initialized 10-dimensional vector per index
    embedding = nn.Embedding(num_embeddings=len(words), embedding_dim=10)
    output = embedding(inputs)
    print(output)

    tensor([[ 1.0624,  0.6792,  0.0459, ..., -1.0828, -0.4475,  0.4868],
            ...,
            [ 1.5766,  0.0106,  0.1161, ..., -0.0859,  1.3160,  1.3621]])
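A small sketch that checks two properties of the embedding layer used above: the output has one row per input index, and a lookup simply returns the matching row of the layer's weight table. The seed is only there to make the random initial weights repeatable.

```python
import torch
from torch import nn

torch.manual_seed(0)  # repeatable random initialization

words = ["The", "cat", "sat", "on", "the", "mat"]
word_to_idx = {word: i for i, word in enumerate(words)}
inputs = torch.tensor([word_to_idx[w] for w in words], dtype=torch.long)

embedding = nn.Embedding(num_embeddings=len(words), embedding_dim=10)
output = embedding(inputs)

# Six input indexes, ten values each
print(output.shape)

# Looking up one index returns that row of the weight table
cat_idx = word_to_idx["cat"]
cat_vector = embedding(torch.tensor([cat_idx]))[0]
same_row = torch.equal(cat_vector, embedding.weight[cat_idx])
print(same_row)
```

The vectors start out random; they become meaningful only once the layer is trained as part of a model.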

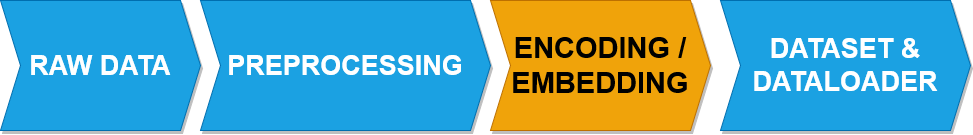

Using embeddings in the pipeline

    import torch
    from torch import nn
    from torch.utils.data import Dataset, DataLoader

    def preprocess_sentences(text):
        # Steps left as a stub; expected to return the encoded (word-index) sentences
        # Tokenization
        # Stemming
        ...
        # Word to index mapping

    class TextDataset(Dataset):
        def __init__(self, encoded_sentences):
            self.data = encoded_sentences

        def __len__(self):
            return len(self.data)

        def __getitem__(self, index):
            return self.data[index]

    def text_processing_pipeline(text):
        tokens = preprocess_sentences(text)
        dataset = TextDataset(tokens)
        dataloader = DataLoader(dataset, batch_size=2, shuffle=True)
        return dataloader

    text = "Your sample text here."
    dataloader = text_processing_pipeline(text)

    # num_embeddings must be at least the vocabulary size
    embedding = nn.Embedding(num_embeddings=10, embedding_dim=50)
    for batch in dataloader:
        output = embedding(batch)
        print(output)
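The pipeline above leaves preprocessing as a stub. The following is one possible runnable version, under stated assumptions: tokenization is a lowercase whitespace split with periods removed, stemming is skipped, and the embedding table is sized from the vocabulary. The body of `preprocess_sentences` and its two return values are choices made for this sketch, not the course's implementation.

```python
import torch
from torch import nn
from torch.utils.data import Dataset, DataLoader


def preprocess_sentences(text):
    # Assumed tokenization: lowercase, drop periods, split on whitespace
    tokens = text.lower().replace(".", "").split()
    # Word to index mapping, in order of first appearance
    word_to_idx = {word: i for i, word in enumerate(dict.fromkeys(tokens))}
    encoded = [word_to_idx[token] for token in tokens]
    return encoded, word_to_idx


class TextDataset(Dataset):
    def __init__(self, encoded_sentences):
        self.data = encoded_sentences

    def __len__(self):
        return len(self.data)

    def __getitem__(self, index):
        return self.data[index]


text = "Your sample text here."
encoded, word_to_idx = preprocess_sentences(text)
dataloader = DataLoader(TextDataset(encoded), batch_size=2, shuffle=True)

# Size the embedding table from the vocabulary so every index is valid
embedding = nn.Embedding(num_embeddings=len(word_to_idx), embedding_dim=50)

shapes = []
for batch in dataloader:
    output = embedding(batch)  # batch is a tensor of word indexes
    shapes.append(tuple(output.shape))
print(shapes)
```

Each batch holds two word indexes, so each output has two rows of 50 values.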

Let's practice!
