Generative adversarial networks for text generation

Deep Learning for Text with PyTorch

Shubham Jain

Instructor

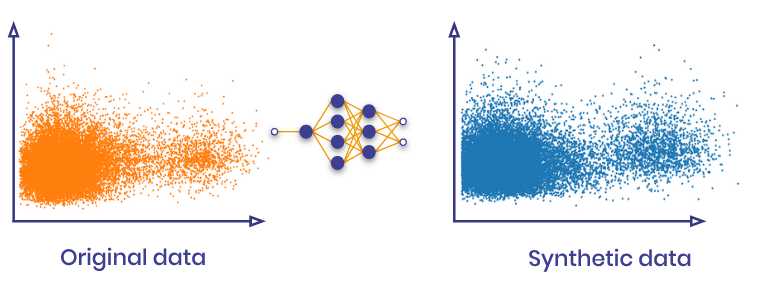

GANs and their role in text generation

- GANs can generate new content that seems original

- Generated content preserves the statistical properties of the real data

- Can replicate complex patterns that RNNs struggle to capture

- Can emulate real-world patterns

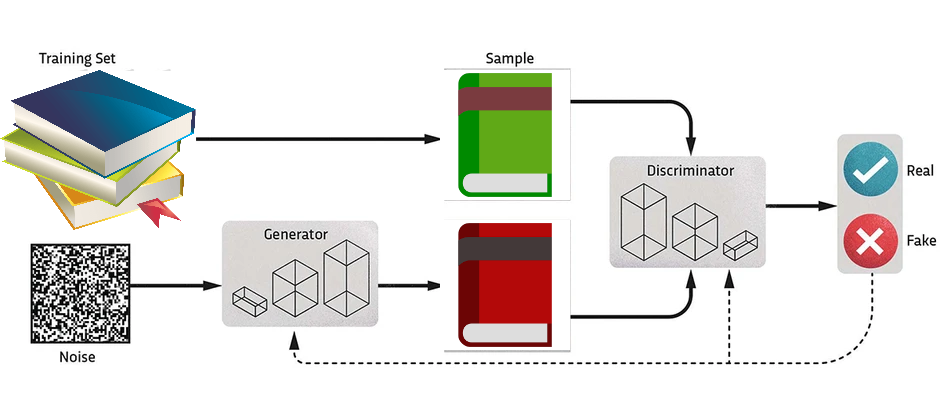

Structure of a GAN

- A GAN has two components:

- Generator: transforms random noise into fake samples

- Discriminator: distinguishes between real and generated text data

1 https://www.sciencefocus.com/future-technology/how-do-machine-learning-gans-work/
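The two components are usually described as playing a two-player minimax game. This is the standard formulation from the original GAN paper and is not spelled out on these slides. Here $G$ is the generator, $D$ is the discriminator, $z$ is random noise, and $D(\cdot)$ is the probability that its input is real:

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The PyTorch code that follows approximates this with binary cross-entropy. The discriminator is trained to label real samples 1 and fake samples 0. The generator is trained so that the discriminator labels its fakes 1, which is the common "non-saturating" variant: it maximizes $\log D(G(z))$ instead of minimizing $\log(1 - D(G(z)))$.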

Building a GAN model in PyTorch: Generator

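```python
import torch
import torch.nn as nn

# Embedding reviews (not shown)
# Convert reviews to tensors (not shown)

class Generator(nn.Module):
    def __init__(self):
        super().__init__()
        # Map a noise vector of length seq_length to a fake sample of the same length
        self.model = nn.Sequential(
            nn.Linear(seq_length, seq_length),
            nn.Sigmoid()  # keep outputs in (0, 1)
        )

    def forward(self, x):
        return self.model(x)
```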
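`nn.Linear` acts on the last dimension, so the same generator accepts a whole batch of noise vectors, not only the single `(1, seq_length)` vector used during training. The shape check below is a sketch. It assumes `seq_length = 5`, the width of the sample tensors printed later, and uses a batch size of 8 chosen only for illustration:

```python
import torch
import torch.nn as nn

seq_length = 5  # assumption: matches the five-column samples in the output

class Generator(nn.Module):
    def __init__(self):
        super().__init__()
        self.model = nn.Sequential(
            nn.Linear(seq_length, seq_length),
            nn.Sigmoid(),
        )

    def forward(self, x):
        return self.model(x)

generator = Generator()
noise = torch.rand((8, seq_length))  # a batch of 8 noise vectors, uniform in [0, 1)
fake_batch = generator(noise)
print(fake_batch.shape)  # torch.Size([8, 5])
```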

Building the discriminator network

```python
class Discriminator(nn.Module):
    def __init__(self):
        super().__init__()
        # Map a sample of length seq_length to a single probability that it is real
        self.model = nn.Sequential(
            nn.Linear(seq_length, 1),
            nn.Sigmoid()
        )

    def forward(self, x):
        return self.model(x)
```
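The discriminator reduces each sample to one probability. The sketch below feeds it two rows taken from the real data printed at the end of this section and gets back one score per row. As before, `seq_length = 5` is an assumption. The network is untrained, so the scores themselves are arbitrary and only the shape is meaningful:

```python
import torch
import torch.nn as nn

seq_length = 5  # assumption: matches the five-column samples in the output

class Discriminator(nn.Module):
    def __init__(self):
        super().__init__()
        self.model = nn.Sequential(
            nn.Linear(seq_length, 1),  # collapse a whole sample to one score
            nn.Sigmoid(),              # turn the score into P(sample is real)
        )

    def forward(self, x):
        return self.model(x)

discriminator = Discriminator()
batch = torch.tensor([[1., 0., 0., 1., 1.],
                      [0., 0., 1., 0., 0.]])  # two rows from the real data shown later
probs = discriminator(batch)
print(probs.shape)  # torch.Size([2, 1])
```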

Initializing networks and loss function

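```python
generator = Generator()
discriminator = Discriminator()
# Binary cross-entropy, since the discriminator outputs a probability of "real"
criterion = nn.BCELoss()
# Separate optimizers so each network updates only its own parameters
optimizer_gen = torch.optim.Adam(generator.parameters(), lr=0.001)
optimizer_disc = torch.optim.Adam(discriminator.parameters(), lr=0.001)
```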
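For a predicted probability $p$ and a target $y$, `nn.BCELoss` computes $-[y \log p + (1-y)\log(1-p)]$, averaged over elements by default. The worked example below uses a hypothetical discriminator output of 0.9 to show how the target label changes the penalty:

```python
import torch
import torch.nn as nn

criterion = nn.BCELoss()
p = torch.tensor([[0.9]])  # hypothetical discriminator output: 90% "real"

loss_if_real = criterion(p, torch.ones_like(p))   # target 1: -log(0.9)
loss_if_fake = criterion(p, torch.zeros_like(p))  # target 0: -log(1 - 0.9)

print(round(loss_if_real.item(), 4))  # 0.1054
print(round(loss_if_fake.item(), 4))  # 2.3026
```

A confident "real" prediction is cheap when the target is 1 and expensive when the target is 0. The discriminator and generator updates push on this same signal in opposite directions.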

Training the discriminator

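```python
num_epochs = 50
for epoch in range(num_epochs):
    for real_data in data:
        # Add a batch dimension: (seq_length,) -> (1, seq_length)
        real_data = real_data.unsqueeze(0)
        # Random noise vector that the generator turns into a fake sample
        noise = torch.rand((1, seq_length))
        disc_real = discriminator(real_data)
        fake_data = generator(noise)
        # detach() stops this loss from sending gradients into the generator
        disc_fake = discriminator(fake_data.detach())
        # Real samples should be scored 1, fake samples 0
        loss_disc = (criterion(disc_real, torch.ones_like(disc_real))
                     + criterion(disc_fake, torch.zeros_like(disc_fake)))
        optimizer_disc.zero_grad()
        loss_disc.backward()
        optimizer_disc.step()
```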
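The `.detach()` call on `fake_data` keeps the discriminator update from also changing the generator. One way to check this is to backpropagate a single discriminator loss and inspect `.grad` on both networks. This sketch assumes `seq_length = 5`. It uses `nn.Sequential` stand-ins with the same layers as the `Generator` and `Discriminator` classes rather than the classes themselves:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
seq_length = 5  # assumption: matches the five-column samples in the output

# Stand-ins with the same layers as the Generator and Discriminator classes
generator = nn.Sequential(nn.Linear(seq_length, seq_length), nn.Sigmoid())
discriminator = nn.Sequential(nn.Linear(seq_length, 1), nn.Sigmoid())
criterion = nn.BCELoss()

fake_data = generator(torch.rand((1, seq_length)))
disc_fake = discriminator(fake_data.detach())  # graph is cut at fake_data
loss = criterion(disc_fake, torch.zeros_like(disc_fake))
loss.backward()

# The generator received no gradients, but the discriminator did
gen_has_no_grad = all(p.grad is None for p in generator.parameters())
disc_has_grad = all(p.grad is not None for p in discriminator.parameters())
print(gen_has_no_grad, disc_has_grad)  # True True
```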

Training the generator

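```python
# ... (continued from the previous slide, inside the inner loop)
        # Score the fakes again, without detach(), so gradients reach the generator
        disc_fake = discriminator(fake_data)
        # The generator wants its fakes to be scored as real (1)
        loss_gen = criterion(disc_fake, torch.ones_like(disc_fake))
        optimizer_gen.zero_grad()
        loss_gen.backward()
        optimizer_gen.step()

    # Report the most recent losses every 10 epochs
    if (epoch+1) % 10 == 0:
        print(f"Epoch {epoch+1}/{num_epochs}:\t Generator loss: {loss_gen.item()}\t Discriminator loss: {loss_disc.item()}")
```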
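Together, the discriminator and generator steps form a single training loop. The sketch below runs it end to end under a few assumptions. It uses a hypothetical dataset of 100 random binary rows in place of the embedded reviews and only 5 epochs to keep it quick. It also uses plain `nn.Sequential` networks with the same layers as the `Generator` and `Discriminator` classes:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in for the review tensors: 100 random binary rows of length 5
seq_length = 5
data = torch.randint(0, 2, (100, seq_length)).float()

generator = nn.Sequential(nn.Linear(seq_length, seq_length), nn.Sigmoid())
discriminator = nn.Sequential(nn.Linear(seq_length, 1), nn.Sigmoid())
criterion = nn.BCELoss()
optimizer_gen = torch.optim.Adam(generator.parameters(), lr=0.001)
optimizer_disc = torch.optim.Adam(discriminator.parameters(), lr=0.001)

num_epochs = 5
history = []  # (generator loss, discriminator loss) at the end of each epoch
for epoch in range(num_epochs):
    for real_data in data:
        real_data = real_data.unsqueeze(0)  # shape (1, seq_length)
        noise = torch.rand((1, seq_length))

        # Discriminator step: real -> 1, fake -> 0, with fakes detached
        disc_real = discriminator(real_data)
        fake_data = generator(noise)
        disc_fake = discriminator(fake_data.detach())
        loss_disc = (criterion(disc_real, torch.ones_like(disc_real))
                     + criterion(disc_fake, torch.zeros_like(disc_fake)))
        optimizer_disc.zero_grad()
        loss_disc.backward()
        optimizer_disc.step()

        # Generator step: push the discriminator to output 1 for fakes
        disc_fake = discriminator(fake_data)
        loss_gen = criterion(disc_fake, torch.ones_like(disc_fake))
        optimizer_gen.zero_grad()
        loss_gen.backward()
        optimizer_gen.step()

    history.append((loss_gen.item(), loss_disc.item()))
    print(f"Epoch {epoch+1}/{num_epochs}: Generator loss: {loss_gen.item():.4f} "
          f"Discriminator loss: {loss_disc.item():.4f}")
```

Each optimizer calls `zero_grad()` right before its own `backward()`. This clears any gradients the other network's loss may have accumulated in its parameters. For example, `loss_gen.backward()` also fills in `.grad` on the discriminator's parameters.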

Printing real and generated data

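```python
print("\nReal data: ")
print(data[:5])

print("\nGenerated data: ")
for _ in range(5):
    noise = torch.rand((1, seq_length))
    generated_data = generator(noise)
    # Round the sigmoid outputs to 0/1; detach() drops the autograd graph before printing
    print(torch.round(generated_data).detach())
```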
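An alternative way to sample is to draw all five noise vectors as one batch inside `torch.no_grad()`. No autograd graph is built in that mode, so `.detach()` is no longer needed. `sample_binary` below is a hypothetical helper, not part of the course code, and the generator is an untrained stand-in with `seq_length = 5` assumed:

```python
import torch
import torch.nn as nn

def sample_binary(generator: nn.Module, n: int, seq_length: int) -> torch.Tensor:
    """Hypothetical helper: draw n samples from the generator and round them to 0/1."""
    with torch.no_grad():                    # no autograd graph is built for sampling
        noise = torch.rand((n, seq_length))  # n noise vectors in one batch
        return torch.round(generator(noise))

seq_length = 5  # assumption: matches the five-column samples in the output
generator = nn.Sequential(nn.Linear(seq_length, seq_length), nn.Sigmoid())  # untrained stand-in
samples = sample_binary(generator, 5, seq_length)
print(samples)
```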

GANs: generated synthetic data

```text
Epoch 10/50: Generator loss: 0.8992824673652 Discriminator loss: 1.37682652473
Epoch 20/50: Generator loss: 0.7347183227539 Discriminator loss: 1.390102505683
...
Epoch 50/50: Generator loss: 0.7019854784011 Discriminator loss: 1.3501529693603
```
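These numbers have a useful reference point. Suppose a discriminator outputs 0.5 for every input, meaning it cannot tell real from fake at all. Its loss is then $2\ln 2$ and the generator's loss is $\ln 2$. In the logs above, the discriminator loss stays between roughly 1.35 and 1.39, and the generator loss falls toward about 0.70. Both are close to these values, which suggests (but does not prove) that the discriminator is near chance level by the end of training. A quick check of the reference values:

```python
import torch
import torch.nn as nn

criterion = nn.BCELoss()
d = torch.tensor([[0.5]])  # a discriminator that outputs 0.5 for every input

# Discriminator loss: real sample with target 1 plus fake sample with target 0
loss_disc = criterion(d, torch.ones_like(d)) + criterion(d, torch.zeros_like(d))
# Generator loss: fake sample with target 1
loss_gen = criterion(d, torch.ones_like(d))

print(round(loss_disc.item(), 4))  # 1.3863  (= 2 * ln 2)
print(round(loss_gen.item(), 4))   # 0.6931  (= ln 2)
```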

Generated data

```text
Real data:
tensor([[1., 0., 0., 1., 1.],
        [0., 0., 1., 0., 0.],
        [1., 0., 1., 1., 1.],
        [1., 0., 1., 0., 0.],
        [1., 1., 1., 1., 1.]])
```

- Evaluation metric: compare the correlation matrices of the real and generated data

```text
Generated data:
tensor([[0., 1., 1., 0., 0.]])
tensor([[0., 1., 1., 1., 1.]])
tensor([[1., 1., 1., 0., 0.]])
tensor([[1., 1., 1., 0., 0.]])
tensor([[0., 1., 1., 1., 1.]])
```
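The correlation-matrix metric can be turned into a single number. Compute the column-wise correlation matrix of the real rows and of the generated rows, then take the mean absolute difference between the two. `correlation_gap` is a hypothetical helper, not the course's code. Some generated columns above are constant, so their correlations are undefined (NaN). The helper sets these to 0, which is an assumption rather than a standard convention:

```python
import torch

def correlation_gap(real: torch.Tensor, fake: torch.Tensor) -> float:
    """Hypothetical helper: mean absolute difference between column correlation matrices.

    Rows are samples and columns are sequence positions. Zero-variance columns
    produce NaN correlations, which are replaced with 0 here (an assumption).
    """
    # torch.corrcoef treats rows as variables, so transpose to correlate columns
    corr_real = torch.nan_to_num(torch.corrcoef(real.T), nan=0.0)
    corr_fake = torch.nan_to_num(torch.corrcoef(fake.T), nan=0.0)
    return (corr_real - corr_fake).abs().mean().item()

# The five real rows and five generated rows printed above
real = torch.tensor([[1., 0., 0., 1., 1.],
                     [0., 0., 1., 0., 0.],
                     [1., 0., 1., 1., 1.],
                     [1., 0., 1., 0., 0.],
                     [1., 1., 1., 1., 1.]])
fake = torch.tensor([[0., 1., 1., 0., 0.],
                     [0., 1., 1., 1., 1.],
                     [1., 1., 1., 0., 0.],
                     [1., 1., 1., 0., 0.],
                     [0., 1., 1., 1., 1.]])

print(correlation_gap(real, real))  # 0.0
print(correlation_gap(real, fake))
```

A gap of 0 means the generated data reproduces the pairwise correlations of the real data exactly. With only five rows each, the estimate is very noisy, so a larger sample would be needed for a meaningful comparison.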

Let's practice!
