Encoding text data

Deep Learning for Text with PyTorch

Shubham Jain

Data Scientist

Text encoding

- Convert text into machine-readable numbers

- Enable analysis and modeling

Encoding techniques

- One-hot encoding: represents each word as a unique binary vector

- Bag-of-Words (BoW): captures word frequency, disregarding order

- TF-IDF: weighs how often a word appears in a document against how common it is across all documents

- Embedding: converts words into vectors, capturing semantic meaning (Chapter 2)

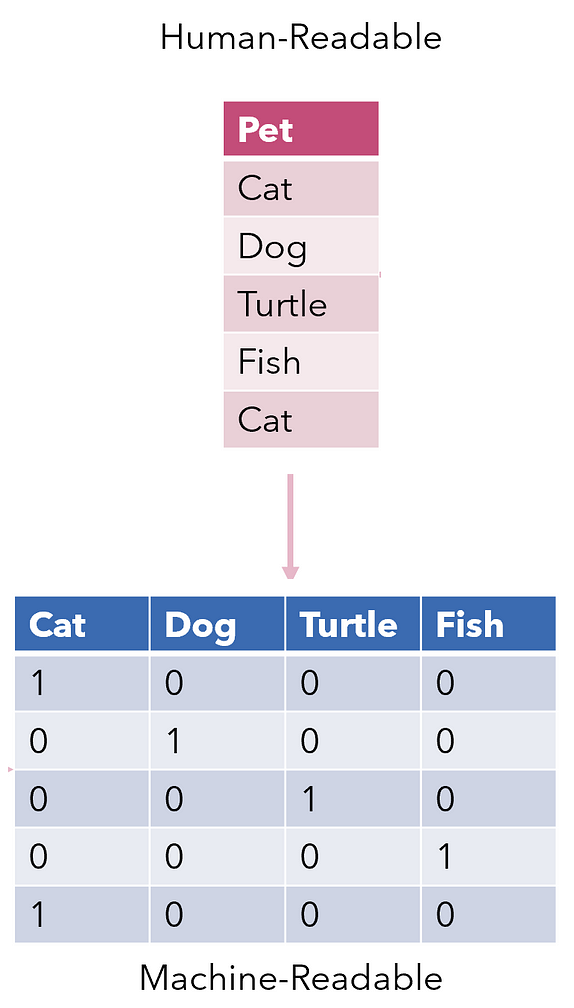

One-hot encoding

- Mapping each word to a distinct vector

- Binary vector:
  - 1 at the position of the word
  - 0 at every other position

- Example vocabulary: ['cat', 'dog', 'rabbit']
  - 'cat': [1, 0, 0]
  - 'dog': [0, 1, 0]
  - 'rabbit': [0, 0, 1]

One-hot encoding with PyTorch

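import torch

vocab = ['cat', 'dog', 'rabbit']
vocab_size = len(vocab)
# Identity matrix: row i is the one-hot vector for word i
one_hot_vectors = torch.eye(vocab_size)
# Map each word to its row of the identity matrix
one_hot_dict = {word: one_hot_vectors[i] for i, word in enumerate(vocab)}
print(one_hot_dict)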

{'cat': tensor([1., 0., 0.]),

'dog': tensor([0., 1., 0.]),

'rabbit': tensor([0., 0., 1.])}
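The dictionary above encodes each vocabulary word once. To encode a whole sentence, one common approach is to map each token to its vocabulary index and let `torch.nn.functional.one_hot` build one row per token. A minimal sketch, assuming whitespace tokenization and the same three-word vocabulary; the sentence and the `word_to_idx` name are illustrative, not from the course.

```python
import torch
import torch.nn.functional as F

vocab = ['cat', 'dog', 'rabbit']
# Map each word to its position in the vocabulary
word_to_idx = {word: i for i, word in enumerate(vocab)}

# Hypothetical example sentence; every token must be in vocab
sentence = "dog cat dog"
indices = torch.tensor([word_to_idx[token] for token in sentence.split()])

# One row per token; num_classes fixes the vector length to the vocabulary size
one_hot_sequence = F.one_hot(indices, num_classes=len(vocab))
print(one_hot_sequence)
```

Unlike the float rows from `torch.eye`, `F.one_hot` returns an integer (int64) tensor, here of shape (3, 3): one row per token in the sentence.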

Bag-of-words

- Example: "The cat sat on the mat"

- Bag-of-words (after lowercasing, so "The" and "the" count as one word):
  - {'the': 2, 'cat': 1, 'sat': 1, 'on': 1, 'mat': 1}

- Treats each document as an unordered collection of words

- Focuses on frequency, not order
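The counts for "The cat sat on the mat" can be reproduced with Python's standard library. A minimal sketch, assuming lowercasing and splitting on whitespace (real tokenizers such as `CountVectorizer` also strip punctuation); `bag_of_words` is a hypothetical helper name.

```python
from collections import Counter

def bag_of_words(text):
    # Lowercase so "The" and "the" count as the same word, then split on whitespace
    return Counter(text.lower().split())

bow = bag_of_words("The cat sat on the mat")
print(dict(bow))

# Fixed-length vector: one count per word in a sorted vocabulary
vocab = sorted(bow)
vector = [bow[word] for word in vocab]
print(vocab)
print(vector)
```

Shuffling the words, for example "the mat sat on the cat", produces exactly the same counts, which is what "disregarding order" means in practice.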

CountVectorizer

from sklearn.feature_extraction.text import CountVectorizer

vectorizer = CountVectorizer()
corpus = ['This is the first document.',
          'This document is the second document.',
          'And this is the third one.',
          'Is this the first document?']
# Learn the vocabulary, then count each word in each document
X = vectorizer.fit_transform(corpus)
print(X.toarray())
# Column order of the count matrix
print(vectorizer.get_feature_names_out())

[[0 1 1 1 0 0 1 0 1]
 [0 2 0 1 0 1 1 0 1]
 [1 0 0 1 1 0 1 1 1]
 [0 1 1 1 0 0 1 0 1]]
['and' 'document' 'first' 'is' 'one' 'second' 'the' 'third' 'this']

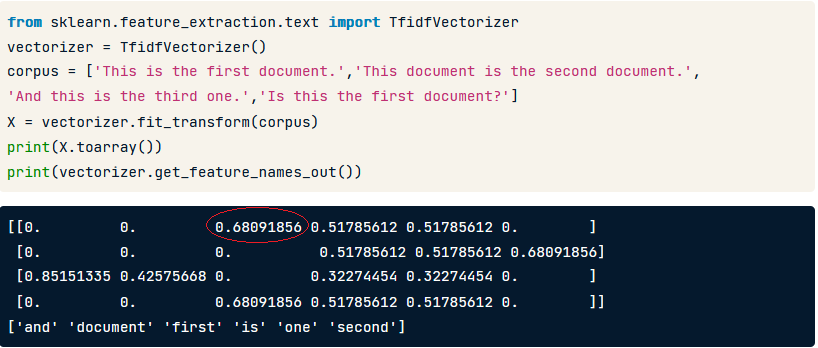

TF-IDF

- Term Frequency-Inverse Document Frequency

- Scores the importance of words in a document

- Words that are rare across the documents get a higher score

- Words that are common across the documents get a lower score

- Emphasizes informative words
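One common formulation, and the one scikit-learn's `TfidfVectorizer` uses by default (`smooth_idf=True`, `norm='l2'`), multiplies a word's raw count in a document by idf(t) = ln((1 + n) / (1 + df(t))) + 1, where n is the number of documents and df(t) is the number of documents containing t, then scales each document vector to unit length. A minimal pure-Python sketch under those assumptions; the naive tokenizer (lowercase, strip basic punctuation, split on whitespace), the `tfidf` helper, and the toy corpus are hypothetical, so results match scikit-learn only when tokenization agrees.

```python
import math
from collections import Counter

def tokenize(text):
    # Naive tokenizer (assumption): drop . , ? !, lowercase, split on whitespace
    for ch in ".,?!":
        text = text.replace(ch, " ")
    return text.lower().split()

def tfidf(corpus):
    docs = [Counter(tokenize(doc)) for doc in corpus]
    vocab = sorted(set().union(*docs))
    n = len(docs)
    # Document frequency: how many documents contain each word
    df = {word: sum(1 for doc in docs if word in doc) for word in vocab}
    # Smoothed IDF: words in fewer documents get larger weights
    idf = {word: math.log((1 + n) / (1 + df[word])) + 1 for word in vocab}
    rows = []
    for doc in docs:
        # Raw term count times IDF (a Counter returns 0 for absent words)
        row = [doc[word] * idf[word] for word in vocab]
        # L2-normalize so long and short documents are comparable
        norm = math.sqrt(sum(x * x for x in row))
        rows.append([x / norm for x in row] if norm else row)
    return vocab, rows

corpus = ['the cat sat', 'the dog sat', 'the cat ran']
vocab, rows = tfidf(corpus)
print(vocab)
for row in rows:
    print([round(x, 3) for x in row])
```

Because 'the' appears in every document, its IDF is the minimum value of 1, so it gets the smallest weight in each row; in the second document, 'dog' (in one document) outweighs 'sat' (in two).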

TfidfVectorizer

from sklearn.feature_extraction.text import TfidfVectorizer

vectorizer = TfidfVectorizer()
corpus = ['This is the first document.',
          'This document is the second document.',
          'And this is the third one.',
          'Is this the first document?']
# Learn the vocabulary and IDF weights, then score each document
X = vectorizer.fit_transform(corpus)
print(X.toarray())
# Column order of the TF-IDF matrix
print(vectorizer.get_feature_names_out())

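[[0.         0.46979139 0.58028582 0.38408524 0.         0.
  0.38408524 0.         0.38408524]
 [0.         0.6876236  0.         0.28108867 0.         0.53864762
  0.28108867 0.         0.28108867]
 [0.51184851 0.         0.         0.26710379 0.51184851 0.
  0.26710379 0.51184851 0.26710379]
 [0.         0.46979139 0.58028582 0.38408524 0.         0.
  0.38408524 0.         0.38408524]]
['and' 'document' 'first' 'is' 'one' 'second' 'the' 'third' 'this']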
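TF-IDF builds directly on bag-of-words counts: scikit-learn documents `TfidfVectorizer` as equivalent to `CountVectorizer` followed by `TfidfTransformer`. A short check of that equivalence on the same corpus, assuming default parameters for all three classes:

```python
import numpy as np
from sklearn.feature_extraction.text import (
    CountVectorizer,
    TfidfTransformer,
    TfidfVectorizer,
)

corpus = ['This is the first document.',
          'This document is the second document.',
          'And this is the third one.',
          'Is this the first document?']

# Two steps: raw counts (bag-of-words), then TF-IDF reweighting
counts = CountVectorizer().fit_transform(corpus)
two_step = TfidfTransformer().fit_transform(counts)

# One step: TfidfVectorizer performs both internally
one_step = TfidfVectorizer().fit_transform(corpus)

print(np.allclose(two_step.toarray(), one_step.toarray()))
```

Splitting the steps can be handy when the bag-of-words counts are needed anyway, since they are computed only once.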


Encoding techniques

Techniques: One-hot encoding, bag-of-words, and TF-IDF

- Allows models to understand and process text

- Choose one technique to avoid redundancy

- More techniques exist, such as embeddings (Chapter 2)

Let's practice!
