Transfer learning for text classification

Deep Learning for Text with PyTorch

Shubham Jain

Instructor

What is transfer learning?

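- Apply knowledge learned on one task to a different but related task

- Saves training time

- Reuses expertise already captured by an existing model

- Reduces the need for large labeled datasets

- Analogy: an English teacher who starts teaching History already knows how to teach and only needs to learn the new subject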

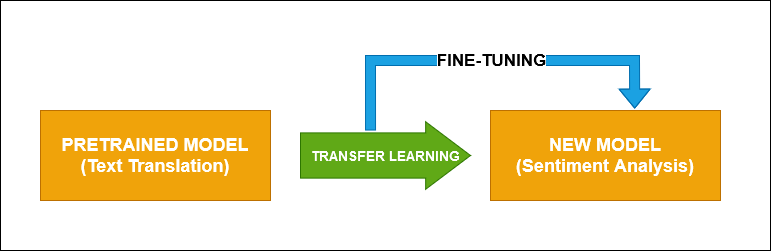

Mechanics of transfer learning

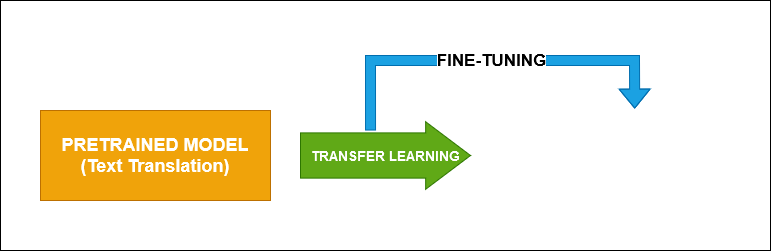
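A common transfer-learning pattern is to keep a pre-trained network's weights fixed and train only a new task-specific output layer. The sketch below shows that pattern in plain PyTorch. The small `backbone` is a hypothetical stand-in for a real pre-trained model, and the toy data is random. With `BertForSequenceClassification`, the corresponding parts are `model.bert` (the encoder) and `model.classifier` (the new head). Note that the hands-on code in this chapter fine-tunes all layers instead of freezing any.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical stand-in for a pre-trained network (in practice, loaded weights)
backbone = nn.Sequential(nn.Linear(16, 32), nn.ReLU(), nn.Linear(32, 32), nn.ReLU())

# Freeze the pre-trained layers so the optimizer never updates them
for param in backbone.parameters():
    param.requires_grad = False

# New task-specific head, trained from scratch for 2 classes
head = nn.Linear(32, 2)
model = nn.Sequential(backbone, head)

# Only pass the trainable (head) parameters to the optimizer
optimizer = torch.optim.AdamW(
    [p for p in model.parameters() if p.requires_grad], lr=1e-3
)

# Toy data: 8 random feature vectors with binary labels
x = torch.randn(8, 16)
y = torch.randint(0, 2, (8,))

frozen_before = backbone[0].weight.clone()
head_before = head.weight.clone()

# One training step
loss = nn.functional.cross_entropy(model(x), y)
loss.backward()
optimizer.step()
optimizer.zero_grad()

trainable = sum(p.numel() for p in model.parameters() if p.requires_grad)
print(f"Trainable parameters: {trainable}")  # 32*2 weights + 2 biases = 66
print("Backbone unchanged:", torch.equal(frozen_before, backbone[0].weight))
print("Head updated:", not torch.equal(head_before, head.weight))
```

Freezing is cheaper because gradients are only computed for the head; full fine-tuning, as in the hands-on example, lets every layer adapt to the new task.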

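Pre-trained model: BERT

- Bidirectional Encoder Representations from Transformers

- Pre-trained with masked language modeling (predicting hidden words from the context on both sides) and next sentence prediction

- A stack of Transformer encoder layers (12 in BERT-base, 24 in BERT-large)

- Pre-trained on large text corpora (BooksCorpus and English Wikipedia)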
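Masked language modeling hides some input tokens and trains the model to predict them from the surrounding context. Below is a simplified, standard-library sketch of the corruption step described in the original BERT paper: each selected position becomes `[MASK]` 80% of the time, a random token 10% of the time, and stays unchanged 10% of the time. The function name `mask_tokens`, the independent per-token sampling, and the toy vocabulary are illustrative assumptions, not the `transformers` implementation.

```python
import random

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Hypothetical sketch of BERT-style masking for masked language modeling.

    Returns (corrupted, targets): targets[i] holds the original token at
    positions the model must predict, and None everywhere else.
    """
    rng = random.Random(seed)
    corrupted = list(tokens)
    targets = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # not selected: the model is not asked to predict it
        targets[i] = tok
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = "[MASK]"           # 80%: replace with the mask token
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return corrupted, targets

tokens = "the movie was surprisingly good and the acting was great".split()
corrupted, targets = mask_tokens(tokens, vocab=tokens, mask_prob=0.3, seed=1)
print(corrupted)
print(targets)
```

The loss is computed only at positions where `targets` is not None, which forces the model to use context from both directions.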

Hands-on: loading a pre-trained BERT model

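```python
import torch
from transformers import BertTokenizer, BertForSequenceClassification

# Toy sentiment dataset: 1 = positive, 0 = negative
texts = ["I love this!", "This is terrible.", "Amazing experience!", "Not my cup of tea."]
labels = [1, 0, 1, 0]

# Load the pre-trained tokenizer and model; num_labels=2 adds a new,
# randomly initialized classification head on top of the BERT encoder
tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')
model = BertForSequenceClassification.from_pretrained('bert-base-uncased', num_labels=2)

# Tokenize the batch: pad to the longest text, truncate beyond 32 tokens
inputs = tokenizer(texts, padding=True, truncation=True, return_tensors="pt", max_length=32)
# Including labels makes the model return a cross-entropy loss
inputs["labels"] = torch.tensor(labels)
```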
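The `padding=True`, `truncation=True`, and `max_length` arguments turn texts of different lengths into one rectangular tensor. The plain-Python sketch below mimics that behavior on already-tokenized IDs: truncate each sequence to `max_length`, pad to the longest sequence in the batch, and build an `attention_mask` that is 1 for real tokens and 0 for padding. The helper `pad_and_truncate`, the pad ID of 0, and the token IDs are hypothetical; unlike the real tokenizer, this sketch does not reserve room for the `[CLS]` and `[SEP]` special tokens when truncating.

```python
def pad_and_truncate(batch_ids, max_length, pad_id=0):
    """Hypothetical helper mimicking padding=True, truncation=True."""
    # Truncate every sequence to at most max_length tokens
    truncated = [ids[:max_length] for ids in batch_ids]
    # padding=True pads to the longest sequence in the batch
    longest = max(len(ids) for ids in truncated)
    input_ids = [ids + [pad_id] * (longest - len(ids)) for ids in truncated]
    # 1 marks real tokens, 0 marks padding the model should ignore
    attention_mask = [[1] * len(ids) + [0] * (longest - len(ids)) for ids in truncated]
    return {"input_ids": input_ids, "attention_mask": attention_mask}

batch = [[5, 6, 7, 8, 9, 10], [5, 11, 10]]  # made-up token IDs
encoded = pad_and_truncate(batch, max_length=32)
print(encoded["input_ids"])       # [[5, 6, 7, 8, 9, 10], [5, 11, 10, 0, 0, 0]]
print(encoded["attention_mask"])  # [[1, 1, 1, 1, 1, 1], [1, 1, 1, 0, 0, 0]]
```

The real tokenizer returns `attention_mask` alongside `input_ids`, and `model(**inputs)` passes it through so padded positions are ignored.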

Fine-tuning BERT

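```python
optimizer = torch.optim.AdamW(model.parameters(), lr=0.00001)  # lr = 1e-5
model.train()  # enable dropout for training

for epoch in range(1):
    outputs = model(**inputs)  # forward pass; returns a loss because labels are included
    loss = outputs.loss
    loss.backward()            # compute gradients
    optimizer.step()           # update all weights (encoder and head)
    optimizer.zero_grad()      # reset gradients before the next step
    print(f"Epoch: {epoch+1}, Loss: {loss.item()}")
```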

Epoch: 1, Loss: 0.7061821222305298
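The printed loss is easy to sanity-check. `BertForSequenceClassification` computes cross-entropy when integer labels are passed, and a freshly initialized two-class head produces nearly equal logits, so the expected loss before training is close to ln 2 ≈ 0.693. The sketch below computes cross-entropy for a single example from raw logits in plain Python; the helper name `cross_entropy` is our own.

```python
import math

def cross_entropy(logits, label):
    """Cross-entropy for one example: -log(softmax(logits)[label])."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]

# Equal logits mean a 50/50 prediction, like an untrained 2-class head
print(round(cross_entropy([0.0, 0.0], label=1), 4))  # 0.6931
# A confident, correct prediction gives a much smaller loss
print(round(cross_entropy([3.0, -1.0], label=0), 4))
```

A first-step loss of about 0.706 is therefore roughly what an essentially untrained classifier produces; it would be expected to fall as training continues over more epochs and data.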

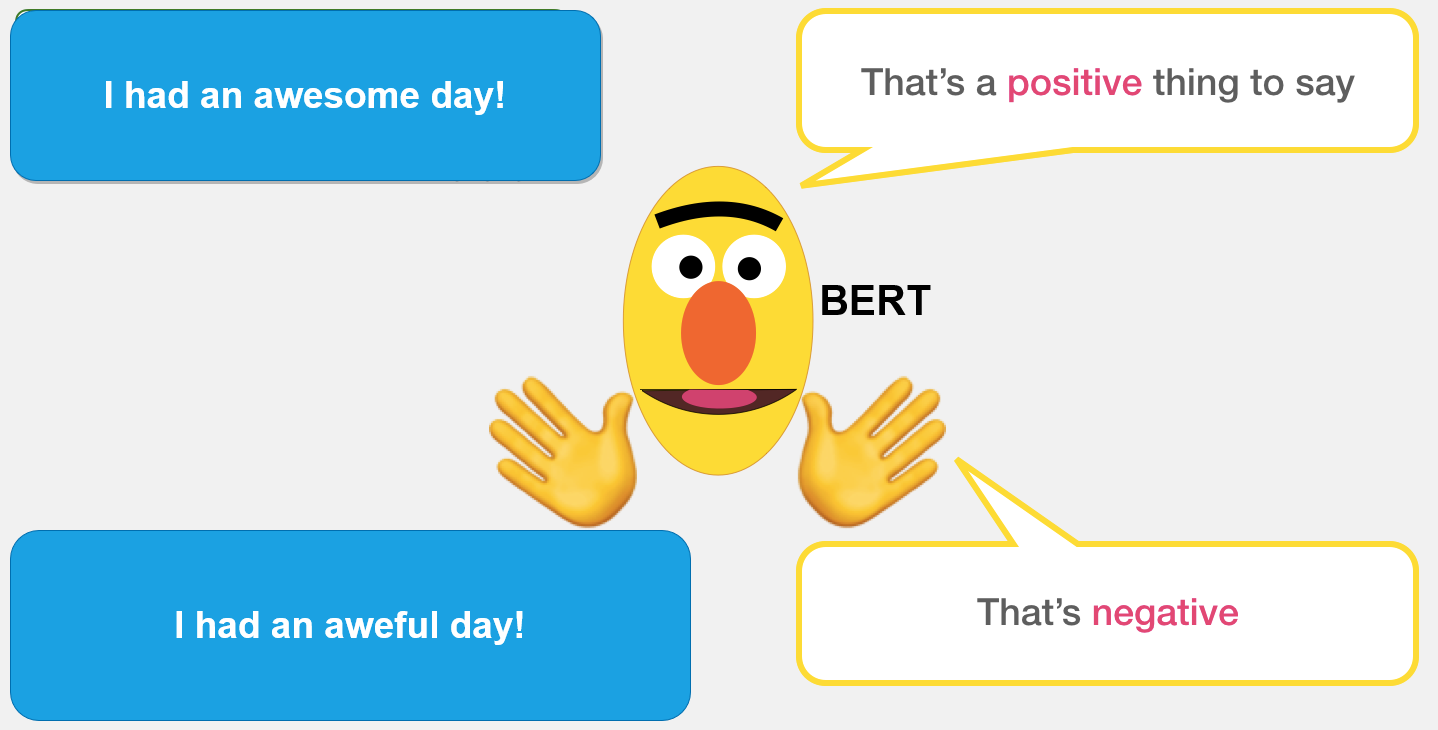

Evaluating on new text

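```python
text = "I had an awesome day!"
input_eval = tokenizer(text, return_tensors="pt", truncation=True, padding=True, max_length=128)

model.eval()           # disable dropout for inference
with torch.no_grad():  # no gradients are needed for prediction
    outputs_eval = model(**input_eval)

# Convert logits to class probabilities, then pick the most likely class (1 = positive)
predictions = torch.nn.functional.softmax(outputs_eval.logits, dim=-1)
predicted_label = 'positive' if torch.argmax(predictions, dim=-1).item() == 1 else 'negative'
print(f"Text: {text}\nSentiment: {predicted_label}")
```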

Text: I had an awesome day!

Sentiment: positive
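The same decision rule extends to a batch of texts. Because softmax is monotonic, the argmax of the probabilities and the argmax of the raw logits pick the same class; softmax is only needed when you want the probabilities themselves. The sketch uses random logits as a hypothetical stand-in for `outputs_eval.logits` from a batch of four texts, and maps class 1 to "positive" as in the training labels.

```python
import torch

torch.manual_seed(0)
# Hypothetical logits for 4 texts and 2 classes (0 = negative, 1 = positive)
logits = torch.randn(4, 2)

probs = torch.softmax(logits, dim=-1)     # each row sums to 1
pred_from_probs = probs.argmax(dim=-1)    # one class index per text
pred_from_logits = logits.argmax(dim=-1)  # same result, since softmax is monotonic

label_names = ["negative", "positive"]
for row, idx in enumerate(pred_from_probs.tolist()):
    print(f"Text {row}: {label_names[idx]} (p={probs[row, idx].item():.3f})")
```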

Let's practice!
