Introduction to building a text processing pipeline

Deep Learning for Text with PyTorch

Shubham Jain

Data Scientist

Recap: preprocessing

- Preprocessing:
  - Tokenization
  - Stopword removal
  - Stemming
  - Rare word removal

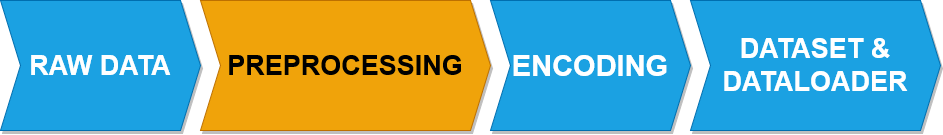

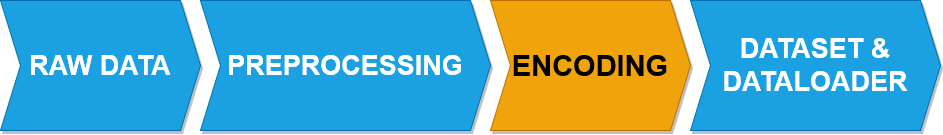
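The four preprocessing steps recapped above can be sketched in plain Python. This is a minimal illustration, not the course's implementation: `STOP_WORDS`, `naive_stem`, and `preprocess` are hypothetical stand-ins chosen so the example runs without downloading any language resources, and rare words are counted over the whole corpus.

```python
import re
from collections import Counter

# Hypothetical, tiny stopword list (a real one would be much longer)
STOP_WORDS = {"the", "is", "a", "an", "and", "of", "to", "in", "are"}

def naive_stem(token):
    """Crude suffix stripping; a real stemmer handles far more cases."""
    for suffix in ("ing", "ed", "s"):
        if token.endswith(suffix) and len(token) > len(suffix) + 2:
            return token[: -len(suffix)]
    return token

def preprocess(sentences, min_count=2):
    # 1. Tokenization: lowercase, then keep runs of letters
    tokenized = [re.findall(r"[a-z]+", s.lower()) for s in sentences]
    # 2. Stopword removal
    tokenized = [[t for t in toks if t not in STOP_WORDS] for toks in tokenized]
    # 3. Stemming
    tokenized = [[naive_stem(t) for t in toks] for toks in tokenized]
    # 4. Rare word removal: frequencies are counted over the whole corpus
    counts = Counter(t for toks in tokenized for t in toks)
    return [[t for t in toks if counts[t] >= min_count] for toks in tokenized]

corpus = [
    "The cats are chasing the mice.",
    "A cat chased a mouse in the garden.",
    "Chasing cats is tiring.",
]
processed = preprocess(corpus)
print(processed)
# [['cat', 'chas'], ['cat', 'chas'], ['chas', 'cat']]
```

Only the stems that occur at least twice across the corpus survive; "mice", "mouse", "garden", and "tir" are dropped as rare.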

Text processing pipeline

- Encoding:
  - One-hot encoding
  - Bag-of-words
  - TF-IDF
  - Embedding
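To make the first three encodings concrete, here is a small pure-Python sketch over a two-document toy corpus. The helper names (`one_hot`, `bag_of_words`, `tf_idf`) are hypothetical, and the TF-IDF formula is the plain textbook variant (term frequency times `log(N / df)`); libraries such as scikit-learn add smoothing and normalization, so their values differ. Embeddings are learned vectors and are not covered by this sketch.

```python
import math
from collections import Counter

# Toy corpus of already-tokenized documents (illustrative data)
docs = [["cat", "sat", "mat"], ["cat", "cat", "ran"]]

# Vocabulary in sorted order: ['cat', 'mat', 'ran', 'sat']
vocab = sorted({tok for doc in docs for tok in doc})
index = {tok: i for i, tok in enumerate(vocab)}

def one_hot(token):
    # A vector of zeros with a single 1 at the token's vocabulary index
    vec = [0] * len(vocab)
    vec[index[token]] = 1
    return vec

def bag_of_words(doc):
    # Count of each vocabulary word in the document; word order is lost
    counts = Counter(doc)
    return [counts[tok] for tok in vocab]

def tf_idf(doc):
    # Textbook variant: tf = count / len(doc), idf = log(N / df)
    counts = Counter(doc)
    n_docs = len(docs)
    vec = []
    for tok in vocab:
        df = sum(1 for d in docs if tok in d)  # documents containing tok
        vec.append((counts[tok] / len(doc)) * math.log(n_docs / df))
    return vec

print(one_hot("cat"))         # [1, 0, 0, 0]
print(bag_of_words(docs[1]))  # [2, 0, 1, 0]
print(tf_idf(docs[1]))
```

In the TF-IDF vector for the second document, "cat" scores 0 because it appears in every document, while "ran" gets a positive weight because it is unique to that document.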

Text processing pipeline

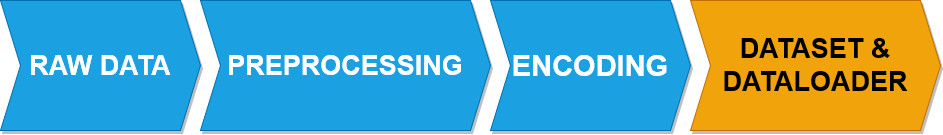

Dataset as a container for processed and encoded text

DataLoader: batching, shuffling and multiprocessing

Recap: implementing Dataset and DataLoader

    # Import libraries
    from torch.utils.data import Dataset, DataLoader

    # Create a class
    class TextDataset(Dataset):
        def __init__(self, text):
            self.text = text

        def __len__(self):
            return len(self.text)

        def __getitem__(self, idx):
            return self.text[idx]

Recap: integrating Dataset and DataLoader

    dataset = TextDataset(encoded_text)
    dataloader = DataLoader(dataset, batch_size=2, shuffle=True)
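To see what the DataLoader actually yields, the sketch below iterates over one and records the shape of each batch. The `encoded_text` tensor is made-up data standing in for five encoded sentences, and `shuffle=False` is used here only so the result is reproducible.

```python
import torch
from torch.utils.data import Dataset, DataLoader

class TextDataset(Dataset):
    def __init__(self, text):
        self.text = text

    def __len__(self):
        return len(self.text)

    def __getitem__(self, idx):
        return self.text[idx]

# Hypothetical bag-of-words vectors: 5 sentences, vocabulary size 4
encoded_text = torch.tensor([
    [1, 0, 2, 0],
    [0, 1, 0, 1],
    [1, 1, 0, 0],
    [0, 0, 1, 1],
    [2, 0, 0, 1],
])

dataset = TextDataset(encoded_text)
dataloader = DataLoader(dataset, batch_size=2, shuffle=False)

# Each batch stacks up to batch_size samples along a new first dimension
batch_shapes = [tuple(batch.shape) for batch in dataloader]
print(batch_shapes)  # [(2, 4), (2, 4), (1, 4)]
```

With five samples and `batch_size=2`, the last batch holds a single sample. Passing `drop_last=True` would discard that incomplete batch, and `num_workers` controls how many worker processes load data in parallel.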

Using helper functions

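    from nltk.probability import FreqDist

    # tokenizer, stop_words, and stemmer are assumed to be defined earlier
    # (they are not shown in this section)
    def preprocess_sentences(sentences):
        processed_sentences = []
        for sentence in sentences:
            sentence = sentence.lower()
            tokens = tokenizer(sentence)
            # Remove stopwords
            tokens = [token for token in tokens
                      if token not in stop_words]
            # Stem the remaining tokens
            tokens = [stemmer.stem(token)
                      for token in tokens]
            # Keep tokens occurring more than `threshold` times.
            # Note: counts are per sentence, so short sentences may lose every token.
            freq_dist = FreqDist(tokens)
            threshold = 2
            tokens = [token for token in tokens if
                      freq_dist[token] > threshold]
            processed_sentences.append(
                ' '.join(tokens))
        return processed_sentences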

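    from sklearn.feature_extraction.text import CountVectorizer

    def encode_sentences(sentences):
        # Bag-of-words encoding
        vectorizer = CountVectorizer()
        X = vectorizer.fit_transform(sentences)
        encoded_sentences = X.toarray()
        # Return the fitted vectorizer so new text can reuse the same vocabulary
        return encoded_sentences, vectorizer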

    import re

    def extract_sentences(data):
        # A sentence starts with a capital letter and ends at the first ., ! or ?
        sentences = re.findall(r'[A-Z][^.!?]*[.!?]',
                               data)
        return sentences
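The regular expression in `extract_sentences` is a simple heuristic, and a few inputs show where it works and where it does not. The test strings below are made up for illustration.

```python
import re

def extract_sentences(data):
    return re.findall(r'[A-Z][^.!?]*[.!?]', data)

# Well-formed input splits as expected
a = extract_sentences("This is the first text data. And here is another one.")
print(a)  # ['This is the first text data.', 'And here is another one.']

# Text before the first capital letter is skipped
b = extract_sentences("hello world. Next one!")
print(b)  # ['Next one!']

# Abbreviations ending in a period are treated as sentence ends
c = extract_sentences("Dr. Smith arrived.")
print(c)  # ['Dr.', 'Smith arrived.']
```

For text with abbreviations, decimals, or lowercase sentence starts, a dedicated sentence tokenizer is likely a better fit than this pattern.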

Constructing the text processing pipeline

    def text_processing_pipeline(text):
        tokens = preprocess_sentences(text)
        encoded_sentences, vectorizer = encode_sentences(tokens)
        dataset = TextDataset(encoded_sentences)
        dataloader = DataLoader(dataset, batch_size=2, shuffle=True)
        return dataloader, vectorizer

Applying the text processing pipeline

    text_data = "This is the first text data. And here is another one."
    sentences = extract_sentences(text_data)
    # Run the pipeline once on the full list of sentences
    dataloader, vectorizer = text_processing_pipeline(sentences)
    # First sample of the first batch, up to 10 features
    print(next(iter(dataloader))[0, :10])

[[1, 1, 1, 1, 1], [0, 0, 0, 1, 1]]

Text processing pipeline: it's a wrap!

Let's practice!
