Adversarial attacks on text classification models

Deep Learning for Text with PyTorch

Shubham Jain

Instructor

What are adversarial attacks?

- Small, deliberate tweaks to input data

- Not random noise, but calculated, malicious changes

- Can drastically change a model's predictions

Importance of robustness

- AI systems deciding if user comments are toxic or benign

- AI unintentionally amplifying negative stereotypes from biased data

- AI giving misleading information

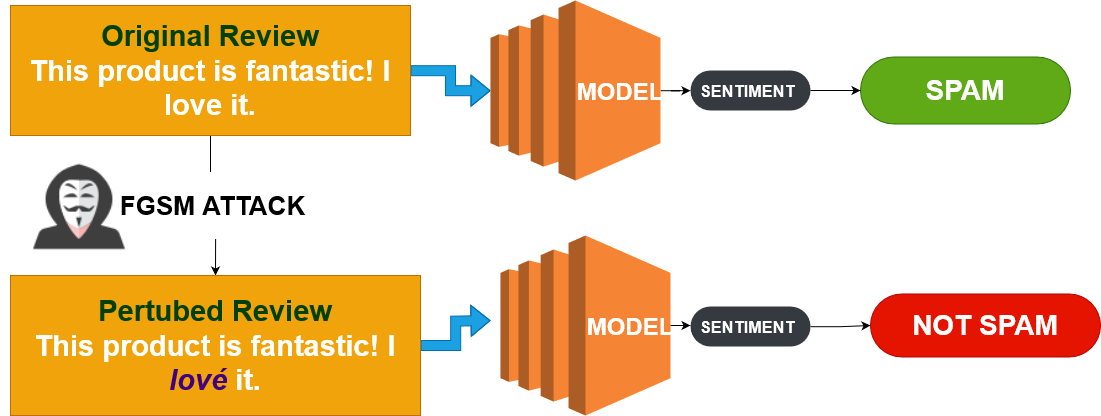

Fast Gradient Sign Method (FGSM)

- Uses the gradient of the loss with respect to the input to find the direction that hurts the model most

- Shifts each input feature by a small, fixed amount ε in that direction, in a single step

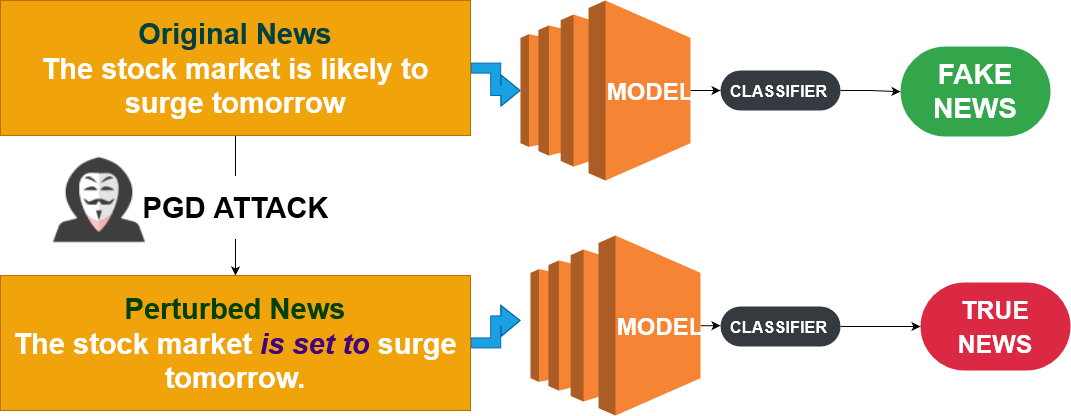
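The FGSM step can be sketched in PyTorch as below. This is a minimal sketch under stated assumptions, not the course's implementation: `TinyTextClassifier`, `forward_from_embeddings`, and `fgsm_attack` are hypothetical names, and because token IDs are discrete, the perturbation is applied to the embedding vectors rather than to the raw text, a common simplification for text models.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)


class TinyTextClassifier(nn.Module):
    """Hypothetical toy classifier: embedding -> mean pooling -> linear layer."""

    def __init__(self, vocab_size=100, embed_dim=16, num_classes=2):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, embed_dim)
        self.fc = nn.Linear(embed_dim, num_classes)

    def forward_from_embeddings(self, embeds):
        # embeds: (batch, seq_len, embed_dim) -> logits: (batch, num_classes)
        return self.fc(embeds.mean(dim=1))

    def forward(self, token_ids):
        return self.forward_from_embeddings(self.embedding(token_ids))


def fgsm_attack(model, token_ids, labels, epsilon=0.1):
    """Hypothetical helper: single-step FGSM applied to the embedding vectors."""
    embeds = model.embedding(token_ids).detach().requires_grad_(True)
    loss = F.cross_entropy(model.forward_from_embeddings(embeds), labels)
    # Gradient of the loss with respect to the input embeddings only
    (grad,) = torch.autograd.grad(loss, embeds)
    # Move every coordinate by epsilon in the direction that increases the loss
    return (embeds + epsilon * grad.sign()).detach()


model = TinyTextClassifier()
token_ids = torch.randint(0, 100, (4, 10))  # 4 made-up sentences of 10 token IDs
labels = torch.tensor([0, 1, 0, 1])
adv_embeds = fgsm_attack(model, token_ids, labels, epsilon=0.1)

with torch.no_grad():
    clean_loss = F.cross_entropy(model(token_ids), labels)
    adv_loss = F.cross_entropy(model.forward_from_embeddings(adv_embeds), labels)
print(f"clean loss {clean_loss.item():.4f} -> adversarial loss {adv_loss.item():.4f}")
```

Taking only the sign of the gradient is what makes the change the same size (ε) for every feature, however large or small each gradient component is.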

Projected Gradient Descent (PGD)

- More advanced than FGSM: it's iterative

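- Repeats small FGSM-style steps, projecting the perturbation back into an allowed range (for example, at most ε per feature) after each one, to find a more effective disturbance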

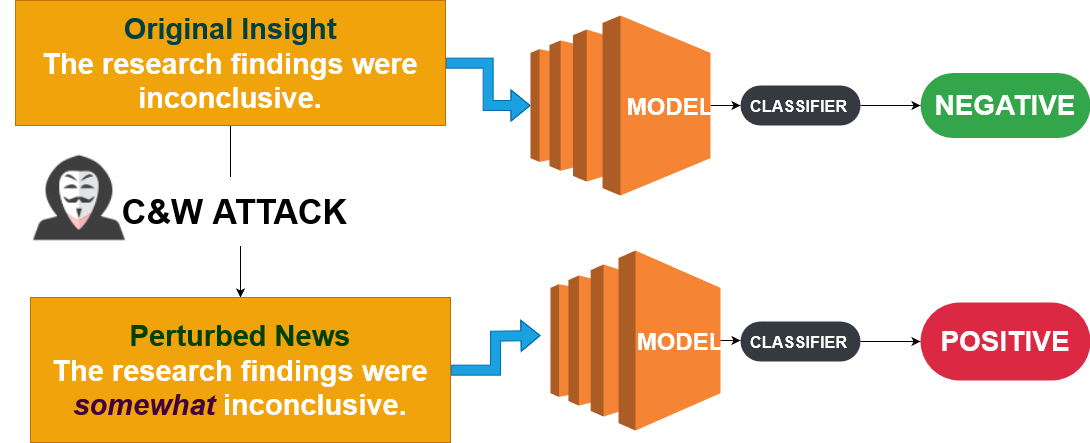
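The iterative loop can be sketched as repeated small sign-gradient steps followed by a projection. This is a minimal sketch assuming the same hypothetical toy classifier as an FGSM example would use, with attacks on embeddings rather than tokens; `pgd_attack` and its `alpha`/`steps` defaults are illustrative assumptions. PGD as popularized by Madry et al. usually starts from a random point inside the allowed range; this sketch starts from the clean embeddings for simplicity.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)


class TinyTextClassifier(nn.Module):
    """Hypothetical toy classifier: embedding -> mean pooling -> linear layer."""

    def __init__(self, vocab_size=100, embed_dim=16, num_classes=2):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, embed_dim)
        self.fc = nn.Linear(embed_dim, num_classes)

    def forward_from_embeddings(self, embeds):
        return self.fc(embeds.mean(dim=1))

    def forward(self, token_ids):
        return self.forward_from_embeddings(self.embedding(token_ids))


def pgd_attack(model, token_ids, labels, epsilon=0.1, alpha=0.02, steps=10):
    """Hypothetical helper: iterative sign-gradient steps with L-infinity projection."""
    clean = model.embedding(token_ids).detach()
    adv = clean.clone()
    for _ in range(steps):
        adv.requires_grad_(True)
        loss = F.cross_entropy(model.forward_from_embeddings(adv), labels)
        (grad,) = torch.autograd.grad(loss, adv)
        # Small step of size alpha in the loss-increasing direction
        adv = adv.detach() + alpha * grad.sign()
        # Projection: keep every coordinate within epsilon of the clean embedding
        adv = clean + torch.clamp(adv - clean, -epsilon, epsilon)
    return adv.detach()


model = TinyTextClassifier()
token_ids = torch.randint(0, 100, (4, 10))
labels = torch.tensor([0, 1, 0, 1])
adv_embeds = pgd_attack(model, token_ids, labels)

with torch.no_grad():
    clean_loss = F.cross_entropy(model(token_ids), labels)
    adv_loss = F.cross_entropy(model.forward_from_embeddings(adv_embeds), labels)
print(f"clean loss {clean_loss.item():.4f} -> PGD loss {adv_loss.item():.4f}")
```

The projection is what separates PGD from simply running FGSM many times: however many steps are taken, the final perturbation never exceeds ε per feature.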

The Carlini & Wagner (C&W) attack

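- Frames the attack as an optimization problem: minimize the size of the perturbation plus a loss term that rewards misclassification

- Not just about deceiving the model, but about keeping the change small enough to be hard to detect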
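The optimization view can be sketched as gradient descent on "perturbation size + c × misclassification term". This is a simplified, hypothetical sketch, not the full C&W attack: it fixes the trade-off constant `c` instead of binary-searching it, skips the tanh change of variables the original uses for box constraints (embeddings are unbounded here), and attacks embeddings of a hypothetical toy model. `cw_attack`, `margin`, and all defaults are illustrative assumptions.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)


class TinyTextClassifier(nn.Module):
    """Hypothetical toy classifier: embedding -> mean pooling -> linear layer."""

    def __init__(self, vocab_size=100, embed_dim=16, num_classes=2):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, embed_dim)
        self.fc = nn.Linear(embed_dim, num_classes)

    def forward_from_embeddings(self, embeds):
        return self.fc(embeds.mean(dim=1))

    def forward(self, token_ids):
        return self.forward_from_embeddings(self.embedding(token_ids))


def margin(logits, labels):
    """True-class logit minus the best wrong-class logit (negative = misclassified)."""
    true_logit = logits.gather(1, labels.unsqueeze(1)).squeeze(1)
    true_mask = F.one_hot(labels, logits.size(1)).bool()
    best_wrong = logits.masked_fill(true_mask, float("-inf")).max(dim=1).values
    return true_logit - best_wrong


def cw_attack(model, token_ids, labels, c=1.0, kappa=0.0, steps=100, lr=0.01):
    """Hypothetical simplified C&W-style L2 attack with a fixed constant c."""
    clean = model.embedding(token_ids).detach()
    delta = torch.zeros_like(clean, requires_grad=True)  # perturbation being optimized
    optimizer = torch.optim.Adam([delta], lr=lr)
    for _ in range(steps):
        logits = model.forward_from_embeddings(clean + delta)
        # Attack term stops rewarding change once a wrong class wins by kappa
        attack_term = torch.clamp(margin(logits, labels), min=-kappa)
        size_term = delta.pow(2).sum(dim=(1, 2))  # squared L2 norm per example
        loss = (size_term + c * attack_term).sum()
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
    return (clean + delta).detach()


model = TinyTextClassifier()
token_ids = torch.randint(0, 100, (8, 10))
with torch.no_grad():
    labels = model(token_ids).argmax(dim=1)  # attack the model's own predictions

adv_embeds = cw_attack(model, token_ids, labels, c=10.0)
with torch.no_grad():
    clean_margin = margin(model(token_ids), labels)
    adv_margin = margin(model.forward_from_embeddings(adv_embeds), labels)
    diff = adv_embeds - model.embedding(token_ids)
    perturbation_size = diff.pow(2).sum(dim=(1, 2)).sqrt()
print("margins before:", clean_margin)
print("margins after: ", adv_margin)
print("L2 size of each perturbation:", perturbation_size)
```

A larger `c` pushes harder toward misclassification at the cost of bigger, more noticeable perturbations; the original attack searches for the smallest `c` that still succeeds.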

Building defenses: strategies

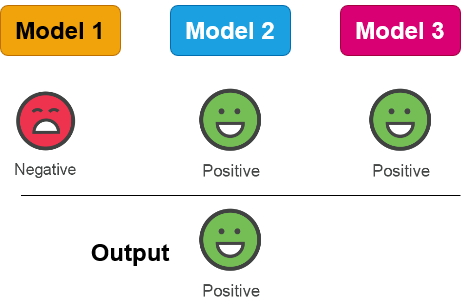

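- Model Ensembling:

  - Combine the predictions of multiple models, so an attack tuned to one model is less likely to fool them all

- Robust Data Augmentation:

  - Train on varied versions of the text (for example, synonym swaps or typos) so small input changes matter less

- Adversarial Training:

  - Add adversarial examples to the training data so the model learns to anticipate deception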
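Two of these strategies, ensembling and adversarial training, can be sketched together. This is a minimal sketch under assumptions: `TinyTextClassifier`, `adversarial_training_step`, and `ensemble_predict` are hypothetical names; adversarial examples are generated with FGSM on embeddings and mixed 50/50 with the clean loss, one common recipe among several; and the ensemble simply averages softmax probabilities.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)


class TinyTextClassifier(nn.Module):
    """Hypothetical toy classifier: embedding -> mean pooling -> linear layer."""

    def __init__(self, vocab_size=100, embed_dim=16, num_classes=2):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, embed_dim)
        self.fc = nn.Linear(embed_dim, num_classes)

    def forward_from_embeddings(self, embeds):
        return self.fc(embeds.mean(dim=1))

    def forward(self, token_ids):
        return self.forward_from_embeddings(self.embedding(token_ids))


def adversarial_training_step(model, optimizer, token_ids, labels, epsilon=0.1):
    """Hypothetical helper: one step on the average of clean and FGSM losses."""
    model.train()
    embeds = model.embedding(token_ids)
    # Craft the FGSM perturbation on a detached copy so it is treated as a constant
    probe = embeds.detach().requires_grad_(True)
    probe_loss = F.cross_entropy(model.forward_from_embeddings(probe), labels)
    (grad,) = torch.autograd.grad(probe_loss, probe)
    adv_embeds = embeds + epsilon * grad.sign()
    clean_loss = F.cross_entropy(model.forward_from_embeddings(embeds), labels)
    adv_loss = F.cross_entropy(model.forward_from_embeddings(adv_embeds), labels)
    loss = 0.5 * (clean_loss + adv_loss)
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    return loss.item()


def ensemble_predict(models, token_ids):
    """Hypothetical helper: average the softmax probabilities of several models."""
    with torch.no_grad():
        probs = torch.stack([F.softmax(m(token_ids), dim=-1) for m in models])
    return probs.mean(dim=0)


token_ids = torch.randint(0, 100, (8, 10))  # made-up training batch
labels = torch.randint(0, 2, (8,))

models, histories = [], []
for _ in range(3):  # three independently initialized ensemble members
    model = TinyTextClassifier()
    optimizer = torch.optim.Adam(model.parameters(), lr=0.05)
    history = [adversarial_training_step(model, optimizer, token_ids, labels) for _ in range(50)]
    histories.append(history)
    models.append(model)

for m in models:
    m.eval()
avg_probs = ensemble_predict(models, token_ids)
predictions = avg_probs.argmax(dim=1)
print(predictions)
```

Averaging probabilities rather than taking a majority vote keeps each member's confidence in play; either choice is reasonable for a small ensemble.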

Building defenses: tools & techniques

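- Adversarial Robustness Toolbox (ART):

  - Open-source Python library of adversarial attacks and defenses that supports PyTorch models, useful for testing and strengthening models

- Gradient Masking:

  - Hide or obscure the model's gradients so gradient-based attacks such as FGSM and PGD cannot find useful perturbation directions; on its own, it can give a false sense of security

- Regularization Techniques:

  - Apply methods such as dropout and weight decay to reduce overfitting, which can make the model less sensitive to small input changes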
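The regularization bullet can be sketched with two standard PyTorch tools: an `nn.Dropout` layer and the `weight_decay` argument of `torch.optim.AdamW`. The model (`RegularizedTextClassifier`), the dropout rate, the decay value, and the made-up data are hypothetical choices for illustration; whether regularization measurably improves adversarial robustness depends on the model and should be checked with actual attacks.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)


class RegularizedTextClassifier(nn.Module):
    """Hypothetical toy model with dropout between pooling and the output layer."""

    def __init__(self, vocab_size=100, embed_dim=16, num_classes=2, dropout=0.3):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, embed_dim)
        self.dropout = nn.Dropout(dropout)  # randomly zeroes features during training
        self.fc = nn.Linear(embed_dim, num_classes)

    def forward(self, token_ids):
        pooled = self.embedding(token_ids).mean(dim=1)
        return self.fc(self.dropout(pooled))


model = RegularizedTextClassifier()
# AdamW applies decoupled weight decay, shrinking weights toward zero at every step
optimizer = torch.optim.AdamW(model.parameters(), lr=0.01, weight_decay=0.01)

token_ids = torch.randint(0, 100, (8, 10))  # made-up batch
labels = torch.randint(0, 2, (8,))

for epoch in range(20):
    model.train()  # dropout active
    loss = F.cross_entropy(model(token_ids), labels)
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

model.eval()  # dropout disabled at inference time
with torch.no_grad():
    preds = model(token_ids).argmax(dim=1)
print(preds)
```

Remember to call `model.eval()` before evaluating or attacking the model; otherwise dropout keeps changing the outputs from one call to the next.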

1 https://adversarial-robustness-toolbox.readthedocs.io/en/latest/, https://stock.adobe.com/ie/contributor/209161356/designer-s-circle

Let's practice!
