Model training

End-to-End Machine Learning

Joshua Stapleton

Machine Learning Engineer

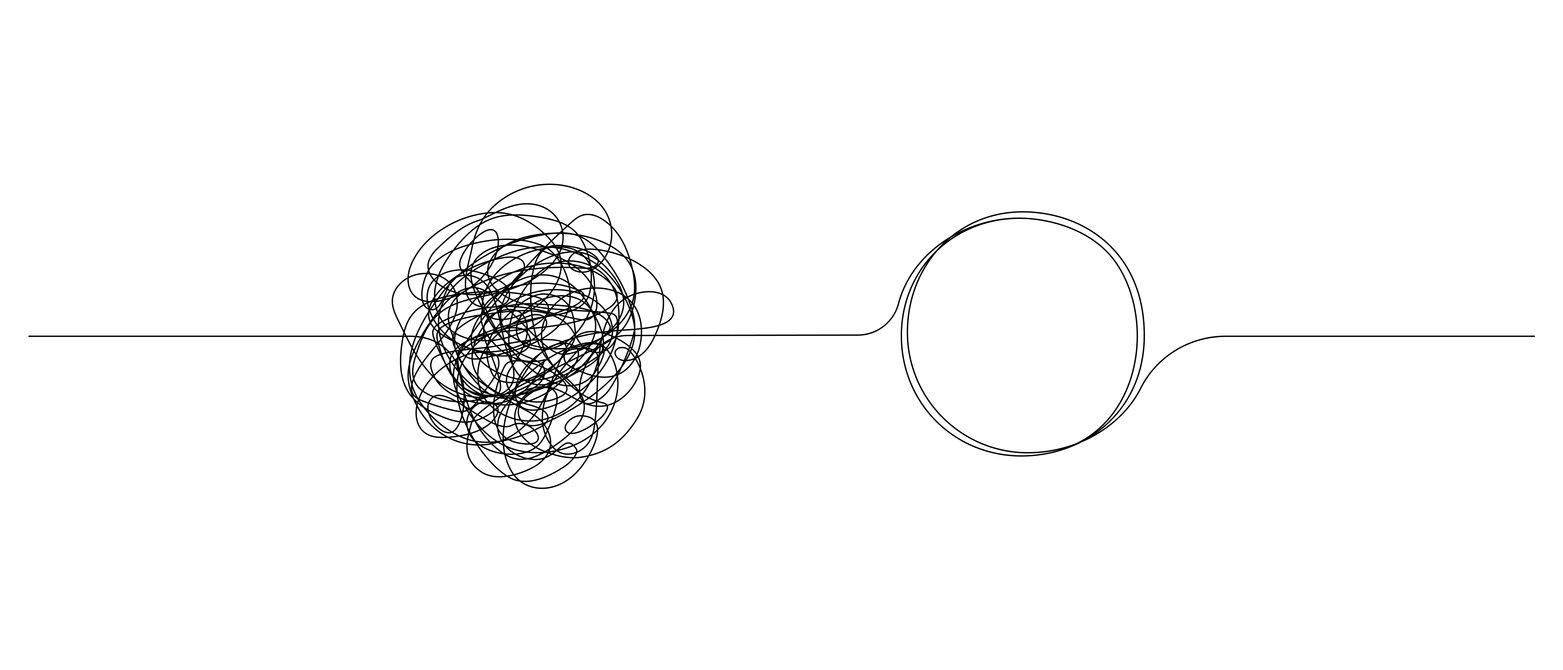

Occam's Razor

- Simplest satisfactory explanation is best

- Lean towards simple models when selecting

Modeling options

Logistic Regression

- Models class probability and finds a linear decision boundary between classes

sklearn.linear_model.LogisticRegression

Support Vector Classifier

- Finds the maximum-margin hyperplane separating classes

sklearn.svm.SVC

Decision Tree

- Finds simple 'rules' to classify data

sklearn.tree.DecisionTreeClassifier

Random Forest

- Combines multiple decision trees

sklearn.ensemble.RandomForestClassifier
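The four classifiers above share the same scikit-learn interface, so they can be compared with the same code. A minimal sketch follows, assuming a synthetic dataset from `make_classification` as a stand-in for the course data; the names `X_demo`, `y_demo`, `candidate_models`, `cv_accuracy` and all hyperparameter values are illustrative assumptions, not taken from the slides.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for a cleaned, feature-handled dataset (assumption).
X_demo, y_demo = make_classification(n_samples=300, n_features=8, random_state=42)

# The four classifiers listed above; hyperparameters are illustrative only.
candidate_models = {
    "LogisticRegression": LogisticRegression(max_iter=1000),
    "SVC": SVC(),
    "DecisionTreeClassifier": DecisionTreeClassifier(max_depth=3, random_state=42),
    "RandomForestClassifier": RandomForestClassifier(n_estimators=100, random_state=42),
}

# Mean 5-fold cross-validated accuracy for each candidate.
cv_accuracy = {
    name: cross_val_score(model, X_demo, y_demo, cv=5).mean()
    for name, model in candidate_models.items()
}

for name, accuracy in cv_accuracy.items():
    print(f"{name}: {accuracy:.3f}")
```

In the spirit of Occam's Razor, if the scores are close, the simpler model (for example logistic regression) is usually the more defensible choice.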

Other models

Deep learning models

- Neural Networks

- Convolutional Neural Networks

- Generative Pre-trained Transformer (GPT)

K-Nearest Neighbors (KNN)

- Supervised learning algorithm that classifies a point by the labels of its nearest training points
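KNN is available in scikit-learn as `sklearn.neighbors.KNeighborsClassifier`. The sketch below, on synthetic data, checks by hand that the prediction for one point is the majority label among its nearest training points. The dataset, the variable names, and the choice of `n_neighbors=5` are assumptions made for illustration.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Synthetic binary classification data (assumption, not the course dataset).
X_knn, y_knn = make_classification(n_samples=200, n_features=5, random_state=0)
X_knn_train, X_knn_test, y_knn_train, y_knn_test = train_test_split(
    X_knn, y_knn, test_size=0.2, random_state=0
)

# Fit KNN with 5 neighbors and uniform voting (the scikit-learn defaults).
knn_model = KNeighborsClassifier(n_neighbors=5)
knn_model.fit(X_knn_train, y_knn_train)
knn_predictions = knn_model.predict(X_knn_test)

# Look up the 5 nearest training points of the first test point.
distances, neighbor_indices = knn_model.kneighbors(X_knn_test[:1])
neighbor_labels = y_knn_train[neighbor_indices[0]]

# With two classes and an odd number of neighbors, the vote cannot tie.
majority_label = np.bincount(neighbor_labels).argmax()
print(f"Neighbor labels: {neighbor_labels}")
print(f"Majority label: {majority_label}, model prediction: {knn_predictions[0]}")
```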

XGBoost

- Gradient boosted model

- https://xgboost.readthedocs.io/en/stable/

Training principles

Model:

- Uses cleaned and feature-handled dataset

- Learns patterns in training data

- Aims to predict the target: heart disease diagnosis

Principles:

- Model must generalize to unseen data (outside of training set)

- Hold out some data to test the model on after training completes

- The training/testing split is normally 70/30 or 80/20

- Can use sklearn.model_selection.train_test_split to make the split
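To make the hold-out idea concrete, here is a minimal sketch of an 80/20 split on hypothetical data. The arrays `demo_features` and `demo_target` are made up for illustration, and `stratify` is an optional extra (not mentioned in the slides) that keeps class proportions similar in both sets.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical stand-in data: 100 rows, 4 features, binary target (60/40).
rng = np.random.default_rng(42)
demo_features = rng.normal(size=(100, 4))
demo_target = np.array([0] * 60 + [1] * 40)

# Hold out 20% of the rows for testing; stratify keeps the class balance.
X_tr, X_te, y_tr, y_te = train_test_split(
    demo_features,
    demo_target,
    test_size=0.2,
    random_state=42,
    stratify=demo_target,
)

print(f"Training rows: {X_tr.shape[0]}, testing rows: {X_te.shape[0]}")
print(f"Positive rate in train: {y_tr.mean():.2f}, in test: {y_te.mean():.2f}")
```

Fixing `random_state` makes the split reproducible, so results can be compared across runs.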

Training a model

# Import the necessary libraries
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LogisticRegression

# Split the data into training and testing sets (80:20)
X_train, X_test, y_train, y_test = train_test_split(features, heart_disease_y, test_size=0.2, random_state=42)

# Define the model
logistic_model = LogisticRegression(max_iter=200)

# Train the model
logistic_model.fit(X_train, y_train)
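The training code relies on `features` and `heart_disease_y` from earlier in the course. A self-contained sketch of the same steps, using synthetic data as an assumption, shows how the held-out set can then be used to check generalization. All `_syn` names are hypothetical.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the heart disease features and target (assumption).
X_syn, y_syn = make_classification(n_samples=500, n_features=6, random_state=42)

# Same 80:20 split and model settings as in the slide.
X_train_syn, X_test_syn, y_train_syn, y_test_syn = train_test_split(
    X_syn, y_syn, test_size=0.2, random_state=42
)
model_syn = LogisticRegression(max_iter=200)
model_syn.fit(X_train_syn, y_train_syn)

# score() returns mean accuracy; compare seen vs. unseen data.
train_accuracy = model_syn.score(X_train_syn, y_train_syn)
test_accuracy = model_syn.score(X_test_syn, y_test_syn)
print(f"Training accuracy: {train_accuracy:.3f}")
print(f"Testing accuracy:  {test_accuracy:.3f}")
```

A testing accuracy far below the training accuracy would suggest the model is not generalizing well to unseen data.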

Getting model predictions

import numpy as np

# Jane Doe's health data, for example: [age, cholesterol level, blood pressure, etc.]
# (the ... stands for the remaining feature values)
jane_doe_data = np.array([45, 230, 120, ...])

# Reshape the data to 2D, because scikit-learn expects a 2D array-like input
jane_doe_data = jane_doe_data.reshape(1, -1)

# Use the model to predict Jane's heart disease diagnosis probabilities and class
jane_doe_probabilities = logistic_model.predict_proba(jane_doe_data)
jane_doe_prediction = logistic_model.predict(jane_doe_data)

Getting model predictions (cont.)

# Print the probabilities and the predicted class

print(f"Jane Doe's predicted health condition probabilities: {jane_doe_probabilities[0]}")

print(f"Jane Doe's predicted health condition: {jane_doe_prediction[0]}")

Jane Doe's predicted health condition probabilities: [0.2 0.8]
Jane Doe's predicted health condition: 1
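In the output above, the prediction `1` matches the class with the higher probability (0.8). The sketch below illustrates that relationship on synthetic data: the columns of `predict_proba` follow the order of `classes_`, and `predict` returns the most probable class. The data and the names `X_p`, `y_p`, `clf`, and `patient` are assumptions for illustration.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic binary data standing in for the heart disease dataset (assumption).
X_p, y_p = make_classification(n_samples=200, n_features=4, random_state=1)
clf = LogisticRegression(max_iter=200).fit(X_p, y_p)

# One hypothetical patient; slicing with [:1] keeps the 2D shape (1, 4).
patient = X_p[:1]

proba = clf.predict_proba(patient)  # shape (1, 2), one column per class
pred = clf.predict(patient)         # shape (1,)

# Columns of predict_proba are ordered like clf.classes_.
pred_from_proba = clf.classes_[np.argmax(proba, axis=1)]

print(f"Classes: {clf.classes_}")
print(f"Probabilities: {proba[0]}")
print(f"Prediction: {pred[0]}, most probable class: {pred_from_proba[0]}")
```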

Let's practice!
